【图像算法】彩色图像分割专题八:基于MeanShift的彩色分割

-------------------------------------------------------------------------------------------------------------------------------

【图像算法】彩色图像分割专题八:基于MeanShift的彩色分割

SkySeraph July 14th 2011 HQU

Email:[email protected] QQ:452728574

Latest Modified Date:July 14th 2011 HQU

-------------------------------------------------------------------------------------------------------------------------------

》原理

不啰嗦了,看这:http://people.csail.mit.edu/sparis/#cvpr07

-------------------------------------------------------------------------------------------------------------------------------

》源码

核心代码(参考网络)

//============================Meanshift==============================//

void MyClustering::MeanShiftImg(IplImage * src , IplImage * dst , float r , int Nmin ,int Ncon )

{

int i , j , p ,k=0,run_meanshift_slec_number=0;

int pNmin; //mean shift产生的特征的搜索框内的特征数

IplImage * temp , * gray; //转换到Luv空间的图像

CvMat * distance , * result , *mask; //

CvMat * temp_mat ,*temp_mat_sub ,*temp_mat_sub2 ,* final_class_mat; //Luv空间的图像到矩阵,图像矩阵与随机选择点之差,

CvMat * cn ,* cn1 , * cn2 , * cn3;

double /*covar_img[3] ,*/ avg_img[3]; //图像的协方差主对角线上的元素和,各个通道的均值

double r1; //搜索半径

int temp_number;

meanshiftpoint meanpoint[25]; //存储随机产生的25点

CvScalar cvscalar1,cvscalar2;

int order[25];

Feature feature[100]; //特征

double shiftor;

CvMemStorage * storage=NULL;

CvSeq * seq=0 , * temp_seq=0 , *prev_seq;

//---------------------------------------------RGB to Luv空间,初始化----------------------------------------------

temp = cvCreateImage(cvSize(src->width,src->height),IPL_DEPTH_8U, src->nChannels);

gray = cvCreateImage(cvSize(src->width,src->height),IPL_DEPTH_8U, 1);

temp_mat = cvCreateMat(src->height,src->width,CV_8UC3);

final_class_mat = cvCreateMat(src->height,src->width,CV_8UC3);

mask = cvCloneMat(temp_mat);

temp_mat_sub = cvCreateMat(src->height,src->width,CV_32FC3);

temp_mat_sub2 = cvCreateMat(src->height,src->width,CV_32FC3);

cvZero(temp);

cvCvtColor(src,temp,CV_RGB2Luv); //RGB to Luv空间

distance = cvCreateMat(src->height,src->width,CV_32FC1);

result = cvCreateMat(src->height,src->width,CV_8UC1);

cvConvert(temp,temp_mat); //IplImage to Mat

cn = cvCreateMat(src->height,src->width,CV_32FC1);

cn1 = cvCloneMat(cn);

cn2 = cvCloneMat(cn);

cn3 = cvCloneMat(cn);

storage = cvCreateMemStorage(0);

//-------------------------------------------计算搜索窗口半径 r --------------------------------------------

if(r!=NULL)

r1=r;

else

{

cvscalar1 = cvSum(temp_mat);

avg_img[0] = cvscalar1.val[0]/(src->width * src->height);

avg_img[1] = cvscalar1.val[1]/(src->width * src->height);

avg_img[2] = cvscalar1.val[2]/(src->width * src->height);

cvscalar1 = cvScalar(avg_img[0],avg_img[1],avg_img[2],NULL);

cvScale(temp_mat,temp_mat_sub,1.0,0.0);

cvSubS(temp_mat_sub , cvscalar1 , temp_mat_sub ,NULL);

cvMul(temp_mat_sub , temp_mat_sub , temp_mat_sub2);

cvscalar1 = cvSum(temp_mat_sub2);

r1 = 0.4*cvSqrt( (cvscalar1.val[0] + cvscalar1.val[1] + cvscalar1.val[2])/(src->width * src->height));;

}

//初始化随机数生成种子

srand((unsigned)time(NULL));

//--------------------循环,使用meanshift进行特征空间分析,终止条件是Nmin--------------------------------------

do

{

//--------------------------------------------初始化搜索窗口位置-------------------------------------------

run_meanshift_slec_number++;

cvSet(distance,cvScalar(r1*r1,NULL,NULL,NULL),NULL);

for( i = 0 ; i < 25 ; i++)

{

meanpoint[i].pt.x = rand()%src->width;

meanpoint[i].pt.y = rand()%src->height;

}

cvScale(temp_mat,temp_mat_sub,1.0,0.0);

for( i = 0 ; i < 25 ; i++)

{

/*cvSubS(temp_mat_sub ,cvScalar(cvGetReal3D(temp_mat,meanpoint[i].pt.x,meanpoint[i].pt.y,0),

cvGetReal3D(temp_mat,meanpoint[i].pt.x,meanpoint[i].pt.y,1),

cvGetReal3D(temp_mat,meanpoint[i].pt.x,meanpoint[i].pt.y,2),

NULL),temp_mat_sub,NULL);*/

cvSplit(temp_mat_sub,cn,cn1,cn2,NULL);

cvSubS(temp_mat_sub,cvScalar(cvmGet(cn,meanpoint[i].pt.y,meanpoint[i].pt.x),

cvmGet(cn1,meanpoint[i].pt.y,meanpoint[i].pt.x),

cvmGet(cn2,meanpoint[i].pt.y,meanpoint[i].pt.x),NULL),temp_mat_sub,NULL);

cvMul(temp_mat_sub,temp_mat_sub,temp_mat_sub2,1);

cvSplit(temp_mat_sub2,cn,cn1,cn2,NULL);

cvAdd(cn,cn1,cn3,NULL);

cvAdd(cn2,cn3,cn3,NULL); //cn3中存放着,当前随机点与空间中其它点距离的平方。

cvCmp(cn3,distance,result,CV_CMP_LE); //距离小于搜索半径则result相应位为1

cvAndS(result,cvScalar(1,NULL,NULL,NULL),result,NULL);

cvscalar1 = cvSum(result);

meanpoint[i].con_f_number = (int)cvscalar1.val[0];

}

for(i = 0 ; i < 25 ; i++)

{

order[i]=i;

}

for(i = 0 ; i < 25 ; i++)

for(j = 0 ; j < 25-i-1; j++)

{

if(meanpoint[order[j]].con_f_number < meanpoint[order[j+1]].con_f_number)

{

temp_number=order[j];

order[j]=order[j+1];

order[j+1]=temp_number;

}

}

//--------------------------------------------meanshift算法------------------------------------------------

double temp_mean[3];

for( i = 0 ; i < 25 ; i++)

{

cvScale(temp_mat,temp_mat_sub,1.0,0.0);

cvSplit(temp_mat_sub,cn,cn1,cn2,NULL);

temp_mean[0] = cvmGet(cn , meanpoint[order[i]].pt.y , meanpoint[order[i]].pt.x);

temp_mean[1] = cvmGet(cn1 , meanpoint[order[j]].pt.y , meanpoint[order[i]].pt.x);

temp_mean[2] = cvmGet(cn2 , meanpoint[order[j]].pt.y , meanpoint[order[i]].pt.x);

//meanshift过程

do

{

//计算出在搜索窗口内的特征点,并且生成对应的模板,即对应的点置一的矩阵表示对应的点在搜索框内

cvScale(temp_mat,temp_mat_sub,1.0,0.0);

cvSubS(temp_mat_sub,cvScalar(temp_mean[0],temp_mean[1],temp_mean[2],NULL),temp_mat_sub,NULL);

cvMul(temp_mat_sub,temp_mat_sub,temp_mat_sub2,1);

cvSplit(temp_mat_sub2 , cn , cn1 , cn2 , NULL );

cvAdd(cn,cn1,cn3,NULL);

cvAdd(cn2,cn3,cn3,NULL); //cn3中存放着,当前随机点与空间中其它点距离的平方。

cvCmp(cn3,distance,result,CV_CMP_LE); //距离小于搜索半径则result相应位为0XFF

//计算shiftor

cvCopy(temp_mat , final_class_mat ,NULL); //

cvMerge(result , result ,result ,NULL,mask);

cvAnd(final_class_mat , mask ,final_class_mat ,NULL); //与mask(3通道,0XFF)做与操作,把搜索半径外的点置零

cvScale(final_class_mat,temp_mat_sub,1.0,0.0); //搜索半径内的点从8U转换成32F

cvAndS(result,cvScalar(1,NULL,NULL,NULL),result,NULL); //相应位set 1

cvscalar1 = cvSum(result); //reslut 作为 模板 ,返回搜索窗口内的特征数

cvSubS(temp_mat_sub,cvScalar(temp_mean[0],temp_mean[1],temp_mean[2],NULL),temp_mat_sub,result);

cvscalar2 = cvSum(temp_mat_sub);

cvscalar2.val[0] = cvscalar2.val[0]/cvscalar1.val[0] ;

cvscalar2.val[1] = cvscalar2.val[1]/cvscalar1.val[0] ;

cvscalar2.val[2] = cvscalar2.val[2]/cvscalar1.val[0] ;

shiftor = cvSqrt(pow(cvscalar2.val[0], 2) + pow(cvscalar2.val[1], 2) + pow(cvscalar2.val[2], 2));

temp_mean[0]=temp_mean[0]+cvscalar2.val[0];

temp_mean[1]=temp_mean[1]+cvscalar2.val[1];

temp_mean[2]=temp_mean[2]+cvscalar2.val[2];

/*cvCopy(temp_mat , final_class_mat ,NULL); //

cvMerge(result , result ,result ,NULL,mask);

cvAnd(final_class_mat , mask ,final_class_mat ,NULL); //与result做与操作,把搜索半径外的点置零

cvScale(final_class_mat,temp_mat_sub,1.0,0.0); //搜索半径内的点从8U转换成32F

cvSplit(temp_mat_sub,cn,cn1,cn2,NULL);

cvSubS(cn , cvScalar(temp_mean[0],NULL,NULL,NULL),cn,result);

cvSubS(cn1, cvScalar(temp_mean[1],NULL,NULL,NULL),cn1,result);

cvSubS(cn2, cvScalar(temp_mean[2],NULL,NULL,NULL),cn2,result);

cvMerge(cn,cn1,cn2,NULL,temp_mat_sub);

cvscalar2 = cvSum(temp_mat_sub);

shiftor = cvSqrt(pow(cvscalar2.val[0] , 2) + pow(cvscalar2.val[1] , 2) + pow(cvscalar2.val[2] , 2));

temp_mean[0]=temp_mean[0]+cvscalar2.val[0];

temp_mean[1]=temp_mean[1]+cvscalar2.val[1];

temp_mean[2]=temp_mean[2]+cvscalar2.val[2];*/

}

while(shiftor>0.1); //meanshift算法过程

//--------------------------------------------去除不重要特征-----------------------------------------------

if(k==0)

{

feature[k].pt.x = temp_mean[0];

feature[k].pt.y = temp_mean[1];

feature[k].pt.z = temp_mean[2];

feature[k].number= (int)cvscalar1.val[0]; //因为小于等于的情况成立时,result对应位置是0XFF,不成立时对应位置为0

pNmin = (int)cvscalar1.val[0]; //此特征搜索窗口内,特征空间的向量个数

feature[k].result=cvCreateMat(src->height,src->width,CV_8UC1);

cvAndS(result,cvScalar(1,NULL,NULL,NULL),result,NULL);

cvCopy(result,feature[k].result,NULL);

k++;

}

else

{

int flag = 0;

for(j = 0 ; j < k ; j++)

{

if(pow(temp_mean[0]-feature[j].pt.x , 2) + pow(temp_mean[1]-feature[j].pt.y ,2) + pow(temp_mean[2]-feature[j].pt.z, 2)

< r1*r1)

{

flag = 1;

break;

}

}

if(flag==0)

{

feature[k].pt.x = temp_mean[0];

feature[k].pt.y = temp_mean[1];

feature[k].pt.z = temp_mean[2];

feature[k].number=(int)cvscalar1.val[0];

pNmin = (int)cvscalar1.val[0]; //此特征搜索窗口内,特征空间的向量个数

feature[k].result=cvCreateMat(src->height,src->width,CV_8UC1);

cvCopy(result,feature[k].result,NULL);

k++;

//if(pNmin < Nmin )

// break;

}

}//去除不重要特征

//if(pNmin < Nmin)

// break;

} //

}while(pNmin > Nmin || run_meanshift_slec_number>60 );

//------------------------------------------------后处理---------------------------------------------------------

cvSetZero(result);

for( i = 0 ; i < k ; i ++)

{

cvOr(result,feature[i].result,result,NULL);

}

cvScale(temp_mat,temp_mat_sub,1.0,0.0);

cvSplit(temp_mat_sub,cn,cn1,cn2,NULL);

for(i = 0 ; i < src->width ; i++)

for( j = 0 ; j < src->height ; j++)

{

if(cvGetReal2D(result,j,i)==0) //未分类的像素点,进行分类,为最近的特征中心

{

double unclass_dis , min_dis;

int min_dis_index;

for( p = 0 ; p < k ; p++ )

{

unclass_dis = pow(feature[p].pt.x - cvmGet(cn,j,i),2) //(temp_mat,i,j,0) ,2)

+ pow(feature[p].pt.y - cvmGet(cn1,j,i),2) //(temp_mat,i,j,1) ,2)

+ pow(feature[p].pt.z - cvmGet(cn2,j,i),2);//(temp_mat,i,j,2) ,2);

if(p==0)

{

min_dis = unclass_dis;

min_dis_index = p;

}

else

{

if(unclass_dis < min_dis)

{

min_dis = unclass_dis;

min_dis_index = p;

}

}

}// end for 与特征比较

cvSetReal2D(feature[min_dis_index].result ,j ,i ,1);

}

}//完成未分类的像素点的分类

cvSetZero(final_class_mat);

for( i = 0 ; i < k ; i++)

{

cvSet(temp_mat, cvScalar(rand()%255,rand()%255,rand()%255,rand()%255), feature[i].result);

cvCopy(temp_mat,final_class_mat,feature[i].result);

}

cvConvert(final_class_mat,dst);

//删除小于Ncon大小的区域

for( i = 0 ; i < k ; i++)

{

cvClearMemStorage(storage);

if(seq) cvClearSeq(seq);

cvConvert( feature[i].result , gray);

cvFindContours( gray , storage , & seq ,sizeof(CvContour) , CV_RETR_LIST);

for(temp_seq = seq ; temp_seq ; temp_seq = temp_seq->h_next)

{

CvContour * cnt = (CvContour*)seq;

if(cnt->rect.width * cnt->rect.height < Ncon)

{

prev_seq = temp_seq->h_prev;

if(prev_seq)

{

prev_seq->h_next = temp_seq->h_next;

if(temp_seq->h_next) temp_seq->h_next->h_prev = prev_seq ;

}

else

{

seq = temp_seq->h_next ;

if(temp_seq->h_next ) temp_seq->h_next->h_prev = NULL ;

}

}

}//

cvDrawContours(src, seq , CV_RGB(0,0,255) ,CV_RGB(0,0,255),1);

}

//----------------释放空间-------------------------------------------------------

cvReleaseImage(& temp);

cvReleaseImage(& gray);

cvReleaseMat(&distance);

cvReleaseMat(&result);

cvReleaseMat(&temp_mat);

cvReleaseMat(&temp_mat_sub);

cvReleaseMat(&temp_mat_sub2);

cvReleaseMat(&final_class_mat);

cvReleaseMat(&cn);

cvReleaseMat(&cn1);

cvReleaseMat(&cn2);

cvReleaseMat(&cn3);

}

-------------------------------------------------------------------------------------------------------------------------------

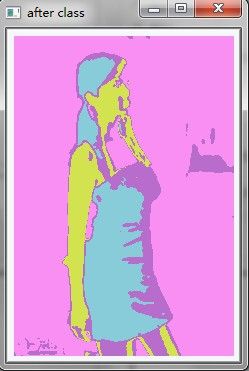

》效果

运行时间16.5s

原图:

分割图:

被改写了的原图:

-------------------------------------------------------------------------------------------------------------------------------

Author: SKySeraph

Email/GTalk: [email protected] QQ:452728574

From: http://www.cnblogs.com/skyseraph/

本文版权归作者和博客园共有,欢迎转载,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文连接,否则保留追究法律责任的权利.

-------------------------------------------------------------------------------------------------------------------------------