Memcached Memory Pool Analysis

Based on the Memcached 1.4.15 source code

1. Complete slabs memory pool diagram

Here is the object-relationship diagram of memcached's slabs memory pool that I drew:

2. Memory pool data structures

typedef struct {

unsigned int size; /* size of each item (chunk) */

unsigned int perslab; /* number of items per page */

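void *slots; // head of the free-item list; right after a page is split it points to the last free chunk of that page, so with perslab = 1, slots and slab_list[0] point to the same address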

unsigned int sl_curr; // number of free items currently in the list; counts down as items are handed out

unsigned int slabs; // number of pages (slabs) allocated for this class

void **slab_list; // array of page pointers; each points to a page, which is also the address of its first chunk, so (item *)((&slabclass[N])->slab_list[0]) gives the first item

unsigned int list_size; // capacity of the slab_list array: 0 initially, 16 after the first grow, then doubled each time

unsigned int killing; /* index+1 of dying slab, or zero if none */

size_t requested; // total bytes requested by the items in this class (sum of ntotal, not of chunk sizes)

} slabclass_t;

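//slots: head of the free-chunk list. In 1.4.15 this is not an array of pointers: each free chunk is itself an item, and the item's next/prev fields link the free chunks of every page in the same slabclass into one doubly linked list;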

//slabs: number of memory pages allocated to the current slabclass;

//slab_list: array of pointers to the pages (slabs) allocated to the current slabclass; each element points to the start of one page;

// size_t new_size = (p->list_size != 0) ? p->list_size * 2 : 16;

// void *new_list = realloc(p->slab_list, new_size * sizeof(void *));

// if (new_list == 0) return 0;

// p->list_size = new_size;

// p->slab_list = new_list;

static slabclass_t slabclass[MAX_NUMBER_OF_SLAB_CLASSES];

static size_t mem_limit = 0; // memory limit, set in slabs_init; 64 MB by default

static size_t mem_malloced = 0; // bytes allocated so far

static int power_largest; // index of the largest slab class; 42 with the default settings

static void *mem_base = NULL;

static void *mem_current = NULL; // current allocation position inside the preallocated block

static size_t mem_avail = 0; // bytes remaining in the preallocated block

/**

* slab thread locks

*/

static pthread_mutex_t slabs_lock = PTHREAD_MUTEX_INITIALIZER;

static pthread_mutex_t slabs_rebalance_lock = PTHREAD_MUTEX_INITIALIZER;
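memory_allocate() is not quoted in this article, but the globals mem_base, mem_current and mem_avail suggest how pages are carved out of the preallocated block: mem_current moves forward and mem_avail shrinks. Below is a minimal bump-allocator sketch under that assumption; pool_base, pool_current, pool_avail and bump_allocate are hypothetical names, not memcached's code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define CHUNK_ALIGN_BYTES 8

/* Hypothetical stand-ins for mem_base, mem_current and mem_avail. */
static void *pool_base = NULL;
static void *pool_current = NULL;
static size_t pool_avail = 0;

/* Sketch: hand out memory from the preallocated block by bumping a pointer,
 * falling back to plain malloc when nothing was preallocated. */
static void *bump_allocate(size_t size) {
    void *ret;
    if (pool_base == NULL)
        return malloc(size);              /* no big block: one malloc per page */
    if (size > pool_avail)
        return NULL;                      /* preallocated block exhausted */
    ret = pool_current;
    if (size % CHUNK_ALIGN_BYTES)         /* keep pool_current 8-byte aligned */
        size += CHUNK_ALIGN_BYTES - (size % CHUNK_ALIGN_BYTES);
    pool_current = (char *)pool_current + size;
    pool_avail = (size < pool_avail) ? pool_avail - size : 0;
    return ret;
}
```

When pool_base is NULL every page simply comes from malloc, which corresponds to the fallback slabs_init warns about when the single large malloc fails.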

3. Initialization: slabs_init

/* slab initialization */

/* limit: memory size in bytes; factor: growth factor; prealloc: whether to allocate all memory at once */

void slabs_init(const size_t limit, const double factor, const bool prealloc) {

int i = POWER_SMALLEST - 1;//0

unsigned int size = sizeof(item) + settings.chunk_size; // chunk_size: minimum space reserved for key, suffix and data

mem_limit = limit; // memory limit

if (prealloc) { // allocate all of the configured memory at once

/* Allocate everything in a big chunk with malloc */

mem_base = malloc(mem_limit);

if (mem_base != NULL) {

mem_current = mem_base;

mem_avail = mem_limit;

} else {

fprintf(stderr, "Warning: Failed to allocate requested memory in one large chunk.\nWill allocate in smaller chunks\n");

}

}

memset(slabclass, 0, sizeof(slabclass));

//settings.item_size_max = 1024 * 1024=1M; /* The famous 1MB upper limit. */

//settings.item_size_max / factor = 1048576 / 1.25 = 838860.8 bytes: the loop only creates classes whose chunk size is at most this value

//slabclass[41] {size=717184 perslab=1 slots=0x00000000 ...} slabclass_t

//so the loop exits once i reaches 42

//slabclass[42] {size=1048576 perslab=1 slots=0x00000000 ...} slabclass_t

//class 43 is never set up

//slabclass[43] {size=0 perslab=0 slots=0x00000000 ...} slabclass_t

while (++i < POWER_LARGEST && size <= settings.item_size_max / factor) {

/* Make sure items are always n-byte aligned */

if (size % CHUNK_ALIGN_BYTES) // round the size up to a multiple of 8 bytes

size += CHUNK_ALIGN_BYTES - (size % CHUNK_ALIGN_BYTES);

slabclass[i].size = size; // chunk size of this class

slabclass[i].perslab = settings.item_size_max / slabclass[i].size; // number of chunks per page

size *= factor; // multiply by the growth factor

if (settings.verbose > 1) {

fprintf(stderr, "slab class %3d: chunk size %9u perslab %7u\n",i, slabclass[i].size, slabclass[i].perslab);

}

}

power_largest = i; // 42 by default

slabclass[power_largest].size = settings.item_size_max;

slabclass[power_largest].perslab = 1; // the largest class stores only one item per page

if (settings.verbose > 1) {

fprintf(stderr, "slab class %3d: chunk size %9u perslab %7u\n",i, slabclass[i].size, slabclass[i].perslab);

}

/* for the test suite: faking of how much we've already malloc'd */

{

char *t_initial_malloc = getenv("T_MEMD_INITIAL_MALLOC");

if (t_initial_malloc) {

mem_malloced = (size_t)atol(t_initial_malloc);

}

}

#ifndef DONT_PREALLOC_SLABS

{

char *pre_alloc = getenv("T_MEMD_SLABS_ALLOC");

if (pre_alloc == NULL || atoi(pre_alloc) != 0) {

slabs_preallocate(power_largest);

}

}

#endif

}
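To see where the numbers in the comments above come from, the sizing loop can be replayed on its own. This is a sketch assuming factor 1.25, item_size_max = 1 MB, 8-byte alignment and an upper index bound of 200 (my assumption for POWER_LARGEST); build_classes is a hypothetical helper that copies the loop body of slabs_init.

```c
#include <assert.h>
#include <string.h>

#define CHUNK_ALIGN_BYTES 8
#define MAX_CLASS_INDEX 200   /* assumed value of POWER_LARGEST */

static unsigned int class_size[MAX_CLASS_INDEX + 1];
static unsigned int class_perslab[MAX_CLASS_INDEX + 1];

/* Replays the sizing loop of slabs_init and returns power_largest.
 * first_size plays the role of sizeof(item) + settings.chunk_size. */
static int build_classes(unsigned int first_size, double factor,
                         unsigned int item_size_max) {
    int i = 0;                              /* POWER_SMALLEST - 1 */
    unsigned int size = first_size;
    memset(class_size, 0, sizeof(class_size));
    memset(class_perslab, 0, sizeof(class_perslab));
    while (++i < MAX_CLASS_INDEX && size <= item_size_max / factor) {
        if (size % CHUNK_ALIGN_BYTES)       /* round up to 8 bytes */
            size += CHUNK_ALIGN_BYTES - (size % CHUNK_ALIGN_BYTES);
        class_size[i] = size;
        class_perslab[i] = item_size_max / size;
        size *= factor;                     /* result truncated back to unsigned int */
    }
    class_size[i] = item_size_max;          /* largest class: one max-size item per page */
    class_perslab[i] = 1;
    return i;
}
```

With a 96-byte first class (sizeof(item) = 48 plus chunk_size 48, as in the 64-bit gdb session later in this article), class 41 comes out at 771184 bytes. With an 80-byte first class (sizeof(item) = 32, which I assume is what the VS2012 build behind those comments used), class 41 is 717184, the value quoted above. In both cases the loop stops at i = 42.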

4. Allocating the first slab

The first allocation starts in do_item_alloc, which calls do_slabs_alloc (through slabs_alloc). The call stack at do_item_alloc:

#0 do_item_alloc (key=0x7ffff0013754 "key", nkey=3, flags=0, exptime=0, nbytes=5, cur_hv=0) at items.c:190

#1 0x0000000000415706 in item_alloc (key=0x7ffff0013754 "key", nkey=3, flags=0, exptime=0, nbytes=5) at thread.c:486

#2 0x000000000040a38e in process_update_command (c=0x7ffff0013550, tokens=0x7ffff7ae4b00, ntokens=6, comm=2, handle_cas=false) at memcached.c:2917

#3 0x000000000040b43d in process_command (c=0x7ffff0013550, command=0x7ffff0013750 "set") at memcached.c:3258

#4 0x000000000040bfa1 in try_read_command (c=0x7ffff0013550) at memcached.c:3504

#5 0x000000000040cc25 in drive_machine (c=0x7ffff0013550) at memcached.c:3824

#6 0x000000000040d81f in event_handler (fd=37, which=2, arg=0x7ffff0013550) at memcached.c:4065

#7 0x00007ffff7dc9e0c in event_process_active_single_queue (base=0x635bb0, flags=0) at event.c:1350

#8 event_process_active (base=0x635bb0, flags=0) at event.c:1420

#9 event_base_loop (base=0x635bb0, flags=0) at event.c:1621

#10 0x0000000000415416 in worker_libevent (arg=0x628d60) at thread.c:384

#11 0x0000003441607851 in start_thread () from /lib64/libpthread.so.0

#12 0x00000034412e890d in clone () from /lib64/libc.so.6

When a client stores a key/value pair, the request reaches do_item_alloc:

/*@null@*/

item *do_item_alloc(char *key, const size_t nkey, const int flags,

const rel_time_t exptime, const int nbytes,

const uint32_t cur_hv) {

uint8_t nsuffix;

item *it = NULL;

char suffix[40];

size_t ntotal = item_make_header(nkey + 1, flags, nbytes, suffix, &nsuffix);

if (settings.use_cas) {

ntotal += sizeof(uint64_t); // 8 more bytes for the CAS value; ntotal is the total size to allocate: item header + key + suffix + data

}

unsigned int id = slabs_clsid(ntotal);

if (id == 0)

return 0;

mutex_lock(&cache_lock);

/* do a quick check if we have any expired items in the tail.. */

int tries = 5;

int tried_alloc = 0;

item *search;

void *hold_lock = NULL;

rel_time_t oldest_live = settings.oldest_live;

search = tails[id];

/* We walk up *only* for locked items. Never searching for expired.

* Waste of CPU for almost all deployments */

for (; tries > 0 && search != NULL; tries--, search=search->prev) {

uint32_t hv = hash(ITEM_key(search), search->nkey, 0);

/* Attempt to hash item lock the "search" item. If locked, no

* other callers can incr the refcount

*/

/* FIXME: I think we need to mask the hv here for comparison? */

if (hv != cur_hv && (hold_lock = item_trylock(hv)) == NULL)

continue;

/* Now see if the item is refcount locked */

if (refcount_incr(&search->refcount) != 2) {

refcount_decr(&search->refcount);

/* Old rare bug could cause a refcount leak. We haven't seen

* it in years, but we leave this code in to prevent failures

* just in case */

if (search->time + TAIL_REPAIR_TIME < current_time) {

itemstats[id].tailrepairs++;

search->refcount = 1;

do_item_unlink_nolock(search, hv);

}

if (hold_lock)

item_trylock_unlock(hold_lock);

continue;

}

/* Expired or flushed */

if ((search->exptime != 0 && search->exptime < current_time)

|| (search->time <= oldest_live && oldest_live <= current_time)) {

itemstats[id].reclaimed++;

if ((search->it_flags & ITEM_FETCHED) == 0) {

itemstats[id].expired_unfetched++;

}

it = search;

slabs_adjust_mem_requested(it->slabs_clsid, ITEM_ntotal(it), ntotal);

do_item_unlink_nolock(it, hv);

/* Initialize the item block: */

it->slabs_clsid = 0;

} else if ((it = slabs_alloc(ntotal, id)) == NULL) {
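The size=71 that appears in the gdb backtrace of do_slabs_alloc can be reproduced by hand. This sketch assumes item_make_header() builds the suffix as " <flags> <nbytes - 2>\r\n" (as in 1.4.x) and that sizeof(item) is 48, as gdb reports on this 64-bit build; item_total_size is a hypothetical helper.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

#define ITEM_HEADER_SIZE 48   /* assumed sizeof(item) on the 64-bit build */

/* Hypothetical helper mirroring item_make_header(nkey + 1, ...) plus the
 * CAS adjustment in do_item_alloc. nbytes includes the trailing "\r\n". */
static size_t item_total_size(int nkey, int flags, int nbytes, bool use_cas) {
    char suffix[40];
    /* suffix is " <flags> <value length>\r\n", e.g. " 0 3\r\n" (6 bytes) */
    int nsuffix = snprintf(suffix, sizeof(suffix), " %d %d\r\n", flags, nbytes - 2);
    size_t ntotal = ITEM_HEADER_SIZE
                  + (size_t)(nkey + 1)      /* key plus terminating NUL */
                  + (size_t)nsuffix
                  + (size_t)nbytes;
    if (use_cas)
        ntotal += sizeof(uint64_t);         /* 8-byte CAS value */
    return ntotal;
}
```

For the key "key" (nkey = 3) and a 3-byte value (nbytes = 5 including "\r\n") this gives 48 + 4 + 6 + 5 = 63 bytes, plus 8 for CAS = 71, which also matches the ntotal = 63 used in the simulation of section 9.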

do_item_alloc first calls slabs_clsid() to pick the slab class:

//find the slab class whose chunks fit an item of the given size

unsigned int slabs_clsid(const size_t size) {

int res = POWER_SMALLEST;

if (size == 0)

return 0;

while (size > slabclass[res].size) // find the first class whose chunk size is >= the item size

if (res++ == power_largest)

return 0;

return res;

}
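slabs_clsid() is a plain linear scan. Here is a self-contained sketch over a hypothetical five-class excerpt of the default table (96 to 240 bytes, with class 5 pretending to be the largest); find_clsid mirrors the loop above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical excerpt of the class table: index 0 is unused and classes
 * 1..5 use the default sizes; class 5 pretends to be the largest. */
static const unsigned int class_size[] = {0, 96, 120, 152, 192, 240};
static const int power_largest = 5;

/* Same scan as slabs_clsid: first class whose chunk can hold `size` bytes. */
static unsigned int find_clsid(size_t size) {
    int res = 1;                        /* POWER_SMALLEST */
    if (size == 0)
        return 0;
    while (size > class_size[res])
        if (res++ == power_largest)
            return 0;                   /* larger than the largest class */
    return (unsigned int)res;
}
```

The 71-byte request from the backtrace lands in class 1, and a 108-byte request (100 plus 8 for CAS) lands in class 2. Anything above the largest class yields 0, which do_item_alloc treats as failure.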

do_slabs_alloc returns the item that slots points to and moves slots on to the next free item:

/* allocate a chunk for an item */

static void *do_slabs_alloc(const size_t size, unsigned int id) {

slabclass_t *p;

void *ret = NULL;

item *it = NULL;

if (id < POWER_SMALLEST || id > power_largest) {

MEMCACHED_SLABS_ALLOCATE_FAILED(size, 0);

return NULL;

}

p = &slabclass[id];

assert(p->sl_curr == 0 || ((item *)p->slots)->slabs_clsid == 0);

/* fail unless we have space at the end of a recently allocated page,

we have something on our freelist, or we could allocate a new page */

//p->sl_curr != 0 means there are still free chunks, so do_slabs_newslab is not called to allocate another page

if (! (p->sl_curr != 0 || do_slabs_newslab(id) != 0)) {

/* We don't have more memory available */

ret = NULL;

} else if (p->sl_curr != 0) {

/* return off our freelist */

it = (item *)p->slots; // take the head of the free list; right after a split this is the last chunk of the page

p->slots = it->next; // the new head is it->next, the second-to-last chunk, i.e. the one just before it in memory

if (it->next) it->next->prev = 0; // it has been handed out, so the new head has no predecessor

p->sl_curr--; // one fewer free chunk; right after a split perslab equals sl_curr, but perslab never changes (it is the chunk count per page) while sl_curr counts down

ret = (void *)it;

}

if (ret) {

p->requested += size;

MEMCACHED_SLABS_ALLOCATE(size, id, p->size, ret);

} else {

MEMCACHED_SLABS_ALLOCATE_FAILED(size, id);

}

return ret;

}
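The sl_curr != 0 branch is a pop from the head of a doubly linked list. A minimal sketch with hypothetical chunk and freelist types that keep only the fields involved:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for item: only the free-list links. */
typedef struct chunk {
    struct chunk *next;
    struct chunk *prev;
} chunk;

/* Hypothetical stand-in for the free-list fields of slabclass_t. */
typedef struct {
    chunk *slots;           /* head of the free list */
    unsigned int sl_curr;   /* number of free chunks */
} freelist;

/* The sl_curr != 0 branch of do_slabs_alloc. Returns NULL when the list is
 * empty, where memcached would call do_slabs_newslab instead. */
static chunk *freelist_pop(freelist *p) {
    chunk *it;
    if (p->sl_curr == 0)
        return NULL;
    it = p->slots;
    p->slots = it->next;
    if (it->next)
        it->next->prev = NULL;   /* the new head has no predecessor */
    p->sl_curr--;
    return it;                   /* it->next is left untouched */
}
```

As in the code above, the popped chunk's own next pointer is not cleared; the gdb dump of the allocated item in section 8 still shows next = 0x7ffff52e0f10.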

The call stack captured in gdb:

#0 do_slabs_alloc (size=71, id=1) at slabs.c:241

#1 0x000000000041161d in slabs_alloc (size=71, id=1) at slabs.c:404

#2 0x0000000000412ae6 in do_item_alloc (key=0x7ffff0013754 "key", nkey=3, flags=0, exptime=0, nbytes=5, cur_hv=0) at items.c:188

#3 0x0000000000415706 in item_alloc (key=0x7ffff0013754 "key", nkey=3, flags=0, exptime=0, nbytes=5) at thread.c:486

#4 0x000000000040a38e in process_update_command (c=0x7ffff0013550, tokens=0x7ffff7ae4b00, ntokens=6, comm=2, handle_cas=false) at memcached.c:2917

#5 0x000000000040b43d in process_command (c=0x7ffff0013550, command=0x7ffff0013750 "set") at memcached.c:3258

#6 0x000000000040bfa1 in try_read_command (c=0x7ffff0013550) at memcached.c:3504

#7 0x000000000040cc25 in drive_machine (c=0x7ffff0013550) at memcached.c:3824

#8 0x000000000040d81f in event_handler (fd=37, which=2, arg=0x7ffff0013550) at memcached.c:4065

#9 0x00007ffff7dc9e0c in event_process_active_single_queue (base=0x635bb0, flags=0) at event.c:1350

#10 event_process_active (base=0x635bb0, flags=0) at event.c:1420

#11 event_base_loop (base=0x635bb0, flags=0) at event.c:1621

#12 0x0000000000415416 in worker_libevent (arg=0x628d60) at thread.c:384

#13 0x0000003441607851 in start_thread () from /lib64/libpthread.so.0

#14 0x00000034412e890d in clone () from /lib64/libc.so.6

5. The first slab_list allocation

do_slabs_newslab allocates a new page for the class and splits it into chunks:

static int do_slabs_newslab(const unsigned int id) {

slabclass_t *p = &slabclass[id];

//settings.item_size_max = 1024 * 1024; /* The famous 1MB upper limit. */

int len = settings.slab_reassign ? settings.item_size_max

: p->size * p->perslab;

char *ptr;

if ((mem_limit && mem_malloced + len > mem_limit && p->slabs > 0) ||

(grow_slab_list(id) == 0) ||

((ptr = memory_allocate((size_t)len)) == 0)) {

MEMCACHED_SLABS_SLABCLASS_ALLOCATE_FAILED(id);

return 0;

}

memset(ptr, 0, (size_t)len);

split_slab_page_into_freelist(ptr, id);

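//Neat: on the first call to do_slabs_newslab p->slabs is 0, so the page goes into slab_list[0] and slabs becomes 1;

//on the second call slabs is 1, so the second page (this ptr) is stored in p->slab_list[1] and slabs becomes 2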

p->slab_list[p->slabs++] = ptr;

mem_malloced += len;

MEMCACHED_SLABS_SLABCLASS_ALLOCATE(id);

return 1;

}
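Two details of do_slabs_newslab are easy to miss: the page length depends on settings.slab_reassign, and the memory-limit test only refuses a page if the class already owns one (p->slabs > 0), so every class can still get its first page after mem_limit has been reached. A sketch of both rules; page_length and over_limit are hypothetical helpers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Page length in do_slabs_newslab: a full item_size_max page when
 * slab_reassign is on, otherwise exactly size * perslab bytes. */
static size_t page_length(bool slab_reassign, unsigned int size,
                          unsigned int perslab, unsigned int item_size_max) {
    return slab_reassign ? item_size_max : (size_t)size * perslab;
}

/* Memory-limit part of the check: refuse only if a limit is set, it would
 * be exceeded, and this class already has at least one page. */
static bool over_limit(size_t mem_limit, size_t mem_malloced, size_t len,
                       unsigned int slabs) {
    return mem_limit && mem_malloced + len > mem_limit && slabs > 0;
}
```

For class 1 with slab_reassign off, a page is 96 * 10922 = 1048512 bytes, 64 bytes short of 1 MB.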

On the first call, p->slab_list gets room for 16 pointers, i.e. 16*8 = 128 bytes on a 64-bit system.

//grow the slab_list pointer array when it is full: 16 entries the first time, doubling after that

static int grow_slab_list (const unsigned int id) {

slabclass_t *p = &slabclass[id];

if (p->slabs == p->list_size) {

//new_size is 16 the first time

size_t new_size = (p->list_size != 0) ? p->list_size * 2 : 16;

//realloc, because the array may need to grow again later

//p->slab_list starts as NULL, and realloc(NULL, n) behaves like malloc(n); sizeof(void *) is 8 on 64-bit and 4 on 32-bit

void *new_list = realloc(p->slab_list, new_size * sizeof(void *));

if (new_list == 0) return 0;

p->list_size = new_size;

p->slab_list = new_list;

}

return 1;

}
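The growth rule is an ordinary doubling array. A sketch with a hypothetical pagelist type that keeps only slab_list, slabs and list_size, plus a hypothetical add_page helper doing what the p->slab_list[p->slabs++] = ptr line does:

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for the slab_list bookkeeping in slabclass_t. */
typedef struct {
    void **slab_list;
    unsigned int slabs;      /* pages stored so far */
    unsigned int list_size;  /* capacity of slab_list */
} pagelist;

/* Same growth rule as grow_slab_list: 0 -> 16 -> 32 -> 64 ... */
static int grow_list(pagelist *p) {
    if (p->slabs == p->list_size) {
        size_t new_size = (p->list_size != 0) ? p->list_size * 2 : 16;
        void *new_list = realloc(p->slab_list, new_size * sizeof(void *));
        if (new_list == NULL)
            return 0;        /* the old array stays valid on failure */
        p->list_size = (unsigned int)new_size;
        p->slab_list = new_list;
    }
    return 1;
}

/* Record a page the way do_slabs_newslab does. */
static int add_page(pagelist *p, void *page) {
    if (!grow_list(p))
        return 0;
    p->slab_list[p->slabs++] = page;
    return 1;
}
```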

do_slabs_newslab then calls split_slab_page_into_freelist to split ptr into chunks.

6. Splitting ptr

split_slab_page_into_freelist:

static void split_slab_page_into_freelist(char *ptr, const unsigned int id) {

slabclass_t *p = &slabclass[id];

int x;

for (x = 0; x < p->perslab; x++) {

do_slabs_free(ptr, 0, id);

ptr += p->size; // advance ptr by one chunk: 96 bytes for class 1 with the default settings

}

}

The function is short: it simply calls do_slabs_free once for each chunk.

//return a chunk to the free list (split_slab_page_into_freelist uses it to carve up a new page)

static void do_slabs_free(void *ptr, const size_t size, unsigned int id) {

slabclass_t *p;

item *it; // the chunk, viewed as an item

assert(((item *)ptr)->slabs_clsid == 0);

assert(id >= POWER_SMALLEST && id <= power_largest);

if (id < POWER_SMALLEST || id > power_largest)

return;

MEMCACHED_SLABS_FREE(size, id, ptr);

p = &slabclass[id]; // the slabclass_t for this id

it = (item *)ptr; // treat the chunk being carved out as an item *

it->it_flags |= ITEM_SLABBED;

it->prev = 0; // it becomes the new list head, so it has no predecessor

it->next = p->slots; // it->next is the current head, i.e. the chunk carved out in the previous iteration (the one just before it in memory)

if (it->next) it->next->prev = it; // if there is an old head (from the 2nd chunk on), link its prev back to it

//standard head insertion into a doubly linked list

p->slots = it; // slots always points to the most recently freed chunk; each do_slabs_free call links in one more chunk

p->sl_curr++; // one more free item in this class

p->requested -= size;

return;

}
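Taken together, split_slab_page_into_freelist and do_slabs_free push every chunk of the page onto the head of the list, so the last chunk in memory ends up at the head and each next pointer leads to the chunk just before it. A minimal sketch with hypothetical chunk/freelist types (only next and prev kept) that reproduces that order:

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct chunk {       /* hypothetical: only the free-list links */
    struct chunk *next;
    struct chunk *prev;
} chunk;

typedef struct {             /* hypothetical: free-list part of slabclass_t */
    chunk *slots;
    unsigned int sl_curr;
} freelist;

/* Push one chunk onto the head of the free list (the core of do_slabs_free). */
static void freelist_push(freelist *p, void *ptr) {
    chunk *it = ptr;
    it->prev = NULL;
    it->next = p->slots;
    if (it->next)
        it->next->prev = it;
    p->slots = it;
    p->sl_curr++;
}

/* Carve a page into perslab chunks of size bytes, in address order. */
static void split_page(freelist *p, char *page, size_t size, unsigned int perslab) {
    for (unsigned int x = 0; x < perslab; x++) {
        freelist_push(p, page);
        page += size;
    }
}
```

This matches the gdb dumps that follow: the head is the highest address and next walks backwards one chunk (96 bytes) at a time.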

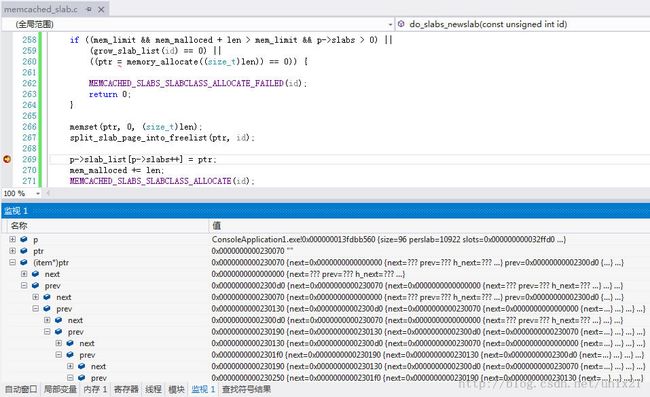

First iteration:

(gdb) p ptr

$5 = 0x7ffff51e1010 ""

In VS2012 the watch window shows: ptr 0x0000000000230070 "" char *

> split_slab_page_into_freelist(ptr, id);

Second iteration:

(gdb) p ptr

$25 = (void *) 0x7ffff51e1070

0x70 - 0x10 = 0x60 = 96, exactly one chunk.

Third iteration:

(gdb) p ptr

$50 = (void *) 0x7ffff51e10d0

(gdb) p *(it-2)

$46 = {next = 0x7ffff51e1010, prev = 0x7ffff51e10d0, h_next = 0x0, time = 0, exptime = 0, nbytes = 0, refcount = 0, nsuffix = 0 '\000', it_flags = 4 '\004', slabs_clsid = 0 '\000', nkey = 0 '\000', data = 0x7ffff51e1070}

(gdb) p *(it-4)

$54 = {next = 0x0, prev = 0x7ffff51e1070, h_next = 0x0, time = 0, exptime = 0, nbytes = 0, refcount = 0, nsuffix = 0 '\000', it_flags = 4 '\004', slabs_clsid = 0 '\000', nkey = 0 '\000', data = 0x7ffff51e1010}

Why is it-2 the previous chunk?

Because:

(gdb) p sizeof(it)

$57 = 8

(gdb) p sizeof(item)

$58 = 48

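it is a pointer, so sizeof(it) is 8, while the item struct is 48 bytes. it-2 therefore moves back 2 * 48 = 96 bytes, which happens to equal the 96-byte chunk size of class 1. This is a coincidence; it is not how the previous item should be found!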
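The arithmetic behind the coincidence: it - N moves N * sizeof(item) bytes, while chunks are p->size bytes apart. A small sketch (both helper names are hypothetical) checking when the two line up:

```c
#include <assert.h>
#include <stddef.h>

/* How many sizeof(item) steps (it - N) make up one chunk, or -1 if the
 * chunk size is not a whole multiple of sizeof(item). */
static long item_steps_per_chunk(size_t chunk_size, size_t item_size) {
    if (chunk_size % item_size != 0)
        return -1;
    return (long)(chunk_size / item_size);
}

/* The chunk just before `it` in memory: step back chunk_size bytes. */
static void *chunk_before(void *it, size_t chunk_size) {
    return (char *)it - chunk_size;
}
```

Class 2 has 120-byte chunks and 120 / 48 = 2.5, so there no it - N points at a chunk at all.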

static void split_slab_page_into_freelist(char *ptr, const unsigned int id) {

slabclass_t *p = &slabclass[id];

int x;

for (x = 0; x < p->perslab; x++) {

do_slabs_free(ptr, 0, id);

ptr += p->size;

}

}

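Each iteration advances ptr by p->size bytes, so neighbouring chunks are p->size bytes apart, while it-N steps in units of sizeof(item).

So just follow the next pointer instead: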

(gdb) p it->next

$67 = (struct _stritem *) 0x7ffff51e1070

(gdb) p *it->next

$68 = {next = 0x7ffff51e1010, prev = 0x7ffff51e10d0, h_next = 0x0, time = 0, exptime = 0, nbytes = 0, refcount = 0, nsuffix = 0 '\000', it_flags = 4 '\004', slabs_clsid = 0 '\000', nkey = 0 '\000', data = 0x7ffff51e1070}

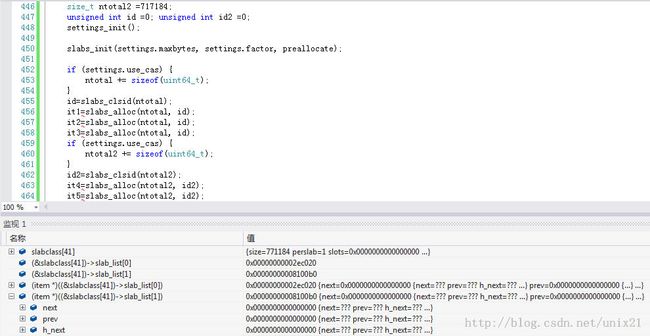

Below is the same data in the VS2012 debugger, which makes it clear at a glance:

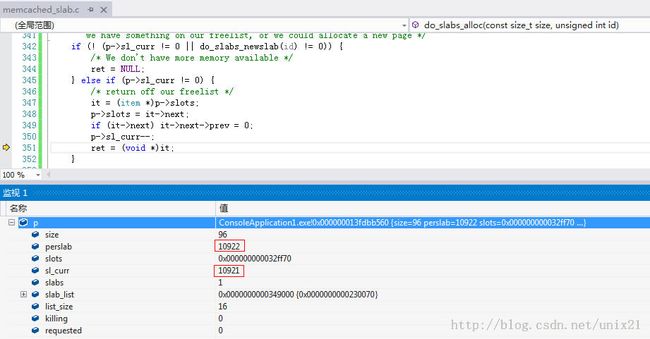

7. After ptr has been split

Back in do_slabs_newslab, p->slab_list[p->slabs++] = ptr; and mem_malloced += len; are executed.

Then execution returns to do_slabs_alloc:

it = (item *)p->slots; // take the head of the free list; right after a split this is the last chunk of the page

p->slots = it->next; // the new head is it->next, the second-to-last chunk, i.e. the one just before it in memory

if (it->next) it->next->prev = 0; // it has been handed out, so the new head has no predecessor

p->sl_curr--; // one fewer free chunk; right after a split perslab equals sl_curr, but perslab never changes (it is the chunk count per page) while sl_curr counts down

ret = (void *)it;

(gdb) p *p

$72 = {size = 96, perslab = 10922, slots = 0x7ffff52e0f70, sl_curr = 10922, slabs = 1, slab_list = 0x7ffff00169e0, list_size = 16, killing = 0, requested = 0}

(gdb) n

(gdb) p *p

$73 = {size = 96, perslab = 10922, slots = 0x7ffff52e0f10, sl_curr = 10922, slabs = 1, slab_list = 0x7ffff00169e0, list_size = 16, killing = 0, requested = 0}

After p->sl_curr-- executes, sl_curr has dropped by 1, while perslab is unchanged:

$75 = {size = 96, perslab = 10922, slots = 0x7ffff52e0f10, sl_curr = 10921, slabs = 1, slab_list = 0x7ffff00169e0, list_size = 16, killing = 0, requested = 0}
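The slots addresses in these dumps can be checked by hand. Class 1 has perslab = 1048576 / 96 = 10922 chunks, and because the split pushes chunks in address order, the head after splitting is the last chunk: 0x7ffff51e1010 + 10921 * 96 = 0x7ffff51e1010 + 0xFFF60 = 0x7ffff52e0f70. After one pop the head moves back 96 bytes to 0x7ffff52e0f10. A sketch of that computation; chunk_addr is a hypothetical helper and the base address is the first ptr value from the gdb session.

```c
#include <assert.h>
#include <stdint.h>

/* Address of chunk `index` (0-based) in a page that starts at `base`
 * and is split into chunks of `size` bytes. */
static uint64_t chunk_addr(uint64_t base, unsigned int size, unsigned int index) {
    return base + (uint64_t)size * index;
}
```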

The result in VS2012 is the same:

8. After the item has been allocated

Back in do_item_alloc, we now have the allocated item:

(gdb) p *it

$79 = {next = 0x7ffff52e0f10, prev = 0x0, h_next = 0x0, time = 0, exptime = 0, nbytes = 0, refcount = 0, nsuffix = 0 '\000', it_flags = 4 '\004', slabs_clsid = 0 '\000', nkey = 0 '\000', data = 0x7ffff52e0f70}

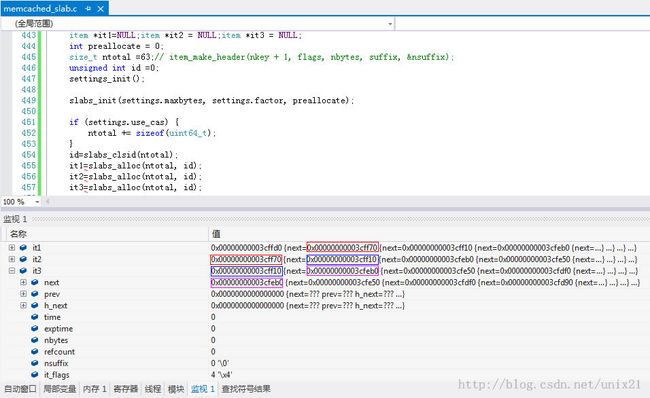

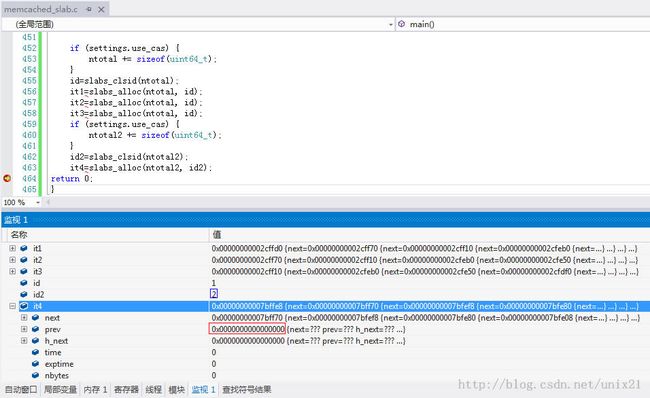

9. Simulating memcached's calls into slabs

1. Allocate three items of the same size.

2. Deliberately request 100 bytes, more than the default 96-byte chunk. The debugging output shows that id2 is computed as the second slab class:

int main()

{

item *it1=NULL;item *it2 = NULL;item *it3 = NULL;item *it4 = NULL;

int preallocate = 0;

size_t ntotal =63;// item_make_header(nkey + 1, flags, nbytes, suffix, &nsuffix);

size_t ntotal2 =100;

unsigned int id =0; unsigned int id2 =0;

settings_init();

slabs_init(settings.maxbytes, settings.factor, preallocate);

if (settings.use_cas) {

ntotal += sizeof(uint64_t);

}

id=slabs_clsid(ntotal);

it1=slabs_alloc(ntotal, id);

it2=slabs_alloc(ntotal, id);

it3=slabs_alloc(ntotal, id);

if (settings.use_cas) {

ntotal2 += sizeof(uint64_t);

}

id2=slabs_clsid(ntotal2);

it4=slabs_alloc(ntotal2, id2);

return 0;

}

Creating a perslab = 1 scenario

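Request a large size, 717184 bytes, the slabclass[41] chunk size noted in the slabs_init comments (the loop bound itself, settings.item_size_max / factor, is 838860.8).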

This hits slabclass[41] (id = 41). We make two such 717184-byte requests:

The debugging result shows that slabclass[41] now has two pages in slab_list. Because each of these pages holds only one chunk, the item stored in that chunk has both next and prev equal to 0,

because when do_slabs_free carves up the page:

it->prev = 0;

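it->next = p->slots; here p->slots is 0, since the class has no free chunk left (that is why a new page was needed), and no further chunks are split from the page, so the item's next and prev both stay 0.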

item *it1=NULL;item *it2 = NULL;item *it3 = NULL;item *it4 = NULL;item *it5 = NULL;

int preallocate = 0;

size_t ntotal =63;// item_make_header(nkey + 1, flags, nbytes, suffix, &nsuffix);

size_t ntotal2 =717184;

unsigned int id =0; unsigned int id2 =0;

settings_init();

slabs_init(settings.maxbytes, settings.factor, preallocate);

if (settings.use_cas) {

ntotal += sizeof(uint64_t);

}

id=slabs_clsid(ntotal);

it1=slabs_alloc(ntotal, id);

it2=slabs_alloc(ntotal, id);

it3=slabs_alloc(ntotal, id);

if (settings.use_cas) {

ntotal2 += sizeof(uint64_t);

}

id2=slabs_clsid(ntotal2);

it4=slabs_alloc(ntotal2, id2);

it5=slabs_alloc(ntotal2, id2);