Open Source Project: The Omnimeeting Video Conferencing Program

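Omnimeeting is a cross-platform C++ video conferencing program. It uses libraries such as LiveMedia, OpenCV, DevIL, wxWidgets, and ffmpeg to stream camera video and audio over the internet in real time via the RTSP protocol. The streaming formats it uses include MJPEG, H263+, MP3, and MP3ADU. It can capture 360-degree omnidirectional images and perform face detection/tracking and motion detection. A new algorithm can also run face detection directly on the omnidirectional image (face detection and tracking are supported, and an API is provided for converting and sending omnidirectional images).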

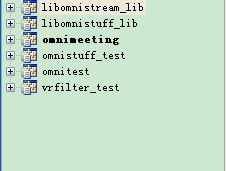

The project layout is shown in the figure:

The running program is shown in the figure:

The omnimeeting package contains three main parts (omnistuff, streaming, and gui) plus sample data files.

Analysis of selected source code:

// Create the sending and receiving streams

void MainFrame::OnMenuPreferencesClick( wxCommandEvent& event )

{

if ( m_streamer_thread == NULL ) {

m_streamer_thread = new StreamerCtrlThread( this );

}

if ( m_receiver_thread == NULL ) {

m_receiver_thread = new ReceiverCtrlThread( this );

}

DialogOptions* window = new DialogOptions(this, m_streamer_thread, m_receiver_thread, this,

ID_OPTIONS, _("Preferences..."));

window->Show(true);

}

// On close, release resources

void MainFrame::OnCloseWindow( wxCloseEvent& event )

{

// provide a clean deletion of objects.

if ( m_receiver_thread && m_receiver_thread->isReceiving() ) {

wxMessageDialog dlg1( this,

wxT("Please stop by hand the receiver, then close.") , wxT( "Warning" ), wxOK );

dlg1.ShowModal();

return;

}

if ( m_streamer_thread && m_streamer_thread->isStreaming() ) {

wxMessageDialog dlg1( this,

wxT("Please stop by hand the server streaming, then close."), wxT( "Warning" ), wxOK );

dlg1.ShowModal();

return;

}

destroy_garbage_collector_for_images ();

wxWindow* window = this;

window->Destroy();

event.Skip();

}

// Stop the server

void MainFrame::OnMenuitemActionsStopServerClick( wxCommandEvent& event )

{

if ( m_streamer_thread != NULL ) {

if ( m_streamer_thread->isStreaming() ) {

m_streamer_thread->stopStreaming();

PrintStatusBarMessage( wxT( "Streaming stopped" ), 0 );

// no need to delete the object! Being a thread it will auto-destroy after

// its exiting

// recreate a new object

m_streamer_thread = new StreamerCtrlThread( this );

}

else {

wxMessageDialog dlg1( this, wxT( "Server is not running" ), wxT( "Info" ), wxOK );

dlg1.ShowModal();

delete m_streamer_thread;

m_streamer_thread = new StreamerCtrlThread( this );

}

}

else {

wxMessageDialog dlg1( this, wxT( "Server is not running" ), wxT( "Info" ), wxOK );

dlg1.ShowModal();

}

}

// Stop receiving

void MainFrame::OnMenuitemActionsStopReceivingClick( wxCommandEvent& event )

{

if ( m_receiver_thread != NULL ) {

if ( m_receiver_thread->isReceiving() ) {

m_receiver_thread->StopReceiving();

PrintStatusBarMessage( wxT( "Receiving stopped" ), 1 );

}

else {

wxMessageDialog dlg1( this, wxT( "Receiver is not running" ), wxT( "Info" ), wxOK );

dlg1.ShowModal();

delete m_receiver_thread;

m_receiver_thread = new ReceiverCtrlThread( this );

}

}

else {

wxMessageDialog dlg1( this, wxT( "Receiver is not running" ), wxT( "Info" ), wxOK );

dlg1.ShowModal();

}

}

// Release resources once receiving has finished

void MainFrame::OnReceiverCtrlThreadEnd()

{

// provide a stop for the face_detecting/tracking class if it's available

if ( face_detect ) {

if ( gcard_use == true ) {

if ( ctrl_gcard ) {

ctrl_gcard->loop_stop();

delete ctrl_gcard;

ctrl_gcard = NULL;

}

if ( ctrl_vr_gcard ) {

ctrl_vr_gcard->loop_stop();

delete ctrl_vr_gcard;

ctrl_vr_gcard = NULL;

}

}

else {

if ( ctrl_lookup ) {

ctrl_lookup->loop_stop();

delete ctrl_lookup;

ctrl_lookup = NULL;

}

if ( ctrl_vr_lookup ) {

ctrl_vr_lookup->loop_stop();

delete ctrl_vr_lookup;

ctrl_vr_lookup = NULL;

}

}

ctrl_initialized = false;

face_detect = false;

}

// important!: set to null our class, so that we can recreate it when necessary

m_receiver_thread = NULL;

reset_displayed_image_vector();

clear_garbage_collector_for_images();

video_received_window = false;

}
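
The handler above repeats the same three steps (stop the loop, delete the object, null the pointer) for four different controllers. As a minimal sketch of how that pattern could be factored out, the helper below does the three steps in one place. `stop_and_release` and `DummyCtrl` are hypothetical names introduced here for illustration and are not part of Omnimeeting; the only assumption about the real controllers is that they expose `loop_stop()`, as the listing shows.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not in Omnimeeting): stop a controller's loop,
// free it, and reset the pointer so it can be recreated later.
template <typename Ctrl>
void stop_and_release(Ctrl*& ctrl) {
    if (ctrl != NULL) {
        ctrl->loop_stop();
        delete ctrl;
        ctrl = NULL;
    }
}

// Stand-in controller used only to demonstrate the helper.
// It counts how many times loop_stop() was called.
struct DummyCtrl {
    explicit DummyCtrl(int* stop_count) : stop_count_(stop_count) {
        assert(stop_count != NULL);
    }
    void loop_stop() { ++*stop_count_; }
    int* stop_count_;
};
```

With such a helper, each branch of the handler would shrink to one call per controller, and calling it on an already-null pointer is a harmless no-op.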

omnistuff test code:

int main(int argc, char**argv ) {

/* ok, hardcode them for now... */

double min_radius = 40;

double max_radius = 220;

double omni_center_x = 326;

double omni_center_y = 250;

OmniAlgoSimpleDetection<OmniGCardConverter, double, IplImage, CvPoint> *ctrl;

OmniConversion<OmniGCardConverter, double, IplImage, CvPoint> *conv;

bool ctrl_initialized = false;

IplImage *frame;

// init capturing

CvCapture* capture = 0;

#ifdef WIN32

const char* filename = "..\\..\\data\\videos\\video_test-1-divx5.avi";

#else

const char* filename = "../../data/videos/video_test-1-divx5.avi";

#endif

// hardcoding... it's ugly but this is a sample test file

const int c_array_length = 2;

const char *c_array[c_array_length];

#ifdef WIN32

// this seems to be the best Haar-cascade combination: it gives the lowest number of false-positive

// Anyway, improving the XMLs with good Haar training can raise performance.

c_array[0] = "..\\..\\omnistuff\\data\\haarcascades\\haarcascade_upperbody.xml";

c_array[1] = "..\\..\\omnistuff\\data\\haarcascades\\haarcascade_frontalface_default.xml";

#else

c_array[0] = "../../omnistuff/data/haarcascades/haarcascade_upperbody.xml";

c_array[1] = "../../omnistuff/data/haarcascades/haarcascade_frontalface_default.xml";

#endif

if ( argc != 2 ) {

printf( "To play a different file read below.\n" );

printf( "usage: %s <avi file>\n", argv[0] );

printf( "Now using %s\n", filename );

}

else {

filename = argv[1];

printf( "Now using %s\n", filename );

}

capture = cvCaptureFromAVI( filename );

while ( 1 ) {

frame = cvQueryFrame( capture );

if( !frame ) {

printf( "Some error occurred or end of video reached\n");

break;

}

if ( !ctrl_initialized ) {

conv = new OmniConversion<OmniGCardConverter, double, IplImage, CvPoint>(

new OmniGCardConverter( min_radius,

max_radius,

omni_center_x,

omni_center_y,

640,

480,

"omnigcardo",

"../../omnistuff/data/shaders/vertex_shader.cg",

"vertex_shader_main",

"../../omnistuff/data/shaders/pixel_shader.cg",

"pixel_shader_main") );

if ( conv->is_initialized () ) {

printf("great, gcard is initialized and ready to use...\n");

ctrl_initialized = true;

}

else {

printf( "some problem occurred: no gcard available...\n");

exit(-1);

}

ctrl = new OmniAlgoSimpleDetection<OmniGCardConverter, double, IplImage, CvPoint>( conv,

frame,

(const char**)c_array,

c_array_length,

FREEZE_FRAME_BOUND,

TRACK_WINDOW_HITRATE);

}

ctrl->loop_next_frame( frame );

cvWaitKey ( WAIT_KEY_VALUE );

}

cvReleaseCapture( &capture );

return 0;

}
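
The test above passes a minimum radius, a maximum radius, and a mirror center to the converter. To make the role of those four numbers concrete, here is a minimal CPU-only sketch of a polar lookup table that unwraps a ring of an omnidirectional image into a rectangular panorama. This is an assumption about what such parameters usually mean, not Omnimeeting's actual conversion code; `SourcePixel` and `build_unwrap_table` are hypothetical names, and the choice of which ring maps to the top row is arbitrary.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical type: the source pixel in the omnidirectional image
// that a given panorama pixel should be copied from.
struct SourcePixel { int x; int y; };

// Build a row-major table of size pano_width * pano_height.
// Each column is an angle around the mirror center, each row a radius
// between max_radius (row 0) and min_radius (last row).
std::vector<SourcePixel> build_unwrap_table(double min_radius, double max_radius,
                                            double center_x, double center_y,
                                            int pano_width, int pano_height) {
    assert(pano_width > 0 && pano_height > 0 && max_radius > min_radius);
    const double two_pi = 2.0 * std::acos(-1.0);
    std::vector<SourcePixel> table(
        static_cast<std::size_t>(pano_width) * static_cast<std::size_t>(pano_height));
    for (int row = 0; row < pano_height; ++row) {
        // Interpolate the radius linearly from the outer ring to the inner ring.
        const double t = (pano_height == 1)
            ? 0.0 : static_cast<double>(row) / (pano_height - 1);
        const double r = max_radius - t * (max_radius - min_radius);
        for (int col = 0; col < pano_width; ++col) {
            const double theta = two_pi * col / pano_width;
            SourcePixel p;
            p.x = static_cast<int>(std::lround(center_x + r * std::cos(theta)));
            p.y = static_cast<int>(std::lround(center_y + r * std::sin(theta)));
            table[static_cast<std::size_t>(row) * pano_width + col] = p;
        }
    }
    return table;
}
```

Precomputing the table once and reusing it for every frame is the usual reason to use a lookup table here: the trigonometry runs once, and each frame only needs a copy per pixel.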

omnitest test code:

int main( int argc, char** argv ) {

#ifdef WIN32

const char* filename = "..\\..\\data\\videos\\video_test-1-divx5.avi";

#else

const char* filename = "../../data/videos/video_test-1-divx5.avi";

#endif

if ( argc != 2 ) {

printf( "To play a different file read below.\n" );

printf( "usage: %s <avi file>\n", argv[0] );

printf( "Now using %s\n", filename );

}

else {

filename = argv[1];

printf( "Now using %s\n", filename );

}

MyTimer timer;

// hardcoding... it's ugly but this is a sample test file

const int c_array_length = 2;

const char *c_array[c_array_length];

#ifdef WIN32

// this seems to be the best Haar-cascade combination: it gives the lowest number of false-positive

// Anyway, improving the XMLs with good Haar training can raise performance.

c_array[0] = "..\\..\\omnistuff\\data\\haarcascades\\haarcascade_upperbody.xml";

c_array[1] = "..\\..\\omnistuff\\data\\haarcascades\\haarcascade_frontalface_default.xml";

#else

c_array[0] = "../../omnistuff/data/haarcascades/haarcascade_upperbody.xml";

c_array[1] = "../../omnistuff/data/haarcascades/haarcascade_frontalface_default.xml";

#endif

// Our OmniCtrl class.

OmniLoopCtrl *ctrl;

bool ctrl_initialized = false;

// one second of timeout

timer.Start(1000);

IplImage *frame;

// init capturing

CvCapture* capture = 0;

capture = cvCaptureFromAVI( filename );

while ( 1 ) {

frame = cvQueryFrame( capture );

if( !frame ) {

printf( "Some error occurred or end of video reached\n");

break;

}

if ( !ctrl_initialized ) {

ctrl = new OmniLoopCtrl( frame,

1/(double)RADIUS_MAX,

RADIUS_MIN,

RADIUS_MAX,

CENTER_X,

CENTER_Y,

c_array,

c_array_length,

FREEZE_FRAME_BOUND,

TRACK_WINDOW_HITRATE );

ctrl_initialized = true;

}

ctrl->loop_next_frame( frame );

timer.frame_per_second++;

}

cvReleaseCapture( &capture );

timer.Stop();

if ( ctrl_initialized ) {

ctrl->loop_stop();

delete ctrl;

}

return 0;

}

vrfilter_test test code:

int main( int argc, char** argv )

{

//time for fps

double now_time, end_time ;

char fps_buff [20];

CvPoint fps_point = cvPoint(10, 450);

CvFont font;

cvInitFont( &font, CV_FONT_HERSHEY_SIMPLEX, 0.6f, 0.6f, 0.0f, 2, CV_AA);

CvVideoWriter *avi_writer = NULL;

#ifdef USE_GRAPHIC_CARD

OmniConversion<OmniGCardConverter, double, IplImage, CvPoint> *conv_gcard;

OmniAlgoVRFilterDetection<OmniGCardConverter, double, IplImage, CvPoint> *ctrl_gcard;

#else

OmniConversion<OmniFastLookupTable, int, IplImage, CvPoint> *conv_lu;

OmniAlgoVRFilterDetection<OmniFastLookupTable, int, IplImage, CvPoint> *ctrl_lu;

#endif

/* ok, hardcode them for now... */

int min_radius = 55;

int max_radius = 220;

int omni_center_x = 320;

int omni_center_y = 255;

CvCapture* capture = 0;

const char *filename = NULL;

cvNamedWindow( "face_window", 1 );

if ( argc != 2 )

filename =

#ifdef WIN32

"..\\..\\data\\videos\\video_test-3-xvid.avi";

#else

"../../data/videos/video_test-3-xvid.avi";

#endif

else

filename = argv[1];

#ifdef SHOW_FPS_ON_IMAGE

int fps = 0;

int rate = 0;

#endif

capture = cvCaptureFromAVI( filename );

printf("Using %s as omnidirectional video...\n", filename);

if( !capture )

{

fprintf(stderr,"Could not initialize capturing...\n");

return -1;

}

now_time = (double) clock ();

end_time = (double) clock () + (1 * CLOCKS_PER_SEC);

#ifdef SHOW_FPS_ON_IMAGE

// fps is only declared when SHOW_FPS_ON_IMAGE is defined

sprintf(fps_buff, " %d FPS", fps);

#endif

bool initialized = false;

while ( 1 )

{

IplImage* frame = 0;

frame = cvQueryFrame( capture );

if( !frame )

break;

if ( !initialized ) {

#ifdef USE_GRAPHIC_CARD

conv_gcard = new OmniConversion<OmniGCardConverter, double, IplImage, CvPoint>(

new OmniGCardConverter(min_radius,

max_radius,

omni_center_x,

omni_center_y,

640,

480,

"omnigcardo",

"../../omnistuff/data/shaders/vertex_shader.cg",

"vertex_shader_main",

"../../omnistuff/data/shaders/pixel_shader.cg",

"pixel_shader_main",

true));

ctrl_gcard = new OmniAlgoVRFilterDetection<OmniGCardConverter, double, IplImage, CvPoint>( conv_gcard,

frame );

#else

conv_lu = new OmniConversion<OmniFastLookupTable, int, IplImage, CvPoint>(

new OmniFastLookupTable(min_radius,

max_radius,

omni_center_x,

omni_center_y,

0.0,

true ));

ctrl_lu = new OmniAlgoVRFilterDetection<OmniFastLookupTable, int, IplImage, CvPoint>( conv_lu,

frame );

#endif /* USE_GRAPHIC_CARD */

#ifdef SAVE_PROCESSED_VIDEO

// FIXME: change that -1 value to a fourcc value to work with linux

avi_writer = cvCreateAVIWriter( PROCESSED_VIDEO_PATH, CV_FOURCC('X', 'V', 'I', 'D') , PROCESSED_VIDEO_FPS,

cvSize(frame->width, frame->height) );

#endif /* SAVE_PROCESSED_VIDEO */

initialized = true;

}

#ifdef USE_GRAPHIC_CARD

ctrl_gcard->loop_next_frame( frame );

#else

ctrl_lu->loop_next_frame( frame );

#endif

#ifdef SHOW_FPS_ON_IMAGE

now_time = (double) clock ();

if (end_time - now_time > DBL_EPSILON){

fps++;

}

else{

sprintf(fps_buff, " %d FPS", fps);

end_time = (double) clock () + (1 * CLOCKS_PER_SEC);

fps = 0;

}

cvPutText( frame, fps_buff , fps_point, &font, CV_RGB(0,255,0) );

rate++;

#endif /* SHOW_FPS_ON_IMAGE */

cvShowImage( "face_window", frame);

#ifdef SAVE_PROCESSED_VIDEO

cvWriteToAVI (avi_writer, frame);

#endif /* SAVE_PROCESSED_VIDEO */

cvWaitKey( WAIT_KEY_VALUE );

}

cvReleaseCapture( &capture );

#ifdef SAVE_PROCESSED_VIDEO

if (avi_writer != NULL)

cvReleaseVideoWriter(&avi_writer);

#endif /* SAVE_PROCESSED_VIDEO */

}
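
The FPS display in the loop above counts frames inside a one-second window measured with `clock()`, which on many platforms reports processor time rather than wall-clock time. As a hedged alternative sketch, the counter below uses `std::chrono::steady_clock` and takes the timestamp as an argument so the logic can be exercised without waiting. `FpsCounter` is a hypothetical class, not part of Omnimeeting, and unlike the original loop it counts the frame that closes a window toward the next window.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical frames-per-second counter using a one-second window.
class FpsCounter {
public:
    typedef std::chrono::steady_clock Clock;

    explicit FpsCounter(Clock::time_point start)
        : window_start_(start), frames_(0), last_fps_(0) {}

    // Record one frame seen at `now` and return the frame count of the
    // most recently completed one-second window (0 until one completes).
    int on_frame(Clock::time_point now) {
        assert(now >= window_start_);  // a steady clock never goes backwards
        if (now - window_start_ >= std::chrono::seconds(1)) {
            last_fps_ = frames_;       // publish the finished window
            frames_ = 0;
            window_start_ = now;       // start a new window at this frame
        }
        ++frames_;
        return last_fps_;
    }

private:
    Clock::time_point window_start_;
    int frames_;
    int last_fps_;
};
```

In a capture loop, one would construct it with `FpsCounter::Clock::now()` before the loop and call `on_frame(FpsCounter::Clock::now())` once per frame, formatting the returned value into the overlay text.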

The goal of learning is maturity!