Architecture enabling business (LAAAM)

It’s pronounced like “lamb”, not like “lame”

With that out of the way, it’s time for an update on the Lightweight Architecture Alternative Assessment Method (if you’re judging – negatively – the quality of the acronym, you wouldn’t be the first), which I first blogged about four years and three jobs ago. Since then, LAAAM has undergone some refinement, shaped by collaboration and by experience using it at Fidelity and VistaPrint. This week is Microsoft TechEd, and I’m giving a presentation on LAAAM (ARC314) this Tuesday at 10:15 AM. I’m also doing a Tech Talk Interview with Bob Familiar on Thursday.

Why do LAAAM?

The first question should actually be: “why evaluate architectures?” Generally speaking, analyzing architectures gives us an opportunity to make good decisions early in the software development lifecycle, reducing risk and improving the quality of what we deliver. This benefit is possible because architectural decisions – the earliest decisions in a system’s life – are the primary determiners of quality: performance, scalability, reliability, flexibility, and so forth. Unfortunately, the state of the practice in making architectural decisions is that the most senior, loudest, or most patient debater wins. Sometimes this produces good decisions, but the process isn’t very predictable or repeatable, and we don’t usually end up with a clear, documented rationale.

LAAAM’s first, and perhaps most important, contribution to the software development lifecycle is its focus on defining what quality means for a given system or product. The “–ilities” are commonly used, coarsely, to talk about architectural quality, and they usually give us a nice warm-and-fuzzy feeling; who wouldn’t want a system that exhibits scalability and reliability and flexibility and maintainability? But on their own they’re too coarse to decide with: we can’t assess whether one alternative is more “flexible” than another until we say precisely what flexibility means for this system.

The second important contribution of LAAAM is the way it brings together stakeholders – i.e. anyone who cares about the outcome of a decision – from the business, the development team, operations, QA, etc. LAAAM establishes a common language and decision framework, and it helps build consensus. That doesn’t mean everyone has to agree on everything, but it does mean that everyone’s perspective is on the table and the final result represents a fusion of those perspectives into a rational whole.

Finally, LAAAM produces a set of artifacts that represent a rigorous, rational decision-making process. These artifacts are extremely valuable in justifying architectural decisions; yes, ideally all of the stakeholders in those decisions would participate in the process as described above, but obviously that’s not always possible. So the LAAAM artifacts give us a way to express the reasoning that went into a given decision, with a foundation in the definition of quality for a system.

Quality

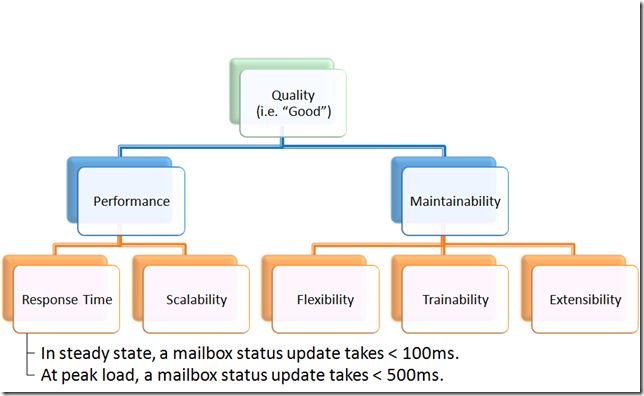

The first, and most important, step in LAAAM is to figure out what quality means for a given decision. In other words, when all is said and done, and we look back on the decision, what’s going to make us say “that was the right decision”? The –ilities give us a starting point, but they’re not enough. LAAAM takes a page from the ATAM playbook here, and has us build a quality tree (ATAM calls it a utility tree, and LAAAM used to, but the term confused people) to structure our thinking. This is easiest to explain by example:

Here, performance (an honorary –ility) and maintainability are the two important quality attributes for whatever decision we’re trying to make (yes, there are always more than two in the real world). Performance has been refined into two sub-qualities: response time and scalability. You get the idea.

Scenarios

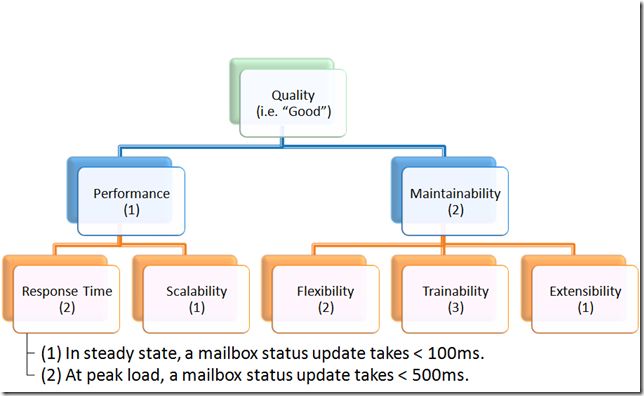

Now the punch line. LAAAM doesn’t let us stop here, but rather forces another level in the tree: scenarios. Scenarios (also borrowed from ATAM) give us the mechanism to be precise in defining quality, by ensuring that each of our quality attributes is refined to the point of measurability. In other words, we can look at the alternatives we’re considering and assess how well each one achieves a scenario. You can’t do this when you stop at “flexibility” or “scalability”. Scenarios have a consistent structure: a context, a stimulus and a response. The context is the state of the environment when the scenario is applied, the stimulus is something that happens to the system, and the response is how we want the system to react. Again, by example:

A couple more:

- The system network is partitioned and restored to normal condition; complete database resynchronization occurs within 30 minutes.

- For a new release, integrate a new component implementation within two days.

The context/stimulus/response identification is left as an exercise (hopefully trivial) for the reader. But note that the last example is different from the first two: it doesn’t apply at run-time, but rather at development time.
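To make the three-part structure concrete, here is a minimal Python sketch of a scenario record. The `Scenario` class and the particular split of the development-time scenario into context, stimulus and response are illustrative assumptions, one possible reading rather than a canonical one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A quality scenario (hypothetical representation for illustration)."""
    context: str   # state of the environment when the scenario applies
    stimulus: str  # something that happens to the system
    response: str  # how we want the system to react; should be measurable


# One possible split of the development-time scenario above (an assumption).
integrate_component = Scenario(
    context="preparing a new release",
    stimulus="a new component implementation must be integrated",
    response="integration is complete within two days",
)

print(integrate_component.response)
```

The measurable response (“within two days”) is what lets us later ask how well each alternative achieves the scenario.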

(Sidebar: while I’ve presented this as a top-down exercise – first level quality attributes, second level quality attributes, scenarios – it’s not always done this way; in fact, it’s probably rare. More often, the quality definition comes cyclically – you start with a few first level quality attributes that you think are important, someone throws out a scenario or two, you add some second level quality attributes to group them, and so on. LAAAM doesn’t insist on any specific approach to building the quality tree, so long as it gets done.)

Now, let’s put some scenarios into our example quality tree:

Great, I want it all! But wait …

Knowing What’s Important

Unfortunately, quality attributes trade off against one another. Sometimes you have to choose to achieve one over another, and it’d be nice to know in advance which is the right one. In the most general case, we could try to weight all of our scenarios directly, but this is really hard, especially when trying to get people with competing concerns to agree. Instead, LAAAM ranks the children at each node in the quality tree, like so:

So performance is more important than maintainability, scalability is more important than response time, and the steady state scenario is more important than the peak load scenario. Easy, right? Everyone’s happy to agree? Not always, but at least we have a series of much more constrained problems to solve, rather than one big problem. Sometimes you have to resort to tricks like voting to reach closure, but in my experience that’s pretty rare.

Scenario Weights

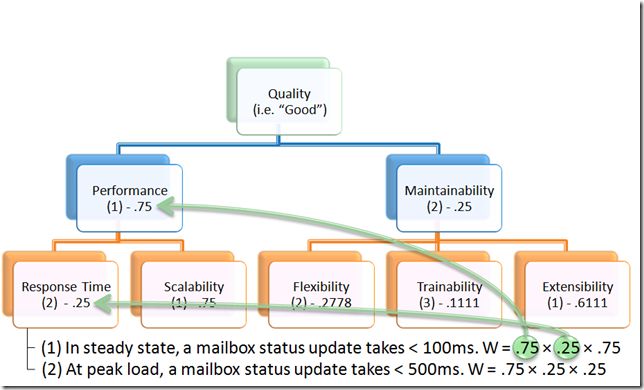

The next step is completely mechanical. Using a formula called “rank order centroids” (ROC), we compute a weight for each scenario. I came across ROC in an MSDN Magazine Test Run article called “Competitive Analysis Using MAGIQ”, but it originated with Barron and Barrett’s 1996 paper “Decision Quality Using Ranked Attribute Weights”. Anyhow, ROC computes a weight for a given ranking like this:
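In standard notation, the rank order centroid weight for the option ranked k among N options is the average of the reciprocals 1/i for i running from k to N (the summation index i is introduced here for the formula):

$$
w_k = \frac{1}{N} \sum_{i=k}^{N} \frac{1}{i}
$$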

Where N is the number of options and k is the rank. So above, flexibility was ranked 2 out of 3 options:
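Plugging N = 3 into the rank order centroid formula gives the weights for all three ranks; flexibility, at rank 2, gets the middle one:

$$
\begin{aligned}
w_1 &= \tfrac{1}{3}\left(1 + \tfrac{1}{2} + \tfrac{1}{3}\right) = \tfrac{11}{18} \approx 0.611 \\
w_2 &= \tfrac{1}{3}\left(\tfrac{1}{2} + \tfrac{1}{3}\right) = \tfrac{5}{18} \approx 0.278 \\
w_3 &= \tfrac{1}{3}\cdot\tfrac{1}{3} = \tfrac{1}{9} \approx 0.111
\end{aligned}
$$

The three weights sum to 1, and rank 1 gets more than twice the weight of rank 2.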

If you’re thinking “wow, this really favors the highly ranked options”, you’re right – that’s the point. The idea is that this is, psychologically speaking, how people actually apply importance to rankings. There’s a lot of other interesting work in this area; for example, see “Attribute Weighting Methods and Decision Quality in the Presence of Response Error: A Simulation Study”.


LAAAM isn’t wedded to rank order centroids; sometimes we use linear, or even manual (danger, Will Robinson), weightings at a node in the quality tree when the stakeholders decide that’s more reflective of the actual relative importance of its sub-qualities or scenarios. Rank order centroids have proven pretty effective, though, so ROC is the default. Whichever approach you use to determine the weight for a given ranking, the last step is to multiply the weights along each path through the quality tree to come up with the scenario weights:

So the steady state scenario has a total weight of .140625 and the peak load scenario has a total weight of .046875. Nothin’ to it.
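The weighting and path-product steps can be sketched in a few lines of Python. This is a minimal sketch, not the QODA tool or the spreadsheet: the nested-list tree representation, the function names `roc_weights` and `scenario_weights`, and the example tree (including scenarios A through E) are all hypothetical, and ROC is used at every node.

```python
from fractions import Fraction


def roc_weights(n: int) -> list[Fraction]:
    """Rank order centroid weights for n ranked options (index 0 holds rank 1)."""
    return [sum(Fraction(1, i) for i in range(k, n + 1)) / n for k in range(1, n + 1)]


def scenario_weights(children, path_weight=Fraction(1)) -> dict[str, Fraction]:
    """Multiply ROC weights along each path from the root down to a scenario.

    `children` is a list of (name, subtree) pairs in rank order, most important
    first; a node with an empty subtree is treated as a scenario (a leaf).
    """
    weights = {}
    for (name, subtree), w in zip(children, roc_weights(len(children))):
        if subtree:
            weights.update(scenario_weights(subtree, path_weight * w))
        else:
            weights[name] = path_weight * w
    return weights


# Hypothetical quality tree; names and rankings are illustrative only.
quality_tree = [
    ("performance", [
        ("scalability", [("scenario A", []), ("scenario B", [])]),
        ("response time", [("scenario C", [])]),
    ]),
    ("reliability", [("scenario D", [])]),
    ("maintainability", [("scenario E", [])]),
]

weights = scenario_weights(quality_tree)
for name, w in weights.items():
    print(f"{name}: {float(w):.4f}")
print(sum(weights.values()))  # prints 1: the weights at every node sum to one
```

Using `Fraction` keeps the weights exact, which makes it easy to check that the scenario weights always add up to 1.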

Alternatives

Excellent, now we’ll know what we like when we see it. The next step is to figure out what the choices are: what are the alternative decisions, solutions, platforms, whatever, that we’re trying to choose among? But first, note that all of the work we’ve done up to this point (i.e. creating a fully ranked quality tree with scenarios) is completely independent of the alternatives we’re choosing among. This makes it much more persistent through time, even able to outlive a single instantiation of a system.

Generally, compared to defining quality, it’s pretty easy to identify our alternatives; usually the participants in the process have a pretty good idea about the options. .NET or Java or Ruby? On-premise or in the cloud? Hibernate or iBATIS? Entity Framework or LINQ to SQL or NHibernate? SharePoint or Documentum? The common challenge at this point isn’t in identifying the alternatives, but in defining them precisely enough that we can assess them. Sometimes this means we have to do more investigative work during the LAAAM process, but the scenarios guide us to where this investigation is required.

Assessment

Finally, we get to the third A in LAAAM – Assessment. Somewhat counter-intuitively, this part is actually the easiest. All we need to do is fill in this table:

| Scenario | Alternative 1 | Alternative 2 | Alternative 3 |
|---|---|---|---|
| Scenario 1 | | | |
| Scenario 2 | | How well does alternative 2 achieve scenario 2? | |
| … | | | |

Each cell will contain a very simple assessment measure: usually we use a scale like poor, fair, adequate, good, excellent. This part of the process is done collaboratively with the subject matter experts for each of the alternatives, plus whatever additional supporting players are required. Comparing alternatives is fine; in fact, it commonly makes the process go very quickly when you can establish a firm baseline (usually with the alternative that’s best understood) and ask questions like “is this solution better or worse?”

Now turn the ratings into numeric values (the exact values don’t matter, as long as they’re evenly spaced), multiply each by its scenario’s weight, and add up the products for each alternative. That’s it (d’oh, I just realized the math in my TechEd deck is wrong):

| Scenario | Weight | Alternative 1 | Alternative 2 | Alternative 3 |
|---|---|---|---|---|
| Scenario 1 | .140625 | Poor (0) | Fair (1) | Excellent (4) |
| Scenario 2 | .046875 | Good (3) | Adequate (2) | Fair (1) |
| Total | | .140625 | .234375 | .609375 |
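The scoring step is a weighted sum per alternative. This minimal Python sketch reproduces the totals in the table; the dictionary layout and the `total_score` function name are illustrative assumptions, and the 0 to 4 mapping is the one the table uses.

```python
# Numeric values for the five-point scale; any evenly spaced values would do.
RATING = {"poor": 0, "fair": 1, "adequate": 2, "good": 3, "excellent": 4}

# Scenario weights and ratings taken from the table above.
weights = {"scenario 1": 0.140625, "scenario 2": 0.046875}
ratings = {
    "alternative 1": {"scenario 1": "poor", "scenario 2": "good"},
    "alternative 2": {"scenario 1": "fair", "scenario 2": "adequate"},
    "alternative 3": {"scenario 1": "excellent", "scenario 2": "fair"},
}


def total_score(alt_ratings: dict[str, str], weights: dict[str, float]) -> float:
    """Weighted sum of one alternative's numeric ratings across all scenarios."""
    return sum(weights[s] * RATING[r] for s, r in alt_ratings.items())


totals = {alt: total_score(r, weights) for alt, r in ratings.items()}
for alt, total in totals.items():
    print(f"{alt}: {total:.6f}")
```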

(Footnote: if you read my earlier blog on LAAAM, you’ll notice that each cell actually contained three assessment “dimensions”: fit, development cost and operations cost. Repeated application of the method made it clear that cost is almost always smeared across the scenarios in a way that makes asking about it in each cell very difficult. Effectively incorporating cost back into the method is an important subject for future work, but it hasn’t inhibited adoption yet.)

Final Analysis

Unfortunately, we don’t get to simply declare that Alternative 3 is the winner, even though it had the highest score. We do have an ordering of our alternatives, and we can get an idea about their relative adequacy. To be thorough with the method, though, we need to ask a question about sensitivity: which scenarios are heavily weighted and exhibit significant differentiation among the alternatives? These are the important ones, where we want to be sure about our assessment. A second pass to validate sensitive scenarios is a good follow-up.
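One simple way to pick scenarios for that second pass is to score each one as its weight times the spread between the best and worst rating across alternatives. This measure and the `sensitivity` function are an illustrative heuristic, not something the method prescribes; the numbers are the ones from the scored table.

```python
# Scenario weights and numeric ratings (alternatives 1, 2, 3) from the scored table.
weights = {"scenario 1": 0.140625, "scenario 2": 0.046875}
scores = {
    "scenario 1": [0, 1, 4],
    "scenario 2": [3, 2, 1],
}


def sensitivity(weight: float, ratings: list[int]) -> float:
    """Illustrative heuristic: high weight times wide differentiation among alternatives."""
    return weight * (max(ratings) - min(ratings))


# Most sensitive scenarios first: these are the ones worth validating again.
ranked = sorted(scores, key=lambda s: sensitivity(weights[s], scores[s]), reverse=True)
print(ranked)
```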

Also, in cases where a narrow margin exists between highly-rated alternatives (e.g. when they’re within 20%), one approach to refining the analysis is to add scenarios to further differentiate the alternatives. One way to do this is to decompose sensitive scenarios into sub-scenarios to probe related concerns. Sometimes it’s necessary to refine the definition of one of the alternatives and iterate on the analysis; such refinement may require, for example, a proof-of-concept implementation.
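A narrow-margin check is easy to automate. This sketch assumes the 20% is measured relative to the leading alternative’s score; the `within_margin` function name and the second set of totals are hypothetical.

```python
def within_margin(totals: dict[str, float], margin: float = 0.20) -> bool:
    """True if the runner-up scores within `margin` of the leader.

    Assumption: the margin is measured relative to the leader's score.
    """
    if len(totals) < 2:
        raise ValueError("need at least two alternatives to compare")
    best, runner_up = sorted(totals.values(), reverse=True)[:2]
    return best - runner_up <= margin * best


# Totals from the scored table: a clear leader, so no refinement is triggered.
print(within_margin({"alternative 1": 0.140625, "alternative 2": 0.234375, "alternative 3": 0.609375}))
# A hypothetical close call that would warrant more scenarios or a proof of concept.
print(within_margin({"alternative X": 0.50, "alternative Y": 0.45}))
```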

Tool

At TechEd I showed a demo of the Quality-Oriented Decision Assessment (QODA) tool, developed by Gary Chamberlain, the lead for Platform Architecture here at VistaPrint. Although it’s pretty new, there’s already plenty of evidence that adoption of the method (which we’re now calling QODA inside VistaPrint) is substantially assisted with this tool support. Previously the only “support” was an Excel spreadsheet, which was painful to work with. The tool isn’t currently generally available, but we’re hoping to make it so sometime soon. Stay tuned.

From: http://technogility.sjcarriere.com/2009/05/11/its-pronounced-like-lamb-not-like-lame/