Camera Display: Adapting the HAL Layer (Part 2)

Continuing from the previous article:

Camera Display: Adapting the HAL Layer (Part 1)

I. Basic relationships

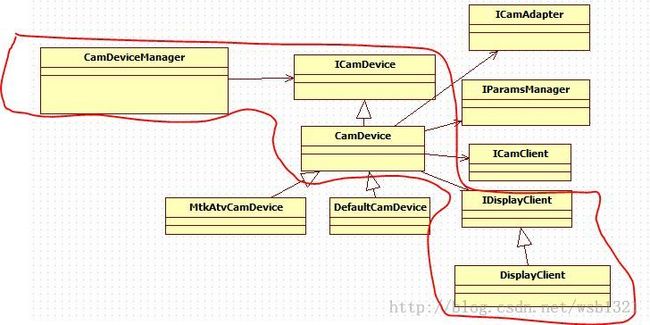

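1. First, a look at the rough class diagram of the MTK HAL layer:

That is roughly how the classes relate; the display-related classes are in the area circled in red.

From this we can guess that the display-related logic is implemented mainly in the DisplayClient class.

From here on, any preview/display command the app issues travels through the HAL along essentially this one path. Figure 1 also shows that CamDevice has several derived classes; these are MTK customizations for different devices, but the main path is still the one shown in the figure.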

II. Picking up the earlier code in CameraClient:

```cpp
//!++
else if ( window == 0 ) {
    result = mHardware->setPreviewWindow(window);
}
```

1. setPreviewWindow(window) is adapted by CameraHardwareInterface, which calls:

```cpp
mDevice->ops->set_preview_window(mDevice,
        buf.get() ? &mHalPreviewWindow.nw : 0);
```

This is how the command and its argument are passed down to the HAL.

Note that what is passed in is mHalPreviewWindow.nw, not the ANativeWindow discussed earlier. The reason is that mHalPreviewWindow.nw wraps the ANativeWindow's stream operations, which makes them simpler to use.

The definition behind mHalPreviewWindow.nw:

```cpp
struct camera_preview_window {
    struct preview_stream_ops nw;
    void *user;
};
```

nw itself is a struct preview_stream_ops:

```cpp
typedef struct preview_stream_ops {
    int (*dequeue_buffer)(struct preview_stream_ops* w,
                          buffer_handle_t** buffer, int *stride);
    int (*enqueue_buffer)(struct preview_stream_ops* w,
                          buffer_handle_t* buffer);
    int (*cancel_buffer)(struct preview_stream_ops* w,
                         buffer_handle_t* buffer);
    int (*set_buffer_count)(struct preview_stream_ops* w, int count);
    int (*set_buffers_geometry)(struct preview_stream_ops* pw,
                                int w, int h, int format);
    int (*set_crop)(struct preview_stream_ops *w,
                    int left, int top, int right, int bottom);
    int (*set_usage)(struct preview_stream_ops* w, int usage);
    int (*set_swap_interval)(struct preview_stream_ops *w, int interval);
    int (*get_min_undequeued_buffer_count)(const struct preview_stream_ops *w,
                                           int *count);
    int (*lock_buffer)(struct preview_stream_ops* w,
                       buffer_handle_t* buffer);
    // Timestamps are measured in nanoseconds, and must be comparable
    // and monotonically increasing between two frames in the same
    // preview stream. They do not need to be comparable between
    // consecutive or parallel preview streams, cameras, or app runs.
    int (*set_timestamp)(struct preview_stream_ops *w, int64_t timestamp);
} preview_stream_ops_t;
```

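All operations on the display stream go through these function pointers. mHalPreviewWindow provides the concrete implementations, and those implementations operate on the ANativeWindow. On the HAL side, every operation on the ANativeWindow is therefore performed through mHalPreviewWindow.nw.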
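To make the wrapping concrete, here is a minimal sketch of the same trick in plain C++. It assumes, as the camera_preview_window definition suggests, that the HAL only ever sees a pointer to the nw member, and that the framework recovers its own data by casting that pointer back to the enclosing struct and reading user (AOSP's CameraHardwareInterface does something along these lines, but check your own source tree). All names here (stream_ops, preview_window, FakeNativeWindow, enqueue_trampoline, halDisplayFrame) are hypothetical, and the function table is cut down to a single entry.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for ANativeWindow: just records queued buffer ids.
struct FakeNativeWindow {
    std::vector<int> queued;
};

// Simplified function table modeled on preview_stream_ops (one entry only).
struct stream_ops {
    int (*enqueue_buffer)(stream_ops* w, int buffer);
};

// Mirrors camera_preview_window: the ops table is the FIRST member,
// followed by a pointer back to the framework-side object.
struct preview_window {
    stream_ops nw;
    void* user;
};

// Recover the wrapped window from the ops pointer the HAL hands back.
static FakeNativeWindow* toWindow(stream_ops* w) {
    // nw is the first member of a standard-layout struct, so the address
    // of nw is also the address of the enclosing preview_window.
    preview_window* pw = reinterpret_cast<preview_window*>(w);
    return static_cast<FakeNativeWindow*>(pw->user);
}

// Framework-side implementation behind the function pointer.
static int enqueue_trampoline(stream_ops* w, int buffer) {
    toWindow(w)->queued.push_back(buffer);
    return 0;
}

// The "HAL side" only ever sees stream_ops*, never the window type.
int halDisplayFrame(stream_ops* ops, int buffer) {
    return ops->enqueue_buffer(ops, buffer);
}
```

The cast back works only because nw is the first member of a standard-layout struct, so its address equals the address of the whole wrapper; that is why nw has to stay the first field.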

Basic class relationships:

2. Continuing with the call from step 1:

```cpp
mDevice->ops->set_preview_window(mDevice,
        buf.get() ? &mHalPreviewWindow.nw : 0);
```

We now know that the key argument passed in, mHalPreviewWindow.nw, is a preview_stream_ops.

Next, let's look at set_preview_window itself. From the previous article we know that ops points to the ICamDevice member mDevOps, a copy of gCameraDevOps, whose type is camera_device_ops_t:

```cpp
static camera_device_ops_t const gCameraDevOps = {
    set_preview_window:         camera_set_preview_window,
    set_callbacks:              camera_set_callbacks,
    enable_msg_type:            camera_enable_msg_type,
    disable_msg_type:           camera_disable_msg_type,
    msg_type_enabled:           camera_msg_type_enabled,
    start_preview:              camera_start_preview,
    stop_preview:               camera_stop_preview,
    preview_enabled:            camera_preview_enabled,
    store_meta_data_in_buffers: camera_store_meta_data_in_buffers,
    start_recording:            camera_start_recording,
    stop_recording:             camera_stop_recording,
    recording_enabled:          camera_recording_enabled,
    release_recording_frame:    camera_release_recording_frame,
    auto_focus:                 camera_auto_focus,
    cancel_auto_focus:          camera_cancel_auto_focus,
    take_picture:               camera_take_picture,
    cancel_picture:             camera_cancel_picture,
    set_parameters:             camera_set_parameters,
    get_parameters:             camera_get_parameters,
    put_parameters:             camera_put_parameters,
    send_command:               camera_send_command,
    release:                    camera_release,
    dump:                       camera_dump,
};
```

gCameraDevOps maps each entry to a function implemented in ICamDevice.

So ops->set_preview_window(mDevice, buf.get() ? &mHalPreviewWindow.nw : 0) becomes a call to ICamDevice's camera_set_preview_window:

```cpp
static int camera_set_preview_window(
    struct camera_device * device,
    struct preview_stream_ops *window
)
{
    int err = -EINVAL;
    //
    ICamDevice*const pDev = ICamDevice::getIDev(device);
    if ( pDev )
    {
        err = pDev->setPreviewWindow(window);
    }
    //
    return err;
}

static inline ICamDevice* getIDev(camera_device*const device)
{
    return (NULL == device)
        ? NULL
        : reinterpret_cast<ICamDevice*>(device->priv);  // returns device->priv
}
```

As shown in the previous article, device->priv is set to point to the object itself when the instance is constructed:

```cpp
ICamDevice::
ICamDevice()
    : camera_device_t()
    , RefBase()
    , mDevOps()
    //
    , mMtxLock()
    //
{
    MY_LOGD("ctor");
    ::memset(static_cast<camera_device_t*>(this), 0, sizeof(camera_device_t));
    this->priv = this;          // priv stores a pointer to the object itself
    this->ops = &mDevOps;       // ops points to mDevOps
    mDevOps = gCameraDevOps;    // mDevOps is a copy of the gCameraDevOps table
}
```
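The dispatch chain described so far (C function table, static trampoline, priv, virtual method) can be sketched in a few lines. This is a simplified illustration, not MTK's code: c_device, device_ops, IDev, Dev, dev_set_preview_window and gDevOps are hypothetical stand-ins for camera_device_t, camera_device_ops_t, ICamDevice, CamDevice, camera_set_preview_window and gCameraDevOps, each reduced to what this one call needs.

```cpp
#include <cassert>
#include <cerrno>

struct device_ops;  // forward declaration

// Simplified C-style device struct, modeled on camera_device_t.
struct c_device {
    device_ops* ops;
    void* priv;
};

// Simplified ops table, modeled on camera_device_ops_t (one entry only).
struct device_ops {
    int (*set_preview_window)(c_device* dev, void* window);
};

class IDev : public c_device {
public:
    IDev();
    virtual ~IDev() = default;
    virtual int setPreviewWindow(void* window) = 0;  // provided by a subclass

    // Like getIDev(): recover the C++ object from the C struct via priv.
    static IDev* getIDev(c_device* dev) {
        return dev == nullptr ? nullptr : reinterpret_cast<IDev*>(dev->priv);
    }

private:
    device_ops mDevOps;  // per-instance copy of the shared table
};

// C-callable trampoline: find the object, then dispatch virtually.
static int dev_set_preview_window(c_device* dev, void* window) {
    IDev* p = IDev::getIDev(dev);
    return p ? p->setPreviewWindow(window) : -EINVAL;
}

static const device_ops gDevOps = { dev_set_preview_window };

IDev::IDev() : c_device(), mDevOps(gDevOps) {
    this->priv = this;     // priv stores the object itself
    this->ops = &mDevOps;  // ops points at this instance's table
}

// Concrete device, playing the role of CamDevice.
class Dev : public IDev {
public:
    void* lastWindow = nullptr;
    int setPreviewWindow(void* window) override {
        lastWindow = window;
        return 0;
    }
};
```

Keeping the object pointer in priv means the trampoline never has to assume where the C struct sits inside the C++ object; once it has the IDev*, the virtual call lands in the subclass, just as setPreviewWindow lands in CamDevice.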

Back to pDev->setPreviewWindow(window).

ICamDevice does not implement setPreviewWindow itself; its subclass CamDevice provides the concrete implementation.

So the code leads us to CamDevice:

```cpp
status_t
CamDevice::
setPreviewWindow(preview_stream_ops* window)
{
    MY_LOGI("+ window(%p)", window);
    //
    status_t status = initDisplayClient(window);  // initialize the DisplayClient
    if ( OK == status && previewEnabled() && mpDisplayClient != 0 )
    {
        status = enableDisplayClient();           // enable the DisplayClient
    }
    //
    return status;
}

status_t
CamDevice::
initDisplayClient(preview_stream_ops* window)
{
#if '1'!=MTKCAM_HAVE_DISPLAY_CLIENT
#warning "Not Build Display Client"
    MY_LOGD("Not Build Display Client");
    ..............
    .............
    // [3.1] create a Display Client.
    mpDisplayClient = IDisplayClient::createInstance();
    if ( mpDisplayClient == 0 )
    {
        MY_LOGE("Cannot create mpDisplayClient");
        status = NO_MEMORY;
        goto lbExit;
    }
    // [3.2] initialize the newly-created Display Client.
    if ( ! mpDisplayClient->init() )
    {
        MY_LOGE("mpDisplayClient init() failed");
        mpDisplayClient->uninit();
        mpDisplayClient.clear();
        status = NO_MEMORY;
        goto lbExit;
    }
    // [3.3] set preview_stream_ops & related window info.
    if ( ! mpDisplayClient->setWindow(window, previewSize.width, previewSize.height, queryDisplayBufCount()) )  // bind the window
    {
        status = INVALID_OPERATION;
        goto lbExit;
    }
    // [3.4] set Image Buffer Provider Client if it exist.
    if ( mpCamAdapter != 0 && ! mpDisplayClient->setImgBufProviderClient(mpCamAdapter) )  // important: set the provider of the stream buffers
    {
        status = INVALID_OPERATION;
        goto lbExit;
    }
    ..................
    ..................

status_t
CamDevice::
enableDisplayClient()
{
    status_t status = OK;
    Size previewSize;
    //
    // [1] Get preview size.
    if ( ! queryPreviewSize(previewSize.width, previewSize.height) )
    {
        MY_LOGE("queryPreviewSize");
        status = DEAD_OBJECT;
        goto lbExit;
    }
    //
    // [2] Enable
    if ( ! mpDisplayClient->enableDisplay(previewSize.width, previewSize.height, queryDisplayBufCount(), mpCamAdapter) )  // pass the preview size, buffer count and buffer provider
    {
        MY_LOGE("mpDisplayClient(%p)->enableDisplay()", mpDisplayClient.get());
        status = INVALID_OPERATION;
        goto lbExit;
    }
    //
    status = OK;
lbExit:
    return status;
}
```

3. Moving into DisplayClient:

```cpp
bool
DisplayClient::
enableDisplay(
    int32_t const i4Width,
    int32_t const i4Height,
    int32_t const i4BufCount,
    sp<IImgBufProviderClient>const& rpClient
)
{
    bool ret = false;
    preview_stream_ops* pStreamOps = mpStreamOps;
    //
    // [1] Re-configurate this instance if any setting changes.
    if ( ! checkConfig(i4Width, i4Height, i4BufCount, rpClient) )
    {
        MY_LOGW("<Config Change> Uninit the current DisplayClient(%p) and re-config...", this);
        //
        // [.1] uninitialize
        uninit();
        //
        // [.2] initialize
        if ( ! init() )
        {
            MY_LOGE("re-init() failed");
            goto lbExit;
        }
        //
        // [.3] set related window info.
        if ( ! setWindow(pStreamOps, i4Width, i4Height, i4BufCount) )  // window size matches the preview data size
        {
            goto lbExit;
        }
        //
        // [.4] set Image Buffer Provider Client.
        if ( ! setImgBufProviderClient(rpClient) )  // the provider is mpCamAdapter (the CamAdapter); all later preview data comes from it
        {
            goto lbExit;
        }
    }
    //
    // [2] Enable.
    if ( ! enableDisplay() )  // start fetching and displaying data
    {
        goto lbExit;
    }
    //
    ret = true;
lbExit:
    return ret;
}
```
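The reconfigure-on-change logic in enableDisplay() boils down to comparing the requested settings with the current ones and rebuilding only on a mismatch. A minimal sketch, assuming checkConfig() compares width, height, buffer count and provider (its body is not shown in the source); DisplayConfig and DisplaySketch are hypothetical names, and the counters exist only to make the behavior observable.

```cpp
#include <cassert>

// Hypothetical snapshot of the settings enableDisplay() checks.
struct DisplayConfig {
    int width = 0;
    int height = 0;
    int bufCount = 0;
    const void* provider = nullptr;  // stands in for the IImgBufProviderClient

    bool operator==(const DisplayConfig& o) const {
        return width == o.width && height == o.height &&
               bufCount == o.bufCount && provider == o.provider;
    }
};

class DisplaySketch {
public:
    int reinitCount = 0;
    bool enabled = false;

    // Same shape as enableDisplay(w, h, count, client):
    // tear down and rebuild only if the requested config differs.
    bool enableDisplay(const DisplayConfig& wanted) {
        if (!(wanted == mCurrent)) {  // checkConfig() failed
            uninit();
            init();
            mCurrent = wanted;        // setWindow() + setImgBufProviderClient()
        }
        enabled = true;               // the no-argument enableDisplay()
        return true;
    }

private:
    DisplayConfig mCurrent;
    void uninit() { enabled = false; }
    void init() { ++reinitCount; }
};
```

Because this sketch starts from an empty configuration, its first call also counts as a change. In the real flow, initDisplayClient() has typically already called setWindow() and setImgBufProviderClient() with the same preview size, buffer count and mpCamAdapter, so the check would normally pass.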

Let's start with the first key function, setWindow(pStreamOps, i4Width, i4Height, i4BufCount):

```cpp
bool
DisplayClient::
setWindow(
    preview_stream_ops*const window,
    int32_t const wndWidth,
    int32_t const wndHeight,
    int32_t const i4MaxImgBufCount
)
{
    MY_LOGI("+ window(%p), WxH=%dx%d, count(%d)", window, wndWidth, wndHeight, i4MaxImgBufCount);
    //
    if ( ! window )
    {
        MY_LOGE("NULL window passed into");
        return false;
    }
    //
    if ( 0 >= wndWidth || 0 >= wndHeight || 0 >= i4MaxImgBufCount )
    {
        MY_LOGE("bad arguments - WxH=%dx%d, count(%d)", wndWidth, wndHeight, i4MaxImgBufCount);
        return false;
    }
    //
    Mutex::Autolock _l(mModuleMtx);
    return set_preview_stream_ops(window, wndWidth, wndHeight, i4MaxImgBufCount);
}
```

```cpp
bool
DisplayClient::
set_preview_stream_ops(
    preview_stream_ops*const window,
    int32_t const wndWidth,
    int32_t const wndHeight,
    int32_t const i4MaxImgBufCount
)
{
    CamProfile profile(__FUNCTION__, "DisplayClient");
    //
    bool ret = false;
    status_t err = 0;
    int32_t min_undequeued_buf_count = 0;
    //
    // (2) Check
    if ( ! mStreamBufList.empty() )
    {
        MY_LOGE(
            "locked buffer count(%d)!=0, "
            "callers must return all dequeued buffers, "
            // "and then call cleanupQueue()"
            , mStreamBufList.size()
        );
        dumpDebug(mStreamBufList, __FUNCTION__);
        goto lbExit;
    }
    //
    // (3) Save info.
    mpStreamImgInfo.clear();  // mpStreamImgInfo holds the basic info of the video stream
    mpStreamImgInfo = new ImgInfo(wndWidth, wndHeight, CAMERA_DISPLAY_FORMAT, CAMERA_DISPLAY_FORMAT_HAL, "Camera@Display");  // stream width, height and display format
    mpStreamOps = window;     // pointer passed down from the upper layer; all later interaction with the display goes through it
    mi4MaxImgBufCount = i4MaxImgBufCount;
    ........................
    ........................
    err = mpStreamOps->set_buffer_count(mpStreamOps, mi4MaxImgBufCount+min_undequeued_buf_count);
    if ( err )
    {
        MY_LOGE("set_buffer_count failed: status[%s(%d)]", ::strerror(-err), -err);
        if ( ENODEV == err )
        {
            MY_LOGD("Preview surface abandoned!");
            mpStreamOps = NULL;
        }
        goto lbExit;
    }
    //
    // (4.4) Set window geometry
    err = mpStreamOps->set_buffers_geometry(  // set the basic stream geometry
        mpStreamOps,
        mpStreamImgInfo->mu4ImgWidth,
        mpStreamImgInfo->mu4ImgHeight,
        mpStreamImgInfo->mi4ImgFormat
    );
```

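From the snippet above we can confirm that the object pointer passed down from the upper layer is stored in mpStreamOps, and that all display-related interaction goes through mpStreamOps. mpStreamImgInfo holds the stream's size, format, and related information.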
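The lines before set_buffer_count() are elided, so the following is an assumption: a common way to obtain min_undequeued_buf_count is the get_min_undequeued_buffer_count entry of preview_stream_ops. The sketch shows why the window is given mi4MaxImgBufCount + min_undequeued_buf_count buffers: the consumer always keeps at least min_undequeued buffers to itself, so the window needs that many extra for the HAL to hold mi4MaxImgBufCount at once. Also note that the quoted code logs strerror(-err), which implies err is a negative errno; under that convention an abandoned surface would come back as -ENODEV, so the sketch tests for -ENODEV. fake_stream_ops, fake_get_min, fake_set_count and configureBufferCount are hypothetical names.

```cpp
#include <cassert>
#include <cerrno>

// Hypothetical cut-down table with only the entries used here.
struct fake_stream_ops {
    int (*get_min_undequeued_buffer_count)(const fake_stream_ops* w, int* count);
    int (*set_buffer_count)(fake_stream_ops* w, int count);
    int minUndequeued;  // what the "consumer" insists on keeping
    int bufferCount;    // last count accepted by the window
    bool abandoned;     // simulate a surface that went away
};

static int fake_get_min(const fake_stream_ops* w, int* count) {
    *count = w->minUndequeued;
    return 0;
}

static int fake_set_count(fake_stream_ops* w, int count) {
    if (w->abandoned) return -ENODEV;  // negative errno convention
    w->bufferCount = count;
    return 0;
}

// Returns the ops pointer to keep, or nullptr if the surface was abandoned.
fake_stream_ops* configureBufferCount(fake_stream_ops* ops, int maxImgBufCount, int* err) {
    int minUndequeued = 0;
    *err = ops->get_min_undequeued_buffer_count(ops, &minUndequeued);
    if (*err) return ops;
    // Buffers the HAL may hold at once, plus those the consumer keeps back.
    *err = ops->set_buffer_count(ops, maxImgBufCount + minUndequeued);
    if (*err == -ENODEV) return nullptr;  // "Preview surface abandoned!"
    return ops;
}
```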

Next, the second key function, setImgBufProviderClient(rpClient):

```cpp
bool
DisplayClient::
setImgBufProviderClient(sp<IImgBufProviderClient>const& rpClient)
{
    bool ret = false;
    //
    MY_LOGD("+ ImgBufProviderClient(%p), mpImgBufQueue.get(%p)", rpClient.get(), mpImgBufQueue.get());
    //
    if ( rpClient == 0 )
    {
        MY_LOGE("NULL ImgBufProviderClient");
        mpImgBufPvdrClient = NULL;
        goto lbExit;
    }
    //
    if ( mpImgBufQueue != 0 )
    {
        // Tell the provider (the side that supplies buffer data) that the
        // buffer queue is ready, so it can fill buffers in it for us to use.
        if ( ! rpClient->onImgBufProviderCreated(mpImgBufQueue) )
        {
            goto lbExit;
        }
        mpImgBufPvdrClient = rpClient;  // keep the provider pointer for later use
    }
    //
    ret = true;
lbExit:
    MY_LOGD("-");
    return ret;
};
```
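The handshake in setImgBufProviderClient() is a small consumer/provider pattern: the display side owns the queue, hands it to the provider through a callback, and keeps the provider only if the callback succeeds. A minimal sketch with hypothetical types (BufQueue, ProviderClient, ToyAdapter, DisplaySide); the real IImgBufQueue and IImgBufProviderClient interfaces are richer and are not reproduced here.

```cpp
#include <cassert>
#include <deque>
#include <memory>

// Hypothetical queue owned by the display (consumer) side.
struct BufQueue {
    std::deque<int> todo;  // empty buffers waiting to be filled
    std::deque<int> done;  // filled buffers waiting to be displayed
};

// Hypothetical provider interface, modeled on IImgBufProviderClient.
struct ProviderClient {
    virtual ~ProviderClient() = default;
    virtual bool onImgBufProviderCreated(std::shared_ptr<BufQueue> q) = 0;
};

// Toy provider: remembers the queue and "fills" one buffer on request.
struct ToyAdapter : ProviderClient {
    std::shared_ptr<BufQueue> queue;
    bool onImgBufProviderCreated(std::shared_ptr<BufQueue> q) override {
        queue = std::move(q);
        return true;
    }
    void produceOne() {
        if (!queue || queue->todo.empty()) return;
        queue->done.push_back(queue->todo.front());
        queue->todo.pop_front();
    }
};

// Consumer side, following the shape of setImgBufProviderClient().
struct DisplaySide {
    std::shared_ptr<BufQueue> queue = std::make_shared<BufQueue>();
    std::shared_ptr<ProviderClient> provider;

    bool setProvider(const std::shared_ptr<ProviderClient>& p) {
        if (!p) { provider.reset(); return false; }
        if (queue) {
            if (!p->onImgBufProviderCreated(queue)) return false;
            provider = p;  // keep the provider only after a successful handshake
        }
        return true;
    }
};
```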

And the third key function, enableDisplay():

```cpp
bool
DisplayClient::
enableDisplay()
{
    bool ret = false;
    //
    // (1) Lock
    Mutex::Autolock _l(mModuleMtx);
    //
    MY_LOGD("+ isDisplayEnabled(%d), mpDisplayThread.get(%p)", isDisplayEnabled(), mpDisplayThread.get());
    //
    // (2) Check to see if it has been enabled.
    if ( isDisplayEnabled() )
    {
        MY_LOGD("Display is already enabled");
        ret = true;
        goto lbExit;
    }
    //
    // (3) Check to see if thread is alive.
    if ( mpDisplayThread == 0 )
    {
        MY_LOGE("NULL mpDisplayThread");
        goto lbExit;
    }
    //
    // (4) Enable the flag.
    ::android_atomic_write(1, &mIsDisplayEnabled);
    //
    // (5) Post a command to wake up the thread.
    mpDisplayThread->postCommand(Command(Command::eID_WAKEUP));  // tell the data-fetching thread to start running
    //
    ret = true;
lbExit:
    MY_LOGD("- ret(%d)", ret);
    return ret;
}
```

The posted command is picked up in DisplayThread::threadLoop():

```cpp
bool
DisplayThread::
threadLoop()
{
    Command cmd;
    if ( getCommand(cmd) )
    {
        switch (cmd.eId)
        {
        case Command::eID_EXIT:
            MY_LOGD("Command::%s", cmd.name());
            break;
        //
        case Command::eID_WAKEUP:  // the command posted by enableDisplay()
        default:
            if ( mpThreadHandler != 0 )
            {
                // Note: mpThreadHandler is the DisplayClient itself
                // (it inherits IDisplayThreadHandler).
                mpThreadHandler->onThreadLoop(cmd);
            }
            else
            {
                MY_LOGE("cannot handle cmd(%s) due to mpThreadHandler==NULL", cmd.name());
            }
            break;
        }
    }
    //
    MY_LOGD("- mpThreadHandler.get(%p)", mpThreadHandler.get());
    return true;
}
```
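enableDisplay() and DisplayThread::threadLoop() together follow a common pattern: set an atomic flag, post a command, and let a worker blocked on a command queue wake up and act on it. Here is a minimal std::thread sketch of that pattern. CommandThread, Cmd and wakeupsHandled are hypothetical; the real DisplayThread is built on Android's Thread class, whose threadLoop() is called again as long as it returns true, which the for loop below emulates.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>

enum class Cmd { Wakeup, Exit };

// Command-driven worker in the spirit of DisplayThread.
class CommandThread {
public:
    std::atomic<int> displayEnabled{0};  // like mIsDisplayEnabled
    std::atomic<int> wakeupsHandled{0};  // observable effect for the sketch

    CommandThread() : mThread([this] { loop(); }) {}
    ~CommandThread() { stop(); }

    void postCommand(Cmd c) {
        {
            std::lock_guard<std::mutex> lk(mMtx);
            mQueue.push_back(c);
        }
        mCond.notify_one();
    }

    // Queue an Exit behind any pending commands and wait for the worker.
    void stop() {
        if (mThread.joinable()) {
            postCommand(Cmd::Exit);
            mThread.join();
        }
    }

private:
    // Blocks until a command is available, like getCommand().
    Cmd getCommand() {
        std::unique_lock<std::mutex> lk(mMtx);
        mCond.wait(lk, [this] { return !mQueue.empty(); });
        Cmd c = mQueue.front();
        mQueue.pop_front();
        return c;
    }

    void loop() {
        for (;;) {
            Cmd c = getCommand();
            if (c == Cmd::Exit) return;
            // Wakeup: this is where DisplayClient::onThreadLoop() would run.
            if (displayEnabled.load()) ++wakeupsHandled;
        }
    }

    std::mutex mMtx;
    std::condition_variable mCond;
    std::deque<Cmd> mQueue;
    std::thread mThread;  // declared last so the members above exist when it starts
};
```

Usage mirrors enableDisplay(): store 1 into displayEnabled, then postCommand(Cmd::Wakeup). Because commands are handled in FIFO order, stop() only returns after every earlier wakeup has been processed.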

Back in DisplayClient, the onThreadLoop function:

```cpp
bool
DisplayClient::
onThreadLoop(Command const& rCmd)
{
    // (0) lock Processor.
    sp<IImgBufQueue> pImgBufQueue;
    {
        Mutex::Autolock _l(mModuleMtx);
        pImgBufQueue = mpImgBufQueue;
        if ( pImgBufQueue == 0 || ! isDisplayEnabled() )  // is display set up and enabled?
        {
            MY_LOGW("pImgBufQueue.get(%p), isDisplayEnabled(%d)", pImgBufQueue.get(), isDisplayEnabled());
            return true;
        }
    }
    // (1) Prepare all TODO buffers.
    if ( ! prepareAllTodoBuffers(pImgBufQueue) )  // queue empty buffers into pImgBufQueue
    {
        return true;
    }
    // (2) Start
    if ( ! pImgBufQueue->startProcessor() )  // start fetching data
    {
        return true;
    }
    //
    {
        Mutex::Autolock _l(mStateMutex);
        mState = eState_Loop;
        mStateCond.broadcast();
    }
    //
    // (3) Do until disabled.
    while ( 1 )  // loop until display is disabled
    {
        // (.1)
        waitAndHandleReturnBuffers(pImgBufQueue);  // wait for data in pImgBufQueue and send it to the display
        // (.2) break if disabled.
        if ( ! isDisplayEnabled() )
        {
            MY_LOGI("Display disabled");
            break;
        }
        // (.3) re-prepare all TODO buffers, if possible,
        // since some DONE/CANCEL buffers return.
        prepareAllTodoBuffers(pImgBufQueue);  // prepare buffers again
    }
    //
    // (4) Stop
    pImgBufQueue->pauseProcessor();
    pImgBufQueue->flushProcessor();
    pImgBufQueue->stopProcessor();  // stop fetching data
    //
    // (5) Cancel all un-returned buffers.
    cancelAllUnreturnBuffers();  // frames that never got displayed are cancelled too
    //
    {
        Mutex::Autolock _l(mStateMutex);
        mState = eState_Suspend;
        mStateCond.broadcast();
    }
    //
    return true;
}
```

In the snippet above, the actual handling of preview data happens in waitAndHandleReturnBuffers(pImgBufQueue).
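The control flow of onThreadLoop() (prepare, start, loop until disabled, stop, and publish each state change under mStateMutex with a broadcast) can be sketched as a single-threaded illustration. LoopSketch, State, run and waitForState are hypothetical names; waitAndHandle and isEnabled are callbacks standing in for waitAndHandleReturnBuffers() and isDisplayEnabled(). The source does not show who waits on mStateCond, so waitForState() only illustrates how such a waiter could look.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>

enum class State { Suspend, Loop };

class LoopSketch {
public:
    // Returns how many times the TODO buffers were (re)prepared.
    int run(const std::function<void()>& waitAndHandle,
            const std::function<bool()>& isEnabled) {
        int prepares = 1;             // (1) prepareAllTodoBuffers()
        setState(State::Loop);        // after (2) startProcessor()
        while (true) {                // (3) do until disabled
            waitAndHandle();          // (.1) waitAndHandleReturnBuffers()
            if (!isEnabled()) break;  // (.2) break if disabled
            ++prepares;               // (.3) re-prepare returned buffers
        }
        // (4) pause/flush/stop the processor, (5) cancel un-returned buffers
        setState(State::Suspend);
        return prepares;
    }

    // How another thread could block until the loop reaches a state.
    void waitForState(State s) {
        std::unique_lock<std::mutex> lk(mStateMutex);
        mStateCond.wait(lk, [&] { return mState == s; });
    }

    State state() {
        std::lock_guard<std::mutex> lk(mStateMutex);
        return mState;
    }

private:
    void setState(State s) {
        std::lock_guard<std::mutex> lk(mStateMutex);
        mState = s;
        mStateCond.notify_all();  // like mStateCond.broadcast()
    }

    std::mutex mStateMutex;
    std::condition_variable mStateCond;
    State mState = State::Suspend;
};
```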

4. Analyzing waitAndHandleReturnBuffers(pImgBufQueue):

```cpp
bool
DisplayClient::
waitAndHandleReturnBuffers(sp<IImgBufQueue>const& rpBufQueue)
{
    bool ret = false;
    Vector<ImgBufQueNode> vQueNode;
    //
    MY_LOGD_IF((1<=miLogLevel), "+");
    //
    // (1) deque buffers from processor.
    rpBufQueue->dequeProcessor(vQueNode);  // fetch the buffer(s) the provider has filled with data
    if ( vQueNode.empty() ) {
        MY_LOGW("vQueNode.empty()");
        goto lbExit;
    }
    // (2) handle buffers dequed from processor.
    ret = handleReturnBuffers(vQueNode);  // process the data in the filled buffers
lbExit:
    //
    MY_LOGD_IF((2<=miLogLevel), "- ret(%d)", ret);
    return ret;
}
```

Now the handleReturnBuffers function:

```cpp
bool
DisplayClient::
handleReturnBuffers(Vector<ImgBufQueNode>const& rvQueNode)
{
    /*
     * Notes:
     *  For 30 fps, we just enque (display) the latest frame,
     *  and cancel the others.
     *  For frame rate > 30 fps, we should judge the timestamp here or source.
     */
    // (1) determine the latest DONE buffer index to display; otherwise CANCEL.
    int32_t idxToDisp = 0;
    for ( idxToDisp = rvQueNode.size()-1; idxToDisp >= 0; idxToDisp--)
    {
        if ( rvQueNode[idxToDisp].isDONE() )
            break;
    }
    if ( rvQueNode.size() > 1 )
    {
        MY_LOGW("(%d) display frame count > 1 --> select %d to display", rvQueNode.size(), idxToDisp);
    }
    //
    // Show Time duration.
    if ( 0 <= idxToDisp )
    {
        nsecs_t const _timestamp1 = rvQueNode[idxToDisp].getImgBuf()->getTimestamp();
        mProfile_buffer_timestamp.pulse(_timestamp1);
        nsecs_t const _msDuration_buffer_timestamp = ::ns2ms(mProfile_buffer_timestamp.getDuration());
        mProfile_buffer_timestamp.reset(_timestamp1);
        //
        mProfile_dequeProcessor.pulse();
        nsecs_t const _msDuration_dequeProcessor = ::ns2ms(mProfile_dequeProcessor.getDuration());
        mProfile_dequeProcessor.reset();
        //
        MY_LOGD_IF(
            (1<=miLogLevel), "+ %s(%lld) %s(%lld)",
            (_msDuration_buffer_timestamp < 0 ) ? "time inversion!" : "", _msDuration_buffer_timestamp,
            (_msDuration_dequeProcessor > 34) ? "34ms < Duration" : "", _msDuration_dequeProcessor
        );
    }
    //
    // (2) Lock
    Mutex::Autolock _l(mModuleMtx);
    //
    // (3) Remove from List and enquePrvOps/cancelPrvOps, one by one.
    int32_t const queSize = rvQueNode.size();
    for (int32_t i = 0; i < queSize; i++)
    {
        sp<IImgBuf>const& rpQueImgBuf = rvQueNode[i].getImgBuf();      // ImgBuf in Queue.
        sp<StreamImgBuf>const pStreamImgBuf = *mStreamBufList.begin();  // ImgBuf in List.
        // (.1) Check valid pointers to image buffers in Queue & List
        if ( rpQueImgBuf == 0 || pStreamImgBuf == 0 )
        {
            MY_LOGW("Bad ImgBuf:(Que[%d], List.begin)=(%p, %p)", i, rpQueImgBuf.get(), pStreamImgBuf.get());
            continue;
        }
        // (.2) Check the equality of image buffers between Queue & List.
        if ( rpQueImgBuf->getVirAddr() != pStreamImgBuf->getVirAddr() )
        {
            MY_LOGW("Bad address in ImgBuf:(Que[%d], List.begin)=(%p, %p)", i, rpQueImgBuf->getVirAddr(), pStreamImgBuf->getVirAddr());
            continue;
        }
        // (.3) Every check is ok. Now remove the node from the list.
        // The checks confirm that this returned frame's buffer was allocated and supplied by DisplayClient.
        mStreamBufList.erase(mStreamBufList.begin());
        //
        // (.4) enquePrvOps/cancelPrvOps
        if ( i == idxToDisp ) {
            MY_LOGD_IF(
                (1<=miLogLevel),
                "Show frame:%d %d [ion:%d %p/%d %lld]",
                i, rvQueNode[i].getStatus(), pStreamImgBuf->getIonFd(),
                pStreamImgBuf->getVirAddr(), pStreamImgBuf->getBufSize(), pStreamImgBuf->getTimestamp()
            );
            //
            if(mpExtImgProc != NULL)
            {
                if(mpExtImgProc->getImgMask() & ExtImgProc::BufType_Display)
                {
                    IExtImgProc::ImgInfo img;
                    //
                    img.bufType   = ExtImgProc::BufType_Display;
                    img.format    = pStreamImgBuf->getImgFormat();
                    img.width     = pStreamImgBuf->getImgWidth();
                    img.height    = pStreamImgBuf->getImgHeight();
                    img.stride[0] = pStreamImgBuf->getImgWidthStride(0);
                    img.stride[1] = pStreamImgBuf->getImgWidthStride(1);
                    img.stride[2] = pStreamImgBuf->getImgWidthStride(2);
                    img.virtAddr  = (MUINT32)(pStreamImgBuf->getVirAddr());
                    img.bufSize   = pStreamImgBuf->getBufSize();
                    //
                    mpExtImgProc->doImgProc(img);
                }
            }
            //
            enquePrvOps(pStreamImgBuf);  // send the frame to the display
        }
        else {
            MY_LOGW(
                "Drop frame:%d %d [ion:%d %p/%d %lld]",
                i, rvQueNode[i].getStatus(), pStreamImgBuf->getIonFd(),
                pStreamImgBuf->getVirAddr(), pStreamImgBuf->getBufSize(), pStreamImgBuf->getTimestamp()
            );
            cancelPrvOps(pStreamImgBuf);
        }
    }
    //
    MY_LOGD_IF((1<=miLogLevel), "-");
    return true;
}
```
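The frame-selection policy in handleReturnBuffers() is easy to isolate: scan from the newest node backwards, display the last DONE one, and cancel everything else. The 34 ms threshold in the log sits just above one 30 fps frame period (1000 / 30 ≈ 33.3 ms). A minimal sketch with hypothetical names (NodeStatus, Action, pickFrameToDisplay, planReturnBuffers, isLateFrame, isTimeInversion); it leaves out the per-node check that each returned buffer matches the head of mStreamBufList.

```cpp
#include <cassert>
#include <vector>

enum class NodeStatus { Done, Cancel };
enum class Action { Display, Drop };

// Index of the LAST node marked DONE, or -1 if there is none.
int pickFrameToDisplay(const std::vector<NodeStatus>& nodes) {
    int idx = static_cast<int>(nodes.size()) - 1;
    for (; idx >= 0; --idx) {
        if (nodes[idx] == NodeStatus::Done) break;
    }
    return idx;
}

// Per-node decision: the chosen node is enqueued, all others are cancelled.
std::vector<Action> planReturnBuffers(const std::vector<NodeStatus>& nodes) {
    const int idxToDisp = pickFrameToDisplay(nodes);
    std::vector<Action> plan;
    for (int i = 0; i < static_cast<int>(nodes.size()); ++i) {
        plan.push_back(i == idxToDisp ? Action::Display : Action::Drop);
    }
    return plan;
}

// The two conditions the original only logs.
bool isLateFrame(long long durationMs) { return durationMs > 34; }     // slower than ~30 fps
bool isTimeInversion(long long durationMs) { return durationMs < 0; }  // timestamp went backwards
```

Displaying only the newest finished frame keeps preview latency low when the display thread falls behind; the cost is that intermediate frames are silently cancelled, which is what the "Drop frame" warning reports.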

The selected frame is handed to the display by enquePrvOps():

```cpp
void
DisplayClient::
enquePrvOps(sp<StreamImgBuf>const& rpImgBuf)
{
    mProfile_enquePrvOps.pulse();
    if ( mProfile_enquePrvOps.getDuration() >= ::s2ns(2) ) {
        mProfile_enquePrvOps.updateFps();
        mProfile_enquePrvOps.showFps();
        mProfile_enquePrvOps.reset();
    }
    //
    status_t err = 0;
    //
    CamProfile profile(__FUNCTION__, "DisplayClient");
    profile.print_overtime(
        ((1<=miLogLevel) ? 0 : 1000),
        "+ locked buffer count(%d), rpImgBuf(%p,%p), Timestamp(%lld)",
        mStreamBufList.size(), rpImgBuf.get(), rpImgBuf->getVirAddr(), rpImgBuf->getTimestamp()
    );
    //
    // [1] unlock buffer before sending to display
    GraphicBufferMapper::get().unlock(rpImgBuf->getBufHndl());
    profile.print_overtime(1, "GraphicBufferMapper::unlock");
    //
    // [2] Dump image if wanted.
    dumpImgBuf_If(rpImgBuf);
    //
    // [3] set timestamp.
    err = mpStreamOps->set_timestamp(mpStreamOps, rpImgBuf->getTimestamp());
    profile.print_overtime(2, "mpStreamOps->set_timestamp, Timestamp(%lld)", rpImgBuf->getTimestamp());
    if ( err )
    {
        MY_LOGE(
            "mpStreamOps->set_timestamp failed: status[%s(%d)], rpImgBuf(%p), Timestamp(%lld)",
            ::strerror(-err), -err, rpImgBuf.get(), rpImgBuf->getTimestamp()
        );
    }
    //
    // [4] set gralloc buffer type & dirty
    ::gralloc_extra_setBufParameter(
        rpImgBuf->getBufHndl(),
        GRALLOC_EXTRA_MASK_TYPE | GRALLOC_EXTRA_MASK_DIRTY,
        GRALLOC_EXTRA_BIT_TYPE_CAMERA | GRALLOC_EXTRA_BIT_DIRTY
    );
    //
    // [5] unlocks and post the buffer to display.
    // Note: the frame finally reaches the display through mpStreamOps.
    err = mpStreamOps->enqueue_buffer(mpStreamOps, rpImgBuf->getBufHndlPtr());
    profile.print_overtime(10, "mpStreamOps->enqueue_buffer, Timestamp(%lld)", rpImgBuf->getTimestamp());
    if ( err )
    {
        MY_LOGE(
            "mpStreamOps->enqueue_buffer failed: status[%s(%d)], rpImgBuf(%p,%p)",
            ::strerror(-err), -err, rpImgBuf.get(), rpImgBuf->getVirAddr()
        );
    }
}
```

The snippet above shows that display data is ultimately handed over through mpStreamOps, which is the mHalPreviewWindow.nw passed down from CameraHardwareInterface.
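Two details of enquePrvOps() lend themselves to a sketch: the order of the calls made for each frame, and the FPS report that fires once at least two seconds have elapsed. The FPS arithmetic below (frame intervals counted since the last reset, divided by the elapsed time) is an assumption about what updateFps() computes, since its body is not shown. CallLog, enqueueForDisplay and FpsMeter are hypothetical names, and the dump and gralloc_extra steps are omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical recorder standing in for the mapper and preview_stream_ops
// calls, used only to show their order.
struct CallLog {
    std::vector<std::string> calls;
    int64_t lastTimestamp = 0;
};

void enqueueForDisplay(CallLog& log, int64_t timestampNs) {
    log.calls.push_back("unlock");          // [1] release the CPU mapping first
    log.calls.push_back("set_timestamp");   // [3] attach the frame timestamp
    log.lastTimestamp = timestampNs;
    log.calls.push_back("enqueue_buffer");  // [5] hand the buffer to the display
}

// Report FPS only after >= 2 s of wall time, then start a new window.
class FpsMeter {
public:
    // Returns the FPS when a report is due, otherwise -1.
    double pulse(int64_t nowNs) {
        if (mFrames == 0) mStartNs = nowNs;
        ++mFrames;
        const int64_t elapsed = nowNs - mStartNs;
        if (elapsed < 2000000000LL) return -1.0;
        // (frames - 1) intervals fit into the elapsed time.
        const double fps = (mFrames - 1) * 1e9 / static_cast<double>(elapsed);
        mFrames = 0;
        return fps;
    }

private:
    int64_t mStartNs = 0;
    int mFrames = 0;
};
```

Frames arriving every 50 ms produce exactly one report of 20 fps on the 41st frame, when the window first reaches 2 s.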

At this point the preview data has been fully handed over to the ANativeWindow for display.

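That still leaves two questions: how exactly does the preview data arrive from the provider side, and, since DisplayClient also allocates some buffers of its own, how are those buffers managed? Both will be covered in a follow-up article.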