Mahout推荐系统引擎UserCF中的IRStats部分源码解析

Mahout提供推荐系统引擎是模块化的,分为5个主要部分组成:

1. 数据模型

2. 相似度算法

3. 近邻算法

4. 推荐算法

5. 算法评分器

今天好好看了看关于推荐算法以及算法评分部分的源码。

以http://blog.csdn.net/jianjian1992/article/details/46582713

里边数据的为例进行实验。

整体流程的代码如下,依照上面的5个模块,看起来倒是很简单呀。

public static RecommenderBuilder userRecommender(final UserSimilarity us, final UserNeighborhood un, boolean pref) throws TasteException {

return pref ? new RecommenderBuilder() {

public Recommender buildRecommender(DataModel model) throws TasteException {

//user对每个item都有评价preference的时候使用

return new GenericUserBasedRecommender(model, un, us);

}

} : new RecommenderBuilder() {

public Recommender buildRecommender(DataModel model) throws TasteException {

//user对item没有评价preference的时候使用

return new GenericBooleanPrefUserBasedRecommender(model, un, us);

}

};

}

final static int NEIGHBORHOOD_NUM = 3;

final static int RECOMMENDER_NUM = 2;

public static void main(String[] args) throws IOException, TasteException {

String file = "item.csv";

//1.构建数据模型

DataModel model = new FileDataModel(new File(file));

//2.相似度算法,采用欧式距离

UserSimilarity user = new EuclideanDistanceSimilarity(model);

//3.近邻算法,采用K = NEIGHBORHOOD_NUM最近邻

NearestNUserNeighborhood neighbor = new NearestNUserNeighborhood(NEIGHBORHOOD_NUM, user, model);

//4.基于以上三个模块构建推荐器,构造好之后就已经确定了

UserBasedRecommender r = new GenericUserBasedRecommender(model, neighbor, user);

//5.a推荐器的构造方法,用于评价时根据新采集的训练集生成推荐器

RecommenderBuilder recommenderBuilder = userRecommender(user, neighbor, false);

//5.b评价算法,采用平均绝对值距离,也即L1距离

RecommenderEvaluator evalutor = new AverageAbsoluteDifferenceRecommenderEvaluator();

System.out.println("eval:"+ evalutor.evaluate(recommenderBuilder, null, model, 0.5, 1));

//5.c评价算法,计算推荐系统的准确率,召回率等指标

RecommenderIRStatsEvaluator evaluator = new GenericRecommenderIRStatsEvaluator();

IRStatistics stats = evaluator.evaluate(recommenderBuilder, null, model, null, 2, GenericRecommenderIRStatsEvaluator.CHOOSE_THRESHOLD, 1.0);

System.out.printf("Recommender IR Evaluator: [Precision:%s,Recall:%s,F1:%s,FallOut:%s,nDCG:%s]\n", stats.getPrecision(), stats.getRecall(), stats.getF1Measure(), stats.getFallOut(), stats.getNormalizedDiscountedCumulativeGain());

运行结果如下:

eval:2.368176281452179

Recommender IR Evaluator: [Precision:0.5,Recall:1.0,F1:0.6666666666666666,FallOut:0.16666666666666666,nDCG:1.0]

RecommenderIRStatsEvaluator源码解析

RecommenderIRStatsEvaluator是一个接口,用于得到推荐系统的准确率,召回率等统计指标。

它定义的函数如下

/** * @param recommenderBuilder * 它通过public Recommender buildRecommender(DataModel model)定义推荐系统创建的方式; * @param dataModelBuilder * 数据模型创建的方式,如果已经创建好了数据模型,一般这个参数可以为null * @param dataModel * 推荐系统使用的数据模型 * * @param rescorer * 推荐排序的方式,一般情况下可以为null * * @param at * 这个参数起的名字挺奇怪的,它用来定义计算准确率的时候,一个user可以拥有的相关项items的最大个数,相关项item定义为user对这个item的评价是超过了relevanceThreshold的 * @param relevanceThreshold * 和at一起使用定义一个user的相关项 * * @return {@link IRStatistics} with resulting precision, recall, etc. * @throws TasteException * if an error occurs while accessing the {@link DataModel} */

IRStatistics evaluate(RecommenderBuilder recommenderBuilder,

DataModelBuilder dataModelBuilder,

DataModel dataModel,

IDRescorer rescorer,

int at,

double relevanceThreshold,

double evaluationPercentage) throws TasteException;在代码中是使用

RecommenderIRStatsEvaluator evaluator = new GenericRecommenderIRStatsEvaluator();来创建这个评价器的。

关于GenericRecommenderIRStatsEvaluator的介绍是

For each user, these implementation determine the top {@code n} preferences, then evaluate the IR statistics based on a {@link DataModel} that does not have these values. This number {@code n} is the “at” value, as in “precision at 5”. For example, this would mean precision evaluated by removing the top 5 preferences for a user and then finding the percentage of those 5 items included in the top 5 recommendations for that user.

看起来很拗口啊,似乎是说,对每一个用户,都找出他的最高的at个preferences(即对item的评价)作为训练数据训练出一个推荐系统,之后基于剩下的preferences来做评价。

下面来看看代码里边具体是怎么做的吧。

GenericRecommenderIRStatsEvaluator源码解析

因为使用的数据是有用户对item的评价,所以采用这一个具体的评价器。

我们来看看它的evaluate函数是怎么实现的。

首先检查参数,这是编程好习惯。

接着定义了四个统计指标:

RunningAverage precision = new FullRunningAverage();

RunningAverage recall = new FullRunningAverage();

RunningAverage fallOut = new FullRunningAverage();

RunningAverage nDCG = new FullRunningAverage();RunningAverage是个神奇的结构,可以新加入或删除数据,然后动态更新所有数据的平均值。这里会对每个user都求一次这4个指标,最后求所有user指标的平均值作为整个推荐系统的结果。

接着定义了三个变量

int numItems = dataModel.getNumItems();

int numUsersRecommendedFor = 0;

int numUsersWithRecommendations = 0;然后就开始对数据模型中的每个user进行循环了!

在这里使用到了参数evaluationPercentage,这个参数按照名字就是评价比率,也即在所有users中选择用于评价的比率。

这里通过取个随机double值,如果大于这个参数,则这个user不加入评价。这个random的取值应该是在[0,1]范围均等概率取值才ok的哦!

LongPrimitiveIterator it = dataModel.getUserIDs();

while (it.hasNext()) {

long userID = it.nextLong();

if (random.nextDouble() >= evaluationPercentage) {

// Skipped

continue;

}

...评价部分

}下面来看看循环里边的评价部分

PreferenceArray prefs = dataModel.getPreferencesFromUser(userID);

// List some most-preferred items that would count as (most) "relevant" results

double theRelevanceThreshold = Double.isNaN(relevanceThreshold) ? computeThreshold(prefs) : relevanceThreshold;

FastIDSet relevantItemIDs = dataSplitter.getRelevantItemsIDs(userID, at, theRelevanceThreshold, dataModel);

int numRelevantItems = relevantItemIDs.size();

if (numRelevantItems <= 0) {

continue;

}PreferenceArray的内容

首先得到数据模型中这个userID的所有评价项。PreferenceArray里边的内容是怎样的呢?

看如下代码,显示了uid = 3的结果,可以看到,对于pref里边的每一项,它们的getUserID结果都是3,每一项包括了uid对一个prefs.getItemID(i)的评价prefs.getValue(i)。

int uid = 3;

PreferenceArray prefs = model.getPreferencesFromUser(uid);

//prefs.sortByValueReversed();

for (int i = 0; i < prefs.length(); i++){

System.out.println("user id" + prefs.getUserID(i));

System.out.println("Item id value:" + prefs.getItemID(i) + ":" + prefs.getValue(i));

}user id3

Item id value:101:2.5

user id3

Item id value:104:4.0

user id3

Item id value:105:4.5

user id3

Item id value:107:5.0RelevanceThreshold的计算

按照代码,接下来要根据threshold来选择相关项,那么代码里边的computeThreshold(prefs)是如何计算的呢?

private static double computeThreshold(PreferenceArray prefs) {

if (prefs.length() < 2) {

// Not enough data points -- return a threshold that allows everything

return Double.NEGATIVE_INFINITY;

}

RunningAverageAndStdDev stdDev = new FullRunningAverageAndStdDev();

int size = prefs.length();

for (int i = 0; i < size; i++) {

stdDev.addDatum(prefs.getValue(i));

}

return stdDev.getAverage() + stdDev.getStandardDeviation();

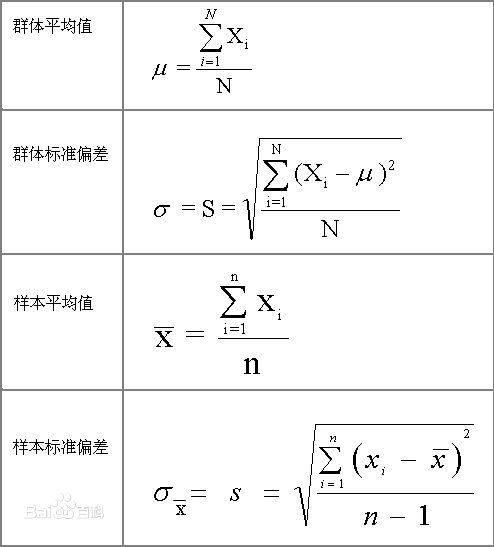

}stdDev是用来计算一组数据的平均值以及样本标准差的。

样本标准差和群体标准差的计算是有差别的,样本标准差中是除以n-1,大概理解应该是一堆样本中,取一个为标准,其它随意,所以自由度就为n-1了吧。

不过这个计算出来的阈值感觉很坑啊,以我的数据为例,用uid = 3计算,最后得到的阈值结果为5.080123449734644,(⊙﹏⊙)b这样子就没有任何一个preference满足要求了。

不知道是不是数据很大的时候效果会好些~~~

relevantItemIDs相关项的确定

dataSplitter选用的是GenericRelevantItemsDataSplitter。

RelevantItemsDataSplitter dataSplitter = new GenericRelevantItemsDataSplitter(); FastIDSet relevantItemIDs = dataSplitter.getRelevantItemsIDs(userID, at, theRelevanceThreshold, dataModel);getRelevantItemsIDs的实现,首先

1.得到userID的Preferences

2.创建一个FastIDSet用来保存相关项的id,大小为at

3.prefs根据值大小排序

3.遍历pref,如果user对这个item的评价prefs.getValue(i)不小于相关阈值,则将这个item的加入相关项,最多取at个满足条件的item

public FastIDSet getRelevantItemsIDs(long userID,

int at,

double relevanceThreshold,

DataModel dataModel) throws TasteException {

PreferenceArray prefs = dataModel.getPreferencesFromUser(userID);

FastIDSet relevantItemIDs = new FastIDSet(at);

prefs.sortByValueReversed();

for (int i = 0; i < prefs.length() && relevantItemIDs.size() < at; i++) {

if (prefs.getValue(i) >= relevanceThreshold) {

relevantItemIDs.add(prefs.getItemID(i));

}

}

return relevantItemIDs;

}下面来看看效果,仍旧以uid = 3 为例,如果relevanceThreshold为4,则结果如下

double relevanceThreshold = 4;//computeThreshold(prefs);

FastIDSet relevantItemIDs = new FastIDSet(at);

prefs.sortByValueReversed();

for (int i = 0; i < prefs.length() && relevantItemIDs.size() < at; i++) {

if (prefs.getValue(i) >= relevanceThreshold) {

relevantItemIDs.add(prefs.getItemID(i));

}

}

long[] itemIDs = relevantItemIDs.toArray();

for (int i = 0; i < itemIDs.length; i++){

System.out.println("Revevant Item id:" + itemIDs[i]);

}结果和上面的结果也正好符合哦!

Revevant Item id:104

Revevant Item id:105

Revevant Item id:107相关项个数<=0的话,这个user就没有评价的价值了,所以会跳过进入下一个循环。

trainingUsers的构造

得到这个user的相关项之后,接着为它构造一个推荐用的训练集。

这个训练集的构造规则便是:

1.对于其它的用户,将他们所有的(item,preference)都加入训练集;

2.对于这个用户user,将它的除了相关项之外的其它项的喜好加入训练集;

//得到训练集

FastByIDMap<PreferenceArray> trainingUsers = new FastByIDMap<PreferenceArray>(dataModel.getNumUsers());

LongPrimitiveIterator it2 = dataModel.getUserIDs();

while (it2.hasNext()) {

dataSplitter.processOtherUser(userID, relevantItemIDs, trainingUsers, it2.nextLong(), dataModel);

}

//构造训练模型

DataModel trainingModel = dataModelBuilder == null ? new GenericDataModel(trainingUsers)

: dataModelBuilder.buildDataModel(trainingUsers);

//如果这个user的所有项都变为了相关项,那也没评价的必要了,因为训练集里边都没有了这个user,怎么计算它与其它用户之间的距离呢?

try {

trainingModel.getPreferencesFromUser(userID);

} catch (NoSuchUserException nsee) {

continue; // Oops we excluded all prefs for the user -- just move on

}仍旧以uid = 3为例,我们来看看运行结果,相关项系数为4,

RelevantItemsDataSplitter dataSplitter = new GenericRelevantItemsDataSplitter();

FastByIDMap<PreferenceArray> trainingUsers = new FastByIDMap<PreferenceArray>(model.getNumUsers());

LongPrimitiveIterator it2 = model.getUserIDs();

while (it2.hasNext()) {

dataSplitter.processOtherUser(uid, relevantItemIDs, trainingUsers, it2.nextLong(), model);

}

for (Entry<Long, PreferenceArray> entry : trainingUsers.entrySet()) {

Long userid = entry.getKey();

PreferenceArray pref = entry.getValue();

System.out.println("user id is " + userid + ":" + pref.getUserID(0));

for (int i = 0; i < pref.length(); i++){

System.out.println("item id -> value is :" + pref.getItemID(i) + "->" + pref.getValue(i));

}

}如下结果中,除了uid = 3,其它user的所有项都加了进来;uid则只加了非相关项。

user id is 1:1

item id -> value is :101->5.0

item id -> value is :102->3.0

item id -> value is :103->2.5

user id is 2:2

item id -> value is :101->2.0

item id -> value is :102->2.5

item id -> value is :103->5.0

item id -> value is :104->2.0

user id is 3:3

item id -> value is :101->2.5

user id is 4:4

item id -> value is :101->5.0

item id -> value is :104->4.5

item id -> value is :106->4.0

item id -> value is :103->3.0

user id is 5:5

item id -> value is :101->4.0

item id -> value is :106->4.0

item id -> value is :104->4.0

item id -> value is :105->3.5

item id -> value is :102->3.0

item id -> value is :103->2.0

接下来又有一个判断,size是user的相关项个数+训练集中user的项的个数,也即user本身有的preferences个数,这里判定at的大小的两倍,也即相关项最大个数的2倍应该不大于user本身的item个数时才会对这个user进行评价,挺严格的啊!

int size = numRelevantItems + trainingModel.getItemIDsFromUser(userID).size();

if (size < 2 * at) {

// Really not enough prefs to meaningfully evaluate this user

continue;

}IR Statics指标

首先用上面得到的训练模型构建推荐器,之后对这个user进行推荐,得到recommendedItems。

之后求intersectionSize,也即推荐项与相关项之间相同的个数。

Recommender recommender = recommenderBuilder.buildRecommender(trainingModel);

int intersectionSize = 0;

List<RecommendedItem> recommendedItems = recommender.recommend(userID, at, rescorer);

for (RecommendedItem recommendedItem : recommendedItems) {

if (relevantItemIDs.contains(recommendedItem.getItemID())) {

intersectionSize++;

}

}准确率(Precision)与召回率

首先先介绍下准确率(Precision)与召回率,也叫找全率(Recall)。

一般都从分类的角度来说明的,类别有正类和负类。

预测为正类的样本中真正属于正类的样本个数

准确率 = ------------------------------------------------

所有预测为正类的样本的个数

预测为正类的样本中真正属于正类的样本个数

召回率 = ------------------------------------------------

所有真正为正类的样本的个数放到推荐系统中便是

推荐给user的Items中属于user相关项的个数

准确率 = ------------------------------------------------

推荐给user的Items的总个数

推荐给user的Items中属于user相关项的个数

召回率 = ------------------------------------------------

user的所有相关项item个数所以上面的intersectionSize便是 推荐给user的Items中属于user相关项的个数。

// 推荐给user的Items的总个数

int numRecommendedItems = recommendedItems.size();

// Precision

if (numRecommendedItems > 0) {

precision.addDatum((double) intersectionSize / (double) numRecommendedItems);

}

// Recall

recall.addDatum((double) intersectionSize / (double) numRelevantItems);

另外还有三个指标:

Fall-out

预测为正类的样本中真正属于负类的样本个数

Fall-out = ------------------------------------------------

所有为负类的样本的个数

推荐给user的Items中不属于user相关项的个数

Fall-out = ------------------------------------------------

模型中所有不为user相关项的item总个数// Fall-out

if (numRelevantItems < size) {

fallOut.addDatum((double) (numRecommendedItems - intersectionSize)

/ (double) (numItems - numRelevantItems));

}nDCG

概述搜索排序算法的评价指标NDCG(Normalized Discounted Cumulative Gain)

N指的是归一化,D指的是衰减率,C指的累加,G指的是熵,其实这个公式的关键就是就是熵,再多了三个形容词:归一化的,带有衰减函数的,再带有累加的熵即是了

具体可以查看http://eletva.com/tower/?p=159

// nDCG

// In computing, assume relevant IDs have relevance 1 and others 0

double cumulativeGain = 0.0;

double idealizedGain = 0.0;

for (int i = 0; i < numRecommendedItems; i++) {

RecommendedItem item = recommendedItems.get(i);

double discount = 1.0 / log2(i + 2.0); // Classical formulation says log(i+1), but i is 0-based here

if (relevantItemIDs.contains(item.getItemID())) {

cumulativeGain += discount;

}

// otherwise we're multiplying discount by relevance 0 so it doesn't do anything

// Ideally results would be ordered with all relevant ones first, so this theoretical

// ideal list starts with number of relevant items equal to the total number of relevant items

if (i < numRelevantItems) {

idealizedGain += discount;

}

}

if (idealizedGain > 0.0) {

nDCG.addDatum(cumulativeGain / idealizedGain);

}

reach

也即所有待推荐的user中成功推荐的user比例

// Reach

numUsersRecommendedFor++;

if (numRecommendedItems > 0) {

numUsersWithRecommendations++;

}

(double) numUsersWithRecommendations / (double) numUsersRecommendedFor