统计学习笔记(1) 监督学习概论(1)

原作品:The Elements of Statistical Learning Data Mining, Inference, and Prediction, Second Edition, by Trevor Hastie, Robert Tibshirani and Jerome Friedman

An Introduction to Statistical Learning. by Gareth James Daniela Witten Trevor Hastie and

Robert Tibshirani

We make predictions based on input vector X and get result Y:

Different components in X have different importance. E(Y|X=x) means expected value (average) of Y given X=x. This ideal f(x)=E(Y|X=x) is called the regression function.

There is the irreducible error---

we can certainly make errors in prediction, since at each X=x there is typically a distribution of possible Y values. The bias can be reduced, but the variance cannot.

We can understand this as, we use the same input x to make several predictions: f1(x), f2(x),..., fm(x), the predictions are still different although the input keeps unchanged, due to distribution. The statistical average value of f1(x), f2(x),..., fm(x) will be our known correct result f(x) and also is the statistical value of Y. The above statements can make the reducible error, which is the bias, to be zero.

Tips: if we want to compute E(Y|X=x) but there's few points with X=x, we should find the neiboring points of X=x, let

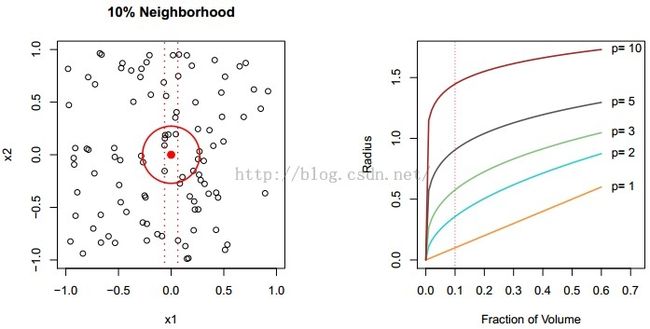

The above method is called nearest neighbor method, it can be bad when the dimensionality is large, considering curse of dimensionality, but it works well when the dimensionality is small and when the number of samples is large.

e.g. a 10% neighborhood in high dimensions need no longer be local, so we lose the spirit of estimating E(Y|X=x) by local averaging. The fraction of volume of local neighbors can be large even if the fraction is small when the dimensionality is large.

We can apply linear models or quadratic models(Introduction to thin-plate splines: paper "Thin-Plate Spline Interpolation" on SpringerLink and code onhttp://elonen.iki.fi/code/tpsdemo/). Also, thin-plate spline can be applied.

There's some trade-offs in curve fitting:

1. Linear models are easy interpret while thin-plate splines are not.

2. Good fit Versus Overfitting.

3. We often prefer a simpler model involving fewer variables over a black-box predictor involving them all.

When we try to avoid overfitting by selecting the fresh test data, we find that the fluctuation influences the performance when fitting dimension (flexibility) is low, compare the second figure with the third figure, compare their low-flexibility parts, we can see if fluctuation increases, model with low flexibility cannot deal well, and in the third figure, as the flexibility increases from low values, the mean square error decreases, this is the advantage of high flexibility. From the second point of view, we compare the second figure with the third figure, the second figure suffers from strong noise while the third figure suffers from weak noise, when the flexibility (dimension of model) is very high, we can see from the second image that it fits to the noise well, but it's useless and it's overfitting, we can see when the noise is weak, too high flexibility is almost useless in the third figure. So a moderate in the middle will be enough. The red curve represents the error evaluated on the fresh test set, the gray curve represents the error evaluated on the training set.

The horizontal dashed line indicates ![]() , which corresponds to the lowest achievable test MSE among all methods, as is shown in the following graphs, training error can be lower than the bound, but test error cannot.

, which corresponds to the lowest achievable test MSE among all methods, as is shown in the following graphs, training error can be lower than the bound, but test error cannot.

Corresponding to the above 3 graphs, blue-squared bias, orange-variance, red-test MSE, dashed line-![]() .

.

To help us evaluat test MSE with only training data, we can refer to cross validation in "Resampling Methods".

4. Bias-Variance Trade-off: Suppose we have fit a model f^(x) to the training set. The true model is

with f(x)=E(Y|X=x), and

Bias

If a method has high variance, then small changes in the training set can result in large changes in f^. Bias refers to the error that is introduced by approximating a real-life problem, which may be extremely complicated, by a much simpler model. As the flexibility increases, the variance of estimation increases, the bias decreases. So choosing the flexibility based on average test error amounts to a bias-variance trade-off.

As to classification problems, consider a classifier C(X) that assigns a class label to observation X. Denote

The Bayes optimal classifier is

Typically we measure the estimation performance using

The Bayes classifier has smallest error.

SVMs build structured models for C(x), we also build structured models for representing the pk(x), e.g. Logistic regression, generalized additive models, K-nearest neighbors.