Introduction to Pools, PGs, and OSDs in Ceph (tmp)

How are Placement Groups used?

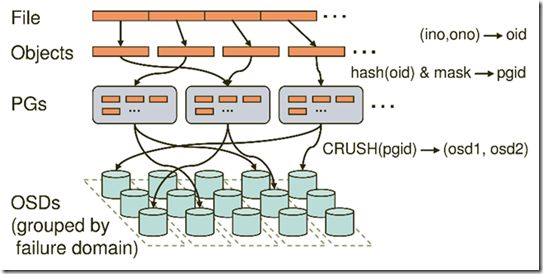

A placement group (PG) aggregates objects within a pool because tracking object placement and object metadata on a per-object basis is computationally expensive; a system with millions of objects cannot realistically track placement on a per-object basis.

The Ceph client will calculate which placement group an object should be in. It does this by hashing the object ID and applying an operation based on the number of PGs in the defined pool and the ID of the pool. See Mapping PGs to OSDs for details.
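The hash-then-fold step can be sketched in Python. This is a minimal sketch, assuming the fold works like Ceph's `ceph_stable_mod`, which maps a 32-bit hash onto `pg_num` buckets using a power-of-two mask. `zlib.crc32` is swapped in for the object hash Ceph actually uses (rjenkins), so the PG ids printed here will not match a real cluster; `object_to_pg`, `stable_mod` and `pg_mask` are hypothetical helper names.

```python
import zlib


def pg_mask(pg_num: int) -> int:
    """Smallest mask of the form 2**k - 1 with 2**k >= pg_num."""
    return (1 << (pg_num - 1).bit_length()) - 1


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Fold hash x into range(b), modelled on Ceph's ceph_stable_mod."""
    if (x & bmask) < b:
        return x & bmask
    # The hash landed above b: fall back to the lower half of the mask.
    return x & (bmask >> 1)


def object_to_pg(pool_id: int, pg_num: int, object_name: str) -> str:
    """Return a PG id of the form '<pool id>.<placement seed in hex>'."""
    # ASSUMPTION: crc32 replaces Ceph's real object hash (rjenkins).
    object_hash = zlib.crc32(object_name.encode("utf-8"))
    seed = stable_mod(object_hash, pg_num, pg_mask(pg_num))
    return f"{pool_id}.{seed:x}"


if __name__ == "__main__":
    for name in ["alpha", "beta", "gamma"]:
        print(name, "->", object_to_pg(pool_id=1, pg_num=128, object_name=name))
```

The same object name always yields the same PG id, which is why any client can compute placement without asking a central lookup table.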

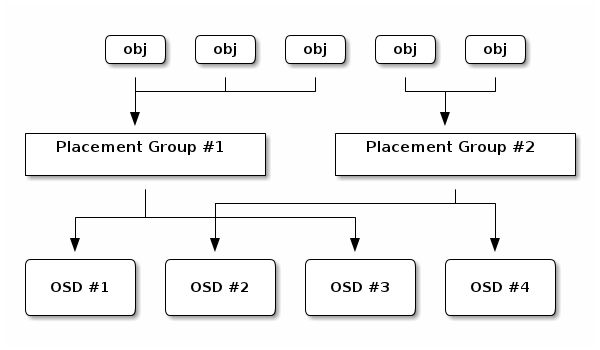

The object's contents within a placement group are stored in a set of OSDs. For instance, in a replicated pool of size two, each placement group will store objects on two OSDs, as shown in the figure above.

Should OSD #2 fail, another OSD will be assigned to Placement Group #1 and will be filled with copies of all objects in OSD #1. If the pool size is changed from two to three, an additional OSD will be assigned to the placement group and will receive copies of all objects in the placement group.

Placement groups do not own the OSD; they share it with other placement groups from the same pool or even from other pools. If OSD #2 fails, Placement Group #2 will also have to restore copies of objects, using OSD #3.
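The sharing and recovery behaviour described above can be reproduced with a toy model. This sketch does not implement CRUSH: it assumes rendezvous (highest-random-weight) hashing as a simplified stand-in and ignores OSD weights, hosts and failure domains. `map_pg` and `_score` are hypothetical names, and the PG ids and OSD numbers are made up.

```python
from __future__ import annotations

import hashlib


def _score(pg_id: str, osd: int) -> int:
    """Deterministic pseudo-random weight for a (PG, OSD) pair."""
    digest = hashlib.sha256(f"{pg_id}:{osd}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def map_pg(pg_id: str, osds: list[int], size: int) -> list[int]:
    """Choose `size` distinct OSDs for a PG: those with the highest scores.

    ASSUMPTION: rendezvous hashing stands in for CRUSH; weights, hosts and
    failure domains are ignored.
    """
    ranked = sorted(osds, key=lambda osd: _score(pg_id, osd), reverse=True)
    return ranked[:size]


osds = [1, 2, 3, 4, 5, 6]
pgs = [f"1.{i:x}" for i in range(8)]  # hypothetical PG ids in pool 1
before = {pg: map_pg(pg, osds, size=2) for pg in pgs}

# Simulate OSD #2 failing by recomputing the mapping without it.
survivors = [osd for osd in osds if osd != 2]
after = {pg: map_pg(pg, survivors, size=2) for pg in pgs}

for pg in pgs:
    status = "remapped" if before[pg] != after[pg] else "unchanged"
    print(pg, before[pg], "->", after[pg], status)
```

With this stand-in, PGs that never used OSD #2 keep their OSDs when it fails, while each PG that did use it keeps its surviving copy and gains one replacement OSD, mirroring the Placement Group #1 and #2 scenario above. Eight PGs of size two over six OSDs also force several PGs to share an OSD.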

When the number of placement groups increases, the new placement groups will be assigned OSDs. The result of the CRUSH function will also change, and some objects from the former placement groups will be copied over to the new placement groups and removed from the old ones.
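To see why only some objects move when `pg_num` grows, the sketch below folds object hashes into PGs with a stable modulo (assumed to mirror Ceph's `ceph_stable_mod`; `zlib.crc32` stands in for Ceph's real object hash) and compares placement for 8 and 12 PGs. `pg_of`, `stable_mod` and `pg_mask` are hypothetical helper names and the object names are invented.

```python
import zlib


def pg_mask(pg_num: int) -> int:
    """Smallest mask of the form 2**k - 1 with 2**k >= pg_num."""
    return (1 << (pg_num - 1).bit_length()) - 1


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Fold hash x into range(b), modelled on Ceph's ceph_stable_mod."""
    if (x & bmask) < b:
        return x & bmask
    return x & (bmask >> 1)


def pg_of(name: str, pg_num: int) -> int:
    # ASSUMPTION: crc32 replaces Ceph's real object hash (rjenkins).
    return stable_mod(zlib.crc32(name.encode("utf-8")), pg_num, pg_mask(pg_num))


objects = [f"obj-{i}" for i in range(10_000)]
old = {name: pg_of(name, 8) for name in objects}   # before: pg_num = 8
new = {name: pg_of(name, 12) for name in objects}  # after:  pg_num = 12

moved = [name for name in objects if old[name] != new[name]]
print(f"{len(moved)} of {len(objects)} objects change PG")
# PGs that received objects, and the PGs those objects came from.
print("new PGs:", sorted({new[name] for name in moved}))
print("source PGs:", sorted({old[name] for name in moved}))
```

Under these assumptions an object either stays in its PG or moves from PG n to the new PG n + 8; no object moves between two pre-existing PGs, so data movement is limited to the PGs being split.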

Pools provide several additional features, including:

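- Replication: You can set the desired number of copies of an object. A typical configuration stores an object and one additional copy of it (i.e., size = 2), but you can change the number of copies.

- Placement groups: You can set the number of placement groups for a pool. A typical configuration uses approximately 100 placement groups per OSD, which provides good balancing without consuming too many computing resources. When setting up multiple pools, take care to set a reasonable number of placement groups both for each pool and for the cluster as a whole.

- CRUSH rules: When you store data in a pool, the CRUSH ruleset mapped to the pool enables CRUSH to identify a rule for the placement of the primary object and its replicas in your cluster. You can create a custom CRUSH rule for your pool.

- Snapshots: When you create snapshots with ceph osd pool mksnap, you effectively take a snapshot of a particular pool.

- Set ownership: You can set a user ID as the owner of a pool.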

When creating a new pool with:

    ceph osd pool create {pool-name} pg_num

it is mandatory to choose the value of pg_num because it cannot be calculated automatically. Here are a few values commonly used:

- Fewer than 5 OSDs: set pg_num to 128

- Between 5 and 10 OSDs: set pg_num to 512

- Between 10 and 50 OSDs: set pg_num to 4096

- More than 50 OSDs: you need to understand the tradeoffs and calculate the pg_num value yourself

- To calculate the pg_num value yourself, use the pgcalc tool
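The rule of thumb above can be written as a small lookup. `suggested_pg_num` is a hypothetical helper; because the list overlaps at exactly 10 OSDs, this sketch assumes 5 to 10 OSDs inclusive map to 512 and 11 to 50 OSDs map to 4096.

```python
from typing import Optional


def suggested_pg_num(num_osds: int) -> Optional[int]:
    """Rule-of-thumb pg_num for a new pool, or None above 50 OSDs.

    ASSUMPTION: "between 5 and 10" is read as 5..10 and "between 10 and 50"
    as 11..50, since the list overlaps at exactly 10 OSDs.
    """
    if num_osds < 1:
        raise ValueError("a cluster needs at least one OSD")
    if num_osds < 5:
        return 128
    if num_osds <= 10:
        return 512
    if num_osds <= 50:
        return 4096
    # Larger clusters: work out pg_num yourself (for example with pgcalc).
    return None


for n in (3, 8, 20, 120):
    print(n, "OSDs ->", suggested_pg_num(n))
```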

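A placement group (PG) aggregates a series of objects into a group and maps the group to a series of OSDs. Tracking object placement and object metadata on a per-object basis is computationally expensive; for example, a system with millions of objects cannot realistically track placement on a per-object basis. Placement groups address this barrier to performance and scalability. Additionally, placement groups reduce the number of processes and the amount of per-object metadata Ceph must track when storing and retrieving data.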

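Increasing the number of placement groups reduces the variance in per-OSD load across your cluster. We recommend approximately 50-100 placement groups per OSD to balance memory and CPU requirements against per-OSD load. For a single pool of objects, you can use the following formula:

Total PGs = (OSDs × 100) / pool size

where pool size is the number of replicas (osd pool default size).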
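The single-pool formula, Total PGs = (OSDs × 100) / pool size, can be checked with a few lines of Python. `total_pgs` is a hypothetical helper; it rounds fractional results up and can optionally round up to the next power of two, an extra step that is often recommended for pg_num but is not part of the formula itself.

```python
import math


def total_pgs(num_osds: int, pool_size: int, pgs_per_osd: int = 100,
              round_to_power_of_two: bool = False) -> int:
    """Total PGs = (OSDs * pgs_per_osd) / pool size, rounded up.

    pool_size is the number of replicas. Rounding up to the next power of
    two is optional.
    """
    if num_osds < 1 or pool_size < 1:
        raise ValueError("num_osds and pool_size must be positive")
    raw = math.ceil(num_osds * pgs_per_osd / pool_size)
    if round_to_power_of_two:
        return 1 << (raw - 1).bit_length()
    return raw


print(total_pgs(10, 4))                               # 250
print(total_pgs(10, 4, round_to_power_of_two=True))   # 256
```

For 10 OSDs and 4 replicas the result, 250, matches the worked example in the configuration reference below.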

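When using multiple data pools for storing objects, you need to balance the number of placement groups per pool against the number of placement groups per OSD, so that you arrive at a reasonable total number of placement groups: one that keeps per-OSD variance reasonably low without taxing system resources or making the peering process too slow.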
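When several pools share the same OSDs, one way to check the balance is to add up PG replicas across all pools, since each PG of a pool with size n is stored on n OSDs. `pg_replicas_per_osd` is a hypothetical helper and the pools below are a made-up example.

```python
from __future__ import annotations


def pg_replicas_per_osd(pools: dict[str, tuple[int, int]], num_osds: int) -> float:
    """Average number of PG replicas hosted by each OSD across all pools.

    `pools` maps a pool name to (pg_num, size); each PG is stored on `size`
    OSDs, so it contributes `size` PG replicas to the cluster total.
    """
    total = sum(pg_num * size for pg_num, size in pools.values())
    return total / num_osds


# Hypothetical cluster: 10 OSDs shared by three replicated pools.
pools = {
    "rbd": (256, 3),
    "cephfs_data": (128, 3),
    "cephfs_metadata": (32, 3),
}
print(pg_replicas_per_osd(pools, num_osds=10))
```

Here the result is about 125 PG replicas per OSD, above the 50-100 range recommended above, so the larger pools would be candidates for a smaller pg_num.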

Pool, PG and CRUSH Config Reference

When you create pools and set the number of placement groups for a pool, Ceph uses default values unless you specifically override them. We recommend overriding some of the defaults; specifically, we recommend setting a pool's replica size and overriding the default number of placement groups. You can set these values explicitly when running pool commands. You can also override the defaults by adding new ones in the [global] section of your Ceph configuration file.

    [global]
    # By default, Ceph makes 3 replicas of objects. If you want to make four
    # copies of an object the default value--a primary copy and three replica
    # copies--reset the default values as shown in 'osd pool default size'.
    # If you want to allow Ceph to write a lesser number of copies in a degraded
    # state, set 'osd pool default min size' to a number less than the
    # 'osd pool default size' value.

    osd pool default size = 4  # Write an object 4 times.
    osd pool default min size = 1 # Allow writing one copy in a degraded state.

    # Ensure you have a realistic number of placement groups. We recommend
    # approximately 100 per OSD. E.g., total number of OSDs multiplied by 100
    # divided by the number of replicas (i.e., osd pool default size). So for
    # 10 OSDs and osd pool default size = 4, we'd recommend approximately
    # (100 * 10) / 4 = 250.

    osd pool default pg num = 250
    osd pool default pgp num = 250
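Because a Ceph configuration file is INI-like, pool defaults such as these can be sanity-checked with Python's `configparser`. This is an illustrative sketch: `check_pool_defaults` is hypothetical, it assumes option names are written with spaces as in the example above, it assumes pgp num is meant to match pg num, and the factor-of-two tolerance around the (OSDs × 100) / size target is an arbitrary choice, not a Ceph rule.

```python
from __future__ import annotations

import configparser

CEPH_CONF = """
[global]
osd pool default size = 4  # Write an object 4 times.
osd pool default min size = 1 # Allow writing one copy in a degraded state.
osd pool default pg num = 250
osd pool default pgp num = 250
"""


def check_pool_defaults(text: str, num_osds: int, pgs_per_osd: int = 100) -> list[str]:
    """Return human-readable warnings about the [global] pool defaults."""
    # A '#' preceded by whitespace starts an inline comment, as in CEPH_CONF.
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.read_string(text)
    section = parser["global"]
    size = section.getint("osd pool default size")
    min_size = section.getint("osd pool default min size")
    pg_num = section.getint("osd pool default pg num")
    pgp_num = section.getint("osd pool default pgp num")

    warnings = []
    if not 1 <= min_size <= size:
        warnings.append(f"min size {min_size} should be between 1 and size {size}")
    if pgp_num != pg_num:
        warnings.append(f"pgp num {pgp_num} differs from pg num {pg_num}")
    # ASSUMPTION: flag pg num outside 0.5x..2x of (OSDs * 100) / size.
    target = num_osds * pgs_per_osd / size
    if not 0.5 * target <= pg_num <= 2 * target:
        warnings.append(f"pg num {pg_num} is far from the ~{target:.0f} target")
    return warnings


print(check_pool_defaults(CEPH_CONF, num_osds=10))   # []
print(check_pool_defaults(CEPH_CONF, num_osds=100))  # one pg num warning
```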