Guided Image Filtering

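This post introduces the guided filter. The guided filter smooths an image while preserving edges. Within each local window, its output is a linear transform of a guidance image, so it uses the spatial structure of the neighborhood instead of averaging blindly. This makes it useful in image dehazing algorithms for refining the transmission map t, where it works well.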
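To make the edge-preserving claim concrete, here is a minimal 1D sketch in plain C++ without OpenCV. It is not the author's code. It applies the guided-filter formulas to a step signal that serves as its own guide, and compares the result with a plain box mean. The helpers `boxMean1D` and `guidedFilter1D` are hypothetical. Windows are clipped at the ends, and the box mean is computed naively (cost O(r) per sample) for readability.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Mean of v over [i - r, i + r], clipped at both ends (hypothetical helper).
std::vector<double> boxMean1D(const std::vector<double>& v, int r) {
    const int n = static_cast<int>(v.size());
    std::vector<double> out(n);
    for (int i = 0; i < n; ++i) {
        const int lo = std::max(0, i - r);
        const int hi = std::min(n - 1, i + r);
        double s = 0.0;
        for (int j = lo; j <= hi; ++j) s += v[j];
        out[i] = s / (hi - lo + 1);
    }
    return out;
}

// 1D guided filter: guide I, input p, radius r, regularization eps (hypothetical helper).
std::vector<double> guidedFilter1D(const std::vector<double>& I,
                                   const std::vector<double>& p, int r, double eps) {
    assert(I.size() == p.size());
    const std::size_t n = I.size();
    std::vector<double> Ip(n), II(n);
    for (std::size_t i = 0; i < n; ++i) {
        Ip[i] = I[i] * p[i];
        II[i] = I[i] * I[i];
    }
    const std::vector<double> mean_I = boxMean1D(I, r), mean_p = boxMean1D(p, r);
    const std::vector<double> mean_Ip = boxMean1D(Ip, r), mean_II = boxMean1D(II, r);

    // Per-window linear coefficients a_k, b_k
    std::vector<double> a(n), b(n);
    for (std::size_t k = 0; k < n; ++k) {
        const double cov_Ip = mean_Ip[k] - mean_I[k] * mean_p[k];
        const double var_I = mean_II[k] - mean_I[k] * mean_I[k];
        a[k] = cov_Ip / (var_I + eps);
        b[k] = mean_p[k] - a[k] * mean_I[k];
    }

    // Average the coefficients of all windows covering each sample, then apply them to I
    const std::vector<double> mean_a = boxMean1D(a, r), mean_b = boxMean1D(b, r);
    std::vector<double> q(n);
    for (std::size_t i = 0; i < n; ++i) q[i] = mean_a[i] * I[i] + mean_b[i];
    return q;
}
```

Consider a 20-sample step (0 for indices 0 to 9, 1 for 10 to 19) with r = 2 and eps = 0.01. The box mean at index 9 is 0.4, so the edge is smeared. The guided output at index 9 stays near 0 (about 0.02), and at index 10 it stays near 1, so the edge survives.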

The paper:

Guided Image Filtering

Kaiming He, Jian Sun, and Xiaoou Tang

Department of Information Engineering, The Chinese University of Hong Kong

Microsoft Research Asia

Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, China

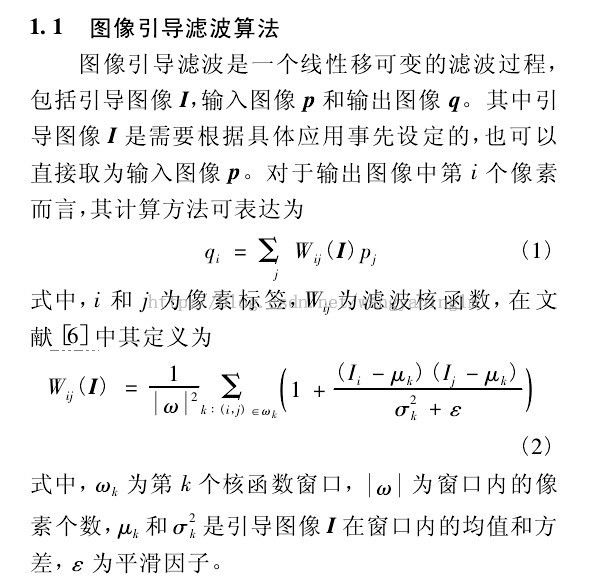

Principle:
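The guided filter assumes that, inside every window ω_k of radius r (containing |ω| pixels), the output q is a linear transform of the guide I. It chooses the coefficients (a_k, b_k) to minimize a regularized squared difference from the input p. Let μ_k and σ_k² be the mean and variance of I in ω_k, and let p̄_k be the mean of p there. The model and its solution are below. The labels (5), (6), and (8) are the paper's equation numbers that the code comments cite.

```latex
\begin{aligned}
q_i &= a_k I_i + b_k, \qquad \forall i \in \omega_k \\
E(a_k, b_k) &= \sum_{i \in \omega_k} \left( (a_k I_i + b_k - p_i)^2 + \epsilon\, a_k^2 \right) \\
a_k &= \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \epsilon} \qquad \text{(5)} \\
b_k &= \bar{p}_k - a_k \mu_k \qquad \text{(6)} \\
q_i &= \bar{a}_i I_i + \bar{b}_i, \qquad \bar{a}_i = \frac{1}{|\omega|}\sum_{k \in \omega_i} a_k, \quad \bar{b}_i = \frac{1}{|\omega|}\sum_{k \in \omega_i} b_k \qquad \text{(8)}
\end{aligned}
```

In the code below, the numerator of a_k is `cov_Ip`, σ_k² is `var_I`, and ā_i and b̄_i are `mean_a` and `mean_b`.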

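Code (OpenCV). `Ctry::OnTryTyr1` is an MFC message handler, and `getimage` and `guidedFilter2` are member functions of the same class `Ctry`, whose declaration is not shown: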

```cpp
#include "afxwin.h"   // MFC: the handler below belongs to the MFC class Ctry

#include <iostream>
#include <vector>

#include "opencv2/core/core.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"

using namespace cv;
using namespace std;
```

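```cpp
// MFC message handler: filters each color channel, then the grayscale image
void Ctry::OnTryTyr1()
{
    int r = 4;          // local window radius: the window is (2r+1) x (2r+1)
    double eps = 0.01;  // regularization parameter, for intensities in [0, 1]

    Mat image_src = imread("C:\\Users\\Administrator\\Desktop\\38.jpg", IMREAD_COLOR);
    if (image_src.empty())
        return;  // file missing or unreadable

    // Color image: filter each channel separately, using the channel itself as the guide
    vector<Mat> bgr_src, bgr_dst;
    split(image_src, bgr_src);
    for (int i = 0; i < 3; i++)
    {
        Mat I = getimage(bgr_src[i]);  // CV_64F in [0, 1]
        Mat p = I.clone();             // input = guide: edge-preserving smoothing
        bgr_dst.push_back(guidedFilter2(I, p, r, eps));
    }
    Mat dst_color, dst_color_8u;
    merge(bgr_dst, dst_color);
    dst_color.convertTo(dst_color_8u, CV_8U, 255.0);  // back to 8-bit for JPEG
    imwrite("C:\\Users\\Administrator\\Desktop\\result2.jpg", dst_color_8u);

    // Grayscale image
    Mat image_gray;
    cvtColor(image_src, image_gray, COLOR_BGR2GRAY);
    Mat I = getimage(image_gray);
    Mat p = I.clone();

    // Time only the filter itself, not the conversion or file I/O
    double time2 = (double)getTickCount();
    Mat Output = guidedFilter2(I, p, r, eps);
    time2 = 1000 * ((double)getTickCount() - time2) / getTickFrequency();
    cout << endl << "Time of guided filter2 for runs: " << time2 << " milliseconds." << endl;

    imshow("Guided filter result", Output);  // imshow multiplies CV_64F values by 255
    Mat tt;
    Output.convertTo(tt, CV_8U, 255.0);
    imwrite("C:\\Users\\Administrator\\Desktop\\mert.JPG", tt);

    waitKey(0);
}
```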
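In the handler above, `p` is a clone of `I`, so each channel guides itself. With p = I, the covariance in Eqn. (5) of the paper becomes the variance of I, and Eqns. (5) and (6) reduce to the form below, which shows where the edge preservation comes from.

```latex
a_k = \frac{\sigma_k^2}{\sigma_k^2 + \epsilon}, \qquad b_k = (1 - a_k)\,\mu_k
```

Where σ_k² ≫ ε (an edge or strong texture), a_k ≈ 1 and b_k ≈ 0, so q ≈ I and the edge is kept. Where σ_k² ≪ ε (a flat patch), a_k ≈ 0 and b_k ≈ μ_k, so q is the local mean. With eps = 0.01 on [0, 1] data, the dividing line is a local standard deviation of about √0.01 = 0.1.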

```cpp
// Convert an 8-bit single-channel image to CV_64F, scaled to [0, 1]
Mat Ctry::getimage(Mat &a)
{
    Mat I;
    a.convertTo(I, CV_64FC1, 1.0 / 255.0);
    return I;
}
```
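The rescaling in `getimage` matters because ε is compared with a variance, so it is in squared intensity units. `eps = 0.01` on [0, 1] data corresponds to 0.01 × 255² = 650.25 on raw 0 to 255 data. Here is a minimal plain-C++ sketch of both conversions. The names `toUnitRange` and `epsFor8BitScale` are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Same scaling as getimage: 8-bit values -> doubles in [0, 1] (hypothetical helper).
std::vector<double> toUnitRange(const std::vector<unsigned char>& src) {
    std::vector<double> dst;
    dst.reserve(src.size());
    for (unsigned char v : src) dst.push_back(v * (1.0 / 255.0));
    return dst;
}

// eps is compared with a variance, so it scales with the square of the
// intensity range (hypothetical helper).
double epsFor8BitScale(double epsUnit) {
    assert(epsUnit > 0.0);
    return epsUnit * 255.0 * 255.0;
}
```

If you skip the rescaling and filter raw 0 to 255 data, passing `epsFor8BitScale(0.01)` as eps should give the same smoothing strength as `eps = 0.01` on normalized data.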

```cpp
// Unnormalized sum over the (2r+1) x (2r+1) window around each pixel, with zero padding,
// so boxSum(ones) counts the in-image pixels of each window (like MATLAB's boxfilter).
static cv::Mat boxSum(const cv::Mat& src, int r)
{
    cv::Mat dst;
    cv::boxFilter(src, dst, CV_64F, cv::Size(2 * r + 1, 2 * r + 1),
                  cv::Point(-1, -1), false, cv::BORDER_CONSTANT);
    return dst;
}

Mat Ctry::guidedFilter2(cv::Mat I, cv::Mat p, int r, double eps)
{
    /*
    % GUIDEDFILTER   O(1) time implementation of guided filter.
    %
    %   - guidance image: I (should be a gray-scale/single channel image)
    %   - filtering input image: p (should be a gray-scale/single channel image)
    %   - local window radius: r
    %   - regularization parameter: eps
    */
    cv::Mat _I, _p;
    I.convertTo(_I, CV_64FC1);
    p.convertTo(_p, CV_64FC1);
    I = _I;
    p = _p;

    //[hei, wid] = size(I);
    int hei = I.rows;
    int wid = I.cols;

    //N = boxfilter(ones(hei, wid), r); % the size of each local patch; N=(2r+1)^2 except for boundary pixels.
    cv::Mat N = boxSum(cv::Mat::ones(hei, wid, CV_64FC1), r);

    //mean_I = boxfilter(I, r) ./ N;
    cv::Mat mean_I = boxSum(I, r) / N;
    //mean_p = boxfilter(p, r) ./ N;
    cv::Mat mean_p = boxSum(p, r) / N;
    //mean_Ip = boxfilter(I.*p, r) ./ N;
    cv::Mat mean_Ip = boxSum(I.mul(p), r) / N;
    //cov_Ip = mean_Ip - mean_I .* mean_p; % this is the covariance of (I, p) in each local patch.
    cv::Mat cov_Ip = mean_Ip - mean_I.mul(mean_p);

    //mean_II = boxfilter(I.*I, r) ./ N;
    cv::Mat mean_II = boxSum(I.mul(I), r) / N;
    //var_I = mean_II - mean_I .* mean_I;
    cv::Mat var_I = mean_II - mean_I.mul(mean_I);

    //a = cov_Ip ./ (var_I + eps); % Eqn. (5) in the paper;
    cv::Mat a = cov_Ip / (var_I + eps);   // element-wise division
    //b = mean_p - a .* mean_I; % Eqn. (6) in the paper;
    cv::Mat b = mean_p - a.mul(mean_I);

    //mean_a = boxfilter(a, r) ./ N;
    cv::Mat mean_a = boxSum(a, r) / N;
    //mean_b = boxfilter(b, r) ./ N;
    cv::Mat mean_b = boxSum(b, r) / N;

    //q = mean_a .* I + mean_b; % Eqn. (8) in the paper;
    cv::Mat q = mean_a.mul(I) + mean_b;
    return q;
}
```
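The header comment calls this an O(1) implementation. The cost per pixel does not depend on r, because every mean is a box filter. `cv::boxFilter` provides that in OpenCV, and the MATLAB `boxfilter` the comments refer to uses cumulative sums. Below is a dependency-free sketch of the same idea using a summed-area table (integral image), with windows clipped at the borders so that the box sum of an all-ones image gives N. The function `boxSumIntegral` and its row-major `std::vector<double>` layout are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Sum of img over the (2r+1) x (2r+1) window around each pixel, clipped to the image.
// img is row-major with h rows and w columns. Cost per pixel is O(1), independent of r.
std::vector<double> boxSumIntegral(const std::vector<double>& img, int h, int w, int r) {
    assert(static_cast<int>(img.size()) == h * w && r >= 0);
    const int W = w + 1;
    // S[y * W + x] = sum of img over rows [0, y) and columns [0, x)
    std::vector<double> S((h + 1) * W, 0.0);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            S[(y + 1) * W + (x + 1)] = img[y * w + x]
                + S[y * W + (x + 1)] + S[(y + 1) * W + x] - S[y * W + x];

    std::vector<double> out(h * w);
    for (int y = 0; y < h; ++y) {
        const int y0 = std::max(0, y - r), y1 = std::min(h - 1, y + r);
        for (int x = 0; x < w; ++x) {
            const int x0 = std::max(0, x - r), x1 = std::min(w - 1, x + r);
            // Four lookups give the sum over rows [y0, y1] and columns [x0, x1]
            out[y * w + x] = S[(y1 + 1) * W + (x1 + 1)] - S[y0 * W + (x1 + 1)]
                           - S[(y1 + 1) * W + x0] + S[y0 * W + x0];
        }
    }
    return out;
}
```

On a 5 × 5 all-ones image with r = 1, this gives N = 4 at a corner, 6 on an edge, and 9 in the interior. Each mean in `guidedFilter2` is then the window sum divided element-wise by N, matching the `./ N` in the MATLAB comments.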

Results: