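Machine Learning in Action: CART

The regression methods introduced in the previous section mainly suit linear problems. As the data set grows and the number of features increases, those methods become much less practical. This section introduces CART (Classification And Regression Trees) applied to regression, and covers two kinds of tree: regression trees and model trees.

When studying CART, it helps to review the decision trees covered earlier: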

http://blog.csdn.net/sunnyxiaohu/article/details/50826016

I. Regression trees

Each leaf node holds a single value.

Algorithm principle:
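The split criterion that the code in this section implements can be written down as follows. This is a sketch reconstructed from regLeaf, regErr and binSplitDataSet, not a formula given in the post; the symbols j (feature index), s (split value), R_1 and R_2 (the two subsets) are notation introduced here.

```latex
R_1(j,s) = \{\, i : x_{ij} > s \,\}, \qquad R_2(j,s) = \{\, i : x_{ij} \le s \,\}

\mathrm{Err}(R) = \sum_{i \in R} \bigl(y_i - \bar{y}_R\bigr)^2 = |R| \cdot \mathrm{Var}(y_R),
\qquad \bar{y}_R = \frac{1}{|R|} \sum_{i \in R} y_i

(j^*, s^*) = \arg\min_{j,\,s} \bigl[\, \mathrm{Err}(R_1(j,s)) + \mathrm{Err}(R_2(j,s)) \,\bigr]
```

Here Var is the population variance (what NumPy's var computes by default), so variance times sample count equals the sum of squared deviations. A leaf built on subset R predicts the mean of the targets in R.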

Main implementation:

1. Splitting the data set on a feature and a value

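    from numpy import *  # the code in this post relies on NumPy's star import

    def binSplitDataSet(dataSet, feature, value):
        # mat0 keeps every row whose feature is > value, mat1 keeps the rest
        mat0 = dataSet[nonzero(dataSet[:,feature] > value)[0],:]
        mat1 = dataSet[nonzero(dataSet[:,feature] <= value)[0],:]
        return mat0, mat1

2. Building a leaf node and computing the corresponding total variance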

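    def regLeaf(dataSet):
        # returns the value used for each leaf: the mean of the target column
        return mean(dataSet[:,-1])

    def regErr(dataSet):
        # variance times sample count = total squared error of the targets
        return var(dataSet[:,-1]) * shape(dataSet)[0]

3. Choosing the best feature and value to split on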

Algorithm principle:
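The search can be illustrated on a single feature before reading the full function. The following is a minimal sketch using plain NumPy arrays; the helper names total_sq_error and best_split_1d and the toy data are made up for illustration, and the "> value" / "<= value" convention is copied from binSplitDataSet.

```python
import numpy as np

def total_sq_error(y):
    # Sum of squared deviations from the mean, i.e. var(y) * len(y)
    return float(np.var(y) * len(y))

def best_split_1d(x, y, min_leaf=1):
    # Start from "no split": threshold None, error of the unsplit targets
    best_threshold, best_err = None, total_sq_error(y)
    for s in np.unique(x):
        upper = y[x > s]     # rows that binSplitDataSet would put in mat0
        lower = y[x <= s]    # rows that binSplitDataSet would put in mat1
        if len(upper) < min_leaf or len(lower) < min_leaf:
            continue         # skip splits that leave a branch too small
        err = total_sq_error(upper) + total_sq_error(lower)
        if err < best_err:
            best_threshold, best_err = float(s), err
    return best_threshold, best_err

# Two well-separated groups of points
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([1.0, 1.2, 0.8, 5.0, 5.2, 4.8])
threshold, err = best_split_1d(x, y)
print(threshold, err)
```

On this toy data the chosen threshold is 3.0, the largest x value of the lower group, because every other candidate puts points from both groups into the same branch.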

    def chooseBestSplit(dataSet, leafType=regLeaf, errType=regErr, ops=(1,4)):
        # tolS: minimum error reduction for a split; tolN: minimum rows per branch
        tolS = ops[0]; tolN = ops[1]
        # if all the target variables are the same value: quit and return value
        if len(set(dataSet[:,-1].T.tolist()[0])) == 1: # exit cond 1
            return None, leafType(dataSet)
        m,n = shape(dataSet)
        # the choice of the best feature is driven by reduction in RSS error from mean
        S = errType(dataSet)
        bestS = inf; bestIndex = 0; bestValue = 0
        for featIndex in range(n-1):
            # flatten the column to plain floats so that set() can hash the values
            for splitVal in set(dataSet[:,featIndex].T.tolist()[0]):
                mat0, mat1 = binSplitDataSet(dataSet, featIndex, splitVal)
                if (shape(mat0)[0] < tolN) or (shape(mat1)[0] < tolN): continue
                newS = errType(mat0) + errType(mat1)
                if newS < bestS:
                    bestIndex = featIndex
                    bestValue = splitVal
                    bestS = newS
        # if the decrease (S-bestS) is less than a threshold don't do the split
        if (S - bestS) < tolS:
            return None, leafType(dataSet) # exit cond 2
        mat0, mat1 = binSplitDataSet(dataSet, bestIndex, bestValue)
        if (shape(mat0)[0] < tolN) or (shape(mat1)[0] < tolN): # exit cond 3
            return None, leafType(dataSet)
        # returns the best feature to split on and the value used for that split
        return bestIndex, bestValue

4. Building the decision tree

    def createTree(dataSet, leafType=regLeaf, errType=regErr, ops=(1,4)):
        # assume dataSet is a NumPy matrix so that array filtering works
        feat, val = chooseBestSplit(dataSet, leafType, errType, ops) # choose the best split
        if feat is None: return val # if the splitting hit a stop condition return val
        retTree = {}
        retTree['spInd'] = feat
        retTree['spVal'] = val
        lSet, rSet = binSplitDataSet(dataSet, feat, val)
        retTree['left'] = createTree(lSet, leafType, errType, ops)
        retTree['right'] = createTree(rSet, leafType, errType, ops)
        return retTree

II. Model trees

Each leaf node holds a linear function.
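The leaf functions in this part call linearSolve, which is not listed in this post. A minimal sketch of what such a helper could look like is given here, assuming ordinary least squares via the normal equations with an intercept column; the body is an assumption, and only the name and the (ws, X, Y) return shape are taken from how modelLeaf and modelErr use it.

```python
import numpy as np

def linearSolve(dataSet):
    # Assumed layout: feature columns first, target in the last column
    dataSet = np.asmatrix(dataSet, dtype=float)
    m, n = dataSet.shape
    X = np.asmatrix(np.ones((m, n)))   # column 0 stays 1.0 for the intercept
    X[:, 1:n] = dataSet[:, 0:n-1]      # remaining columns hold the features
    Y = dataSet[:, -1]
    xTx = X.T * X
    if np.linalg.det(xTx) == 0.0:
        # Too few or degenerate rows in this branch to fit a line
        raise ValueError("X^T X is singular; try a larger tolN")
    ws = xTx.I * (X.T * Y)             # normal-equation solution, shape (n, 1)
    return ws, X, Y

# Points lying exactly on y = 2 + 3x
data = [[0.0, 2.0], [1.0, 5.0], [2.0, 8.0], [3.0, 11.0]]
ws, X, Y = linearSolve(data)
print(ws)
```

Returning X and Y alongside ws lets an error function compute X * ws without rebuilding the design matrix, which is how modelErr uses the result.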

1. Building a leaf node and computing the corresponding error

    def modelLeaf(dataSet):
        # create a linear model and return its coefficients
        ws,X,Y = linearSolve(dataSet)
        return ws

    def modelErr(dataSet):
        # squared error of the linear fit on this subset
        ws,X,Y = linearSolve(dataSet)
        yHat = X * ws
        return sum(power(Y - yHat,2))

2. Predicting with a regression tree or a model tree

    def regTreeEval(model, inDat):
        # a regression-tree leaf already is the predicted value
        return float(model)

    def modelTreeEval(model, inDat):
        # prepend a constant 1 for the intercept, then apply the leaf's weights
        n = shape(inDat)[1]
        X = asmatrix(ones((1,n+1))) # asmatrix is the current NumPy spelling of mat
        X[:,1:n+1]=inDat
        return float((X*model)[0,0])

    def treeForeCast(tree, inData, modelEval=regTreeEval):
        if not isTree(tree): return modelEval(tree, inData)
        # inData is a 1 x n matrix: row 0, column of the split feature
        if inData[0, tree['spInd']] > tree['spVal']:
            if isTree(tree['left']): return treeForeCast(tree['left'], inData, modelEval)
            else: return modelEval(tree['left'], inData)
        else:
            if isTree(tree['right']): return treeForeCast(tree['right'], inData, modelEval)
            else: return modelEval(tree['right'], inData)

    def createForeCast(tree, testData, modelEval=regTreeEval):
        # predict every test sample and collect the results in an m x 1 matrix
        m=len(testData)
        yHat = asmatrix(zeros((m,1)))
        for i in range(m):
            yHat[i,0] = treeForeCast(tree, asmatrix(testData[i]), modelEval)
        return yHat

III. Pruning

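Pruning guards against overfitting.

1. Pre-pruning

Pre-pruning is very sensitive to the tuning parameters (the tolS and tolN values passed in ops).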
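One way to see this sensitivity is to keep tolS fixed and rescale the targets: the squared error grows with the square of the scale, so a threshold that stopped splitting before no longer does. The sketch below is self-contained and uses plain arrays with one feature; count_leaves, sq_err and the synthetic step data are hypothetical and only mimic the stopping rules of chooseBestSplit.

```python
import numpy as np

def sq_err(y):
    # Total squared error around the mean (variance times sample count)
    return float(np.var(y) * len(y))

def count_leaves(x, y, tolS, tolN):
    # Number of leaves a one-feature regression tree would end up with
    if len(np.unique(y)) == 1:
        return 1
    best_s, best_err = None, np.inf
    for s in np.unique(x):
        hi, lo = y[x > s], y[x <= s]
        if len(hi) < tolN or len(lo) < tolN:
            continue                      # branch too small
        err = sq_err(hi) + sq_err(lo)
        if err < best_err:
            best_s, best_err = s, err
    if best_s is None or sq_err(y) - best_err < tolS:
        return 1                          # error reduction too small: stop here
    mask = x > best_s
    return (count_leaves(x[mask], y[mask], tolS, tolN)
            + count_leaves(x[~mask], y[~mask], tolS, tolN))

# A noisy step function: one real change point at x = 0.5
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, 200)
y = np.where(x > 0.5, 1.0, 0.0) + rng.normal(0.0, 0.05, 200)

leaves_orig = count_leaves(x, y, tolS=1.0, tolN=4)
leaves_scaled = count_leaves(x, y * 100, tolS=1.0, tolN=4)  # same shape, y scaled by 100
print(leaves_orig, leaves_scaled)
```

With the targets multiplied by 100, the same tolS lets through splits that only fit noise, so the second tree has more leaves. In practice tolS has to be retuned whenever the magnitude of the target changes.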

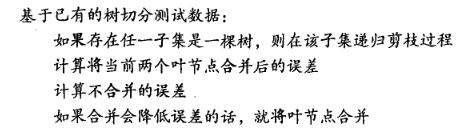

2. Post-pruning

Algorithm principle:
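The core of post-pruning is a comparison on held-out test data: if replacing two sibling leaves by their average lowers the squared error, merge them. A minimal sketch of that single decision follows, assuming plain NumPy arrays; should_merge and the toy numbers are made up for illustration.

```python
import numpy as np

def should_merge(left_val, right_val, y_left, y_right):
    # Test error when the two leaves are kept separate
    err_no_merge = (np.sum((y_left - left_val) ** 2)
                    + np.sum((y_right - right_val) ** 2))
    # Test error when both leaves are replaced by their average
    merged = (left_val + right_val) / 2.0
    y_all = np.concatenate([y_left, y_right])
    err_merge = np.sum((y_all - merged) ** 2)
    return bool(err_merge < err_no_merge), float(merged)

# Leaves 1.0 and 1.2 separate test targets that are really all about 1.1
merge_a, value_a = should_merge(1.0, 1.2,
                                np.array([1.1, 1.1]), np.array([1.1, 1.1]))

# Leaves 0.0 and 10.0 match two clearly different groups of test targets
merge_b, value_b = should_merge(0.0, 10.0,
                                np.array([0.1, -0.1]), np.array([9.9, 10.1]))
print(merge_a, merge_b)
```

The first pair is merged because the split does not hold up on the test data; the second pair is kept because merging would increase the test error.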

Implementation:

    def isTree(obj):
        # subtrees are stored as dicts, leaves as plain values
        return (type(obj).__name__=='dict')

    def getMean(tree):
        # collapse a subtree into the average of its two branches
        if isTree(tree['right']): tree['right'] = getMean(tree['right'])
        if isTree(tree['left']): tree['left'] = getMean(tree['left'])
        return (tree['left']+tree['right'])/2.0

    def prune(tree, testData):
        if shape(testData)[0] == 0: return getMean(tree) # if we have no test data collapse the tree
        if (isTree(tree['right']) or isTree(tree['left'])): # if either branch is a subtree, prune it first
            lSet, rSet = binSplitDataSet(testData, tree['spInd'], tree['spVal'])
            if isTree(tree['left']): tree['left'] = prune(tree['left'], lSet)
            if isTree(tree['right']): tree['right'] = prune(tree['right'], rSet)
        # if they are now both leaves, see if we can merge them
        if not isTree(tree['left']) and not isTree(tree['right']):
            lSet, rSet = binSplitDataSet(testData, tree['spInd'], tree['spVal'])
            errorNoMerge = sum(power(lSet[:,-1] - tree['left'],2)) +\
                sum(power(rSet[:,-1] - tree['right'],2))
            treeMean = (tree['left']+tree['right'])/2.0
            errorMerge = sum(power(testData[:,-1] - treeMean,2))
            if errorMerge < errorNoMerge:
                print("merging")
                return treeMean
            else: return tree
        else: return tree

IV. Building a GUI with Python's Tkinter library

    from numpy import *
    from tkinter import *   # this module is named Tkinter on Python 2
    import regTrees

    import matplotlib
    matplotlib.use('TkAgg')
    from matplotlib.backends.backend_tkagg import FigureCanvasTkAgg
    from matplotlib.figure import Figure

    def reDraw(tolS,tolN):
        reDraw.f.clf() # clear the figure
        reDraw.a = reDraw.f.add_subplot(111)
        if chkBtnVar.get(): # the "Model Tree" box is checked
            if tolN < 2: tolN = 2
            myTree=regTrees.createTree(reDraw.rawDat, regTrees.modelLeaf,\
                                       regTrees.modelErr, (tolS,tolN))
            yHat = regTrees.createForeCast(myTree, reDraw.testDat, \
                                           regTrees.modelTreeEval)
        else:
            myTree=regTrees.createTree(reDraw.rawDat, ops=(tolS,tolN))
            yHat = regTrees.createForeCast(myTree, reDraw.testDat)
        sortInx = argsort(reDraw.rawDat,0).A[:,0] # row order by the first column
        reDraw.a.scatter(reDraw.rawDat[sortInx][:,0].A1, reDraw.rawDat[sortInx][:,1].A1, s=5) # use scatter for data set
        reDraw.a.plot(reDraw.testDat, yHat.A1, linewidth=2.0) # use plot for yHat
        reDraw.canvas.draw() # this method was called show() in old matplotlib releases

    def getInputs():
        try: tolN = int(tolNentry.get())
        except ValueError:
            tolN = 10
            print("enter Integer for tolN")
            tolNentry.delete(0, END)
            tolNentry.insert(0,'10')
        try: tolS = float(tolSentry.get())
        except ValueError:
            tolS = 1.0
            print("enter Float for tolS")
            tolSentry.delete(0, END)
            tolSentry.insert(0,'1.0')
        return tolN,tolS

    def drawNewTree():
        tolN,tolS = getInputs() # get values from Entry boxes
        reDraw(tolS,tolN)

    root=Tk()

    reDraw.f = Figure(figsize=(5,4), dpi=100) # create canvas
    reDraw.canvas = FigureCanvasTkAgg(reDraw.f, master=root)
    reDraw.canvas.draw()
    reDraw.canvas.get_tk_widget().grid(row=0, columnspan=3)

    Label(root, text="tolN").grid(row=1, column=0)
    tolNentry = Entry(root)
    tolNentry.grid(row=1, column=1)
    tolNentry.insert(0,'10')
    Label(root, text="tolS").grid(row=2, column=0)
    tolSentry = Entry(root)
    tolSentry.grid(row=2, column=1)
    tolSentry.insert(0,'1.0')
    Button(root, text="ReDraw", command=drawNewTree).grid(row=1, column=2, rowspan=3)
    chkBtnVar = IntVar()
    chkBtn = Checkbutton(root, text="Model Tree", variable = chkBtnVar)
    chkBtn.grid(row=3, column=0, columnspan=2)

    reDraw.rawDat = asmatrix(regTrees.loadDataSet('sine.txt'))
    # evaluate the tree on an evenly spaced grid over the range of the first column
    reDraw.testDat = arange(reDraw.rawDat[:,0].min(),reDraw.rawDat[:,0].max(),0.01)
    reDraw(1.0, 10)

    root.mainloop()