high_memory mapping, part 3

The noncontiguous memory area:

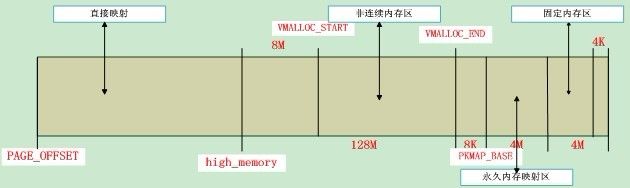

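Noncontiguous memory allocation maps page frames whose physical addresses are not contiguous into a contiguous range of linear addresses. It is mainly used for large allocations. Its main advantage is that it avoids external fragmentation. Its drawbacks are that the kernel page tables have to be modified, and that access is slower than for physically contiguous page frames.

The linear address space used for noncontiguous allocation runs from VMALLOC_START to VMALLOC_END. Each time the kernel allocates noncontiguous memory through one of the vmalloc-family functions, it allocates a vm_struct to describe the resulting vmalloc area. Two neighboring vmalloc areas are separated by a gap of at least one page frame, i.e. PAGE_SIZE. The figure above shows the layout of the noncontiguous allocation region.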

Source: http://blog.csdn.net/vanbreaker/article/details/7591844

#define VMALLOC_START ((unsigned long)high_memory + VMALLOC_OFFSET)

- #define VMALLOC_OFFSET (8 * 1024 * 1024), i.e. 8 MB

void __init initmem_init(unsigned long start_pfn,

unsigned long end_pfn)

{

highstart_pfn = highend_pfn = max_pfn;

if (max_pfn > max_low_pfn)

highstart_pfn = max_low_pfn;

/******************/

num_physpages = highend_pfn;

/* linear address at which high memory starts, i.e. the end of the directly mapped low memory */

high_memory = (void *) __va(highstart_pfn * PAGE_SIZE - 1) + 1;

/**************************/

}
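To make the two definitions above concrete, here is a small user-space sketch of the arithmetic. It assumes a classic 32-bit x86 layout that the source does not spell out: 4 KB pages, PAGE_OFFSET at 0xC0000000, and 896 MB of directly mapped low memory. The helper names toy_va, toy_high_memory and toy_vmalloc_start are hypothetical and exist only for this illustration.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT      12
#define PAGE_SIZE       (UINT32_C(1) << PAGE_SHIFT)
#define PAGE_OFFSET     UINT32_C(0xC0000000)          /* assumed 3G/1G split */
#define VMALLOC_OFFSET  (UINT32_C(8) * 1024 * 1024)   /* 8 MB gap, as in the text */

/* Stand-in for __va(): physical address -> directly mapped linear address. */
static uint32_t toy_va(uint32_t phys)
{
    return phys + PAGE_OFFSET;
}

/* Mirrors: high_memory = (void *) __va(highstart_pfn * PAGE_SIZE - 1) + 1; */
uint32_t toy_high_memory(uint32_t highstart_pfn)
{
    assert(highstart_pfn != 0);
    return toy_va(highstart_pfn * PAGE_SIZE - 1) + 1;
}

/* Mirrors the simplified VMALLOC_START definition quoted above. */
uint32_t toy_vmalloc_start(uint32_t highstart_pfn)
{
    return toy_high_memory(highstart_pfn) + VMALLOC_OFFSET;
}
```

With 896 MB of low memory, highstart_pfn is 0x38000, so toy_high_memory() yields 0xF8000000 and toy_vmalloc_start() yields 0xF8800000. The 8 MB gap leaves an unmapped hole between the direct mapping and the first vmalloc area.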

Descriptors for noncontiguous memory areas:

struct vm_struct {

struct vm_struct *next;

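void *addr; /* linear address of the first memory unit of the area */

unsigned long size; /* size of the area, including the guard page */

unsigned long flags; /* type of memory mapped by the area */

struct page **pages; /* array of pointers to page descriptors */

unsigned int nr_pages; /* number of page frames backing the area */

phys_addr_t phys_addr; /* 0 unless the area was created to map the I/O shared memory of a hardware device */

const void *caller; /* return address of the caller of the vmalloc-family function */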

};

struct vmap_area {

unsigned long va_start;

unsigned long va_end;

unsigned long flags;

struct rb_node rb_node; /* address sorted rbtree */

struct list_head list; /* address sorted list */

struct list_head purge_list; /* "lazy purge" list */

void *private;

struct rcu_head rcu_head;

};

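The areas are linked together through the next field and, to keep lookups simple, are kept sorted by address. For safety, each area is separated from the next by at least one page.

Noncontiguous memory areas are initialized in start_kernel()->mm_init()->vmalloc_init():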
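The address-ordered list can be sketched in user space as follows. This is only an illustration of the ordering rule, not the kernel's insert_vmalloc_vmlist(): struct toy_area and toy_insert_sorted() are hypothetical names, and locking is left out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, trimmed-down stand-in for struct vm_struct. */
struct toy_area {
    struct toy_area *next;
    unsigned long addr;   /* start of the area */
    unsigned long size;   /* size including the guard page */
};

/* Insert area into the list at *head, keeping ascending address order. */
void toy_insert_sorted(struct toy_area **head, struct toy_area *area)
{
    struct toy_area **p;

    assert(head != NULL && area != NULL);

    /* Walk until the next element starts at or above the new area. */
    for (p = head; *p != NULL; p = &(*p)->next) {
        if ((*p)->addr >= area->addr)
            break;
    }
    area->next = *p;
    *p = area;
}
```

Using a pointer to the previous next field avoids a special case for inserting at the head of the list.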

void __init vmalloc_init(void)

{

struct vmap_area *va;

struct vm_struct *tmp;

int i;

for_each_possible_cpu(i) {

struct vmap_block_queue *vbq;

vbq = &per_cpu(vmap_block_queue, i);

spin_lock_init(&vbq->lock);

INIT_LIST_HEAD(&vbq->free);

}

/* Import existing vmlist entries. */

for (tmp = vmlist; tmp; tmp = tmp->next) { /* vmlist is the list head; import the entries already on vmlist */

va = kzalloc(sizeof(struct vmap_area), GFP_NOWAIT);

va->flags = VM_VM_AREA;

va->va_start = (unsigned long)tmp->addr;

va->va_end = va->va_start + tmp->size;

va->vm = tmp;

__insert_vmap_area(va);

}

vmap_area_pcpu_hole = VMALLOC_END;

vmap_initialized = true; /* initialization complete */

}

Allocating a noncontiguous memory area:

/**

* __vmalloc_node_range - allocate virtually contiguous memory

* @size: allocation size

* @align: desired alignment

* @start: vm area range start

* @end: vm area range end

* @gfp_mask: flags for the page level allocator

* @prot: protection mask for the allocated pages

* @node: node to use for allocation or -1

* @caller: caller's return address

*

* Allocate enough pages to cover @size from the page level

* allocator with @gfp_mask flags. Map them into contiguous

* kernel virtual space, using a pagetable protection of @prot.

*/

void *__vmalloc_node_range(unsigned long size, unsigned long align,

unsigned long start, unsigned long end, gfp_t gfp_mask,

pgprot_t prot, int node, const void *caller)

{

struct vm_struct *area;

void *addr;

unsigned long real_size = size;

size = PAGE_ALIGN(size);

if (!size || (size >> PAGE_SHIFT) > totalram_pages)

goto fail;

/*

 * Find a free range between the linear addresses VMALLOC_START and

 * VMALLOC_END, then allocate and set up a vm_struct for it.

 */

area = __get_vm_area_node(size, align, VM_ALLOC | VM_UNLIST,

start, end, node, gfp_mask, caller);

if (!area)

goto fail;

/* Core of vmalloc: allocate the pages and build the mapping. map_vm_area() sets up the page tables by filling in the pgd, pud, pmd and pte levels in turn. */

addr = __vmalloc_area_node(area, gfp_mask, prot, node, caller);

if (!addr)

return NULL;

/*

* In this function, newly allocated vm_struct is not added

* to vmlist at __get_vm_area_node(). so, it is added here.

*/ /* insert the vm_struct into the vmlist list */

insert_vmalloc_vmlist(area);

/*

* A ref_count = 3 is needed because the vm_struct and vmap_area

* structures allocated in the __get_vm_area_node() function contain

* references to the virtual address of the vmalloc'ed block.

*/

kmemleak_alloc(addr, real_size, 3, gfp_mask);

return addr;

fail:

warn_alloc_failed(gfp_mask, 0,

"vmalloc: allocation failure: %lu bytes\n",

real_size);

return NULL;

}

/**

* __vmalloc_node - allocate virtually contiguous memory

* @size: allocation size

* @align: desired alignment

* @gfp_mask: flags for the page level allocator

* @prot: protection mask for the allocated pages

* @node: node to use for allocation or -1

* @caller: caller's return address

*

* Allocate enough pages to cover @size from the page level

* allocator with @gfp_mask flags. Map them into contiguous

* kernel virtual space, using a pagetable protection of @prot.

*/

static void *__vmalloc_node(unsigned long size, unsigned long align,

gfp_t gfp_mask, pgprot_t prot,

int node, const void *caller)

{

return __vmalloc_node_range(size, align, VMALLOC_START, VMALLOC_END,

gfp_mask, prot, node, caller);

}

void *__vmalloc(unsigned long size, gfp_t gfp_mask, pgprot_t prot)

{

return __vmalloc_node(size, 1, gfp_mask, prot, -1,

__builtin_return_address(0));

}

Finding and reserving a range of linear addresses:

static struct vm_struct *__get_vm_area_node(unsigned long size,

unsigned long align, unsigned long flags, unsigned long start,

unsigned long end, int node, gfp_t gfp_mask, const void *caller)

{

struct vmap_area *va;

struct vm_struct *area;

BUG_ON(in_interrupt());

if (flags & VM_IOREMAP) {

int bit = fls(size);

if (bit > IOREMAP_MAX_ORDER)

bit = IOREMAP_MAX_ORDER;

else if (bit < PAGE_SHIFT)

bit = PAGE_SHIFT;

align = 1ul << bit;

}

size = PAGE_ALIGN(size);

if (unlikely(!size))

return NULL;

area = kzalloc_node(sizeof(*area), gfp_mask & GFP_RECLAIM_MASK, node); /* allocate a vm_struct */

if (unlikely(!area))

return NULL;

/*

* We always allocate a guard page.

*/

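size += PAGE_SIZE; /* add the guard gap of one page (4 KB) */

/* allocate a vmap_area, find a linear range large enough for size, and insert the vmap_area into the red-black tree */

va = alloc_vmap_area(size, align, start, end, node, gfp_mask); /* see the process address space analysis for details */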

if (IS_ERR(va)) {

kfree(area);

return NULL;

}

/*

* When this function is called from __vmalloc_node_range,

* we do not add vm_struct to vmlist here to avoid

* accessing uninitialized members of vm_struct such as

* pages and nr_pages fields. They will be set later.

* To distinguish it from others, we use a VM_UNLIST flag.

*/

if (flags & VM_UNLIST)

setup_vmalloc_vm(area, va, flags, caller);

else

insert_vmalloc_vm(area, va, flags, caller);

return area;

}
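The VM_IOREMAP branch at the top of __get_vm_area_node() derives an alignment from the request size. The sketch below reproduces just that computation in user space. toy_fls() and toy_ioremap_align() are hypothetical names, and the value used for IOREMAP_MAX_ORDER (7 + PAGE_SHIFT, i.e. 128 pages) is an assumption about the default, since the source does not show it.

```c
#include <assert.h>

#define PAGE_SHIFT         12
#define IOREMAP_MAX_ORDER  (7 + PAGE_SHIFT)   /* assumed default: 128 pages */

/* 1-based position of the highest set bit, 0 for x == 0 (same contract as fls()). */
static int toy_fls(unsigned long x)
{
    int bit = 0;

    while (x) {
        bit++;
        x >>= 1;
    }
    return bit;
}

/* Alignment chosen for an ioremap-style request of the given size. */
unsigned long toy_ioremap_align(unsigned long size)
{
    int bit = toy_fls(size);

    if (bit > IOREMAP_MAX_ORDER)
        bit = IOREMAP_MAX_ORDER;
    else if (bit < PAGE_SHIFT)
        bit = PAGE_SHIFT;

    /* After clamping, the alignment lies between one page and 128 pages. */
    assert(bit >= PAGE_SHIFT && bit <= IOREMAP_MAX_ORDER);
    return 1UL << bit;
}
```

Because fls() is 1-based, a size of exactly 4096 gives bit 13 and therefore an 8 KB alignment, while anything smaller than 2 KB is clamped up to a single page.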

/*

 * Allocate a region of KVA of the specified size and alignment, within the

* vstart and vend.

*/

static struct vmap_area *alloc_vmap_area(unsigned long size,

unsigned long align,

unsigned long vstart, unsigned long vend,

int node, gfp_t gfp_mask)

{

struct vmap_area *va;

struct rb_node *n;

unsigned long addr;

int purged = 0;

struct vmap_area *first;

BUG_ON(!size);

BUG_ON(size & ~PAGE_MASK);

BUG_ON(!is_power_of_2(align));

/* allocate the vmap_area structure */

va = kmalloc_node(sizeof(struct vmap_area),

gfp_mask & GFP_RECLAIM_MASK, node);

if (unlikely(!va))

return ERR_PTR(-ENOMEM);

retry:

spin_lock(&vmap_area_lock);

/*

* Invalidate cache if we have more permissive parameters.

* cached_hole_size notes the largest hole noticed _below_

* the vmap_area cached in free_vmap_cache: if size fits

* into that hole, we want to scan from vstart to reuse

* the hole instead of allocating above free_vmap_cache.

* Note that __free_vmap_area may update free_vmap_cache

* without updating cached_hole_size or cached_align.

*/

if (!free_vmap_cache ||

size < cached_hole_size ||

vstart < cached_vstart ||

align < cached_align) {

nocache:

cached_hole_size = 0;

free_vmap_cache = NULL;

}

/* record if we encounter less permissive parameters */

cached_vstart = vstart;

cached_align = align;

/* find starting point for our search */

if (free_vmap_cache) {

first = rb_entry(free_vmap_cache, struct vmap_area, rb_node);

addr = ALIGN(first->va_end, align);

if (addr < vstart)

goto nocache;

if (addr + size - 1 < addr)

goto overflow;

} else {

addr = ALIGN(vstart, align);

if (addr + size - 1 < addr) /* overflow */

goto overflow;

n = vmap_area_root.rb_node; /* root of the red-black tree */

first = NULL;

while (n) {

struct vmap_area *tmp;

tmp = rb_entry(n, struct vmap_area, rb_node);

if (tmp->va_end >= addr) {

first = tmp;

if (tmp->va_start <= addr)

break;

n = n->rb_left;

} else

n = n->rb_right;

}

if (!first) /* no existing area ends at or above addr, so everything from addr upward is free */

goto found;

}

/* from the starting point, walk areas until a suitable hole is found */

while (addr + size > first->va_start && addr + size <= vend) {

if (addr + cached_hole_size < first->va_start)

cached_hole_size = first->va_start - addr;

addr = ALIGN(first->va_end, align);

if (addr + size - 1 < addr)

goto overflow;

if (list_is_last(&first->list, &vmap_area_list))

goto found;

first = list_entry(first->list.next,

struct vmap_area, list);

}

found:

if (addr + size > vend)

goto overflow;

/* initialize va */

va->va_start = addr;

va->va_end = addr + size;

va->flags = 0;

__insert_vmap_area(va); /* insert into the red-black tree */

free_vmap_cache = &va->rb_node;

spin_unlock(&vmap_area_lock);

BUG_ON(va->va_start & (align-1));

BUG_ON(va->va_start < vstart);

BUG_ON(va->va_end > vend);

return va;

overflow:

spin_unlock(&vmap_area_lock);

if (!purged) {

purge_vmap_area_lazy();

purged = 1;

goto retry;

}

if (printk_ratelimit())

printk(KERN_WARNING

"vmap allocation for size %lu failed: "

"use vmalloc=<size> to increase size.\n", size);

kfree(va);

return ERR_PTR(-EBUSY);

}
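Stripped of the free_vmap_cache shortcut, the locking, and the lazy-purge retry, the search in alloc_vmap_area() is a first-fit scan over address-sorted areas. The sketch below shows that idea on a plain sorted array instead of the red-black tree and list. struct toy_range and toy_find_hole() are hypothetical, and returning 0 on failure is a simplification of the ERR_PTR(-EBUSY) path.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a vmap_area: the half-open range [start, end). */
struct toy_range {
    unsigned long start;
    unsigned long end;
};

#define TOY_ALIGN(x, a) (((x) + (a) - 1) & ~((a) - 1))

/*
 * Return the lowest align-aligned address in [vstart, vend) where size bytes
 * fit without touching any range in used[], which must be sorted by address.
 * Returns 0 if no hole is large enough.
 */
unsigned long toy_find_hole(const struct toy_range *used, size_t n,
                            unsigned long size, unsigned long align,
                            unsigned long vstart, unsigned long vend)
{
    unsigned long addr;
    size_t i;

    assert(size != 0);
    assert(align != 0 && (align & (align - 1)) == 0);  /* power of two */

    addr = TOY_ALIGN(vstart, align);
    for (i = 0; i < n; i++) {
        if (used[i].end <= addr)
            continue;               /* range lies entirely below the candidate */
        if (addr + size <= used[i].start)
            break;                  /* candidate fits in the hole before it */
        addr = TOY_ALIGN(used[i].end, align);  /* skip past this range */
    }

    if (addr + size < addr || addr + size > vend)
        return 0;                   /* wrapped around or ran past vend */
    return addr;
}
```

In the kernel the size passed in already includes the guard page, so two areas placed back to back by this search are still separated by one unmapped page.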

static void insert_vmalloc_vm(struct vm_struct *vm, struct vmap_area *va,

unsigned long flags, const void *caller)

{

setup_vmalloc_vm(vm, va, flags, caller); /* fill in the vm_struct from the vmap_area that was found */

insert_vmalloc_vmlist(vm); /* insert the vm_struct into the vmlist list */

}

static void *__vmalloc_area_node(struct vm_struct *area, gfp_t gfp_mask,

pgprot_t prot, int node, const void *caller)

{

const int order = 0;

struct page **pages;

unsigned int nr_pages, array_size, i;

gfp_t nested_gfp = (gfp_mask & GFP_RECLAIM_MASK) | __GFP_ZERO;

/* number of page frames to map; the guard page is not backed by a frame */

nr_pages = (area->size - PAGE_SIZE) >> PAGE_SHIFT;

array_size = (nr_pages * sizeof(struct page *)); /* space needed for the array of page pointers */

area->nr_pages = nr_pages;

/* Please note that the recursion is strictly bounded. */

if (array_size > PAGE_SIZE) {

pages = __vmalloc_node(array_size, 1, nested_gfp|__GFP_HIGHMEM,

PAGE_KERNEL, node, caller); /* the pointer array does not fit in one page, so allocate it through a bounded recursive call to __vmalloc_node */

area->flags |= VM_VPAGES;

} else {

pages = kmalloc_node(array_size, nested_gfp, node); /* small array: take it from the slab allocator */

}

area->pages = pages;

area->caller = caller;

if (!area->pages) {

remove_vm_area(area->addr); /* the pages array could not be allocated: remove the vm_struct from vmlist and the vmap_area from the red-black tree */

kfree(area);

return NULL;

}

for (i = 0; i < area->nr_pages; i++) { /* allocate one physical page frame for each slot in area->pages */

struct page *page;

gfp_t tmp_mask = gfp_mask | __GFP_NOWARN;

if (node < 0)

page = alloc_page(tmp_mask);

else

page = alloc_pages_node(node, tmp_mask, order);

if (unlikely(!page)) {

/* Successfully allocated i pages, free them in __vunmap() */

area->nr_pages = i;

goto fail;

}

area->pages[i] = page;

}

/* build the mapping */

if (map_vm_area(area, prot, &pages))

goto fail;

return area->addr;

fail:

warn_alloc_failed(gfp_mask, order,

"vmalloc: allocation failure, allocated %ld of %ld bytes\n",

(area->nr_pages*PAGE_SIZE), area->size);

vfree(area->addr);

return NULL;

}
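The size bookkeeping in __vmalloc_area_node() is easy to check by hand. The following user-space sketch works out, for a given request, the area size including the guard page, the number of backing page frames, and whether the array of page pointers would itself be allocated through vmalloc. struct toy_plan and toy_plan_vmalloc() are hypothetical, 4 KB pages are assumed, and sizeof(void *) stands in for sizeof(struct page *).

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SHIFT     12
#define PAGE_SIZE      (1UL << PAGE_SHIFT)
#define PAGE_ALIGN(x)  (((x) + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1))

/* Hypothetical summary of the sizes derived for one vmalloc request. */
struct toy_plan {
    unsigned long area_size;   /* page-aligned request plus one guard page */
    unsigned long nr_pages;    /* page frames actually allocated and mapped */
    size_t array_size;         /* bytes needed for the page pointer array */
    int array_from_vmalloc;    /* 1 if the array is larger than one page */
};

struct toy_plan toy_plan_vmalloc(unsigned long request)
{
    struct toy_plan p;

    assert(request != 0);

    p.area_size = PAGE_ALIGN(request) + PAGE_SIZE;          /* as in __get_vm_area_node */
    p.nr_pages = (p.area_size - PAGE_SIZE) >> PAGE_SHIFT;   /* guard page excluded */
    p.array_size = p.nr_pages * sizeof(void *);
    p.array_from_vmalloc = p.array_size > PAGE_SIZE;
    return p;
}
```

A 10000-byte request, for example, becomes a 16 KB area backed by 3 page frames, and its pointer array is small enough for the slab allocator. An 8 MB request needs 2048 pointers, which exceeds one page on both 32-bit and 64-bit builds, so the array takes the recursive vmalloc path.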

int map_vm_area(struct vm_struct *area, pgprot_t prot, struct page ***pages)

{ /* compute the start and end of the range to map; the end excludes the guard page added earlier */

unsigned long addr = (unsigned long)area->addr;

unsigned long end = addr + area->size - PAGE_SIZE;

int err;

err = vmap_page_range(addr, end, prot, *pages);

if (err > 0) {

*pages += err;

err = 0;

}

return err;

}

/*

* Set up page tables in kva (addr, end). The ptes shall have prot "prot", and

* will have pfns corresponding to the "pages" array.

*

* Ie. pte at addr+N*PAGE_SIZE shall point to pfn corresponding to pages[N]

*/

static int vmap_page_range_noflush(unsigned long start, unsigned long end,

pgprot_t prot, struct page **pages)

{

pgd_t *pgd;

unsigned long next;

unsigned long addr = start;

int err = 0;

int nr = 0;

BUG_ON(addr >= end);

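pgd = pgd_offset_k(addr); /* entry in the master kernel page global directory that covers the first address of the range */

do {

next = pgd_addr_end(addr, end); /* end if addr and end fall under the same pgd entry; otherwise the first linear address covered by the next pgd entry */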

err = vmap_pud_range(pgd, addr, next, prot, pages, &nr);

if (err)

return err;

} while (pgd++, addr = next, addr != end);

return nr;

}
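The do/while loops at every level rely on the same trick: a *_addr_end() helper clips the range at the next directory boundary. The sketch below imitates that pattern in user space and counts how many directory entries a range touches. toy_addr_end() and toy_count_entries() are hypothetical names, and the 4 MB span used in the examples corresponds to a pgd entry on 32-bit x86 without PAE, which is an assumption about the target.

```c
#include <assert.h>

/*
 * Next boundary of a span-sized, span-aligned region after addr, or end if
 * the range stops before that boundary. span must be a power of two.
 * The "- 1" on both sides keeps the comparison correct if boundary wraps to 0.
 */
static unsigned long toy_addr_end(unsigned long addr, unsigned long end,
                                  unsigned long span)
{
    unsigned long boundary = (addr + span) & ~(span - 1);

    return (boundary - 1 < end - 1) ? boundary : end;
}

/* Number of span-sized directory entries that the range [start, end) touches. */
unsigned int toy_count_entries(unsigned long start, unsigned long end,
                               unsigned long span)
{
    unsigned long addr = start;
    unsigned long next;
    unsigned int n = 0;

    assert(start < end);                          /* mirrors BUG_ON(addr >= end) */
    assert(span != 0 && (span & (span - 1)) == 0);

    do {
        next = toy_addr_end(addr, end, span);
        n++;                                      /* one entry handles [addr, next) */
    } while (addr = next, addr != end);

    return n;
}
```

A three-page mapping that starts at 0xF8800000 stays inside one 4 MB entry, while one that starts at 0xF8BFF000 crosses the boundary at 0xF8C00000 and therefore needs two passes of the loop.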

/*

* the pgd page can be thought of an array like this: pgd_t[PTRS_PER_PGD]

*

* this macro returns the index of the entry in the pgd page which would

* control the given virtual address

*/

#define pgd_index(address) (((address) >> PGDIR_SHIFT) & (PTRS_PER_PGD - 1))

/*

* pgd_offset() returns a (pgd_t *)

* pgd_index() is used get the offset into the pgd page's array of pgd_t's;

*/

#define pgd_offset(mm, address) ((mm)->pgd + pgd_index((address)))

/*

* a shortcut which implies the use of the kernel's pgd, instead

* of a process's

*/

#define pgd_offset_k(address) pgd_offset(&init_mm, (address))

struct mm_struct init_mm = {

.mm_rb = RB_ROOT,

.pgd= swapper_pg_dir,

.mm_users = ATOMIC_INIT(2),

.mm_count = ATOMIC_INIT(1),

.mmap_sem = __RWSEM_INITIALIZER(init_mm.mmap_sem),

.page_table_lock = __SPIN_LOCK_UNLOCKED(init_mm.page_table_lock),

.mmlist = LIST_HEAD_INIT(init_mm.mmlist),

INIT_MM_CONTEXT(init_mm)

};

static int vmap_pud_range(pgd_t *pgd, unsigned long addr,

unsigned long end, pgprot_t prot, struct page **pages, int *nr)

{

pud_t *pud;

unsigned long next;

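pud = pud_alloc(&init_mm, pgd, addr); /* allocate a page upper directory if needed and write its physical address into the matching entry of the kernel page global directory */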

if (!pud)

return -ENOMEM;

do {

next = pud_addr_end(addr, end);

if (vmap_pmd_range(pud, addr, next, prot, pages, nr))

return -ENOMEM;

} while (pud++, addr = next, addr != end);

return 0;

}

static int vmap_pmd_range(pud_t *pud, unsigned long addr,

unsigned long end, pgprot_t prot, struct page **pages, int *nr)

{

pmd_t *pmd;

unsigned long next;

pmd = pmd_alloc(&init_mm, pud, addr); /* page middle directory */

if (!pmd)

return -ENOMEM;

do {

next = pmd_addr_end(addr, end);

if (vmap_pte_range(pmd, addr, next, prot, pages, nr))

return -ENOMEM;

} while (pmd++, addr = next, addr != end);

return 0;

}

static int vmap_pte_range(pmd_t *pmd, unsigned long addr,

unsigned long end, pgprot_t prot, struct page **pages, int *nr)

{

pte_t *pte;

/*

* nr is a running index into the array which helps higher level

* callers keep track of where we're up to.

*/

pte = pte_alloc_kernel(pmd, addr); /* locate the page table entry for addr, allocating the page table if needed */

if (!pte)

return -ENOMEM;

do {

struct page *page = pages[*nr];

if (WARN_ON(!pte_none(*pte)))

return -EBUSY;

if (WARN_ON(!page))

return -ENOMEM;

/* write the physical address of the new page frame into the page table */

set_pte_at(&init_mm, addr, pte, mk_pte(page, prot)); /* tie the page frame behind the page descriptor to the page table entry; the mapping now exists */

(*nr)++;

} while (pte++, addr += PAGE_SIZE, addr != end);

return 0;

}
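The innermost loop can be modeled in user space with a flat array standing in for one page table. This is a toy model under stated assumptions, not kernel code: toy_fill_ptes() is a hypothetical name, a page table is taken to hold 1024 entries, an entry is encoded as (pfn << PAGE_SHIFT) | prot, and the caller is expected to keep the range within a single table.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

#define PAGE_SHIFT        12
#define PAGE_SIZE         (1UL << PAGE_SHIFT)
#define TOY_PTRS_PER_PTE  1024   /* assumed entries per page table */

/*
 * Fill the entries of table that cover [addr, end) with the frames listed in
 * pfns, starting at pfns[*nr] and advancing *nr once per mapped page.
 * Returns 0 on success or -EBUSY if an entry is already in use.
 */
int toy_fill_ptes(uint64_t *table, unsigned long addr, unsigned long end,
                  const uint64_t *pfns, uint64_t prot, int *nr)
{
    uint64_t *pte;

    assert(addr < end);
    assert((addr & (PAGE_SIZE - 1)) == 0 && (end & (PAGE_SIZE - 1)) == 0);

    pte = &table[(addr >> PAGE_SHIFT) & (TOY_PTRS_PER_PTE - 1)];
    do {
        if (*pte != 0)
            return -EBUSY;                        /* like WARN_ON(!pte_none(*pte)) */
        *pte = (pfns[*nr] << PAGE_SHIFT) | prot;  /* like set_pte_at(mk_pte(page, prot)) */
        (*nr)++;
    } while (pte++, addr += PAGE_SIZE, addr != end);

    return 0;
}
```

The running index nr is what lets the outer pgd, pud and pmd loops hand consecutive slices of the pages array to successive page tables.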

Freeing noncontiguous memory:

This is done by calling vfree():

/**

* vfree - release memory allocated by vmalloc()

* @addr: memory base address

*

* Free the virtually continuous memory area starting at @addr, as

* obtained from vmalloc(), vmalloc_32() or __vmalloc(). If @addr is

* NULL, no operation is performed.

*

* Must not be called in interrupt context.

*/

void vfree(const void *addr)

{

BUG_ON(in_interrupt());

kmemleak_free(addr);

__vunmap(addr, 1);

}

static void __vunmap(const void *addr, int deallocate_pages)

{

struct vm_struct *area;

if (!addr)

return;

if ((PAGE_SIZE-1) & (unsigned long)addr) {

WARN(1, KERN_ERR "Trying to vfree() bad address (%p)\n", addr);

return;

}

/*

 * Get the vm_struct that describes addr and clear the page table entries

 * for the linear addresses of the noncontiguous area. This also removes the

 * matching nodes from vmlist and from the vmap_area red-black tree.

 */

area = remove_vm_area(addr);

if (unlikely(!area)) {

WARN(1, KERN_ERR "Trying to vfree() nonexistent vm area (%p)\n",

addr);

return;

}

debug_check_no_locks_freed(addr, area->size);

debug_check_no_obj_freed(addr, area->size);

if (deallocate_pages) {

int i;

/* free the mapped pages */

for (i = 0; i < area->nr_pages; i++) {

struct page *page = area->pages[i];

BUG_ON(!page);

__free_page(page);

}

/* free the array of page descriptor pointers */

if (area->flags & VM_VPAGES)

vfree(area->pages);

else

kfree(area->pages);

}

kfree(area);

return;

}

/**

* remove_vm_area - find and remove a continuous kernel virtual area

* @addr: base address

*

* Search for the kernel VM area starting at @addr, and remove it.

* This function returns the found VM area, but using it is NOT safe

* on SMP machines, except for its size or flags.

*/

struct vm_struct *remove_vm_area(const void *addr)

{

struct vmap_area *va;

/* look the area up in the red-black tree rather than the list, for efficiency */

va = find_vmap_area((unsigned long)addr);

if (va && va->flags & VM_VM_AREA) {

struct vm_struct *vm = va->private;

struct vm_struct *tmp, **p;

/*

* remove from list and disallow access to this vm_struct

* before unmap. (address range confliction is maintained by

* vmap.)

*/

write_lock(&vmlist_lock);

/* find the entry in the list and unlink it */

for (p = &vmlist; (tmp = *p) != vm; p = &tmp->next)

;

*p = tmp->next;

write_unlock(&vmlist_lock);

/* debugging aid */

vmap_debug_free_range(va->va_start, va->va_end);

/* remove from the red-black tree */

free_unmap_vmap_area(va);

vm->size -= PAGE_SIZE;

return vm;

}

return NULL;

}
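The list removal in remove_vm_area() uses a pointer-to-pointer walk so that unlinking the head needs no special case. A user-space sketch of the same idiom follows. struct toy_vm and toy_unlink() are hypothetical, and unlike the kernel loop, which assumes the entry is on the list, this version also stops at the end of the list and reports whether the target was found.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, trimmed-down stand-in for struct vm_struct. */
struct toy_vm {
    struct toy_vm *next;
    unsigned long addr;
};

/* Unlink target from the list at *head. Returns 1 if it was found, 0 otherwise. */
int toy_unlink(struct toy_vm **head, struct toy_vm *target)
{
    struct toy_vm **p;
    struct toy_vm *tmp;

    assert(head != NULL);

    /* p always points at the link that leads to tmp. */
    for (p = head; (tmp = *p) != NULL; p = &tmp->next) {
        if (tmp == target) {
            *p = tmp->next;     /* bypass the target */
            tmp->next = NULL;
            return 1;
        }
    }
    return 0;
}
```

The list walk is linear, which is why the function first locates the area through the red-black tree and only then touches vmlist.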