OpenCV 透视变换的两个实例

参考文献:

http://www.cnblogs.com/self-control/archive/2013/01/18/2867022.html

http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

透视变换:

http://blog.csdn.net/xiaowei_cqu/article/details/26478135

具体流程为:

a)载入图像→灰度化→边缘处理得到边缘图像(edge map)

cv::Mat im = cv::imread(filename);

cv::Mat gray;

cvtColor(im,gray,CV_BGR2GRAY);

Canny(gray,gray,100,150,3);

b)霍夫变换进行直线检测,此处使用的是probabilistic Hough transform(cv::HoughLinesP)而不是standard Hough transform(cv::HoughLines)

std::vector<Vec4i> lines;

cv::HoughLinesP(gray,lines,1,CV_PI/180,70,30,10);

for(int i = 0; i < lines.size(); i++)

line(im,cv::Point(lines[i][0],lines[i][1]),cv::Point(lines[i][2],lines[i][3]),Scalar(255,0,0),2,8,0);

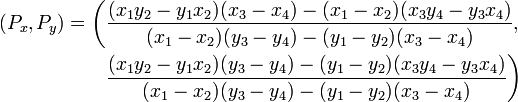

c)通过上面的图我们可以看出,通过霍夫变换检测到的直线并没有将整个边缘包含,但是我们要求的是四个顶点所以并不一定要直线真正的相交,下面就要求四个顶点的坐标,公式为:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

cv::Point2f computeIntersect(cv::Vec4i a, cv::Vec4i b)

{

}

|

|

1

2

3

4

5

6

7

8

9

10

|

std::vector<cv::Point2f> corners;

{

}

|

d)检查是不是四边形

|

1

2

3

4

5

6

7

8

9

|

std::vector<cv::Point2f> approx;

cv::approxPolyDP(cv::Mat(corners), approx,

{

}

|

e)确定四个顶点的具体位置(top-left, bottom-left, top-right, and bottom-right corner)→通过四个顶点求出映射矩阵来.

|

{

}

|

下面是获得中心点坐标然后利用上面的函数确定四个顶点的坐标

|

center *= (1. / corners.size());

sortCorners(corners, center);

|

定义目的图像并初始化为0

cv::Mat quad = cv::Mat::zeros(300, 220, CV_8UC3); |

获取目的图像的四个顶点

std::vector<cv::Point2f> dst_pt;

dst.push_back(cv::Point2f(0,0));

dst.push_back(cv::Point2f(quad.cols,0));

dst.push_back(cv::Point2f(quad.cols,quad.rows));

dst.push_back(cv::Point2f(0,quad.rows));

|

计算映射矩阵

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts); |

进行透视变换并显示结果

cv::warpPerspective(im, quad, transmtx, quad.size());

|

// affine transformation.cpp : 定义控制台应用程序的入口点。 // #include "stdafx.h" /** * Automatic perspective correction for quadrilateral objects. See the tutorial at * http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/ */ #include <opencv2/imgproc/imgproc.hpp> #include <opencv2/highgui/highgui.hpp> #include <iostream> #pragma comment(lib,"opencv_core2410d.lib") #pragma comment(lib,"opencv_highgui2410d.lib") #pragma comment(lib,"opencv_imgproc2410d.lib") cv::Point2f center(0,0); cv::Point2f computeIntersect(cv::Vec4i a, cv::Vec4i b) { int x1 = a[0], y1 = a[1], x2 = a[2], y2 = a[3], x3 = b[0], y3 = b[1], x4 = b[2], y4 = b[3]; float denom; if (float d = ((float)(x1 - x2) * (y3 - y4)) - ((y1 - y2) * (x3 - x4))) { cv::Point2f pt; pt.x = ((x1 * y2 - y1 * x2) * (x3 - x4) - (x1 - x2) * (x3 * y4 - y3 * x4)) / d; pt.y = ((x1 * y2 - y1 * x2) * (y3 - y4) - (y1 - y2) * (x3 * y4 - y3 * x4)) / d; return pt; } else return cv::Point2f(-1, -1); } void sortCorners(std::vector<cv::Point2f>& corners, cv::Point2f center) { std::vector<cv::Point2f> top, bot; for (int i = 0; i < corners.size(); i++) { if (corners[i].y < center.y) top.push_back(corners[i]); else bot.push_back(corners[i]); } corners.clear(); if (top.size() == 2 && bot.size() == 2){ cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0]; cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1]; cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0]; cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1]; corners.push_back(tl); corners.push_back(tr); corners.push_back(br); corners.push_back(bl); } } int main() { cv::Mat src = cv::imread("image.jpg"); if (src.empty()) return -1; cv::Mat bw; cv::cvtColor(src, bw, CV_BGR2GRAY); cv::blur(bw, bw, cv::Size(3, 3)); cv::Canny(bw, bw, 100, 100, 3); std::vector<cv::Vec4i> lines; cv::HoughLinesP(bw, lines, 1, CV_PI/180, 70, 30, 10); // Expand the lines for (int i = 0; i < lines.size(); i++) { cv::Vec4i v = lines[i]; lines[i][0] = 0; lines[i][1] = ((float)v[1] - v[3]) / (v[0] - v[2]) * -v[0] + v[1]; lines[i][2] = src.cols; lines[i][3] = ((float)v[1] - v[3]) / (v[0] - v[2]) * (src.cols - v[2]) + v[3]; } std::vector<cv::Point2f> corners; for (int i = 0; i < lines.size(); i++) { for (int j = i+1; j < lines.size(); j++) { cv::Point2f pt = computeIntersect(lines[i], lines[j]); if (pt.x >= 0 && pt.y >= 0) corners.push_back(pt); } } std::vector<cv::Point2f> approx; cv::approxPolyDP(cv::Mat(corners), approx, cv::arcLength(cv::Mat(corners), true) * 0.02, true); if (approx.size() != 4) { std::cout << "The object is not quadrilateral!" << std::endl; return -1; } // Get mass center for (int i = 0; i < corners.size(); i++) center += corners[i]; center *= (1. / corners.size()); sortCorners(corners, center); if (corners.size() == 0){ std::cout << "The corners were not sorted correctly!" << std::endl; return -1; } cv::Mat dst = src.clone(); // Draw lines for (int i = 0; i < lines.size(); i++) { cv::Vec4i v = lines[i]; cv::line(dst, cv::Point(v[0], v[1]), cv::Point(v[2], v[3]), CV_RGB(0,255,0)); } // Draw corner points cv::circle(dst, corners[0], 3, CV_RGB(255,0,0), 2); cv::circle(dst, corners[1], 3, CV_RGB(0,255,0), 2); cv::circle(dst, corners[2], 3, CV_RGB(0,0,255), 2); cv::circle(dst, corners[3], 3, CV_RGB(255,255,255), 2); // Draw mass center cv::circle(dst, center, 3, CV_RGB(255,255,0), 2); cv::Mat quad = cv::Mat::zeros(300, 220, CV_8UC3); std::vector<cv::Point2f> quad_pts; quad_pts.push_back(cv::Point2f(0, 0)); quad_pts.push_back(cv::Point2f(quad.cols, 0)); quad_pts.push_back(cv::Point2f(quad.cols, quad.rows)); quad_pts.push_back(cv::Point2f(0, quad.rows)); cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts); cv::warpPerspective(src, quad, transmtx, quad.size()); cv::imshow("image", dst); cv::imshow("quadrilateral", quad); cv::waitKey(); return 0; }

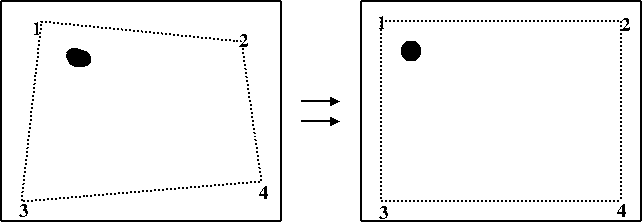

实现结果:

实现透视变换

目标:

在这篇教程中你将学到:

1、如何进行透视变化

2、如何生存透视变换矩阵

理论:

什么是透视变换:

1、透视变换(Perspective Transformation)是将图片投影到一个新的视平面(Viewing Plane),也称作投影映射(Projective Mapping)。

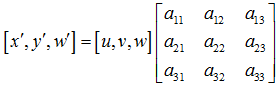

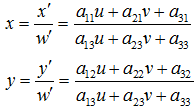

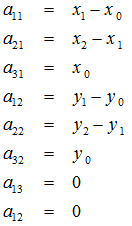

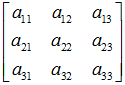

2、换算公式

u,v是原始图片左边,对应得到变换后的图片坐标x,y,其中![]() 。

。

变换矩阵 可以拆成4部分,

可以拆成4部分, 表示线性变换,比如scaling,shearing和ratotion。

表示线性变换,比如scaling,shearing和ratotion。![]() 用于平移,

用于平移,![]() 产生透视变换。所以可以理解成仿射等是透视变换的特殊形式。经过透视变换之后的图片通常不是平行四边形(除非映射视平面和原来平面平行的情况)。

产生透视变换。所以可以理解成仿射等是透视变换的特殊形式。经过透视变换之后的图片通常不是平行四边形(除非映射视平面和原来平面平行的情况)。

重写之前的变换公式可以得到:

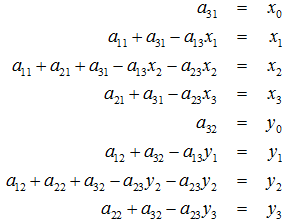

所以,已知变换对应的几个点就可以求取变换公式。反之,特定的变换公式也能新的变换后的图片。简单的看一个正方形到四边形的变换:

变换的4组对应点可以表示成:![]()

根据变换公式得到:

定义几个辅助变量:

![]() 都为0时变换平面与原来是平行的,可以得到:

都为0时变换平面与原来是平行的,可以得到:

![]() 不为0时,得到:

不为0时,得到:

求解出的变换矩阵就可以将一个正方形变换到四边形。反之,四边形变换到正方形也是一样的。于是,我们通过两次变换:四边形变换到正方形+正方形变换到四边形就可以将任意一个四边形变换到另一个四边形。

代码:

#include "opencv2/highgui.hpp"

#include "opencv2/imgproc.hpp"

#include <iostream>

#include <stdio.h>

using namespace cv;

using namespace std;

/** @function main */

int main( int argc, char** argv )

{

cv::Mat src= cv::imread( "test.jpg",0);

if (!src.data)

return 0;

vector<Point> not_a_rect_shape;

not_a_rect_shape.push_back(Point(122,0));

not_a_rect_shape.push_back(Point(814,0));

not_a_rect_shape.push_back(Point(22,540));

not_a_rect_shape.push_back(Point(910,540));

// For debugging purposes, draw green lines connecting those points

// and save it on disk

const Point* point = ¬_a_rect_shape[0];

int n = (int )not_a_rect_shape.size();

Mat draw = src.clone();

polylines(draw, &point, &n, 1, true, Scalar(0, 255, 0), 3, CV_AA);

imwrite( "draw.jpg", draw);

// topLeft, topRight, bottomRight, bottomLeft

cv::Point2f src_vertices[4];

src_vertices[0] = not_a_rect_shape[0];

src_vertices[1] = not_a_rect_shape[1];

src_vertices[2] = not_a_rect_shape[2];

src_vertices[3] = not_a_rect_shape[3];

Point2f dst_vertices[4];

dst_vertices[0] = Point(0, 0);

dst_vertices[1] = Point(960,0);

dst_vertices[2] = Point(0,540);

dst_vertices[3] = Point(960,540);

Mat warpMatrix = getPerspectiveTransform(src_vertices, dst_vertices);

cv::Mat rotated;

warpPerspective(src, rotated, warpMatrix, rotated.size(), INTER_LINEAR, BORDER_CONSTANT);

// Display the image

cv::namedWindow( "Original Image");

cv::imshow( "Original Image",src);

cv::namedWindow( "warp perspective");

cv::imshow( "warp perspective",rotated);

imwrite( "result.jpg",src);

cv::waitKey();

return 0;

}

代码解释:

1、获取图片,如果输入路径为空的话程序直接退出

if (!src.data)

return 0;

not_a_rect_shape.push_back(Point(122,0));

not_a_rect_shape.push_back(Point(814,0));

not_a_rect_shape.push_back(Point(22,540));

not_a_rect_shape.push_back(Point(910,540));

int n = ( int )not_a_rect_shape.size();

Mat draw = src.clone();

polylines(draw, &point, &n, 1, true, Scalar( 0, 255, 0), 3, CV_AA);

imwrite( "draw.jpg", draw);

src_vertices[0] = not_a_rect_shape[0];

src_vertices[1] = not_a_rect_shape[1];

src_vertices[2] = not_a_rect_shape[2];

src_vertices[3] = not_a_rect_shape[3];

Point2f dst_vertices[4];

dst_vertices[0] = Point(0, 0);

dst_vertices[1] = Point(960,0);

dst_vertices[2] = Point(0,540);

dst_vertices[3] = Point(960,540);

Mat warpMatrix = getPerspectiveTransform(src_vertices, dst_vertices);

warpPerspective(src, rotated, warpMatrix, rotated.size(), INTER_LINEAR, BORDER_CONSTANT);

cv::namedWindow( "Original Image");

cv::imshow( "Original Image",src);

cv::namedWindow( "warp perspective");

cv::imshow( "warp perspective",rotated);

imwrite( "result.jpg",src);

原始图片

标注四个边界点

透视变换后的图片

需要注意的是,这里变化后的图像丢失了一些边界细节,这在具体实现的时候是需要注意的。