gazebo+ros搭建单目仿真环境:贴有二维码的天花板+kobuki+camera(2)

二 。 实验平台搭建: 机器人kobuki + camera

有了环境后,那得准备实验平台了。 考虑到kobuki有现成的模型,也有gazebo下的仿真。再者,之前改写过turtlebotgazebo的仿真平台,

见ros中利用gazebo进行gmapping仿真:kobuki+kinct -> kobuki+rplidar :用gazebo模拟激光的仿真。现在是模拟摄像头的仿真。。

分析:

直接利用之前的turtlebot的gazebo 2d激光slam的模型改写实验平台。。第一步需要加一个模块当做摄像头;第二步将此摄像头模块具有摄像头功能,即附上插件功能。。

第三部,调试效果,位置姿态信息是否符合进行验证。

1. 启动的launch文件。环境配置 + 机器人平台配置+ camera_to_depth的节点处理(turtlebot利用fake 2d laser的节点)

2. 环境已好,fake可以不关注, 关注机器人平台配置。 :传感器上

slam_world.launch ---》

===》机器人配置信息 :SENSOR的组合

<include file="$(find turtlebot_gazebo)/launch/includes/$(arg base).launch.xml">

kobuki.launch.xml---》》

====》》xacro文件

<arg name="urdf_file" default="$(find xacro)/xacro.py '$(find turtlebot_description)/robots/$(arg base)_$(arg stacks)_$(arg 3d_sensor).urdf.xacro'" />

kobuki_nostack_camera.urdf.xacro (新建一文件)

===》 <sensor_camera parent="base_link"/> 注意 坐标系的依赖。

<robot name="turtlebot" xmlns:xacro="http://ros.org/wiki/xacro"> <xacro:include filename="$(find turtlebot_description)/urdf/turtlebot_library.urdf.xacro" /> <kobuki/> <!--<stack_hexagons parent="base_link"/>--> <sensor_rplidar parent="base_link"/> <sensor_camera parent="base_link"/> </robot>

turtlebot_library.urdf.xacro 每个模块的xacro文件。

<robot xmlns:xacro="http://ros.org/wiki/xacro"> <!-- General --> <xacro:include filename="$(find turtlebot_description)/urdf/common_properties.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/turtlebot_properties.urdf.xacro"/> <!-- Bases --> <xacro:include filename="$(find create_description)/urdf/create.urdf.xacro"/> <xacro:include filename="$(find kobuki_description)/urdf/kobuki.urdf.xacro" /> <!-- Stacks --> <xacro:include filename="$(find turtlebot_description)/urdf/stacks/circles.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/stacks/hexagons.urdf.xacro"/> <!-- 3D Sensors --> <xacro:include filename="$(find turtlebot_description)/urdf/sensors/kinect.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/sensors/asus_xtion_pro.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/sensors/asus_xtion_pro_offset.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/sensors/rplidar.urdf.xacro"/> <xacro:include filename="$(find turtlebot_description)/urdf/sensors/camera.urdf.xacro"/> </robot>

camera.urdf.xacro ××××××8传感器的信息配置

这里面有两个信息 : 一个是 你要加的模块当做相机的属性信息,gazebo显示。。。。。二是 该模块要具有相机采集环境信息的能力。。相机插件。

同时include上层文件,坐标系统轴就清楚了。。

<?xml version="1.0"?>

<!-- script_version=1.1 -->

<robot name="sensor_camera" xmlns:xacro="http://ros.org/wiki/xacro">

<xacro:include filename="$(find turtlebot_description)/urdf/turtlebot_gazebo.urdf.xacro"/>

<xacro:include filename="$(find turtlebot_description)/urdf/turtlebot_properties.urdf.xacro"/>

<!-- camera -->

<xacro:macro name="sensor_camera" params="parent">

<joint name="camera" type="fixed">

<origin xyz="0.15 0.0 0.35" rpy="0 -1.5708 0.0" />

<parent link="base_link" />

<child link="base_camera_link" />

</joint>

<link name="base_camera_link">

<visual>

<geometry>

<box size="0.05 0.05 0.05" />

</geometry>

<material name="Red" />

</visual>

<inertial>

<mass value="0.000001" />

<origin xyz="0 0 0" />

<inertia ixx="0.0001" ixy="0.0" ixz="0.0"

iyy="0.0001" iyz="0.0"

izz="0.0001" />

</inertial>

</link>

<!-- Set up laser gazebo details -->

<camera_image />

</xacro:macro>

</robot>

urtlebot_gazebo.urdf.xacro ==》传感器的信息 plugin 插件参数的修改

<?xml version="1.0"?>

<robot name="turtlebot_gazebo" xmlns:xacro="http://ros.org/wiki/xacro">

<!-- Microsoft Kinect / ASUS Xtion PRO Live for simulation -->

<xacro:macro name="turtlebot_sim_3dsensor">

<gazebo reference="camera_link">

<sensor type="depth" name="camera">

<always_on>true</always_on>

<update_rate>20.0</update_rate>

<camera>

<horizontal_fov>${60.0*M_PI/180.0}</horizontal_fov>

<image>

<format>R8G8B8</format>

<width>640</width>

<height>480</height>

</image>

<clip>

<near>0.05</near>

<far>8.0</far>

</clip>

</camera>

<plugin name="kinect_camera_controller" filename="libgazebo_ros_openni_kinect.so">

<cameraName>camera</cameraName>

<alwaysOn>true</alwaysOn>

<updateRate>10</updateRate>

<imageTopicName>rgb/image_raw</imageTopicName>

<depthImageTopicName>depth/image_raw</depthImageTopicName>

<pointCloudTopicName>depth/points</pointCloudTopicName>

<cameraInfoTopicName>rgb/camera_info</cameraInfoTopicName>

<depthImageCameraInfoTopicName>depth/camera_info</depthImageCameraInfoTopicName>

<frameName>camera_depth_optical_frame</frameName>

<baseline>0.1</baseline>

<distortion_k1>0.0</distortion_k1>

<distortion_k2>0.0</distortion_k2>

<distortion_k3>0.0</distortion_k3>

<distortion_t1>0.0</distortion_t1>

<distortion_t2>0.0</distortion_t2>

<pointCloudCutoff>0.4</pointCloudCutoff>

</plugin>

</sensor>

</gazebo>

</xacro:macro>

<!-- RPLidar LIDAR for simulation -->

<xacro:macro name="rplidar_laser">

<gazebo reference="base_laser_link">

<sensor type="ray" name="laser">

<pose>0 0 0 0 0 0</pose>

<visualize>false</visualize>

<update_rate>5.5</update_rate>

<ray>

<scan>

<horizontal>

<samples>360</samples>

<resolution>1</resolution>

<min_angle>-3.1415926</min_angle>

<max_angle>3.1415926</max_angle>

</horizontal>

</scan>

<range>

<min>0.10</min>

<max>6.0</max>

<resolution>0.01</resolution>

</range>

<noise>

<type>Gaussian</type>

<mean>0.0</mean>

<stddev>0.01</stddev>

</noise>

</ray>

<plugin name="rplidar_node" filename="libgazebo_ros_laser.so">

<topicName>/scan</topicName>

<frameName>base_laser_link</frameName>

</plugin>

</sensor>

</gazebo>

</xacro:macro>

<!-- camera -->

<xacro:macro name="camera_image">

<gazebo reference="base_camera_link">

<sensor type="camera" name="camera1">

<update_rate>30.0</update_rate>

<camera name="head">

<horizontal_fov>1.3962634</horizontal_fov>

<image>

<width>640</width>

<height>480</height>

<format>R8G8B8</format>

</image>

<clip>

<near>0.02</near>

<far>300</far>

</clip>

<noise>

<type>gaussian</type>

<!-- Noise is sampled independently per pixel on each frame.

That pixel's noise value is added to each of its color

channels, which at that point lie in the range [0,1]. -->

<mean>0.0</mean>

<stddev>0.007</stddev>

</noise>

</camera>

<plugin name="camera_controller" filename="libgazebo_ros_camera.so">

<alwaysOn>true</alwaysOn>

<updateRate>30.0</updateRate>

<cameraName>camera1</cameraName>

<imageTopicName>/image_raw</imageTopicName>

<cameraInfoTopicName>/camera_info</cameraInfoTopicName>

<frameName>base_camera_link</frameName>

<hackBaseline>0.07</hackBaseline>

<distortionK1>0.0</distortionK1>

<distortionK2>0.0</distortionK2>

<distortionK3>0.0</distortionK3>

<distortionT1>0.0</distortionT1>

<distortionT2>0.0</distortionT2>

</plugin>

</sensor>

</gazebo>

</xacro:macro>

</robot>

环境变量 ~/.bahsrc

export TURTLEBOT_BASE=kobuki

export TURTLEBOT_STACKS=nostack

#export TURTLEBOT_3D_SENSOR=rplidar

export TURTLEBOT_3D_SENSOR=camera

======

运行显示结果:

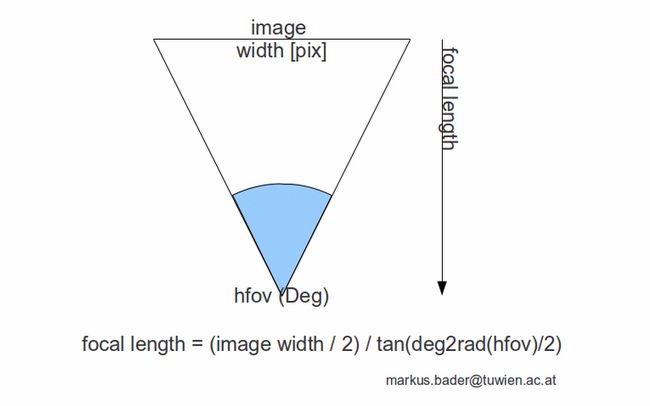

gazebo 仿真中的相机内参说明: 可以用 视角和 像素大小来求 fx fy

例如 640x480 视角1.3962634 : dx =320 dy = 240 fx=381.3612 fy=286.0209

例如 640x480 视角1.3962634 : 应该是认为 水平视角下 求得 f = 381.3612 (fx = fy)

图片:

参考:

ros中利用gazebo进行gmapping仿真:kobuki+kinct -> kobuki+rplidar

gazebo搭建单目仿真环境:贴有二维码的天花板+kobuki+image(1)

Tutorial: Using Gazebo plugins with ROS

http://gazebosim.org/tutorials?tut=ros_gzplugins

sdf http://sdformat.org/spec?ver=1.5&elem=visual#visual_material

gazebo http://www.gazebosim.org/tutorials?cat=build_world