Hadoop self-join with a left table and a right table

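map:

context.write is called twice for every input record: once to emit the record as a left-table row and once to emit it as a right-table row.

Left table: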

context.write(new Text(array[1].trim()), new Text("1_"+array[0].trim()));

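In the left table, the first column (the key) is the parent and the second column (the value) is the child, tagged with the prefix 1_.

Right table: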

context.write(new Text(array[0].trim()), new Text("0_"+array[1].trim()));

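In the right table, the first column (the key) is the child and the second column (the value) is the parent, tagged with the prefix 0_.

reduce:

For each key, use the tag to decide whether a value is a child or a parent of the key, collect the values into grandChildList and grandParentList, and emit the Cartesian product of the two lists.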
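The tagging and Cartesian-product steps described above can be sketched in plain Java, without any Hadoop dependency. The class `TaggedJoinSketch` and its methods `mapRecord` and `reduceKey` are hypothetical names used only for illustration; only the `1_` / `0_` tag convention comes from the job itself.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical, Hadoop-free sketch of the tag-and-join idea.
class TaggedJoinSketch {

    // Mimics the two context.write calls for one (child, parent) record.
    // Each element of the result is {key, taggedValue}.
    static List<String[]> mapRecord(String child, String parent) {
        List<String[]> out = new ArrayList<>();
        out.add(new String[] {parent, "1_" + child});  // left table: key = parent
        out.add(new String[] {child, "0_" + parent});  // right table: key = child
        return out;
    }

    // Mimics reduce for a single key: split the values by tag, then cross-join.
    static List<String> reduceKey(List<String> taggedValues) {
        List<String> grandChildren = new ArrayList<>();
        List<String> grandParents = new ArrayList<>();
        for (String value : taggedValues) {
            String[] parts = value.split("_", 2);
            if (parts[0].equals("1")) {
                grandChildren.add(parts[1]);   // a child of the key
            } else {
                grandParents.add(parts[1]);    // a parent of the key
            }
        }
        List<String> pairs = new ArrayList<>();
        for (String grandChild : grandChildren) {
            for (String grandParent : grandParents) {
                pairs.add(grandChild + "\t" + grandParent);
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        // Tagged values that share one key: two children and two parents of that key.
        List<String> values = Arrays.asList("1_Tom", "1_Jone", "0_Alice", "0_Jesse");
        for (String pair : reduceKey(values)) {
            System.out.println(pair);
        }
    }
}
```

If a key has values with only one of the two tags, one list stays empty and nothing is emitted for that key. In a real job the order of values within a key is not guaranteed, so the order of the emitted pairs may differ from this sketch.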

1. Data file

Column 1 is the child and column 2 is the parent. The goal is to find each child's grandparents.

[root@master IMFdatatest]#hadoop dfs -cat /library/selfjoin/selfjoin.txt

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/02/20 17:22:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Tom Lucy

Tom Jack

Jone Lucy

Jone Jack

Lucy Mary

Lucy Ben

Jack Alice

Jack Jesse

Terry Alice

Terry Jesse

Philip Terry

Philip Alma

Mark Terry

Mark Alma
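Each line of the file above holds one child and one parent. The job splits each line on a tab character. A slightly more tolerant parser is sketched here, under the assumption that names never contain whitespace: it splits on any run of whitespace and rejects lines that do not hold exactly two fields. `RecordParser` and `parseLine` are hypothetical names, not part of the job.

```java
// Hypothetical helper: tolerant parsing of one "child parent" line.
class RecordParser {

    // Returns {child, parent}, or null when the line does not hold exactly two fields.
    static String[] parseLine(String line) {
        String[] fields = line.trim().split("\\s+");
        return fields.length == 2 ? fields : null;
    }

    public static void main(String[] args) {
        String[] lines = {"Tom\tLucy", "Jack  Alice", "", "Lucy"};
        for (String line : lines) {
            String[] record = parseLine(line);
            System.out.println(record == null
                    ? "skipped"
                    : record[0] + " -> " + record[1]);
        }
    }
}
```

Returning null for malformed input keeps the sketch short. A mapper using this approach would simply return early when the result is null.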

2. Upload to HDFS

3. Result

[root@master IMFdatatest]#hadoop dfs -cat /library/outputselfjoin3/part-r-00000

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/02/20 18:33:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Tom Alice

Tom Jesse

Jone Alice

Jone Jesse

Tom Ben

Tom Mary

Jone Ben

Jone Mary

Philip Alice

Philip Jesse

Mark Alice

Mark Jesse
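As a cross-check on the output above, the same pairs can be computed in memory with a plain nested-loop self-join. This is a different technique from the MapReduce job and is only meant to confirm the expected result on the 14 sample records. `GrandparentCheck` and `grandparentPairs` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical cross-check: nested-loop self-join over the sample records.
class GrandparentCheck {

    // The 14 {child, parent} records from the data file.
    static final String[][] RECORDS = {
        {"Tom", "Lucy"}, {"Tom", "Jack"}, {"Jone", "Lucy"}, {"Jone", "Jack"},
        {"Lucy", "Mary"}, {"Lucy", "Ben"}, {"Jack", "Alice"}, {"Jack", "Jesse"},
        {"Terry", "Alice"}, {"Terry", "Jesse"}, {"Philip", "Terry"}, {"Philip", "Alma"},
        {"Mark", "Terry"}, {"Mark", "Alma"}
    };

    // Pairs a's child with b's parent whenever a's parent equals b's child.
    static List<String> grandparentPairs(String[][] records) {
        List<String> pairs = new ArrayList<>();
        for (String[] a : records) {
            for (String[] b : records) {
                if (a[1].equals(b[0])) {
                    pairs.add(a[0] + " " + b[1]);
                }
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        List<String> pairs = grandparentPairs(RECORDS);
        for (String pair : pairs) {
            System.out.println(pair);
        }
        System.out.println("pairs: " + pairs.size());
    }
}
```

The nested loop compares every record with every other record, which is fine for a check on a small sample but does not scale the way the reduce-side join does.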

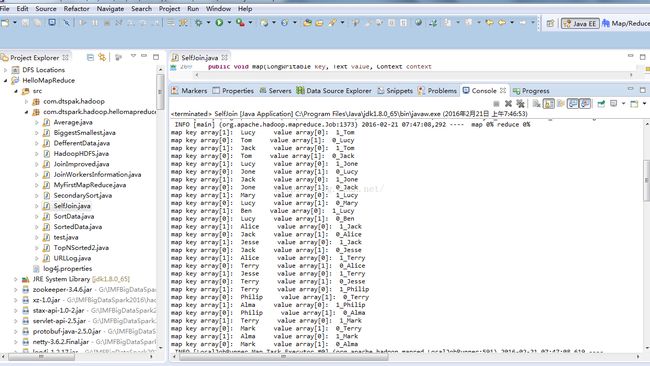

Log output from the mapper:

map key array[1]: Lucy value array[0]: 1_Tom

map key array[0]: Tom value array[1]: 0_Lucy

map key array[1]: Jack value array[0]: 1_Tom

map key array[0]: Tom value array[1]: 0_Jack

map key array[1]: Lucy value array[0]: 1_Jone

map key array[0]: Jone value array[1]: 0_Lucy

map key array[1]: Jack value array[0]: 1_Jone

map key array[0]: Jone value array[1]: 0_Jack

map key array[1]: Mary value array[0]: 1_Lucy

map key array[0]: Lucy value array[1]: 0_Mary

map key array[1]: Ben value array[0]: 1_Lucy

map key array[0]: Lucy value array[1]: 0_Ben

map key array[1]: Alice value array[0]: 1_Jack

map key array[0]: Jack value array[1]: 0_Alice

map key array[1]: Jesse value array[0]: 1_Jack

map key array[0]: Jack value array[1]: 0_Jesse

map key array[1]: Alice value array[0]: 1_Terry

map key array[0]: Terry value array[1]: 0_Alice

map key array[1]: Jesse value array[0]: 1_Terry

map key array[0]: Terry value array[1]: 0_Jesse

map key array[1]: Terry value array[0]: 1_Philip

map key array[0]: Philip value array[1]: 0_Terry

map key array[1]: Alma value array[0]: 1_Philip

map key array[0]: Philip value array[1]: 0_Alma

map key array[1]: Terry value array[0]: 1_Mark

map key array[0]: Mark value array[1]: 0_Terry

map key array[1]: Alma value array[0]: 1_Mark

map key array[0]: Mark value array[1]: 0_Alma
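Between map and reduce, the framework groups the emitted pairs by key. The sketch below imitates that grouping in plain Java for a subset of the pairs logged above (the records Tom/Jack, Jone/Jack, Jack/Alice and Jack/Jesse) and reports which keys hold both tags. `ShuffleSketch`, `groupByKey` and `hasBothTags` are hypothetical names, and the sorted `TreeMap` only approximates Hadoop's key ordering.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the grouping that happens before reduce.
class ShuffleSketch {

    // Groups {key, value} pairs by key, keeping keys in sorted order.
    static Map<String, List<String>> groupByKey(String[][] emitted) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String[] pair : emitted) {
            grouped.computeIfAbsent(pair[0], k -> new ArrayList<>()).add(pair[1]);
        }
        return grouped;
    }

    // A key produces join output only if it has both 1_ and 0_ values.
    static boolean hasBothTags(List<String> values) {
        boolean hasChild = false;
        boolean hasParent = false;
        for (String value : values) {
            if (value.startsWith("1_")) hasChild = true;
            if (value.startsWith("0_")) hasParent = true;
        }
        return hasChild && hasParent;
    }

    public static void main(String[] args) {
        String[][] emitted = {
            {"Jack", "1_Tom"}, {"Tom", "0_Jack"},
            {"Jack", "1_Jone"}, {"Jone", "0_Jack"},
            {"Alice", "1_Jack"}, {"Jack", "0_Alice"},
            {"Jesse", "1_Jack"}, {"Jack", "0_Jesse"}
        };
        for (Map.Entry<String, List<String>> entry : groupByKey(emitted).entrySet()) {
            System.out.println(entry.getKey() + " " + entry.getValue()
                    + " joins=" + hasBothTags(entry.getValue()));
        }
    }
}
```

In this subset only the key Jack carries both tags, so only Jack contributes grandchild/grandparent pairs. Keys such as Tom or Alice appear on one side only and yield nothing, which is why the reducer checks that both lists are non-empty.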

4. Code

package com.dtspark.hadoop.hellomapreduce;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class SelfJoin {

public static class DataMapper

extends Mapper<LongWritable, Text, Text, Text>{

public void map(LongWritable key, Text value, Context context

) throws IOException, InterruptedException {

System.out.println("Map Method Invoked!!!");

// Each input line is "child<TAB>parent".

String[] array = value.toString().split("\t");

// Skip blank or malformed lines rather than fail on array[1].

if (array.length < 2) {

return;

}

System.out.println("map key array[1]: " +array[1].trim() +" value array[0]: " + "1_"+array[0].trim());

System.out.println("map key array[0]: " +array[0].trim() +" value array[1]: " + "0_"+array[1].trim());

context.write(new Text(array[1].trim()), new Text("1_"+array[0].trim())); //left

context.write(new Text(array[0].trim()), new Text("0_"+array[1].trim())); //right

}

}

public static class DataReducer

extends Reducer<Text,Text,Text, Text> {

public void reduce(Text key, Iterable<Text> values,

Context context

) throws IOException, InterruptedException {

System.out.println("Reduce Method Invoked!!!" );

Iterator<Text> iterator=values.iterator();

List<String> grandChildList =new ArrayList<String>();

List<String> grandParentList =new ArrayList<String>();

while(iterator.hasNext()){

String item = iterator.next().toString();

String[] splited = item.split("_", 2); // limit 2 keeps names that contain "_" intact

if (splited[0].equals("1")){

grandChildList.add(splited[1]);

} else {

grandParentList.add(splited[1]);

}

}

if (grandChildList.size() > 0 && grandParentList.size() > 0){

for (String grandChild:grandChildList ) {

for (String grandParent: grandParentList) {

// Emit one (grandchild, grandparent) pair.

context.write(new Text(grandChild), new Text(grandParent));

}

}

}

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length < 2) {

System.err.println("Usage: SelfJoin <in> [<in>...] <out>");

System.exit(2);

}

Job job = Job.getInstance(conf, "SelfJoin");

job.setJarByClass(SelfJoin.class);

job.setMapperClass(DataMapper.class);

job.setReducerClass(DataReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

for (int i = 0; i < otherArgs.length - 1; ++i) {

FileInputFormat.addInputPath(job, new Path(otherArgs[i]));

}

FileOutputFormat.setOutputPath(job,

new Path(otherArgs[otherArgs.length - 1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}