[OpenStack] How to fix "Read from socket failed: Connection reset by peer" when logging in to a VM over SSH

Statement:


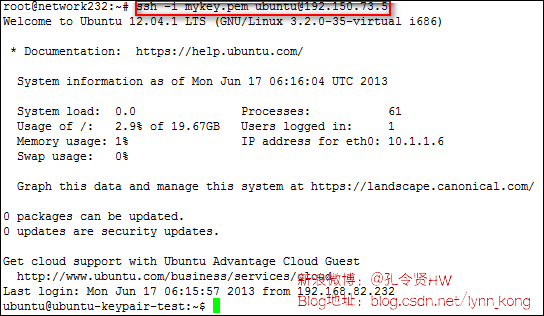

再次在NetworkNode上ssh登录虚拟机:

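You are welcome to repost this blog, but please keep the original author information!

Sina Weibo: @孔令贤HW;

Blog: http://blog.csdn.net/lynn_kong

The content comes from my own study, research, and summaries. If it happens to resemble someone else's work, I consider that an honor!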

1. Symptoms

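Version: Grizzly, master branch code as of 2013.06.17

Deployment: three nodes (Controller/Compute + Network + Compute)

Image used: precise-server-cloudimg-i386-disk1.img

Command used to create the VM: nova boot ubuntu-keypair-test --image 1f7f5763-33a1-4282-92b3-53366bf7c695 --flavor 2 --nic net-id=3d42a0d4-a980-4613-ae76-a2cddecff054 --availability-zone nova:compute233 --key_name mykey

Once the VM became ACTIVE, both its fixed IP (10.1.1.6) and its floating IP (192.150.73.5) could be pinged. VNC access to the VM also worked and showed the login prompt. Because the Ubuntu image does not allow password login, SSH is the only way in, which is why key_name was specified when creating the VM.

Logging in to the VM over SSH from the NetworkNode failed: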

- root@network232:~# ssh -i mykey.pem [email protected] -v

- OpenSSH_5.9p1 Debian-5ubuntu1.1, OpenSSL 1.0.1 14 Mar 2012

- debug1: Reading configuration data /etc/ssh/ssh_config

- debug1: /etc/ssh/ssh_config line 19: Applying options for *

- debug1: Connecting to 192.150.73.5 [192.150.73.5] port 22.

- debug1: Connection established.

- debug1: permanently_set_uid: 0/0

- debug1: identity file mykey.pem type -1

- debug1: identity file mykey.pem-cert type -1

- debug1: Remote protocol version 2.0, remote software version OpenSSH_5.9p1 Debian-5ubuntu1

- debug1: match: OpenSSH_5.9p1 Debian-5ubuntu1 pat OpenSSH*

- debug1: Enabling compatibility mode for protocol 2.0

- debug1: Local version string SSH-2.0-OpenSSH_5.9p1 Debian-5ubuntu1.1

- debug1: SSH2_MSG_KEXINIT sent

- Read from socket failed: Connection reset by peer
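The output above shows the connection being reset right after the client sends its key exchange init message. As a rough aid for spotting this pattern in other `ssh -v` captures, here is a minimal sketch. The function names are my own and purely illustrative, and the sketch only looks at text, it does not talk to any server:

```python
def last_debug_stage(ssh_verbose_output):
    """Return the text of the last 'debug1:' line in `ssh -v` output."""
    last = ""
    for raw in ssh_verbose_output.splitlines():
        line = raw.strip()
        if line.startswith("debug1:"):
            last = line[len("debug1:"):].strip()
    return last


def reset_during_key_exchange(ssh_verbose_output):
    """True if the peer reset the connection right after KEXINIT was sent."""
    return ("Connection reset by peer" in ssh_verbose_output
            and last_debug_stage(ssh_verbose_output) == "SSH2_MSG_KEXINIT sent")


# A shortened copy of the capture from this post.
sample = """\
debug1: Connection established.
debug1: Local version string SSH-2.0-OpenSSH_5.9p1 Debian-5ubuntu1.1
debug1: SSH2_MSG_KEXINIT sent
Read from socket failed: Connection reset by peer
"""

print(last_debug_stage(sample))
print(reset_during_key_exchange(sample))
```

A reset at this stage means TCP and the version exchange both worked, so the network path is probably fine and the problem is more likely on the sshd side of the VM.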

VM boot log:

- Begin: Running /scripts/init-bottom ... done.

- [ 1.874928] EXT4-fs (vda1): re-mounted. Opts: (null)

- cloud-init start-local running: Mon, 17 Jun 2013 03:39:11 +0000. up 4.59 seconds

- no instance data found in start-local

- ci-info: lo : 1 127.0.0.1 255.0.0.0 .

- ci-info: eth0 : 1 10.1.1.6 255.255.255.0 fa:16:3e:31:f4:52

- ci-info: route-0: 0.0.0.0 10.1.1.1 0.0.0.0 eth0 UG

- ci-info: route-1: 10.1.1.0 0.0.0.0 255.255.255.0 eth0 U

- cloud-init start running: Mon, 17 Jun 2013 03:39:14 +0000. up 8.23 seconds

- 2013-06-17 03:39:15,590 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [0/120s]: http error [404]

- 2013-06-17 03:39:17,083 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [2/120s]: http error [404]

- 2013-06-17 03:39:18,643 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [3/120s]: http error [404]

- 2013-06-17 03:39:20,153 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [5/120s]: http error [404]

- 2013-06-17 03:39:21,638 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [6/120s]: http error [404]

- 2013-06-17 03:39:23,071 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [8/120s]: http error [404]

- 2013-06-17 03:41:15,356 - DataSourceEc2.py[CRITICAL]: giving up on md after 120 seconds

- no instance data found in start

- Skipping profile in /etc/apparmor.d/disable: usr.sbin.rsyslogd

- * Starting AppArmor profiles [ OK ]

- landscape-client is not configured, please run landscape-config.

- * Stopping System V initialisation compatibility [ OK ]

- * Stopping Handle applying cloud-config [ OK ]

- * Starting System V runlevel compatibility [ OK ]

- * Starting ACPI daemon [ OK ]

- * Starting save kernel messages [ OK ]

- * Starting automatic crash report generation [ OK ]

- * Starting regular background program processing daemon [ OK ]

- * Starting deferred execution scheduler [ OK ]

- * Starting CPU interrupts balancing daemon [ OK ]

- * Stopping save kernel messages [ OK ]

- * Starting crash report submission daemon [ OK ]

- * Stopping System V runlevel compatibility [ OK ]

- * Starting execute cloud user/final scripts [ OK ]
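The interesting part of the boot log is the series of cloud-init warnings against 169.254.169.254, followed by "giving up on md". A minimal sketch for pulling that information out of a console log is shown below. The regular expression is an assumption based only on the log lines in this post, and `summarize_metadata_failures` is a hypothetical helper name:

```python
import re

# Matches cloud-init warnings of the form seen in this post, e.g.
# 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [0/120s]: http error [404]
_FAILED = re.compile(
    r"'(?P<url>http://169\.254\.169\.254/[^']*)' failed "
    r"\[(?P<elapsed>\d+)/(?P<limit>\d+)s\]: http error \[(?P<code>\d+)\]"
)


def summarize_metadata_failures(console_log):
    """Count failed metadata requests and report whether cloud-init gave up."""
    codes = [int(m.group("code")) for m in _FAILED.finditer(console_log)]
    return {
        "attempts": len(codes),
        "http_codes": sorted(set(codes)),
        "gave_up": "giving up on md" in console_log,
    }


console_sample = """\
2013-06-17 03:39:15,590 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [0/120s]: http error [404]
2013-06-17 03:39:17,083 - util.py[WARNING]: 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed [2/120s]: http error [404]
2013-06-17 03:41:15,356 - DataSourceEc2.py[CRITICAL]: giving up on md after 120 seconds
"""

print(summarize_metadata_failures(console_sample))
```

Note that the HTTP code is 404 rather than a timeout, so the request does reach something that answers on that address.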

In the nova-compute log, the key injection process shows no errors:

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.api 436] [24770] Inject key fs=<nova.virt.disk.vfs.localfs.VFSLocalFS object at 0x3fa2210> key=ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDdG2ek7tGR4NLPHDHntNdPBu0hnEA4mts9FL+fuqMQar5k+anndsqTwtD4WTfoRCoXBoiDAiEhiy1LOgr6GDgJorMYkfuKgdrdViz2meT2F5wiZnxm/gdnGLko2jYmwsla/wIvRtjzMRYR/ut1OMcqRXwyGtFXkO3VlE8YJRZj0TqjKmKaAwsa0mkVU1G2w1RjT8FDVt2qW+UVGggaqM3KZLs9rwn/K56X+eSraNx+BSBqDa+OX1h6Z1e8nRNVxYviOHL3FybcvlgZXLVWRUSBemS6P4xgQq0dapRB+D3/0N0hzY67FUQNfhFk4EsZCxKMxIi6EH7ueCssPTz5ESmp Generated by Nova

- _inject_key_into_fs /usr/lib/python2.7/dist-packages/nova/virt/disk/api.py:436

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 102] [24770] Make directory path=root/.ssh make_path /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:102

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf mkdir -p /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 145] [24770] Set permissions path=root/.ssh user=root group=root set_ownership /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:145

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf chown root:root /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 139] [24770] Set permissions path=root/.ssh mode=700 set_permissions /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:139

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf chmod 700 /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.api 386] [24770] Inject file fs=<nova.virt.disk.vfs.localfs.VFSLocalFS object at 0x3fa2210> path=root/.ssh/authorized_keys append=True _inject_file_into_fs /usr/lib/python2.7/dist-packages/nova/virt/disk/api.py:386

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 107] [24770] Append file path=root/.ssh/authorized_keys append_file /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:107

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/root/.ssh/authorized_keys execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.openstack.common.rpc.amqp 583] [24770] Making synchronous call on conductor ... multicall /usr/lib/python2.7/dist-packages/nova/openstack/common/rpc/amqp.py:583

- 2013-06-17 09:46:47 DEBUG [nova.openstack.common.rpc.amqp 586] [24770] MSG_ID is 56a11872137f46998a7dac3acb225b83 multicall /usr/lib/python2.7/dist-packages/nova/openstack/common/rpc/amqp.py:586

- 2013-06-17 09:46:47 DEBUG [nova.openstack.common.rpc.amqp 337] [24770] UNIQUE_ID is d355a1b88fcc45709f184272ec22e903. _add_unique_id /usr/lib/python2.7/dist-packages/nova/openstack/common/rpc/amqp.py:337

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf tee -a /tmp/openstack-vfs-localfsqvWMch/root/.ssh/authorized_keys execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 139] [24770] Set permissions path=root/.ssh/authorized_keys mode=600 set_permissions /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:139

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/root/.ssh/authorized_keys execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf chmod 600 /tmp/openstack-vfs-localfsqvWMch/root/.ssh/authorized_keys execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.virt.disk.vfs.localfs 131] [24770] Has file path=etc/selinux has_file /usr/lib/python2.7/dist-packages/nova/virt/disk/vfs/localfs.py:131

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -nm /tmp/openstack-vfs-localfsqvWMch/etc/selinux execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -e /tmp/openstack-vfs-localfsqvWMch/etc/selinux execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:48 DEBUG [nova.utils 232] [24770] Result was 1 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:48 DEBUG [nova.virt.disk.mount.api 203] [24770] Umount /dev/nbd6p1 unmnt_dev /usr/lib/python2.7/dist-packages/nova/virt/disk/mount/api.py:203

- 2013-06-17 09:46:48 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf umount /dev/nbd6p1 execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:49 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232

- 2013-06-17 09:46:49 DEBUG [nova.virt.disk.mount.api 179] [24770] Unmap dev /dev/nbd6 unmap_dev /usr/lib/python2.7/dist-packages/nova/virt/disk/mount/api.py:179

- 2013-06-17 09:46:49 DEBUG [nova.virt.disk.mount.nbd 126] [24770] Release nbd device /dev/nbd6 unget_dev /usr/lib/python2.7/dist-packages/nova/virt/disk/mount/nbd.py:126

- 2013-06-17 09:46:49 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf qemu-nbd -d /dev/nbd6 execute /usr/lib/python2.7/dist-packages/nova/utils.py:208

- 2013-06-17 09:46:49 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232
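Reading a log like this by eye is tedious, so here is a minimal sketch that pairs each "Running cmd" line with the next "Result was N" line and lists the commands that returned non-zero. The patterns are assumptions derived from the log format shown in this post, and `nonzero_commands` is a hypothetical helper, not part of nova:

```python
import re

# Assumed line shapes, taken from the nova-compute log in this post.
_CMD = re.compile(r"Running cmd \(subprocess\): (.+?) execute ")
_RESULT = re.compile(r"Result was (-?\d+)")


def nonzero_commands(log_text):
    """Return (command, exit_code) for every command whose result was not 0."""
    pending = None
    failures = []
    for line in log_text.splitlines():
        cmd = _CMD.search(line)
        if cmd:
            pending = cmd.group(1)
            continue
        res = _RESULT.search(line)
        if res and pending is not None:
            code = int(res.group(1))
            if code != 0:
                failures.append((pending, code))
            pending = None
    return failures


log_sample = """\
2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf chmod 700 /tmp/openstack-vfs-localfsqvWMch/root/.ssh execute /usr/lib/python2.7/dist-packages/nova/utils.py:208
2013-06-17 09:46:47 DEBUG [nova.utils 232] [24770] Result was 0 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232
2013-06-17 09:46:47 DEBUG [nova.utils 208] [24770] Running cmd (subprocess): sudo nova-rootwrap /etc/nova/rootwrap.conf readlink -e /tmp/openstack-vfs-localfsqvWMch/etc/selinux execute /usr/lib/python2.7/dist-packages/nova/utils.py:208
2013-06-17 09:46:48 DEBUG [nova.utils 232] [24770] Result was 1 execute /usr/lib/python2.7/dist-packages/nova/utils.py:232
"""

for command, code in nonzero_commands(log_sample):
    print(code, command)
```

In the log above, the only non-zero result is the `readlink -e` probe for etc/selinux, which looks like a plain existence check rather than a failure of the key injection itself.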


2. Analysis

When you hit a problem, Google it. A search turned up the following explanation:

Ubuntu cloud images do not have any ssh HostKey generated inside them (/etc/ssh/ssh_host_{ecdsa,dsa,rsa}_key). The keys are generated by cloud-init after it finds a metadata service. Without a metadata service, they do not get generated. ssh will drop your connections immediately without HostKeys.

So the cause appears to be that the VM cannot get through to 169.254.169.254. The next step is to check the iptables rules on the NetworkNode.

nat table rules on the NetworkNode:

- root@network232:~# ip netns exec qrouter-b147a74b-39bb-4c7a-aed5-19cac4c2df13 iptables-save -t nat

- # Generated by iptables-save v1.4.12 on Mon Jun 17 10:14:57 2013

- *nat

- :PREROUTING ACCEPT [28:8644]

- :INPUT ACCEPT [90:12364]

- :OUTPUT ACCEPT [0:0]

- :POSTROUTING ACCEPT [7:444]

- :quantum-l3-agent-OUTPUT - [0:0]

- :quantum-l3-agent-POSTROUTING - [0:0]

- :quantum-l3-agent-PREROUTING - [0:0]

- :quantum-l3-agent-float-snat - [0:0]

- :quantum-l3-agent-snat - [0:0]

- :quantum-postrouting-bottom - [0:0]

- -A PREROUTING -j quantum-l3-agent-PREROUTING

- -A OUTPUT -j quantum-l3-agent-OUTPUT

- -A POSTROUTING -j quantum-l3-agent-POSTROUTING

- -A POSTROUTING -j quantum-postrouting-bottom

- -A quantum-l3-agent-OUTPUT -d 192.150.73.3/32 -j DNAT --to-destination 10.1.1.4

- -A quantum-l3-agent-OUTPUT -d 192.150.73.4/32 -j DNAT --to-destination 10.1.1.2

- -A quantum-l3-agent-OUTPUT -d 192.150.73.5/32 -j DNAT --to-destination 10.1.1.6

- -A quantum-l3-agent-POSTROUTING ! -i qg-08db2f8b-88 ! -o qg-08db2f8b-88 -m conntrack ! --ctstate DNAT -j ACCEPT

- -A quantum-l3-agent-PREROUTING -d 169.254.169.254/32 -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 9697

- -A quantum-l3-agent-PREROUTING -d 192.150.73.3/32 -j DNAT --to-destination 10.1.1.4

- -A quantum-l3-agent-PREROUTING -d 192.150.73.4/32 -j DNAT --to-destination 10.1.1.2

- -A quantum-l3-agent-PREROUTING -d 192.150.73.5/32 -j DNAT --to-destination 10.1.1.6

- -A quantum-l3-agent-float-snat -s 10.1.1.4/32 -j SNAT --to-source 192.150.73.3

- -A quantum-l3-agent-float-snat -s 10.1.1.2/32 -j SNAT --to-source 192.150.73.4

- -A quantum-l3-agent-float-snat -s 10.1.1.6/32 -j SNAT --to-source 192.150.73.5

- -A quantum-l3-agent-snat -j quantum-l3-agent-float-snat

- -A quantum-l3-agent-snat -s 10.1.1.0/24 -j SNAT --to-source 192.150.73.2

- -A quantum-postrouting-bottom -j quantum-l3-agent-snat

- COMMIT

- # Completed on Mon Jun 17 10:14:57 2013
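The rule that matters in the nat table is the REDIRECT of traffic for 169.254.169.254 to local port 9697. A minimal sketch for locating it in raw `iptables-save -t nat` output follows. `find_metadata_redirect` is a hypothetical helper name, and the sketch assumes the plain command output without the list markers shown in this post:

```python
import shlex


def find_metadata_redirect(iptables_save_output, metadata_ip="169.254.169.254"):
    """Return the port that metadata traffic is redirected to, or None."""
    for raw in iptables_save_output.splitlines():
        line = raw.strip()
        if not line.startswith("-A "):
            continue  # skip comments, table headers and chain declarations
        tokens = shlex.split(line)
        if "REDIRECT" not in tokens or "--to-ports" not in tokens or "-d" not in tokens:
            continue
        destination = tokens[tokens.index("-d") + 1].split("/")[0]
        if destination == metadata_ip:
            return int(tokens[tokens.index("--to-ports") + 1])
    return None


nat_sample = """\
*nat
:quantum-l3-agent-PREROUTING - [0:0]
-A PREROUTING -j quantum-l3-agent-PREROUTING
-A quantum-l3-agent-POSTROUTING ! -i qg-08db2f8b-88 ! -o qg-08db2f8b-88 -m conntrack ! --ctstate DNAT -j ACCEPT
-A quantum-l3-agent-PREROUTING -d 169.254.169.254/32 -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 9697
-A quantum-l3-agent-PREROUTING -d 192.150.73.5/32 -j DNAT --to-destination 10.1.1.6
COMMIT
"""

print(find_metadata_redirect(nat_sample))
```

If this returns None for a router namespace, the redirect rule is missing. In this post it is present, so the investigation has to continue further down the chain.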

filter table rules on the NetworkNode:

- root@network232:~# ip netns exec qrouter-b147a74b-39bb-4c7a-aed5-19cac4c2df13 iptables-save -t filter

- # Generated by iptables-save v1.4.12 on Mon Jun 17 13:10:10 2013

- *filter

- :INPUT ACCEPT [1516:215380]

- :FORWARD ACCEPT [81:12744]

- :OUTPUT ACCEPT [912:85634]

- :quantum-filter-top - [0:0]

- :quantum-l3-agent-FORWARD - [0:0]

- :quantum-l3-agent-INPUT - [0:0]

- :quantum-l3-agent-OUTPUT - [0:0]

- :quantum-l3-agent-local - [0:0]

- -A INPUT -j quantum-l3-agent-INPUT

- -A FORWARD -j quantum-filter-top

- -A FORWARD -j quantum-l3-agent-FORWARD

- -A OUTPUT -j quantum-filter-top

- -A OUTPUT -j quantum-l3-agent-OUTPUT

- -A quantum-filter-top -j quantum-l3-agent-local

- -A quantum-l3-agent-INPUT -d 127.0.0.1/32 -p tcp -m tcp --dport 9697 -j ACCEPT

- COMMIT

- # Completed on Mon Jun 17 13:10:10 2013


Remote metadata server experienced an internal server error.

Next, the metadata agent log shows a similar error:

content=: 404 Not Found. The resource could not be found.

Searching further in the nova-api log reveals the root cause:

ERROR [nova.api.metadata.handler 141] [4541] Failed to get metadata for ip: 192.168.82.232

192.168.82.232 is the IP address of my NetworkNode, yet the metadata is supposed to be fetched from the ControllerNode. Searching the code leads to the following place:

- if CONF.service_quantum_metadata_proxy:

-     meta_data = self._handle_instance_id_request(req)

- else:

-     if req.headers.get('X-Instance-ID'):

-         LOG.warn(

-             _("X-Instance-ID present in request headers. The "

-               "'service_quantum_metadata_proxy' option must be enabled"

-               " to process this header."))

-     meta_data = self._handle_remote_ip_request(req)


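Execution took the else branch, whereas it should take the if branch when nova works together with the Quantum metadata service. Searching for the option service_quantum_metadata_proxy shows that it defaults to False, and /etc/nova/nova.conf did not override it, which is what caused the problem.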
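To make the difference between the two branches concrete, here is a deliberately simplified model. It is not nova's implementation: `lookup_metadata`, the two dictionaries, and the instance ID `vm-uuid-1` are all hypothetical, and the real handlers do additional work that is omitted here. It only illustrates why a lookup by source IP cannot work once the request arrives via the NetworkNode:

```python
def lookup_metadata(headers, remote_ip, by_instance_id, by_fixed_ip, proxy_enabled):
    """Toy model of the branch: pick the lookup key based on the proxy option."""
    if proxy_enabled:
        # Proxy mode: trust the instance ID that the proxy put in the header.
        return by_instance_id.get(headers.get("X-Instance-ID"))
    # Non-proxy mode: identify the instance by the source IP of the request.
    return by_fixed_ip.get(remote_ip)


# Hypothetical data: one instance with the fixed IP used in this post.
by_instance_id = {"vm-uuid-1": {"hostname": "ubuntu-keypair-test"}}
by_fixed_ip = {"10.1.1.6": {"hostname": "ubuntu-keypair-test"}}

# The request is forwarded by the NetworkNode, so nova-api sees the
# NetworkNode's address (192.168.82.232) as the source, not 10.1.1.6.
headers = {"X-Instance-ID": "vm-uuid-1"}
remote_ip = "192.168.82.232"

print(lookup_metadata(headers, remote_ip, by_instance_id, by_fixed_ip, proxy_enabled=False))
print(lookup_metadata(headers, remote_ip, by_instance_id, by_fixed_ip, proxy_enabled=True))
```

With the option off, the lookup key is the NetworkNode's IP, which matches no instance. That is consistent with the "Failed to get metadata for ip: 192.168.82.232" error in the nova-api log.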

That completes the analysis.
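Whether the option is actually overridden can be checked without reading the whole file by hand. The sketch below parses the text of a nova.conf with Python's configparser. It assumes the option lives in the [DEFAULT] section, and `metadata_proxy_enabled` is a hypothetical helper name of my own:

```python
import configparser


def metadata_proxy_enabled(nova_conf_text):
    """Return the effective value of service_quantum_metadata_proxy.

    Falls back to False, the default described in this post,
    when the option is not set in the file.
    """
    # interpolation=None keeps '%' characters in other values from raising errors;
    # strict=False tolerates duplicate keys.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(nova_conf_text)
    return parser.getboolean(
        "DEFAULT", "service_quantum_metadata_proxy", fallback=False
    )


without_override = "[DEFAULT]\nverbose = True\n"
with_override = "[DEFAULT]\nverbose = True\nservice_quantum_metadata_proxy = True\n"

print(metadata_proxy_enabled(without_override))
print(metadata_proxy_enabled(with_override))
```

To run it against a real deployment, pass in the contents of /etc/nova/nova.conf, for example `metadata_proxy_enabled(open("/etc/nova/nova.conf").read())`.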

3. Solution

Set service_quantum_metadata_proxy=True in /etc/nova/nova.conf and restart the process. Then reboot the VM and check its console log output:

- cloud-init start-local running: Mon, 17 Jun 2013 05:45:40 +0000. up 3.44 seconds

- no instance data found in start-local

- ci-info: lo : 1 127.0.0.1 255.0.0.0 .

- ci-info: eth0 : 1 10.1.1.6 255.255.255.0 fa:16:3e:31:f4:52

- ci-info: route-0: 0.0.0.0 10.1.1.1 0.0.0.0 eth0 UG

- ci-info: route-1: 10.1.1.0 0.0.0.0 255.255.255.0 eth0 U

- cloud-init start running: Mon, 17 Jun 2013 05:45:44 +0000. up 7.66 seconds

- found data source: DataSourceEc2

- 2013-06-17 05:45:55,999 - __init__.py[WARNING]: Unhandled non-multipart userdata ''

- Generating public/private rsa key pair.

- Your identification has been saved in /etc/ssh/ssh_host_rsa_key.

- Your public key has been saved in /etc/ssh/ssh_host_rsa_key.pub.

- The key fingerprint is:

- de:04:ec:82:0c:09:d8:b3:12:ac:4a:40:94:81:e7:48 root@ubuntu-keypair-test

- The key's randomart image is:

- +--[ RSA 2048]----+

- |B=o |

- |=E+. . |

- |+=oo o |

- |+.oo . . . |

- |o. o . S . |

- |. o o |

- | . . |

- | |

- | |

- +-----------------+

- Generating public/private dsa key pair.

- Your identification has been saved in /etc/ssh/ssh_host_dsa_key.

- Your public key has been saved in /etc/ssh/ssh_host_dsa_key.pub.

- The key fingerprint is:

- 6e:aa:2a:da:bb:ef:43:8b:14:5a:99:36:64:74:10:c7 root@ubuntu-keypair-test

- The key's randomart image is:

- +--[ DSA 1024]----+

- | .++o |

- | ooE |

- | o o |

- | B |

- | + o S |

- |. . . . |

- | . o . o |

- |... o o |

- |o.=*+o. |

- +-----------------+

- Generating public/private ecdsa key pair.

- Your identification has been saved in /etc/ssh/ssh_host_ecdsa_key.

- Your public key has been saved in /etc/ssh/ssh_host_ecdsa_key.pub.

- The key fingerprint is:

- 66:c0:a3:48:cb:d7:0b:bf:6e:e2:6d:e5:24:3b:66:f7 root@ubuntu-keypair-test

- The key's randomart image is:

- +--[ECDSA 256]---+

- | |

- | . |

- | . + |

- | o o o o |

- | + + . S |

- | . o.+o |

- | o* |

- | ..B.o |

- | ..B+o .E |

- +-----------------+

- * Starting system logging daemon [ OK ]

- * Starting Handle applying cloud-config [ OK ]

- Skipping profile in /etc/apparmor.d/disable: usr.sbin.rsyslogd

- * Starting AppArmor profiles [ OK ]

- landscape-client is not configured, please run landscape-config.

- * Stopping System V initialisation compatibility [ OK ]

- * Starting System V runlevel compatibility [ OK ]

- * Starting automatic crash report generation [ OK ]

- * Starting save kernel messages [ OK ]

- * Starting ACPI daemon [ OK ]

- * Starting regular background program processing daemon [ OK ]

- * Starting deferred execution scheduler [ OK ]

- * Starting CPU interrupts balancing daemon [ OK ]

- * Stopping save kernel messages [ OK ]

- * Starting crash report submission daemon [ OK ]

- * Stopping System V runlevel compatibility [ OK ]

- Generating locales...

- en_US.UTF-8... done

- Generation complete.

- ec2:

- ec2: #############################################################

- ec2: -----BEGIN SSH HOST KEY FINGERPRINTS-----

- ec2: 1024 6e:aa:2a:da:bb:ef:43:8b:14:5a:99:36:64:74:10:c7 root@ubuntu-keypair-test (DSA)

- ec2: 256 66:c0:a3:48:cb:d7:0b:bf:6e:e2:6d:e5:24:3b:66:f7 root@ubuntu-keypair-test (ECDSA)

- ec2: 2048 de:04:ec:82:0c:09:d8:b3:12:ac:4a:40:94:81:e7:48 root@ubuntu-keypair-test (RSA)

- ec2: -----END SSH HOST KEY FINGERPRINTS-----

- ec2: #############################################################

- -----BEGIN SSH HOST KEY KEYS-----

- ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBCwe6gbVpdgs1dOskAl8M42wwaTZJdfGV3JslsDy9g04f4/JCGJskDSm4Tgv9d4p+a6G85/NofsZSbmj8/6nWZ8= root@ubuntu-keypair-test

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDUrTgq3oTDuw1Bvh62LaYOOxjsEkfOk9IIVOdqASG5c2ExucIAKdRZY8XqlmoN3d64VI65ArsBWQ+PeuofUFfE5z8DvFr13ieNlLw8VgD46TGZ9XYLzZgs1CpN1evoU6Np3NN8q3CihprzcBCh7uKlAsgmwULh22+vDJPMnJamtn0Nk3NVtLJKqyujoN/pEIsWYouyBOJIKWjPLUPnGRpVeqQ1NkRED5w2SHbK9I49e6fItPnA9jVdTG06K2/xThXVUjVE3iwXr/uHMfNpJoejZzSqCmdhD68pIMleOI/Hd6+RPMJurw5CVYvdLOv4lWQMOEOpBzzXSp44JMlN3AKP root@ubuntu-keypair-test

- -----END SSH HOST KEY KEYS-----

- cloud-init boot finished at Mon, 17 Jun 2013 05:46:31 +0000. Up 54.86 seconds

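
The point of reading this console log is to confirm that cloud-init found its data source and generated the ssh host keys. As a rough sketch, the fingerprint lines can be pulled out of the log text as shown below; the regular expression and the helper name `host_key_fingerprints` are my own illustration, written against the `ec2:` line format seen in the output above.

```python
import re

# Matches lines such as:
# ec2: 2048 de:04:...:48 root@ubuntu-keypair-test (RSA)
FINGERPRINT_LINE = re.compile(
    r"ec2:\s+(\d+)\s+((?:[0-9a-f]{2}:){15}[0-9a-f]{2})\s+\S+\s+\((\w+)\)"
)


def host_key_fingerprints(console_log):
    """Map each key type (e.g. 'RSA') to its fingerprint in the log text."""
    return {
        match.group(3): match.group(2)
        for match in FINGERPRINT_LINE.finditer(console_log)
    }


# Two lines copied from the console output above.
sample_log = (
    "ec2: 1024 6e:aa:2a:da:bb:ef:43:8b:14:5a:99:36:64:74:10:c7 "
    "root@ubuntu-keypair-test (DSA)\n"
    "ec2: 2048 de:04:ec:82:0c:09:d8:b3:12:ac:4a:40:94:81:e7:48 "
    "root@ubuntu-keypair-test (RSA)\n"
)

for key_type, fingerprint in host_key_fingerprints(sample_log).items():
    print(key_type, fingerprint)
```

An empty result would suggest that no host keys were generated, which is the state in which sshd resets incoming connections.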

Log in to the VM over ssh from the NetworkNode again: