OpenCV 2.4.9: Extracting and Matching Image Feature Points

OpenCV image feature point extraction and matching (Part 2)

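The previous part gave a rough overview of how feature detection is implemented in OpenCV. This part looks at how OpenCV generates descriptors for feature points and how those descriptors are matched. The feature points that OpenCV detects are stored in a vector whose elements are of type KeyPoint. All classes that compute feature descriptors derive from the DescriptorExtractor class. Descriptor matching is implemented by the DescriptorMatcher class, and the matching result is stored in a vector whose elements are of type DMatch. Each DMatch stores the indices of the two matched descriptors within their respective descriptor sets, together with the distance between them (the Euclidean distance when the matcher uses NORM_L2).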

Let us first look at the DescriptorExtractor class, which is used to generate feature descriptors. Its declaration is as follows:

class CV_EXPORTS DescriptorExtractor
{
public:
    virtual ~DescriptorExtractor();

    void compute( const Mat& image, vector<KeyPoint>& keypoints,
                  Mat& descriptors ) const;
    void compute( const vector<Mat>& images, vector<vector<KeyPoint> >& keypoints,
                  vector<Mat>& descriptors ) const;

    virtual void read( const FileNode& );
    virtual void write( FileStorage& ) const;

    virtual int descriptorSize() const = 0;
    virtual int descriptorType() const = 0;

    static Ptr<DescriptorExtractor> create( const string& descriptorExtractorType );

protected:
    ...
};

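In this interface, the descriptor of a keypoint is represented as a dense vector of fixed dimension. A set of descriptors is represented as a Mat in which each row is the descriptor of one keypoint: the number of rows equals the number of keypoints, and the number of columns equals the descriptor dimension.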
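
To make the row-per-keypoint layout concrete, here is a minimal sketch that uses only the standard library. DescriptorTable is a hypothetical stand-in for the descriptor Mat, not an OpenCV type, and it assumes float descriptors stored row by row.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a descriptor matrix (not an OpenCV class):
// one row per keypoint, one column per descriptor dimension, stored row by row.
struct DescriptorTable {
    std::size_t rows;         // number of keypoints
    std::size_t cols;         // descriptor dimension (e.g. 128 for SIFT)
    std::vector<float> data;  // rows * cols values

    DescriptorTable(std::size_t r, std::size_t c)
        : rows(r), cols(c), data(r * c, 0.0f) {}

    // Pointer to the first element of the descriptor of keypoint i.
    float* row(std::size_t i) { return data.data() + i * cols; }
    const float* row(std::size_t i) const { return data.data() + i * cols; }
};
```

With this layout, element k of the descriptor of keypoint i sits at offset i * cols + k, which is why the descriptor of the i-th keypoint can be read as a single row.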

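A specific descriptor extractor can be created by name through the static member function create:

static Ptr<DescriptorExtractor> create( const string& descriptorExtractorType );

The following descriptor extractor types are supported, among others:

"SIFT" - SiftDescriptorExtractor

"SURF" - SurfDescriptorExtractor

"ORB" - OrbDescriptorExtractor

"BRIEF" - BriefDescriptorExtractor

Combined names are also supported: the name of a descriptor extractor adapter ("Opponent" - OpponentColorDescriptorExtractor) followed by the name of a descriptor extractor, for example "OpponentSIFT".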

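Several subclasses derive from DescriptorExtractor to compute different types of descriptors, for example: SiftDescriptorExtractor (the source code actually defines the class SIFT; the two names are equivalent, see Part 1 of this series for details), SurfDescriptorExtractor (equivalent to the SURF class), OrbDescriptorExtractor (equivalent to ORB), BriefDescriptorExtractor, CalonderDescriptorExtractor, and OpponentColorDescriptorExtractor (which computes descriptors in the opponent color space).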

The descriptors themselves are computed by the compute member function of the DescriptorExtractor class.
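
The combined naming scheme accepted by create ("Opponent" followed by a base extractor name) can be illustrated with a small sketch. splitExtractorName is a hypothetical helper written for this article, not an OpenCV function, and it only shows how such a name could be split; it does not construct any extractor.

```cpp
#include <string>
#include <utility>

// Hypothetical helper, not part of OpenCV: splits a name such as
// "OpponentSIFT" into (uses the Opponent adapter?, base extractor name).
std::pair<bool, std::string> splitExtractorName(const std::string& name)
{
    const std::string prefix = "Opponent";
    // compare() returns 0 only when the name starts with the prefix.
    if (name.compare(0, prefix.size(), prefix) == 0)
        return std::make_pair(true, name.substr(prefix.size()));
    return std::make_pair(false, name);
}
```

For example, "OpponentSIFT" splits into the adapter flag plus the base name "SIFT", while "SURF" is returned unchanged with the flag set to false.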

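Matching feature descriptors:

First, you need to understand the DMatch struct. It encapsulates the properties of a match between descriptors: the indices of the matched descriptors, the index of the train image, the distance between the descriptors, and so on. The code is as follows: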

struct DMatch
{
    DMatch() : queryIdx(-1), trainIdx(-1), imgIdx(-1),
               distance(std::numeric_limits<float>::max()) {}
    DMatch( int _queryIdx, int _trainIdx, float _distance ) :
            queryIdx(_queryIdx), trainIdx(_trainIdx), imgIdx(-1),
            distance(_distance) {}
    DMatch( int _queryIdx, int _trainIdx, int _imgIdx, float _distance ) :
            queryIdx(_queryIdx), trainIdx(_trainIdx), imgIdx(_imgIdx),
            distance(_distance) {}

    int queryIdx; // query descriptor index
    int trainIdx; // train descriptor index
    int imgIdx;   // train image index

    float distance;

    // less is better
    bool operator<( const DMatch &m ) const;
};
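
Because a smaller distance means a better match ("less is better"), a vector of matches can be sorted directly to bring the best ones to the front. The sketch below uses a stand-in struct named Match with the same fields as DMatch, so that it compiles without OpenCV. Both Match and the bestMatches helper are hypothetical, and the comparison by distance is an assumption based on the comment in the declaration above.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Minimal stand-in with the same fields as the DMatch struct shown above.
struct Match {
    int queryIdx;
    int trainIdx;
    int imgIdx;
    float distance;
    // Smaller distance means a better match, so it sorts first.
    bool operator<(const Match& m) const { return distance < m.distance; }
};

// Hypothetical helper: returns the n best matches, best first.
std::vector<Match> bestMatches(std::vector<Match> matches, std::size_t n)
{
    std::sort(matches.begin(), matches.end());
    if (matches.size() > n)
        matches.resize(n);
    return matches;
}
```

Keeping only the first few sorted matches is a simple way to make the drawn result easier to read when an image pair produces hundreds of matches.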

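DescriptorMatcher is the base class for matching keypoint descriptors. It is mainly used to match the descriptors of two images, or the descriptors of one image against those of a set of images. There are two main matching methods, both implemented as subclasses of DescriptorMatcher: BFMatcher and FlannBasedMatcher.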

The BFMatcher constructor is declared as follows:

BFMatcher::BFMatcher( int normType=NORM_L2, bool crossCheck=false )

normType can take the following values:

NORM_L1, NORM_L2, NORM_HAMMING, NORM_HAMMING2.

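For SIFT and SURF descriptors, NORM_L1 and NORM_L2 are generally recommended. NORM_HAMMING is generally used with ORB, BRISK, and BRIEF. NORM_HAMMING2 should be used with ORB when the WTA_K parameter of the ORB constructor is 3 or 4.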
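
The difference between these norms is easier to see in code. The following sketch implements the L1, L2, and Hamming distances on plain vectors. The function names are hypothetical and this is not how OpenCV computes them internally; both inputs are assumed to have the same length. Floating-point descriptors such as SIFT and SURF are compared with L1 or L2, while binary descriptors such as ORB and BRIEF are compared by counting differing bits.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Sum of absolute differences (the idea behind NORM_L1).
float l1Distance(const std::vector<float>& a, const std::vector<float>& b)
{
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i)
        sum += std::fabs(a[i] - b[i]);
    return sum;
}

// Euclidean distance (the idea behind NORM_L2).
float l2Distance(const std::vector<float>& a, const std::vector<float>& b)
{
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Number of differing bits between two binary descriptors (NORM_HAMMING).
int hammingDistance(const std::vector<unsigned char>& a,
                    const std::vector<unsigned char>& b)
{
    int bits = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        unsigned char x = static_cast<unsigned char>(a[i] ^ b[i]);
        while (x) {          // count the set bits of the XOR
            bits += x & 1;
            x >>= 1;
        }
    }
    return bits;
}
```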

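The crossCheck parameter: when it is false, the matcher uses its default behavior and finds the k nearest neighbors of each query descriptor. When it is true, the matcher returns only mutually consistent pairs (i, j), that is, pairs in which train descriptor j is the nearest neighbor of query descriptor i and query descriptor i is also the nearest neighbor of train descriptor j.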
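
The idea behind cross-checking can be sketched as a brute-force search for mutual nearest neighbors. The code below is an illustration written with the standard library only, under the assumption of float descriptors compared by squared Euclidean distance. The names Descriptor, nearest, and crossCheckedMatches are hypothetical, and this is not BFMatcher's actual implementation.

```cpp
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

typedef std::vector<float> Descriptor;

// Squared Euclidean distance; sufficient for ranking neighbors.
static float squaredL2(const Descriptor& a, const Descriptor& b)
{
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Index of the descriptor in `set` closest to `d`, or -1 if `set` is empty.
static int nearest(const Descriptor& d, const std::vector<Descriptor>& set)
{
    int best = -1;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t i = 0; i < set.size(); ++i) {
        const float dist = squaredL2(d, set[i]);
        if (dist < bestDist) {
            bestDist = dist;
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Returns (queryIdx, trainIdx) pairs that are each other's nearest neighbor.
std::vector<std::pair<int, int> > crossCheckedMatches(
    const std::vector<Descriptor>& query, const std::vector<Descriptor>& train)
{
    std::vector<std::pair<int, int> > result;
    for (std::size_t q = 0; q < query.size(); ++q) {
        const int t = nearest(query[q], train);
        // Keep the pair only if the match also holds in the reverse direction.
        if (t >= 0 && nearest(train[t], query) == static_cast<int>(q))
            result.push_back(std::make_pair(static_cast<int>(q), t));
    }
    return result;
}
```

A query descriptor whose nearest train descriptor "prefers" a different query descriptor is dropped, which is why cross-checking tends to return fewer but more consistent matches.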

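The FlannBasedMatcher class: finds the best matches using nearest-neighbor search built on the FLANN library. The search is approximate, and it can be faster than BFMatcher when matching against a large set of descriptors.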

A program that performs matching with BFMatcher is shown below:

#include <opencv2/core/core.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/nonfree/features2d.hpp>
#include <iostream>

using namespace std;
using namespace cv;

void readme();

int main(int argc, char* argv[])
{
    // Load both images as grayscale.
    Mat img1 = imread("box.png", CV_LOAD_IMAGE_GRAYSCALE);
    Mat img2 = imread("box_in_scene.png", CV_LOAD_IMAGE_GRAYSCALE);
    if (!img1.data || !img2.data)
    {
        cout << "Error reading images!!" << endl;
        return -1;
    }

    // Detect SURF keypoints in each image.
    SurfFeatureDetector detector;
    vector<KeyPoint> keypoints1, keypoints2;
    detector.detect(img1, keypoints1, Mat());
    detector.detect(img2, keypoints2, Mat());

    // Compute one descriptor row per keypoint.
    SurfDescriptorExtractor extractor;
    Mat descriptor1, descriptor2;
    extractor.compute(img1, keypoints1, descriptor1);
    extractor.compute(img2, keypoints2, descriptor2);

    // Brute-force matching with the L2 norm: img1 is the query, img2 the train set.
    //FlannBasedMatcher matcher;
    BFMatcher matcher(NORM_L2);
    vector<DMatch> matches;
    matcher.match(descriptor1, descriptor2, matches, Mat());

    // Draw the matches side by side and display them.
    Mat imgmatches;
    drawMatches(img1, keypoints1, img2, keypoints2, matches, imgmatches,
                Scalar::all(-1), Scalar::all(-1));
    imshow("Matches", imgmatches);
    waitKey(0);
    return 0;
}

void readme()
{
    cout << " Usage: ./SURF_descriptor <img1> <img2>" << endl;
}

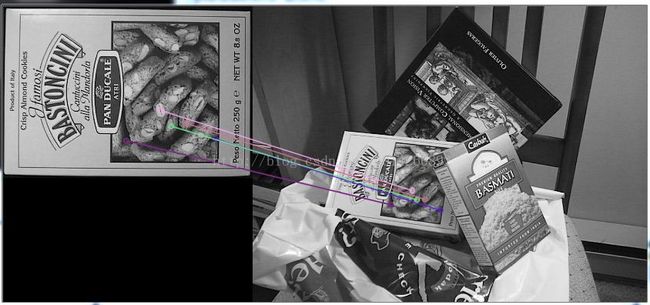

Feature detection and matching result:

Matching with FlannBasedMatcher, keeping only the matches whose distance is less than twice the minimum distance. The program is as follows:

#include <opencv2/core/core.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/nonfree/features2d.hpp>
#include <cstdio>
#include <iostream>
#include <limits>

using namespace std;
using namespace cv;

int main(int argc, char* argv[])
{
    // Load both images as grayscale.
    Mat img1 = imread("box.png", CV_LOAD_IMAGE_GRAYSCALE);
    Mat img2 = imread("box_in_scene.png", CV_LOAD_IMAGE_GRAYSCALE);
    if (!img1.data || !img2.data)
    {
        cout << "Error reading images!!" << endl;
        return -1;
    }

    // Detect SURF keypoints in each image.
    SurfFeatureDetector detector;
    vector<KeyPoint> keypoints1, keypoints2;
    detector.detect(img1, keypoints1, Mat());
    detector.detect(img2, keypoints2, Mat());

    // Compute one descriptor row per keypoint.
    SurfDescriptorExtractor extractor;
    Mat descriptor1, descriptor2;
    extractor.compute(img1, keypoints1, descriptor1);
    extractor.compute(img2, keypoints2, descriptor2);

    // Match with FLANN: img1 is the query, img2 the train set.
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptor1, descriptor2, matches, Mat());

    // Find the smallest and largest match distances.
    double dist_max = 0;
    double dist_min = numeric_limits<double>::max();
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < dist_min)
            dist_min = dist;
        if (dist > dist_max)
            dist_max = dist;
    }
    printf("Max distance: %f \n", dist_max);
    printf("Min distance: %f \n", dist_min);

    // Keep only the matches whose distance is below twice the minimum.
    vector<DMatch> goodmatches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 2 * dist_min)
            goodmatches.push_back(matches[i]);
    }

    // Draw only the good matches, without unmatched keypoints.
    Mat imgout;
    drawMatches(img1, keypoints1, img2, keypoints2, goodmatches, imgout,
                Scalar::all(-1), Scalar::all(-1), vector<char>(),
                DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("Matches", imgout);

    for (size_t i = 0; i < goodmatches.size(); i++)
    {
        printf("Good Matches[%d] keypoint 1: %d -- keypoint 2: %d\n",
               (int)i, goodmatches[i].queryIdx, goodmatches[i].trainIdx);
    }

    waitKey(0);
    return 0;
}
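
The filtering step of this program can be isolated and tested without OpenCV. One caveat of the "less than twice the minimum distance" rule is that it keeps nothing when the minimum distance is exactly 0. The sketch below therefore adds a lower bound on the threshold; the floor parameter and the goodMatchIndices helper are assumptions introduced here for illustration and are not part of the program above.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns the indices of the matches whose distance is
// below max(factor * minimum distance, floor).
std::vector<std::size_t> goodMatchIndices(const std::vector<float>& distances,
                                          float factor, float floor)
{
    std::vector<std::size_t> kept;
    if (distances.empty())
        return kept;
    const float minDist = *std::min_element(distances.begin(), distances.end());
    const float threshold = std::max(factor * minDist, floor);
    for (std::size_t i = 0; i < distances.size(); ++i)
        if (distances[i] < threshold)
            kept.push_back(i);
    return kept;
}
```

Calling it with factor 2 and floor 0 reproduces the rule used in the program; a small positive floor keeps the best matches even when two descriptors happen to be identical.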

Feature detection and matching result:

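All of the programs above were run with OpenCV 2.4.9 + VS2010 + Windows 7. If you find any mistakes, please point them out so that we can learn from each other and improve together!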