Kuryr Integration: Networking for Baremetal Containers

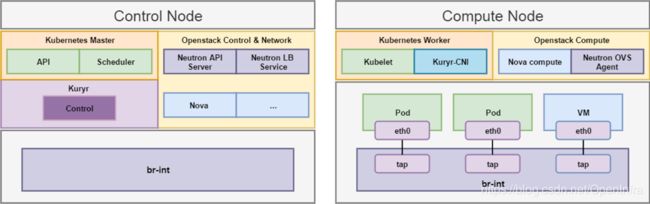

Overview

Installing OpenStack with packstack

Preparation

Run these steps on both the controller node and the compute node.

Disable the firewall

systemctl stop firewalld
systemctl disable firewalld

Disable SELinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
setenforce 0
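The sed above only matches `SELINUX=enforcing`, so a host whose config already says `permissive` is left as it is, and `setenforce 0` itself only switches the running system to permissive mode until the next reboot. A slightly more general sketch of the config edit, assuming the usual `SELINUX=` / `SELINUXTYPE=` layout of /etc/selinux/config; the function name `disable_selinux_config` is hypothetical, and the file path is a parameter so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Set SELinux to "disabled" in the given config file (default: the system file).
# The pattern matches only the SELINUX= key, never SELINUXTYPE= or comments.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Succeed only if the key now reads exactly "disabled"
    grep -qx 'SELINUX=disabled' "$cfg"
}
```

For example, `cp /etc/selinux/config /tmp/selinux.test && disable_selinux_config /tmp/selinux.test` shows the result without touching the real file.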

Switch yum to the Aliyun mirror

yum -y install wget
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum -y update

Install the packages

Run these steps on both the controller node and the compute node.

yum install -y centos-release-openstack-rocky
yum install -y openstack-packstack

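Generate and edit the answer file on the controller node

On the controller node, run the following command to generate the answer file.

packstack --gen-answer-file answer.txt

Edit the generated answer.txt and set the following options, using the actual IP addresses of the controller and compute nodes.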

# Disable non-essential components to shorten the installation
CONFIG_CINDER_INSTALL=n
CONFIG_MANILA_INSTALL=n
CONFIG_SWIFT_INSTALL=n
CONFIG_AODH_INSTALL=n
CONFIG_CONTROLLER_HOST=<controller/network node IP>
CONFIG_COMPUTE_HOSTS=<compute node IP>
CONFIG_NETWORK_HOSTS=<controller/network node IP>
# Enable LBaaS
CONFIG_LBAAS_INSTALL=y
CONFIG_NEUTRON_METERING_AGENT_INSTALL=n
CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vxlan,flat
CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vxlan
# Switch the default OVN backend to OVS
CONFIG_NEUTRON_ML2_MECHANISM_DRIVERS=openvswitch
CONFIG_NEUTRON_L2_AGENT=openvswitch
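Instead of editing answer.txt by hand, each option can be set from the shell. A minimal sketch with a hypothetical `set_answer` helper; it assumes the generated answer file already contains every key (so a missing key is treated as a typo) and that values contain neither `|` nor `&`, which are special in the sed expression:

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a packstack answer file by rewriting the existing
# uncommented "KEY=" line. Returns non-zero if the key is not present.
# Limitation: VALUE must not contain "|" or "&" (sed delimiter / match ref).
set_answer() {
    local file=$1 key=$2 value=$3
    grep -q "^${key}=" "$file" || return 1
    # "|" as the delimiter so values containing "/" (URLs, CIDRs) still work
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
}
```

For example, `set_answer answer.txt CONFIG_LBAAS_INSTALL y`. Failing on an unknown key, rather than appending it, makes misspelled option names visible before packstack runs.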

Run the installation on the controller node

packstack --answer-file answer.txt

Prepare the project, user, and networks that K8S needs in OpenStack

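The K8S resources can be created either in the dashboard or from the command line. The network resources below are meant to belong to the k8s project: run the commands with the k8s-user credentials, or, as admin, add --project k8s to each create command.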

Create a dedicated project and user for K8S

openstack project create k8s
openstack user create --password 99cloud k8s-user
openstack role add --project k8s --user k8s-user admin

Create the pod network and subnet and the service network and subnet in the K8S project

openstack network create pod_network
openstack network create service_network
openstack subnet create --ip-version 4 --subnet-range 10.1.0.0/16 --network pod_network pod_subnet
openstack subnet create --ip-version 4 --subnet-range 10.2.0.0/16 --network service_network service_subnet

Create a router in the K8S project to connect the pod subnet and the service subnet

openstack router create k8s-router
openstack router add subnet k8s-router pod_subnet
openstack router add subnet k8s-router service_subnet

Create the pod security group in the K8S project

openstack security group create service_pod_access_sg
openstack security group rule create --remote-ip 10.1.0.0/16 --ethertype IPv4 --protocol tcp service_pod_access_sg
openstack security group rule create --remote-ip 10.2.0.0/16 --ethertype IPv4 --protocol tcp service_pod_access_sg

Note: record the project ID, user name, password, pod subnet ID, service subnet ID, and security group ID created above; they are needed later to configure the Kuryr controller.
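Rather than copying the IDs from the dashboard, they can be read back with the openstack CLI's `-f value -c id` output options. A sketch, assuming the resource names used above and credentials that can see the k8s project; `resource_id` and `collect_kuryr_ids` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Print the ID of a named OpenStack resource.
# Usage: resource_id NAME TYPE...   e.g. resource_id pod_subnet subnet
# TYPE may be several words, e.g. "security group".
resource_id() {
    local name=$1
    shift
    openstack "$@" show "$name" -f value -c id
}

# Print the values kuryr.conf needs as option=value lines,
# using the resource names created in the steps above.
collect_kuryr_ids() {
    echo "project=$(resource_id k8s project)"
    echo "pod_subnet=$(resource_id pod_subnet subnet)"
    echo "service_subnet=$(resource_id service_subnet subnet)"
    echo "pod_security_groups=$(resource_id service_pod_access_sg security group)"
}
```

Running `collect_kuryr_ids` prints one line per value, named after the corresponding `[neutron_defaults]` options in kuryr.conf; the user name and password are already known and are not looked up.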

Installing Kubernetes with kubeadm

Preparation

Master node

The following steps are run on both the master node and the worker node.

Disable swap

swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab

Configure the bridge netfilter parameters

cat <
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
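After `sysctl --system`, both values should read 1. If sysctl reports that the `net.bridge.*` keys do not exist, the bridge netfilter module (`br_netfilter`) is likely not loaded yet. A small check as a sketch; `check_bridge_nf` is a hypothetical name, and the /proc/sys root is a parameter so the function can be exercised against a scratch directory:

```shell
#!/usr/bin/env bash
# Verify that both bridge-netfilter sysctls are set to 1.
# Argument: sysctl root directory (default: the live /proc/sys).
check_bridge_nf() {
    local root=${1:-/proc/sys} key val rc=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # A missing file usually means the br_netfilter module is not loaded
        val=$(cat "$root/net/bridge/$key" 2>/dev/null) || val=missing
        if [ "$val" != 1 ]; then
            echo "net.bridge.$key=$val" >&2
            rc=1
        fi
    done
    return $rc
}
```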

Configure the Aliyun Kubernetes repository

cat <
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Configure the Docker repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -P /etc/yum.repos.d/

Install Docker

yum install docker-ce-18.06.1.ce -y

Configure a registry mirror

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://hdi5v8p1.mirror.aliyuncs.com"]
}
EOF

Start Docker

systemctl daemon-reload
systemctl enable docker
systemctl start docker

Install the Kubernetes components

yum install kubelet-1.12.2 kubeadm-1.12.2 kubectl-1.12.2 kubernetes-cni-0.6.0 -y
systemctl enable kubelet && systemctl start kubelet
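The version 1.12.2 appears in this yum command, in the image scripts and in `kubeadm init --kubernetes-version`, and kubelet, kubeadm and kubectl are meant to be installed at matching versions. One way to keep them together is to derive the package list from a single variable; a sketch, with the names `K8S_VERSION`, `CNI_VERSION` and `k8s_packages` chosen for illustration only:

```shell
#!/usr/bin/env bash
# Single source of truth for the pinned versions (overridable from the env).
K8S_VERSION=${K8S_VERSION:-1.12.2}
CNI_VERSION=${CNI_VERSION:-0.6.0}

# Print the yum package list so kubelet, kubeadm and kubectl never drift apart.
k8s_packages() {
    local pkg
    for pkg in kubelet kubeadm kubectl; do
        printf '%s-%s ' "$pkg" "$K8S_VERSION"
    done
    printf 'kubernetes-cni-%s\n' "$CNI_VERSION"
}
```

Used as `yum install -y $(k8s_packages)`, it expands to the same package list as the command above.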

Enable IPVS

Load the IPVS kernel modules so that kube-proxy on the nodes can use IPVS proxy rules.

modprobe ip_vs_rr
modprobe ip_vs_wrr
modprobe ip_vs_sh

cat <
modprobe ip_vs_rr
modprobe ip_vs_wrr
modprobe ip_vs_sh
EOF
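`lsmod | grep ip_vs` is the quickest manual check; `lsmod` reads /proc/modules, whose first column is the module name, and `ip_vs` itself is pulled in as a dependency of the scheduler modules. Note that kube-proxy only uses IPVS when its mode is set to `ipvs`; loading the modules just makes that possible. A scripted check as a sketch, with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Print the IPVS modules (from the list loaded above, plus ip_vs itself)
# that are not loaded. Reads a /proc/modules-style file (default: the live one).
# Returns 0 only when nothing is missing.
missing_ipvs_modules() {
    local modfile=${1:-/proc/modules} mod missing=()
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        # Exact match on the first column (module name)
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modfile" \
            || missing+=("$mod")
    done
    [ ${#missing[@]} -eq 0 ] || { printf '%s\n' "${missing[@]}"; return 1; }
}
```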

Pull the images

cat <
#!/bin/bash
kube_version=:v1.12.2
kube_images=(kube-proxy kube-scheduler kube-controller-manager kube-apiserver)
addon_images=(etcd-amd64:3.2.24 coredns:1.2.2 pause-amd64:3.1)

for imageName in \${kube_images[@]} ; do
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName-amd64\$kube_version
    docker image tag registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName-amd64\$kube_version k8s.gcr.io/\$imageName\$kube_version
    docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName-amd64\$kube_version
done

for imageName in \${addon_images[@]} ; do
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName
    docker image tag registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName k8s.gcr.io/\$imageName
    docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/\$imageName
done

docker tag k8s.gcr.io/etcd-amd64:3.2.24 k8s.gcr.io/etcd:3.2.24
docker image rm k8s.gcr.io/etcd-amd64:3.2.24
docker tag k8s.gcr.io/pause-amd64:3.1 k8s.gcr.io/pause:3.1
docker image rm k8s.gcr.io/pause-amd64:3.1
EOF

chmod u+x master.sh
./master.sh
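The retagging in master.sh follows one rule: core components are pulled from the Aliyun mirror with an `-amd64` suffix and retagged under k8s.gcr.io without it. A dry-run sketch that prints the commands for one component instead of running them, which is handy for comparing against the list that `kubeadm config images list --kubernetes-version v1.12.2` should print; `plan_core_image`, `MIRROR` and `KUBE_VERSION` are names introduced here for illustration:

```shell
#!/usr/bin/env bash
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
KUBE_VERSION=v1.12.2

# Print (do not run) the pull/tag/rm commands master.sh issues for one
# core component: the mirror name carries "-amd64", the target does not.
plan_core_image() {
    local name=$1
    local src="$MIRROR/$name-amd64:$KUBE_VERSION"
    local dst="k8s.gcr.io/$name:$KUBE_VERSION"
    printf 'docker pull %s\ndocker image tag %s %s\ndocker image rm %s\n' \
        "$src" "$src" "$dst" "$src"
}
```

For example: `for n in kube-proxy kube-scheduler kube-controller-manager kube-apiserver; do plan_core_image "$n"; done`.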

Install the master node with kubeadm init

Note that the pod network CIDR and service CIDR passed here must match the CIDRs of the pod subnet and service subnet created in OpenStack earlier.
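A quick way to catch a mismatch before running kubeadm init is to compare the CIDRs as networks rather than as strings, so that, for example, 10.1.0.1/16 and 10.1.0.0/16 are treated as the same subnet. A sketch in plain bash arithmetic (IPv4 only; `cidr_normalize` and `same_cidr` are hypothetical names):

```shell
#!/usr/bin/env bash
# Reduce an IPv4 CIDR to its network address: 10.1.3.7/16 -> 10.1.0.0/16.
cidr_normalize() {
    local ip=${1%/*} len=${1#*/} a b c d
    IFS=. read -r a b c d <<< "$ip"
    # Pack the four octets into one integer (bash arithmetic is 64-bit)
    local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    n=$(( n & mask ))
    printf '%d.%d.%d.%d/%d\n' $(( n >> 24 & 255 )) $(( n >> 16 & 255 )) \
        $(( n >> 8 & 255 )) $(( n & 255 )) "$len"
}

# Succeed when two CIDRs describe the same network.
same_cidr() {
    [ "$(cidr_normalize "$1")" = "$(cidr_normalize "$2")" ]
}
```

For example, `same_cidr "$(openstack subnet show pod_subnet -f value -c cidr)" 10.1.0.0/16 && echo match`.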

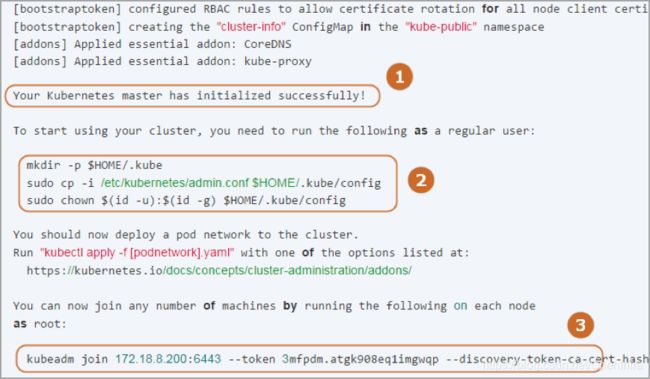

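kubeadm init --kubernetes-version=v1.12.2 --pod-network-cidr=10.1.0.0/16 --service-cidr=10.2.0.0/16

When the command succeeds, it prints output similar to the screenshot above:

① indicates that the Kubernetes master node was installed successfully.

② Run the commands listed there as instructed.

③ Save this command; it is needed later to add Kubernetes nodes.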
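The commands kubeadm prints at ② normally copy the admin kubeconfig to the location kubectl reads by default: `mkdir -p $HOME/.kube`, `sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config` and `sudo chown $(id -u):$(id -g) $HOME/.kube/config`. The same steps as a function, sketched with the paths as parameters so it can be tried on scratch files; the function name is hypothetical, `cp -i` is replaced by a plain `cp` because the function is meant to run non-interactively, and the `chmod 600` is an addition, not part of the kubeadm output:

```shell
#!/usr/bin/env bash
# Install the admin kubeconfig as <home>/.kube/config for the current user.
# Arguments: source kubeconfig (default /etc/kubernetes/admin.conf), home dir.
install_kubeconfig() {
    local src=${1:-/etc/kubernetes/admin.conf} home=${2:-$HOME}
    mkdir -p "$home/.kube"
    cp "$src" "$home/.kube/config"
    chown "$(id -u):$(id -g)" "$home/.kube/config"
    # The file holds cluster-admin credentials; keep it private
    chmod 600 "$home/.kube/config"
}
```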

Configure flannel

flannel is configured here only to quickly check that Kubernetes works before integrating Kuryr.

Download the manifest

curl -LO https://raw.githubusercontent.com/coreos/flannel/bc79dd1505b0c8681ece4de4c0d86c5cd2643275/Documentation/kube-flannel.yml

Change the pod network CIDR

sed -i "s/10.244.0.0/10.1.0.0/g" kube-flannel.yml
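The sed above assumes flannel's default network, 10.244.0.0/16, appears in the manifest's `net-conf.json` as the `"Network"` entry. A sketch that performs the same substitution and then confirms the entry changed; `set_flannel_network` is a hypothetical name, and the check assumes the `"Network": "<cidr>"` formatting of that file:

```shell
#!/usr/bin/env bash
# Replace flannel's default pod CIDR in the manifest with NEW_NET and
# confirm that net-conf.json's "Network" entry now carries it.
set_flannel_network() {
    local manifest=$1 new_net=$2
    sed -i "s#10\.244\.0\.0/16#${new_net}#g" "$manifest"
    grep -Eq "\"Network\": *\"${new_net}\"" "$manifest"
}
```

For example, `set_flannel_network kube-flannel.yml 10.1.0.0/16` should leave the manifest in the same state as the sed above.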

Deploy flannel

kubectl apply -f kube-flannel.yml

Set up the API proxy

kubectl proxy --port=8080 --accept-hosts='.*' --address='0.0.0.0'
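kubectl proxy forwards requests to the API server with the credentials from the admin kubeconfig and performs no authentication of its own, and with `--address='0.0.0.0' --accept-hosts='.*'` it accepts requests from any host, so this setup suits a lab only. The Kuryr components later use this address as `api_root`. A readiness check as a sketch, assuming `curl` is installed; `wait_for_api` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Poll <url>/version until the proxy answers or the attempts run out.
# Arguments: base URL, number of one-second attempts (default 30).
wait_for_api() {
    local url=$1 tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        # /version is served by the API server and relayed by kubectl proxy
        if curl -fsS "$url/version" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

For example, `wait_for_api http://192.168.1.11:8080 && echo ready` before starting kuryr-k8s-controller.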

Worker node

Pull the images

cat <
#!/bin/bash
kube_version=:v1.12.2
coredns_version=1.2.2
pause_version=3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64\$kube_version
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64\$kube_version k8s.gcr.io/kube-proxy\$kube_version
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy-amd64\$kube_version

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:\$pause_version
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:\$pause_version k8s.gcr.io/pause:\$pause_version
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:\$pause_version

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:\$coredns_version
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:\$coredns_version k8s.gcr.io/coredns:\$coredns_version
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:\$coredns_version
EOF

chmod u+x node01.sh
./node01.sh
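Before running kubeadm join, one can confirm that the three retagged images are present on the node. A sketch that reads `docker image ls --format '{{.Repository}}:{{.Tag}}'` output on stdin and prints whatever is missing; the function name is hypothetical and the image list is taken from node01.sh:

```shell
#!/usr/bin/env bash
# Read "repository:tag" lines on stdin; print each required node image
# that is absent. Returns 0 only when all of them are present.
missing_node_images() {
    local have req rc=0
    have=$(cat)
    for req in k8s.gcr.io/kube-proxy:v1.12.2 k8s.gcr.io/pause:3.1 k8s.gcr.io/coredns:1.2.2; do
        # -x: whole-line match, -F: treat the name as a fixed string
        if ! grep -qxF "$req" <<< "$have"; then
            echo "$req"
            rc=1
        fi
    done
    return $rc
}
```

Usage: `docker image ls --format '{{.Repository}}:{{.Tag}}' | missing_node_images`.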

Join the worker node

Use the kubeadm join command saved after the master node was installed to add the worker node.

kubeadm join 192.168.1.12:6443 --token lgbnlx.ehciqy1p1rpu6g6g --discovery-token-ca-cert-hash sha256:0acd9f6c7afcab4f4a0ebc1a1dd064f32ef09113829a30ce51dea9822d2f4afd
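Bootstrap tokens created by kubeadm init expire after 24 hours by default; if the saved command is rejected, `kubeadm token create --print-join-command` on the master prints a fresh one. To sanity-check a saved command before using it, here is a sketch that extracts the token and CA hash and validates their format (a token is 6 and 16 lowercase alphanumerics joined by a dot; the hash is `sha256:` followed by 64 hex digits); `parse_join_cmd` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Print the token and CA cert hash (one per line) from a kubeadm join
# command string; fail if either is missing or malformed.
parse_join_cmd() {
    local -a words
    local i token="" hash=""
    read -ra words <<< "$1"
    for (( i = 0; i < ${#words[@]} - 1; i++ )); do
        case ${words[i]} in
            --token) token=${words[i+1]} ;;
            --discovery-token-ca-cert-hash) hash=${words[i+1]} ;;
        esac
    done
    [[ $token =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]] || return 1
    [[ $hash =~ ^sha256:[0-9a-f]{64}$ ]] || return 1
    printf '%s\n%s\n' "$token" "$hash"
}
```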

Verification

Run the following command on the master node; the worker node should be listed.

[root@kuryra-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
kuryra-1 Ready master 21h v1.12.2
kuryra-2 Ready
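On a larger cluster, eyeballing the STATUS column gets tedious. A sketch that reads `kubectl get nodes --no-headers` output on stdin and prints every node whose status is not exactly `Ready` (so `Ready,SchedulingDisabled` is reported too); the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Print the NAME of every node whose STATUS (2nd column) is not "Ready";
# exit status is 1 if any such node exists, 0 otherwise.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes && echo all ready`.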

Installing and configuring Kuryr

Install kuryr-k8s-controller

Installation

mkdir kuryr-k8s-controller
cd kuryr-k8s-controller
virtualenv env
git clone https://git.openstack.org/openstack/kuryr-kubernetes -b stable/rocky
. env/bin/activate
pip install -e kuryr-kubernetes

Configuration

cd kuryr-kubernetes
./tools/generate_config_file_samples.sh
mkdir -p /etc/kuryr
cp etc/kuryr.conf.sample /etc/kuryr/kuryr.conf

Edit /etc/kuryr/kuryr.conf as follows, filling in the values from the K8S resources created in OpenStack earlier.

[DEFAULT]
use_stderr = true
bindir = /usr/local/libexec/kuryr

[kubernetes]
api_root = http://192.168.1.11:8080

[neutron]
# Fill in the project and user created in the earlier section
auth_url = http://192.168.1.11:5000/v3
username = k8s-user
user_domain_name = Default
password = 99cloud
project_name = k8s
project_domain_name = Default
auth_type = password

[neutron_defaults]
ovs_bridge = br-int
# Fill in the IDs of the network resources created in the earlier section
pod_security_groups = a19813e3-f5bc-41d9-9a1f-54133facb6da
pod_subnet = 53f5b742-482b-40b6-b5d0-bf041e98270c
project = aced9738cfd44562a22235b1cb6f7993
service_subnet = d69dae26-d750-42f0-b844-5eb78a6bb873
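The configuration can also be generated from shell variables rather than edited by hand, which keeps the recorded IDs in one place. A sketch with hypothetical variable and function names; the `OS_*` names match what an OpenStack openrc file exports, so sourcing the k8s-user's openrc would provide them, and the output replaces the whole sample file, keeping only the options listed above:

```shell
#!/usr/bin/env bash
# Render the kuryr-k8s-controller kuryr.conf on stdout from variables.
# Refuses to render (returns 1) if any required variable is empty.
render_kuryr_controller_conf() {
    local v
    for v in K8S_API_ROOT OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
             POD_SG_ID POD_SUBNET_ID PROJECT_ID SERVICE_SUBNET_ID; do
        # ${!v} is bash indirect expansion: the value of the variable named $v
        [ -n "${!v}" ] || { echo "missing $v" >&2; return 1; }
    done
    cat <<EOF
[DEFAULT]
use_stderr = true
bindir = /usr/local/libexec/kuryr

[kubernetes]
api_root = ${K8S_API_ROOT}

[neutron]
auth_url = ${OS_AUTH_URL}
username = ${OS_USERNAME}
user_domain_name = Default
password = ${OS_PASSWORD}
project_name = ${OS_PROJECT_NAME}
project_domain_name = Default
auth_type = password

[neutron_defaults]
ovs_bridge = br-int
pod_security_groups = ${POD_SG_ID}
pod_subnet = ${POD_SUBNET_ID}
project = ${PROJECT_ID}
service_subnet = ${SERVICE_SUBNET_ID}
EOF
}
```

Once all variables are set, `render_kuryr_controller_conf > /etc/kuryr/kuryr.conf` writes the file; the function refuses to produce a file with an empty value.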

Run

kuryr-k8s-controller --config-file /etc/kuryr/kuryr.conf -d

Install kuryr-cni

Installation

mkdir kuryr-k8s-cni
cd kuryr-k8s-cni
virtualenv env
. env/bin/activate
git clone https://git.openstack.org/openstack/kuryr-kubernetes -b stable/rocky
pip install -e kuryr-kubernetes

Configuration

cd kuryr-kubernetes
./tools/generate_config_file_samples.sh
mkdir -p /etc/kuryr
cp etc/kuryr.conf.sample /etc/kuryr/kuryr.conf

Edit kuryr.conf as follows.

[DEFAULT]
use_stderr = true
bindir = /usr/local/libexec/kuryr
lock_path=/var/lib/kuryr/tmp

[kubernetes]
# IP of the k8s master (where kubectl proxy listens on port 8080)
api_root = http://192.168.1.11:8080

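Update the CNI configuration

Run the following commands with the virtualenv still activated, so that which kuryr-cni resolves to the installed binary.

mkdir -p /opt/cni/bin
ln -s $(which kuryr-cni) /opt/cni/bin/

Because flannel was installed together with Kubernetes earlier, the /opt/cni/bin directory already exists. Create /etc/cni/net.d/10-kuryr.conf with the content below, and delete flannel's configuration file from the same directory.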

{
    "cniVersion": "0.3.1",
    "name": "kuryr",
    "type": "kuryr-cni",
    "kuryr_conf": "/etc/kuryr/kuryr.conf",
    "debug": true
}
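Removing flannel's file matters because kubelet uses the CNI configuration file that comes first by name in lexicographic order, and a `10-flannel.*` file sorts before `10-kuryr.conf`. A sketch of this step as a function, with the directory as a parameter; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Write 10-kuryr.conf into the CNI config directory and delete any flannel
# config there, so kubelet cannot pick flannel's file first.
install_kuryr_cni_conf() {
    local dir=${1:-/etc/cni/net.d}
    mkdir -p "$dir"
    cat > "$dir/10-kuryr.conf" <<'EOF'
{
    "cniVersion": "0.3.1",
    "name": "kuryr",
    "type": "kuryr-cni",
    "kuryr_conf": "/etc/kuryr/kuryr.conf",
    "debug": true
}
EOF
    # rm -f succeeds even when the glob matches nothing
    rm -f "$dir"/*flannel*
}
```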

Install the required dependencies

sudo pip install 'oslo.privsep>=1.20.0' 'os-vif>=1.5.0'

Run

kuryr-daemon --config-file /etc/kuryr/kuryr.conf -d