Python网络数据采集3:开始采集

Web crawler的本质是一种递归方式。使用Web Crawler的时候,必须非常谨慎地考虑需要消耗多少网络流量,还要尽力思考能不能让采集目标的服务器负载更低一些。

3.1 遍历单个域名

维基百科六度分隔理论:小世界

from urllib.request import urlopen

from bs4 import BeautifulSoup

html = urlopen("http://en.wikipedia.org/wiki/Kevin_Bacon")

bsObj = BeautifulSoup(html)

for link in bsObj.findAll("a"):

if 'href' in link.attrs:

print(link.attrs['href'])每个页面充满了侧边栏、页眉、页脚链接,以及连接到分类页面等,如/wiki/Talk:Kevin_Bacon

有一些我们不需要的链接,而我们需要“ 词条链接”。找出“词条链接”和“其他链接”的差异:

- 它们都在id是bodyContent的div标签里

- URL链接不包含分号

- URL链接都以/wiki/开头

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

html = urlopen("http://en.wikipedia.org/wiki/Kevin_Bacon")

bsObj = BeautifulSoup(html)

for link in bsObj.find("div", {"id":"bodyContent"}).findAll("a", href=re.compile("^(/wiki/)((?!:).)*$")):

if 'href' in link.attrs:

print(link.attrs['href'])from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

import random

import datetime

random.seed(datetime.datetime.now())

def getLinks(articleUrl):

html = urlopen("http://en.wikipedia.org"+articleUrl)

bsObj = BeautifulSoup(html)

return bsObj.find("div", {"id":"bodyContent"}).findAll("a", href=re.compile("^(/wiki/)((?!:).)*$"))

links = getLinks("/wiki/Kevin_Bacon")

while len(links) > 0:

newArticle = links[random.randint(0, len(links)-1)].attrs["href"]

print(newArticle)

links = getLinks(newArticle)3.2 采集整个网站

遍历整个网站的的好处:

- 生成网站地图:

- 收集数据:

为了避免一个页面被采集两次,链接去重是非常重要的。

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

pages = set()

def getLinks(pageUrl):

global pages

html = urlopen("http://en.wikipedia.org" + pageUrl)

bsObj = BeautifulSoup(html)

for link in bsObj.findAll("a", href=re.compile("^(/wiki/)")):

if 'href' in link.attrs:

if link.attrs['href'] not in pages:

newPage = link.attrs['href']

print(newPage)

pages.add(newPage)

getLinks(newPage)

getLinks("")收集整个网站数据:

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

pages = set()

def getLinks(pageUrl):

global pages

html = urlopen("http://en.wikipedia.org" + pageUrl)

bsObj = BeautifulSoup(html)

try:

print(bsObj.h1.get_text())

print(bsObj.find(id="mw-content-text").findAll("p")[0])

print(bsObj.find(id="ca-edit").find("span").find("a").attrs['href'])

except AttributeError:

print("the page lack of some attr!")

for link in bsObj.findAll("a", href=re.compile("^(/wiki/)")):

if 'href' in link.attrs:

if link.attrs['href'] not in pages:

newPage = link.attrs['href']

print(newPage)

pages.add(newPage)

getLinks(newPage)

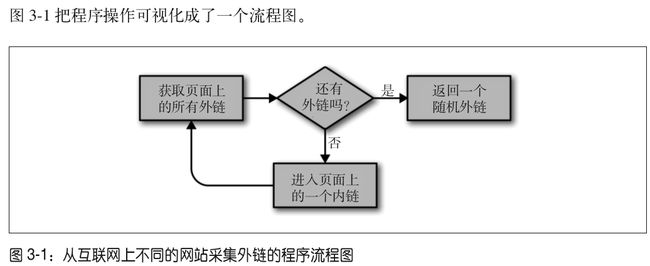

getLinks("")3.3 通过互联网采集

google在1994年成立的时候,就是用一个陈旧的服务器和一个Python网络爬虫

- 在你写爬虫随意跟随外链跳转之前,请问自己几个问题:

- 要收集哪些数据?这些数据可以通过采集几个已经确定的网站完成吗?或者需要发现那些我可能不知道的网站吗?

- 爬虫到某个网站,是立即顺着下一个出站链接跳到一个新网站,还是在网站上待一会儿,深入采集网站的内容?

- 有没有不想采集的一类网站?

- 引起了某个网站网管的怀疑,如何避免法律责任?

# -*- coding: utf-8 -*-

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

import datetime

import random

pages = set()

random.seed(datetime.datetime.now())

# 获取页面所有内链的列表

def getInternalLinks(bsObj, includeUrl):

internalLinks = []

# 找出所有以"/"开头的链接

for link in bsObj.findAll("a", href=re.compile("^(/|.*"+includeUrl+")")):

if link.attrs['href'] is not None:

if link.attrs['href'] not in internalLinks:

internalLinks.append(link.attrs['href'])

return internalLinks

# 获取页面所有外链的列表

def getExternalLinks(bsObj, excludeUrl):

externalLinks = []

# 找出所有以"http"或"www"开头且不包含当前URL的链接

for link in bsObj.findAll("a", href=re.compile("^(http|www)((?!"+excludeUrl+").)*$")):

if link.attrs['href'] is not None:

if link.attrs['href'] not in externalLinks:

externalLinks.append(link.attrs['href'])

return externalLinks

def splitAddress(address):

addressParts = address.replace("http://","").split("/")

return addressParts

def getRandomExternalLink(startingPage):

html = urlopen(startingPage)

bsObj = BeautifulSoup(html)

externalLinks = getExternalLinks(bsObj, splitAddress(startingPage)[0])

if len(externalLinks) == 0:

internalLinks = getInternalLinks(startingPage)

return getNextExternalLink(internalLinks[random.randint(0, len(internalLinks)-1)])

else:

return externalLinks[random.randint(0, len(externalLinks)-1)]

def followExternalOnly(startingSite):

externalLink = getRandomExternalLink("http://oreilly.com")

print("随机外链是:" + externalLink)

followExternalOnly(externalLink)

followExternalOnly("http://oreilly.com")

# -*- coding: utf-8 -*-

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

import datetime

import random

pages = set()

random.seed(datetime.datetime.now())

# 获取页面所有内链的列表

def getInternalLinks(bsObj, includeUrl):

internalLinks = []

print(includeUrl)

# 找出所有以"/"开头的链接

#for link in bsObj.findAll("a", href=re.compile("^(/)(.*"+includeUrl+")")):

for link in bsObj.findAll("a", href=re.compile("^(/)")):

if link.attrs['href'] is not None:

if link.attrs['href'] not in internalLinks:

internalLinks.append(link.attrs['href'])

return internalLinks

# 获取页面所有外链的列表

def getExternalLinks(bsObj, excludeUrl):

externalLinks = []

# 找出所有以"http"或"www"开头且不包含当前URL的链接

for link in bsObj.findAll("a", href=re.compile("^(http|www)((?!"+excludeUrl+").)*$")):

if link.attrs['href'] is not None:

if link.attrs['href'] not in externalLinks:

externalLinks.append(link.attrs['href'])

return externalLinks

def splitAddress(address):

addressParts = address.replace("http://","").split("/")

return addressParts

def printAllLinks(url):

html = urlopen(url)

bsObj = BeautifulSoup(html)

for link in bsObj.findAll("a"):

if 'href' in link.attrs:

print(link.attrs['href'])

#printAllLinks("http://oreilly.com")

# 收集网站上发现的所有外链列表

allExtLinks = set()

allIntLinks = set()

def getAllExternalLinks(siteUrl):

html = urlopen(siteUrl)

bsObj = BeautifulSoup(html)

internalLinks = getInternalLinks(bsObj, splitAddress(siteUrl)[0])

externalLinks = getExternalLinks(bsObj, splitAddress(siteUrl)[0])

for link in externalLinks:

if link not in allExtLinks:

print("即将获取的链接的URL是:" + link)

allExtLinks.add(link)

getAllExternalLinks(link)

for link in internalLinks:

if link not in allIntLinks:

print(link)

allIntLinks.add(link)

#getAllExternalLinks("http://oreilly.com")

getAllExternalLinks("http://www.pku.edu.cn")3.4 用Scrapy采集

重复找出页面上的所有链接,区分内链与外链,跳转到新的页面的简单任务。有几个工具可以帮你自动处理这些细节。

Scrapy runs on Python 2.7 and Python 3.3 or above.

在当前目录下创建新的Scrapy项目: scrapy startproject wikiSpider

创建一个爬虫:

在items.py文件中定义一个Article类,Scrapy的每个Item(条目)对象表示网站上的一个页面。可以根据需要定义不同的条目(如url,content,header image等)

from scrapy import Item, Field

class Article(Item):

title = Field()# -*- coding: utf-8 -*-

from scrapy.selector import Selector

from scrapy import Spider

from wikiSpider.items import Article

class ArticleSpider(Spider):

name = "article"

allowed_domains = ["en.wikipedia.org"]

start_urls = ["http://en.wikipedia.org/wiki/Main_Page",

"http://en.wikipedia.org/wiki/Python_%28programming_language%29"]

def parse(self, response):

item = Article()

title = response.xpath('//h1/text()')[0].extract()

print("Title is: " + title)

item['title'] = title

return item 运行$ scrapy crawl article 命令会用条目名称article来调用爬虫(有name="article"决定的)

日志处理:

在setting.py文件中设置日志显示层级: LOG_LEVEL='ERROR' 有五种层级,CRITICAL, ERROR, WARNING, DEBUG, INFO

scrapy crawl article -s LOG_FILE=wiki.log

scrapy用Item对象决定要从页面中提取哪些信息,支持CSV, JSON或XML文件格式

$ scrapy crawl article -o articles.csv -t csv

$ scrapy crawl article -o articles.json -t json

$ scrapy crawl article -o articles.xml -t xml

在线文档http://doc.scrapy.org/en/latest