TensorBoard Visualization Template Example


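#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Written for TensorFlow 1.x (tf.placeholder, tf.Session, tf.summary.FileWriter);
# the tensorflow.examples.tutorials module is not available in TensorFlow 2.x.
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Load the data
mnist = input_data.read_data_sets('../data/mnist', one_hot=True)

# Size of each batch
batch_size = 100
# Number of batches in one pass over the training set
n_batch = mnist.train.num_examples // batch_size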

# Parameter summaries: attach statistics of a tensor to TensorBoard
def variable_summaries(var, name='summaries'):
    with tf.name_scope(name):
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)  # mean
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        tf.summary.scalar('stddev', stddev)  # standard deviation
        tf.summary.scalar('max', tf.reduce_max(var))  # maximum
        tf.summary.scalar('min', tf.reduce_min(var))  # minimum
        tf.summary.histogram('histogram', var)  # histogram

# Initialize weights
def weight_variable(shape):
    with tf.name_scope('weight'):
        initial = tf.truncated_normal(shape, stddev=0.1)  # sample from a truncated normal distribution
        W = tf.Variable(initial, name='W')
        return W

# Initialize biases
def bias_variable(shape):
    with tf.name_scope('biases'):
        initial = tf.truncated_normal(shape=shape, stddev=0.1)
        b = tf.Variable(initial, name='b')
        return b

# Convolution layer: stride 1 in every dimension, SAME padding keeps the spatial size
def conv2d(x, W):
    with tf.name_scope('conv2d'):
        return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

# 2x2 max pooling with stride 2: halves height and width
def max_pool_2x2(x):
    with tf.name_scope('max_pool_2x2'):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

# Define the placeholders
with tf.name_scope('input'):
    x = tf.placeholder(tf.float32, [None, 784], name='x_input')  # 28*28 pixels
    y = tf.placeholder(tf.float32, [None, 10], name='y_input')
    # Reshape x into a 4-D tensor [batch, in_height, in_width, in_channels]
    x_image = tf.reshape(x, [-1, 28, 28, 1], name='x_image')
    tf.summary.image('input_image', x_image, 12)  # show at most 12 images

with tf.name_scope('conv1'):
    # Weights and biases of the first convolution layer
    W_conv1 = weight_variable([5, 5, 1, 32])  # 5x5 window, 32 kernels extracting features from 1 input channel
    b_conv1 = bias_variable([32])  # one bias per kernel
    variable_summaries(W_conv1, 'W_conv1')
    variable_summaries(b_conv1, 'b_conv1')
    # Convolve x_image with the weights, add the bias, apply ReLU, then max-pool
    h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1, name='relu')
    h_pool1 = max_pool_2x2(h_conv1)

with tf.name_scope('conv2'):
    # Weights and biases of the second convolution layer
    W_conv2 = weight_variable([5, 5, 32, 64])  # 5x5 window, 64 kernels extracting features from 32 channels
    b_conv2 = bias_variable([64])  # one bias per kernel
    variable_summaries(W_conv2, 'W_conv2')
    variable_summaries(b_conv2, 'b_conv2')
    # Convolve h_pool1 with the weights, add the bias, apply ReLU, then max-pool
    h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2, name='relu')
    h_pool2 = max_pool_2x2(h_conv2)

# A 28x28 image stays 28x28 after the first convolution and becomes 14x14 after pooling.
# The second convolution keeps 14x14, and pooling reduces it to 7x7.
# The result is 64 feature maps of size 7x7.

with tf.name_scope('fc1'):
    # Weights and biases of the first fully connected layer
    W_fc1 = weight_variable([7 * 7 * 64, 1024])  # 7*7*64 inputs from the previous layer, 1024 neurons
    b_fc1 = bias_variable([1024])
    # Flatten the output of pooling layer 2 into one dimension
    h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
    # Output of the first fully connected layer
    h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

# Dropout
with tf.name_scope('dropout'):
    keep_pro = tf.placeholder(tf.float32)  # probability of keeping each neuron
    h_fc1_drop = tf.nn.dropout(h_fc1, keep_pro)

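with tf.name_scope('fc2'):
    # Weights and biases of the second fully connected layer
    W_fc2 = weight_variable([1024, 10])
    b_fc2 = bias_variable([10])
    # Raw scores (logits) before softmax
    logits = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
    # Compute the output probabilities
    with tf.name_scope('softmax'):
        prediction = tf.nn.softmax(logits)

with tf.name_scope('cr_ep_loss'):
    # Cross-entropy loss. softmax_cross_entropy_with_logits applies softmax itself,
    # so it must receive the raw logits, not the softmax output `prediction`.
    cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))
    tf.summary.scalar('cr_ep_loss', cross_entropy)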

with tf.name_scope('train'):
    # Optimize with AdamOptimizer
    train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

with tf.name_scope('accuracy'):
    # Boolean tensor: True where the predicted class matches the label
    correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name='accuracy')
    tf.summary.scalar('accuracy', accuracy)

# Merge all summaries into a single op
merged = tf.summary.merge_all()

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    train_writer = tf.summary.FileWriter('logs/train', sess.graph)
    test_writer = tf.summary.FileWriter('logs/test', sess.graph)

    for i in range(1001):
        # Train on one batch with dropout enabled
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys, keep_pro: 0.7})
        # Record training summaries with dropout disabled
        train_summary = sess.run(merged, feed_dict={x: batch_xs, y: batch_ys, keep_pro: 1.0})
        train_writer.add_summary(train_summary, i)
        # Record test summaries on one test batch
        batch_xs, batch_ys = mnist.test.next_batch(batch_size)
        test_summary, acc = sess.run([merged, accuracy], feed_dict={x: batch_xs, y: batch_ys, keep_pro: 1.0})
        test_writer.add_summary(test_summary, i)

        if i % 100 == 0:
            test_acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_pro: 1.0})
            train_acc, loss = sess.run([accuracy, cross_entropy],
                                       feed_dict={x: mnist.train.images[:10000],
                                                  y: mnist.train.labels[:10000],
                                                  keep_pro: 1.0})
            print('Iter: %s; Loss: %s; Train Accuracy: %s; Test Accuracy: %s' % (
                i, loss, train_acc, test_acc))

    # Flush any pending events to disk
    train_writer.close()
    test_writer.close()
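tf.nn.softmax_cross_entropy_with_logits applies softmax internally, so its logits argument should be the raw scores tf.matmul(h_fc1_drop, W_fc2) + b_fc2 rather than the already-softmaxed prediction; feeding it prediction applies softmax twice. The sketch below is plain Python (no TensorFlow) built on hypothetical helpers softmax, softmax_cross_entropy and accuracy that reproduce the per-example math of the loss and of the accuracy op, assuming one-hot labels. All numbers are made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over one row of raw scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(labels, logits):
    """-sum(labels * log(softmax(logits))) for one example; the TF op computes
    this per row, and tf.reduce_mean then averages over the batch."""
    probs = softmax(logits)
    return -sum(l * math.log(p) for l, p in zip(labels, probs))


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def accuracy(batch_probs, batch_labels):
    """Mean of (argmax(prediction) == argmax(label)) over the batch."""
    hits = [argmax(p) == argmax(t) for p, t in zip(batch_probs, batch_labels)]
    return sum(hits) / len(hits)


label = [1.0, 0.0, 0.0]   # hypothetical one-hot label: class 0
logits = [4.0, 1.0, 0.5]  # hypothetical raw scores from the last layer
prediction = softmax(logits)

print("loss on logits:        ", softmax_cross_entropy(label, logits))
print("loss on softmax output:", softmax_cross_entropy(label, prediction))

# With double softmax, even a perfect 10-class prediction cannot push the
# loss below log(e + 9) - 1 (about 1.46).
perfect = [1.0] + [0.0] * 9
print("floor with double softmax:", softmax_cross_entropy(perfect, perfect))
print("accuracy:", accuracy([prediction, [0.2, 0.7, 0.1]], [label, label]))
```

Because the probabilities passed in as "logits" all lie in [0, 1], the second softmax is nearly uniform, so the reported loss stays high even when the predicted class is already correct.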

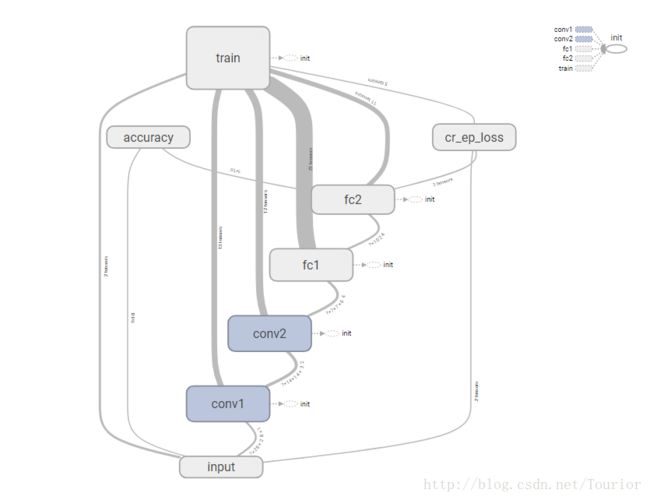

The Graphs tab in TensorBoard

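Each with tf.name_scope(...) block defines a namespace, which TensorBoard draws as a single collapsible node in the graph.

The statement train_writer = tf.summary.FileWriter('logs/train', sess.graph) writes the graph to the log files in logs/train. To view it, run tensorboard --logdir=logs and open the address it prints.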
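Name scopes nest: inside with tf.name_scope('conv1'), the variable that weight_variable creates under its own 'weight' scope is named conv1/weight/W, and the Graphs tab groups nodes by these slash-separated prefixes. The toy class below is a hypothetical, pure-Python imitation of just that naming rule, not TensorFlow's implementation. Real TensorFlow also makes a repeated scope name unique within the same parent (for example weight_1), which the toy skips.

```python
from contextlib import contextmanager


class ToyGraph:
    """Hypothetical stand-in that only records how nested name scopes
    prefix node names; it is not TensorFlow's implementation."""

    def __init__(self):
        self._scopes = []  # stack of currently open scope names
        self.names = []    # full names of the nodes added so far

    @contextmanager
    def name_scope(self, name):
        self._scopes.append(name)
        try:
            yield "/".join(self._scopes)
        finally:
            self._scopes.pop()

    def add_node(self, name):
        full_name = "/".join(self._scopes + [name])
        self.names.append(full_name)
        return full_name


g = ToyGraph()
with g.name_scope("conv1"):
    with g.name_scope("weight"):  # as in weight_variable
        g.add_node("W")
    with g.name_scope("biases"):  # as in bias_variable
        g.add_node("b")
    g.add_node("relu")
with g.name_scope("input"):
    g.add_node("x_input")

for name in g.names:
    print(name)
```

This prints conv1/weight/W, conv1/biases/b, conv1/relu and input/x_input; in the Graphs tab, everything sharing the conv1/ prefix collapses into one conv1 node.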

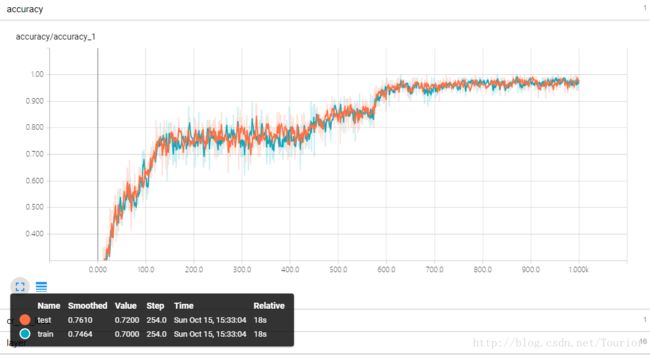

Displaying loss and accuracy

Recording the loss:

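logits = tf.matmul(h_fc1_drop, W_fc2) + b_fc2  # raw scores before softmax
cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))
tf.summary.scalar('cr_ep_loss', cross_entropy)

Recording accuracy: at every step the loop writes the merged summaries (loss, accuracy and the rest) for one training batch and one test batch: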

batch_xs, batch_ys = mnist.train.next_batch(batch_size)
sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys, keep_pro: 0.7})
train_summary = sess.run(merged, feed_dict={x: batch_xs, y: batch_ys, keep_pro: 1.0})
train_writer.add_summary(train_summary, i)
batch_xs, batch_ys = mnist.test.next_batch(batch_size)
test_summary, acc = sess.run([merged, accuracy], feed_dict={x: batch_xs, y: batch_ys, keep_pro: 1.0})
test_writer.add_summary(test_summary, i)

Displaying statistics of the weights and biases

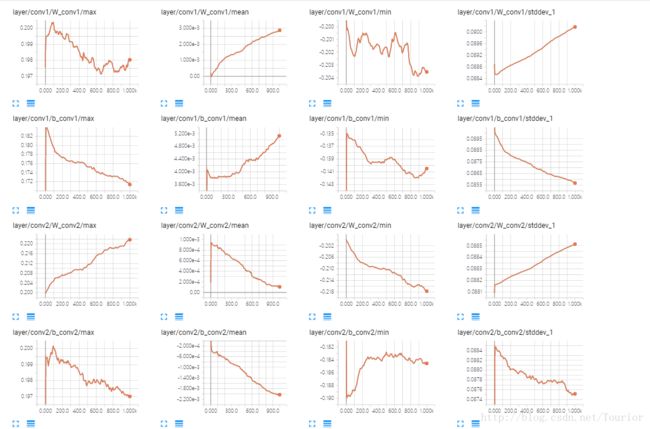
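The scalar panels in this view come from the four tf.summary.scalar calls in variable_summaries, one set per variable. The sketch below uses a hypothetical pure-Python helper, summarize (not a TensorFlow function), to compute the same four scalars for a flat list of made-up weight values; the histogram summary is not reproduced. It also shows that the script's formula sqrt(mean((var - mean)^2)) is the population standard deviation, dividing by N rather than N - 1.

```python
import math
import statistics


def summarize(values):
    """Return the four scalars variable_summaries records, for a flat list."""
    mean = sum(values) / len(values)
    # Same formula as the script: sqrt(mean((var - mean)^2)).
    # Dividing by N makes this the population standard deviation.
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return {"mean": mean, "stddev": stddev, "max": max(values), "min": min(values)}


# Hypothetical weight values, e.g. a few entries of a flattened W_conv1.
weights = [0.12, -0.05, 0.03, -0.18, 0.09, 0.01]
stats = summarize(weights)
print(stats)
# The population formula agrees with statistics.pstdev, not statistics.stdev.
print(math.isclose(stats["stddev"], statistics.pstdev(weights)))
```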

Defining the statistics function:

def variable_summaries(var, name='summaries'):
    with tf.name_scope(name):
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)  # mean
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        tf.summary.scalar('stddev', stddev)  # standard deviation
        tf.summary.scalar('max', tf.reduce_max(var))  # maximum
        tf.summary.scalar('min', tf.reduce_min(var))  # minimum
        tf.summary.histogram('histogram', var)  # histogram

Usage:

Pass in the variable to summarize and a name for its namespace:

W_conv1 = weight_variable([5, 5, 1, 32])  # 5x5 window, 32 kernels extracting features from 1 input channel
b_conv1 = bias_variable([32])  # one bias per kernel
variable_summaries(W_conv1, 'W_conv1')
variable_summaries(b_conv1, 'b_conv1')

Displaying input images

Recording the images to display:

x_image = tf.reshape(x, [-1, 28, 28, 1], name='x_image')
tf.summary.image('input_image', x_image, 12)  # max_outputs=12: show at most 12 images in TensorBoard
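The reshape to [-1, 28, 28, 1] is also where the shape bookkeeping of the network starts: SAME-padded stride-1 convolutions keep the spatial size, and each 2x2 stride-2 max pool halves it, rounding up (SAME padding gives ceil(size / stride)). The pure-Python sketch below, with hypothetical names trace_shapes and LAYERS, reproduces the 28x28 to 14x14 to 7x7 progression from the script's comments, confirms the 7*7*64 input size of W_fc1, and counts the trainable parameters of the weight and bias shapes the script declares.

```python
import math


def same_output_size(size, stride):
    """Output length along one axis for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_shapes(height=28, width=28):
    """Follow one 28x28x1 image through the two conv + 2x2 max-pool stages."""
    shapes = [("input", height, width, 1)]
    for name, channels in (("conv1", 32), ("conv2", 64)):
        # 5x5 convolution, stride 1, SAME padding: spatial size unchanged.
        height, width = same_output_size(height, 1), same_output_size(width, 1)
        shapes.append((name, height, width, channels))
        # 2x2 max pool, stride 2, SAME padding: spatial size halved (rounded up).
        height, width = same_output_size(height, 2), same_output_size(width, 2)
        shapes.append((name + "_pool", height, width, channels))
    flat_size = height * width * shapes[-1][3]
    return shapes, flat_size


# Weight and bias shapes as declared in the script.
LAYERS = {
    "conv1": ([5, 5, 1, 32], [32]),
    "conv2": ([5, 5, 32, 64], [64]),
    "fc1": ([7 * 7 * 64, 1024], [1024]),
    "fc2": ([1024, 10], [10]),
}

shapes, flat_size = trace_shapes()
for shape in shapes:
    print(shape)
print("flattened size:", flat_size)  # -> 3136, i.e. 7*7*64

total = sum(math.prod(w) + math.prod(b) for w, b in LAYERS.values())
print("trainable parameters:", total)  # -> 3274634
```

Almost all of those parameters sit in W_fc1 (3136 x 1024), which is why its histogram in TensorBoard summarizes far more values than the convolution kernels do.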