Flink in Practice: Writing Data to ES (Elasticsearch)

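Introduction

Flink ships with an Elasticsearch connector for writing data to ES. Its main class is ElasticsearchSink; to use it, you implement the connector's abstract method (ElasticsearchSinkFunction.process in the examples here) with your own logic.

Versions:

Elasticsearch 6.3.2, Flink 1.6.1

Required dependency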

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-elasticsearch6_2.11</artifactId>

<version>1.6.1</version>

</dependency>

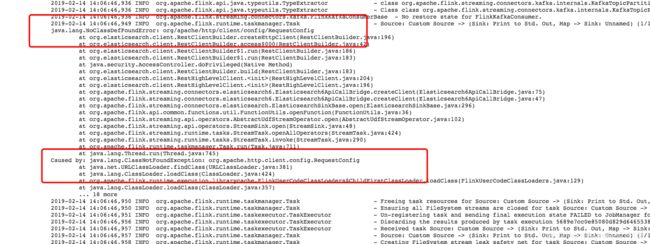

Note: if the error shown in the screenshot occurs, you also need to add the following dependency:

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.7</version>

</dependency>

Elasticsearch Sink

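ElasticsearchSink communicates with the Elasticsearch cluster through a TransportClient (before 6.x) or a RestHighLevelClient (6.x and later).

For Elasticsearch versions that still use the now-deprecated TransportClient (that is, 5.x and earlier), the ElasticsearchSink is configured with a Map of Strings. This configuration map is forwarded directly to the internally created TransportClient. The configuration keys are described in the Elasticsearch documentation. The cluster.name parameter is especially important: it must match the name of your cluster.

For Elasticsearch 6.x and later, the sink uses a RestHighLevelClient internally for cluster communication. By default, the connector uses the REST client's default configuration. To customize the REST client, provide a RestClientFactory implementation when setting up the ElasticsearchSink.Builder that builds the sink.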

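Also note

The example in this post only demonstrates a single index request per incoming element. In general, an ElasticsearchSinkFunction can issue multiple requests of different types (for example DeleteRequest, UpdateRequest, and so on).

Internally, each parallel instance of the Flink Elasticsearch Sink uses a BulkProcessor to send action requests to the cluster. Elements are buffered before being sent to the cluster in bulk. The BulkProcessor executes bulk requests one at a time, so there are never two concurrent flushes of the buffered actions in progress.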
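The buffering behaviour described above can be pictured with a small, self-contained sketch. This is not the real BulkProcessor, only a toy model under simple assumptions: the class `ToyBulkBuffer` and its methods are made up for illustration, and only a count-based threshold is modeled (size-based and time-based flushing are left out). Flushes run synchronously inside `add`, so two flushes can never overlap.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of count-based bulk buffering. Hypothetical; not the real BulkProcessor. */
public class ToyBulkBuffer {
    private final int maxActions;
    private final List<String> buffer = new ArrayList<>();
    private final List<List<String>> flushedBatches = new ArrayList<>();

    public ToyBulkBuffer(int maxActions) {
        if (maxActions < 1) {
            throw new IllegalArgumentException("maxActions must be >= 1");
        }
        this.maxActions = maxActions;
    }

    /** Buffers one action and flushes once the threshold is reached. */
    public void add(String action) {
        buffer.add(action);
        if (buffer.size() >= maxActions) {
            flush();
        }
    }

    /** "Sends" the buffered actions as one batch; here the batch is only recorded. */
    public void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        flushedBatches.add(new ArrayList<>(buffer));
        buffer.clear();
    }

    public int pending() {
        return buffer.size();
    }

    public List<List<String>> flushedBatches() {
        return flushedBatches;
    }

    public static void main(String[] args) {
        ToyBulkBuffer b = new ToyBulkBuffer(2);
        for (String a : new String[] {"a1", "a2", "a3", "a4", "a5"}) {
            b.add(a);
        }
        System.out.println(b.flushedBatches().size() + " batches sent, " + b.pending() + " pending");
    }
}
```

With a threshold of 1, every element would be sent as its own batch, which is convenient for testing but gives up the benefit of bulk requests.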

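Configuration parameters

How the internal BulkProcessor flushes buffered action requests can be configured further by setting the following values in the provided Map (the 6.x example in this post sets them through setters on ElasticsearchSink.Builder instead):

- bulk.flush.max.actions: the maximum number of actions to buffer before flushing.

- bulk.flush.max.size.mb: the maximum size of buffered data before flushing, in megabytes.

- bulk.flush.interval.ms: the interval at which to flush regardless of the number or size of buffered actions.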
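As a rough sketch of the Map-based configuration used by the pre-6.x connectors, the keys above are plain string entries. The class name `BulkFlushConfig`, the cluster name, and the values below are placeholders chosen for illustration, not recommended settings.

```java
import java.util.HashMap;
import java.util.Map;

/** Builds a String-to-String configuration map; all values are placeholders. */
public class BulkFlushConfig {

    public static Map<String, String> build() {
        Map<String, String> config = new HashMap<>();
        // Must match the name of the target cluster (placeholder value).
        config.put("cluster.name", "my-cluster-name");
        // Flush after this many buffered actions.
        config.put("bulk.flush.max.actions", "1000");
        // Flush once the buffered data reaches this size, in megabytes.
        config.put("bulk.flush.max.size.mb", "5");
        // Flush at this interval regardless of the other two limits, in milliseconds.
        config.put("bulk.flush.interval.ms", "5000");
        return config;
    }

    public static void main(String[] args) {
        build().forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```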

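For versions 2.x and later, you can also configure how temporary request errors are retried:

- bulk.flush.backoff.enable: whether to retry a flush with a backoff delay if one or more of its actions failed because of a temporary EsRejectedExecutionException.

- bulk.flush.backoff.type: the type of backoff delay, either CONSTANT or EXPONENTIAL.

- bulk.flush.backoff.delay: the amount of backoff delay. For constant backoff, this is simply the delay between retries. For exponential backoff, this is the initial base delay.

- bulk.flush.backoff.retries: the number of backoff retries to attempt.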
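To make the difference between the two backoff types concrete, here is a minimal sketch that lists the delays before each retry. The class `BackoffSketch` is hypothetical, and the doubling factor used for EXPONENTIAL is an assumption for illustration only; the schedule Elasticsearch actually applies may grow differently.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative retry-delay schedules; hypothetical, not the Elasticsearch implementation. */
public class BackoffSketch {

    public enum Type { CONSTANT, EXPONENTIAL }

    /** Returns the delay (in ms) applied before each of the given number of retries. */
    public static List<Long> delays(Type type, long delayMs, int retries) {
        List<Long> result = new ArrayList<>();
        long current = delayMs;
        for (int i = 0; i < retries; i++) {
            result.add(current);
            if (type == Type.EXPONENTIAL) {
                current *= 2; // assumption: the delay doubles on every retry
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(delays(Type.CONSTANT, 100, 3));    // [100, 100, 100]
        System.out.println(delays(Type.EXPONENTIAL, 100, 3)); // [100, 200, 400]
    }
}
```

In both cases the retries setting bounds the length of the list: once the retries are used up, the failure is no longer retried.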

Example code: inserting data into ES

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.RuntimeContext;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple5;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.elasticsearch.ElasticsearchSinkFunction;

import org.apache.flink.streaming.connectors.elasticsearch.RequestIndexer;

import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010;

import org.apache.http.HttpHost;

import org.elasticsearch.action.index.IndexRequest;

import org.elasticsearch.client.Requests;

import java.util.*;

public class Data2Es {

public static void main(String[] args) throws Exception {

String kafkaBrokers = null;

String zkBrokers = null;

String topic = null;

String groupId = null;

if (args.length == 4) {

kafkaBrokers = args[0];

zkBrokers = args[1];

topic = args[2];

groupId = args[3];

} else {

System.err.println("Usage: Data2Es <kafkaBrokers> <zkBrokers> <topic> <groupId>");

System.exit(1);

}

System.out.println("===============> Flink job started ==============>");

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the Kafka connection properties

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", kafkaBrokers);

properties.setProperty("zookeeper.connect", zkBrokers);

properties.setProperty("group.id", groupId);

// Set the time characteristic

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// Set the checkpoint interval (milliseconds)

env.enableCheckpointing(5000);

// Create the Kafka consumer to read data from Kafka

FlinkKafkaConsumer010<String> kafkaConsumer010 = new FlinkKafkaConsumer010<>(topic, new SimpleStringSchema(), properties);

kafkaConsumer010.setStartFromEarliest();

DataStreamSource<String> kafkaData = env.addSource(kafkaConsumer010);

kafkaData.print();

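// Parse the Kafka stream into a stream of fixed-format records

DataStream<Tuple5<Long, Long, Long, String, Long>> userData = kafkaData.map(new MapFunction<String, Tuple5<Long, Long, Long, String, Long>>() {

@Override

public Tuple5<Long, Long, Long, String, Long> map(String s) throws Exception {

Tuple5<Long, Long, Long, String, Long> userInfo = null;

String[] split = s.split(",");

if(split.length!=5){

// Malformed record: print it. Note that null is then returned, and the sink below dereferences element.f0, so such records should be filtered out in a real job

System.out.println(s);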

}else {

long userID = Long.parseLong(split[0]);

long itemId = Long.parseLong(split[1]);

long categoryId = Long.parseLong(split[2]);

String behavior = split[3];

long timestamp = Long.parseLong(split[4]);

userInfo = new Tuple5<>(userID, itemId, categoryId, behavior, timestamp);

}

return userInfo;

}

});

List<HttpHost> httpHosts = new ArrayList<>();

httpHosts.add(new HttpHost("ip", 9200, "http"));

ElasticsearchSink.Builder<Tuple5<Long, Long, Long, String, Long>> esSinkBuilder = new ElasticsearchSink.Builder<>(

httpHosts,

new ElasticsearchSinkFunction<Tuple5<Long, Long, Long, String, Long>>() {

public IndexRequest createIndexRequest(Tuple5<Long, Long, Long, String, Long> element) {

Map<String, String> json = new HashMap<>();

json.put("userid", element.f0.toString());

json.put("itemid", element.f1.toString());

json.put("categoryid", element.f2.toString());

json.put("behavior", element.f3);

json.put("timestamp", element.f4.toString());

return Requests.indexRequest()

.index("flink")

.type("user")

.source(json);

}

@Override

public void process(Tuple5<Long, Long, Long, String, Long> element, RuntimeContext ctx, RequestIndexer indexer) {

indexer.add(createIndexRequest(element));

}

}

);

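/* The flush parameters must be set */

// Maximum number of actions to buffer before flushing (1 flushes after every element)

esSinkBuilder.setBulkFlushMaxActions(1);

// Maximum size of buffered data before flushing, in MB

esSinkBuilder.setBulkFlushMaxSizeMb(500);

// Interval at which to flush regardless of the number or size of buffered actions, in milliseconds

esSinkBuilder.setBulkFlushInterval(5000);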

userData.addSink(esSinkBuilder.build());

env.execute("data2es");

}

}

Example code: updating and inserting data in ES

Note: an id must be set when performing an update.

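// Assumption: tuple2DataStream is a DataStream<Tuple2<Boolean, Row>> (for example a retract stream); the original type parameters are not shown

SingleOutputStreamOperator<Row> outputStream = tuple2DataStream.map(new MapFunction<Tuple2<Boolean, Row>, Row>() {

@Override

public Row map(Tuple2<Boolean, Row> value) throws Exception {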

Row row1 = value.f1;

return row1;

}

});

List<HttpHost> httpHosts = new ArrayList<>();

httpHosts.add(new HttpHost("ip1", 9200, "http"));

httpHosts.add(new HttpHost("ip2", 9200, "http"));

httpHosts.add(new HttpHost("ip3", 9200, "http"));

// use an ElasticsearchSink.Builder to create an ElasticsearchSink

ElasticsearchSink.Builder<Row> esSinkBuilder = new ElasticsearchSink.Builder<>(

httpHosts,

new ElasticsearchSinkFunction<Row>() {

public IndexRequest createIndexRequest(Row element) {

Map map = SqlParse.sqlParse("select count(distinct userId) as uv ,behavior from userTable group by behavior", element);

return Requests.indexRequest()

.index("test_index2")

.type("test_type2")

.source(map);

}

public UpdateRequest updateIndexRequest(Row element) throws IOException {

UpdateRequest updateRequest=new UpdateRequest();

// Set the index and type; an id is required in order to update

Map map = SqlParse.sqlParse("select count(distinct userId) as uv ,behavior from userTable group by behavior", element);

updateRequest

.index("test_index2")

.type("test_type2")

// The id must be set

.id(map.get("behavior").toString())

.doc(map)

.upsert(createIndexRequest(element));

return updateRequest;

}

@Override

public void process(Row element, RuntimeContext ctx, RequestIndexer indexer) {

try {

indexer.add(updateIndexRequest(element));

} catch (IOException e) {

e.printStackTrace();

}

}

}

);
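The combination of doc and upsert used above can be illustrated with an in-memory sketch. It only models the semantics (merge into the existing document if the id is already present, otherwise store the upsert document) and shows why an id is required. The class `UpsertSketch` and its HashMap "index" are hypothetical stand-ins, not Elasticsearch code.

```java
import java.util.HashMap;
import java.util.Map;

/** In-memory illustration of update-with-upsert semantics; hypothetical, not Elasticsearch. */
public class UpsertSketch {
    // Stand-in for an index: document id -> document fields.
    private final Map<String, Map<String, Object>> index = new HashMap<>();

    /** Merges doc into the existing document, or stores upsertDoc if the id is new. */
    public void upsert(String id, Map<String, Object> doc, Map<String, Object> upsertDoc) {
        if (id == null) {
            throw new IllegalArgumentException("an update needs a document id");
        }
        Map<String, Object> existing = index.get(id);
        if (existing == null) {
            index.put(id, new HashMap<>(upsertDoc)); // first time: insert
        } else {
            existing.putAll(doc); // afterwards: partial update
        }
    }

    public Map<String, Object> get(String id) {
        return index.get(id);
    }

    public static void main(String[] args) {
        UpsertSketch es = new UpsertSketch();

        Map<String, Object> first = new HashMap<>();
        first.put("behavior", "pv");
        first.put("uv", 1L);
        es.upsert("pv", first, first); // id not present yet, so the document is inserted

        Map<String, Object> second = new HashMap<>();
        second.put("uv", 5L);
        es.upsert("pv", second, second); // id present, so only "uv" is overwritten

        System.out.println(es.get("pv"));
    }
}
```

Using the group-by key (behavior in the example above) as the id means each group keeps exactly one document whose count is overwritten as new results arrive.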
