Spark学习(肆)- 从Hive平滑过渡到Spark SQL

文章目录

- SQLContext的使用

- HiveContext的使用

- SparkSession的使用

- spark-shell&spark-sql的使用

- spark-shell

- spark-sql

- thriftserver&beeline的使用

- jdbc方式编程访问

SQLContext的使用

Spark1.x中Spark SQL的入口点: SQLContext

val sc: SparkContext // An existing SparkContext.

val sqlContext = new org.apache.spark.sql.SQLContext(sc)

// this is used to implicitly convert an RDD to a DataFrame.

import sqlContext.implicits._

建立一个scala maven项目

SQLContext测试:

添加相关pom依赖

<properties>

<scala.version>2.11.8scala.version>

<spark.version>2.1.0spark.version>

properties>

<dependencies>

<dependency>

<groupId>org.scala-langgroupId>

<artifactId>scala-libraryartifactId>

<version>${scala.version}version>

dependency>

<dependency>

<groupId>org.apache.sparkgroupId>

<artifactId>spark-sql_2.11artifactId>

<version>${spark.version}version>

dependency>

dependencies>

测试数据:

{“name”: “zhangsan”, “age”:30}

{"name ": “Michael”}

{“name” : “Andy”, “age”:30}

{“name” : “Justin”, “age” :19}

package com.imooc.spark

import org.apache.spark.SparkContext

import org.apache.spark.sql.SQLContext

import org.apache.spark.SparkConf

/**

* SQLContext的使用:

* 注意:IDEA是在本地,而测试数据是在服务器上 ,能不能在本地进行开发测试的?

*

*/

object SQLContextApp {

def main(args: Array[String]) {

val path = args(0)

//1)创建相应的Context

val sparkConf = new SparkConf()

//在测试集群或者生产集群中,AppName和Master我们是通过脚本进行指定

//sparkConf.setAppName("SQLContextApp").setMaster("local[2]")

val sc = new SparkContext(sparkConf)

val sqlContext = new SQLContext(sc)

//2)相关的处理: json

val people = sqlContext.read.format("json").load(path)

people.printSchema()

people.show()

//3)关闭资源

sc.stop()

}

}

| age | name |

|---|---|

| 30 | zhangsan |

| null | Michaeti |

| 30 | Andy |

| 19 | Justin |

集群测试:

1)编译:mvn clean package -DskipTests

2)上传到集群

提交Spark Application到环境中运行

spark-submit

–name SQLContextApp

–class com.kun.sparksql.SQLContextApp

–master local[2]

/home/hadoop/lib/sql-1.0.jar

/home/hadoop/app/spark-2.1.0-bin-2.6.0-cdh5.7.0/examples/src/main/resources/people.json

结果同上本地测试结果

HiveContext的使用

Spark1.x中Spark SQL的入口点: HiveContext

要使用HiveContext,只需要有一个hive-site.xml

将Hive的配置文件hive-site.xml拷贝到${spark_home}/conf目录下

val sc: SparkContext // An existing SparkContext.

val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)

添加maven依赖

<dependency>

<groupId>org.apache.sparkgroupId>

<artifactId>spark-hive_2.11artifactId>

<version>${spark.version}version>

dependency>

测试:

package com.kun.sparksql

import org.apache.spark.sql.hive.HiveContext

import org.apache.spark.{SparkConf, SparkContext}

/**

* HiveContext的使用 (展示hive里emp表的内容)

* 使用时需要通过--jars 把mysql的驱动传递到classpath

*/

object HiveContextApp {

def main(args: Array[String]) {

//1)创建相应的Context

val sparkConf = new SparkConf()

//在测试或者生产中,AppName和Master我们是通过脚本进行指定

//sparkConf.setAppName("HiveContextApp").setMaster("local[2]")

val sc = new SparkContext(sparkConf)

val hiveContext = new HiveContext(sc)

//2)相关的处理:

hiveContext.table("emp").show

//3)关闭资源

sc.stop()

}

}

本地测试和集群测试步骤同SQLContext的使用雷同

提交Spark Application到环境中运行

spark-submit

–name HiveContextApp

–class com.kun.sparksql.HiveContextApp

–master local[2]

/home/hadoop/lib/sql-1.0.jar

–jar /mysql驱动包路径

SparkSession的使用

Spark2.x中Spark SQL的入口点: SparkSession

import org.apache.spark.sql.SparkSession

val spark = SparkSession

.builder()

.appName("Spark SQL basic example")

.config("spark.some.config.option", "some-value")

.getOrCreate()

// For implicit conversions like converting RDDs to DataFrames

import spark.implicits._

测试:

package com.kun.sparksql

import org.apache.spark.sql.SparkSession

/**

* SparkSession的使用

*/

object SparkSessionApp {

def main(args: Array[String]) {

val spark = SparkSession.builder().appName("SparkSessionApp")

.master("local[2]").getOrCreate()

val people = spark.read.json("file:///Users/rocky/data/people.json")

people.show()

spark.stop()

}

}

spark-shell&spark-sql的使用

spark-shell

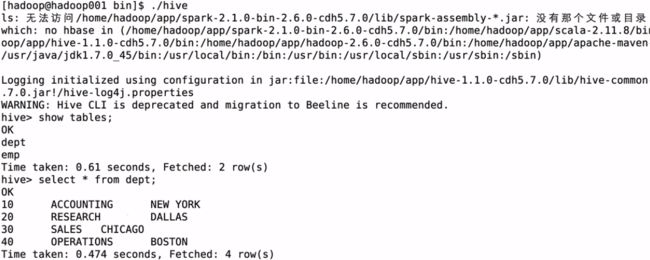

进入hive里:

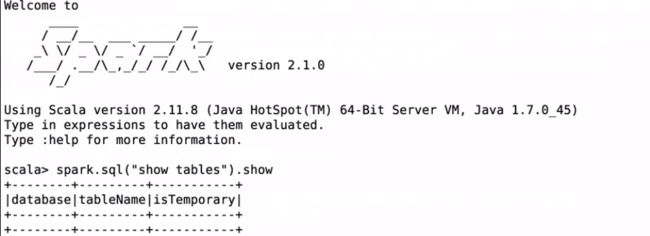

使用 spark-shell --master local[2] 命令来启动spark-shell

没有配置hive-site.xml前spark里是空表

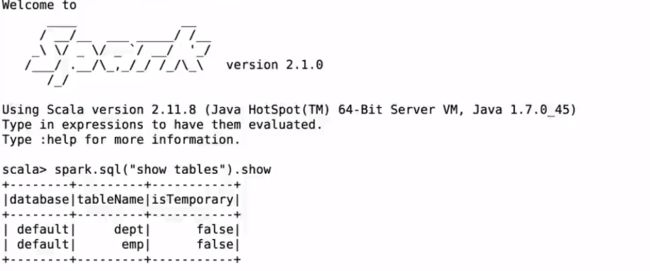

将Hive的配置文件hive-site.xml拷贝到${spark_home}/conf目录下;重新启动spark-shell,启动时需要通过–jars 把mysql的驱动传递到classpath

查看hive里的表:

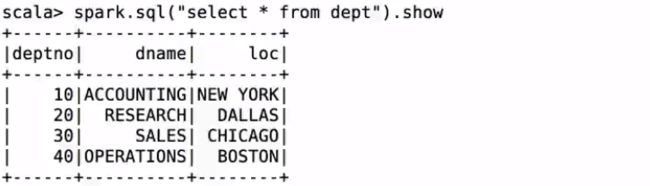

访问dept表:

使用相同的查询语句;hive会进入mr作业;spark会直接出数据

![]()

spark-sql

select * form emp;

查看spark sql执行计划(sql语句是任意写的;只是为了查看执行计划)

create table t(key string, value string);

explain extended select a.key*(2+3), b.value from t a join t b on a.key = b.key and a.key > 3;

执行计划:

== Parsed Logical Plan ==

'Project [unresolvedalias(('a.key * (2 + 3)), None), 'b.value]

+- 'Join Inner, (('a.key = 'b.key) && ('a.key > 3))

:- 'UnresolvedRelation `t`, a

+- 'UnresolvedRelation `t`, b

== Analyzed Logical Plan ==

(CAST(key AS DOUBLE) * CAST((2 + 3) AS DOUBLE)): double, value: string

Project [(cast(key#321 as double) * cast((2 + 3) as double)) AS (CAST(key AS DOUBLE) * CAST((2 + 3) AS DOUBLE))#325, value#324]

+- Join Inner, ((key#321 = key#323) && (cast(key#321 as double) > cast(3 as double)))

:- SubqueryAlias a

: +- MetastoreRelation default, t

+- SubqueryAlias b

+- MetastoreRelation default, t

== Optimized Logical Plan ==

Project [(cast(key#321 as double) * 5.0) AS (CAST(key AS DOUBLE) * CAST((2 + 3) AS DOUBLE))#325, value#324]

+- Join Inner, (key#321 = key#323)

:- Project [key#321]

: +- Filter (isnotnull(key#321) && (cast(key#321 as double) > 3.0))

: +- MetastoreRelation default, t

+- Filter (isnotnull(key#323) && (cast(key#323 as double) > 3.0))

+- MetastoreRelation default, t

== Physical Plan ==

*Project [(cast(key#321 as double) * 5.0) AS (CAST(key AS DOUBLE) * CAST((2 + 3) AS DOUBLE))#325, value#324]

+- *SortMergeJoin [key#321], [key#323], Inner

:- *Sort [key#321 ASC NULLS FIRST], false, 0

: +- Exchange hashpartitioning(key#321, 200)

: +- *Filter (isnotnull(key#321) && (cast(key#321 as double) > 3.0))

: +- HiveTableScan [key#321], MetastoreRelation default, t

+- *Sort [key#323 ASC NULLS FIRST], false, 0

+- Exchange hashpartitioning(key#323, 200)

+- *Filter (isnotnull(key#323) && (cast(key#323 as double) > 3.0))

+- HiveTableScan [key#323, value#324], MetastoreRelation default, t

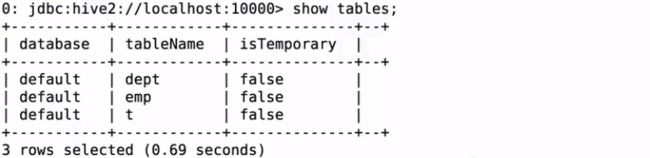

thriftserver&beeline的使用

进入${Spark_home}/sbin下面;启动thriftserver:

start-thriftserver.sh --master local[2] --jars mysql驱动包路径

查看是否启动完成

- 启动thriftserver: 默认端口是10000 ,可以修改

修改thriftserver启动占用的默认端口号:

./start-thriftserver.sh

–master local[2]

–jars ~/software/mysql-connector-java-5.1.27-bin.jar

–hiveconf hive.server2.thrift.port=14000

启动thriftserver后;beeline的对应端口也要修改

beeline -u jdbc:hive2://localhost:14000 -n hadoop

2)启动beeline(${spark_home}/bin/beeline)(可以启动多个beenline客户端)

beeline -u jdbc:hive2://localhost:10000 -n hadoop

运行的sql可以在界面JDBC/ODBC Server界面查看

thriftserver和普通的spark-shell/spark-sql有什么区别?

1)spark-shell、spark-sql都是一个spark application;

2)thriftserver, 不管你启动多少个客户端(beeline/code),永远都是一个spark application

解决了一个数据共享的问题,多个客户端可以共享数据;

jdbc方式编程访问

注意事项:在使用jdbc开发时,一定要先启动thriftserver

Exception in thread “main” java.sql.SQLException:

Could not open client transport with JDBC Uri: jdbc:hive2://hadoop001:14000:

java.net.ConnectException: Connection refused

添加maven依赖

<dependency>

<groupId>org.spark-project.hivegroupId>

<artifactId>hive-jdbcartifactId>

<version>1.2.1.spark2version>

dependency>

package com.kun.sparksql

import java.sql.DriverManager

/**

* 通过JDBC的方式访问

*/

object SparkSQLThriftServerApp {

def main(args: Array[String]) {

Class.forName("org.apache.hive.jdbc.HiveDriver")

val conn = DriverManager.getConnection("jdbc:hive2://hadoop001:14000","hadoop","")

val pstmt = conn.prepareStatement("select empno, ename, sal from emp")

val rs = pstmt.executeQuery()

while (rs.next()) {

println("empno:" + rs.getInt("empno") +

" , ename:" + rs.getString("ename") +

" , sal:" + rs.getDouble("sal"))

}

rs.close()

pstmt.close()

conn.close()

}

}