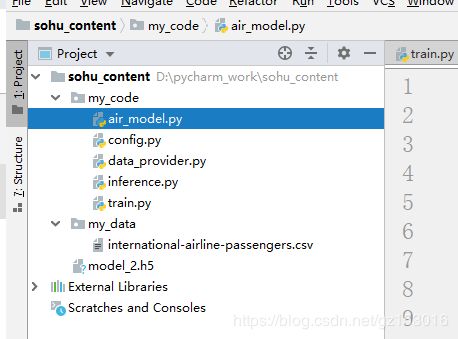

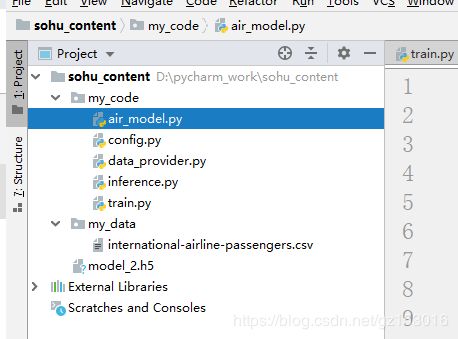

1. Basic file structure of the project

2. Project configuration file config.py

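path_to_dataset = '../my_data/international-airline-passengers.csv'  # CSV read with usecols=['passengers']
split_rate = 0.9          # fraction of the windows used for training; the rest is the test set
infer_seq_length = 10     # length of the history sequence used for each prediction
epochs = 100
validation_split = 0.1    # fraction of the training windows held out by model.fit for validation
save_path = "../model_2.h5"  # train.py saves the model here, inference.py loads it from here
batch_size = 20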
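With these values and the 144 monthly rows recorded in data_provider.py (df.shape: (144, 1)), the number of samples can be worked out by hand. The sketch below is a hypothetical helper (count_samples is not part of the project) that repeats the arithmetic of data_process: one window per start index, then an int() cut at split_rate.

```python
# Values copied from config.py
infer_seq_length = 10
split_rate = 0.9


def count_samples(n_points, infer_seq_length, split_rate):
    """Hypothetical helper: (n_windows, n_train, n_test) as produced by data_process."""
    n_windows = n_points - infer_seq_length  # one window per start index i
    n_train = int(n_windows * split_rate)    # int() truncates, as in data_process
    n_test = n_windows - n_train
    return n_windows, n_train, n_test


print(count_samples(144, infer_seq_length, split_rate))  # (134, 120, 14)
```

This agrees with the shapes (120, 10, 1) and (14, 10, 1) noted in data_provider.py.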

3. Data processing file data_provider.py

import pandas as pd
import numpy as np
from sklearn.preprocessing import MinMaxScaler

import config

np.random.seed(1234)


def data_process(dataset_path, split_rate, infer_seq_length):
    """Load the passenger series, scale it to (0, 1) and cut it into sliding windows.

    Each window holds infer_seq_length inputs followed by one target value.
    Returns X_train, y_train, X_test, y_test (chronological split).
    """
    df = pd.read_csv(dataset_path, usecols=['passengers'])
    # df.shape: (144, 1)

    # Normalization: MinMaxScaler maps the column to (0, 1)
    # Note: the scaler is fit on the whole series, including the windows later used for testing
    scaler_minmax = MinMaxScaler()  # MinMaxScaler(copy=True, feature_range=(0, 1))
    data = scaler_minmax.fit_transform(df)
    # data is a 2-D array [[...], [...], ...] with shape (144, 1)

    # Overlapping windows of length infer_seq_length + 1:
    # the first infer_seq_length values are the input, the last one is the target
    d = []
    for i in range(data.shape[0] - infer_seq_length):
        d.append(data[i:i + infer_seq_length + 1].tolist())
    d = np.array(d)
    # d.shape: (134, 11, 1)

    # Chronological split: the first split_rate of the windows is used for training
    split_at = int(d.shape[0] * split_rate)
    X_train, y_train = d[:split_at, :-1], d[:split_at, -1]
    X_test, y_test = d[split_at:, :-1], d[split_at:, -1]
    # X_train.shape, y_train.shape: (120, 10, 1) (120, 1)
    # X_test.shape, y_test.shape:   (14, 10, 1) (14, 1)

    return X_train, y_train, X_test, y_test


if __name__ == "__main__":
    X_train, y_train, X_test, y_test = data_process(
        config.path_to_dataset, config.split_rate, config.infer_seq_length)
    print(X_train.shape, y_train.shape, X_test.shape, y_test.shape)
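The loop in data_process builds the windows one at a time. As an alternative, assuming NumPy 1.20 or later (which provides numpy.lib.stride_tricks.sliding_window_view), the same windows can be built without a Python loop. make_windows and split_windows are hypothetical names, and the demo uses a synthetic ramp instead of the real passenger data.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(data, infer_seq_length):
    """Return shape (n_points - infer_seq_length, infer_seq_length + 1, 1).

    Each row is infer_seq_length inputs followed by one target,
    like the loop in data_process.
    """
    series = np.asarray(data, dtype=float).reshape(-1)  # (n, 1) -> (n,)
    windows = sliding_window_view(series, window_shape=infer_seq_length + 1)
    return windows[..., np.newaxis].copy()  # restore the feature axis; copy makes it writable


def split_windows(d, split_rate):
    """Chronological split: the first split_rate of the windows is for training."""
    split_at = int(d.shape[0] * split_rate)
    return d[:split_at, :-1], d[:split_at, -1], d[split_at:, :-1], d[split_at:, -1]


# Synthetic stand-in for the 144 normalized values (not the real dataset)
data = np.linspace(0.0, 1.0, 144).reshape(-1, 1)
d = make_windows(data, infer_seq_length=10)

# Same windows as the explicit loop used in data_process
d_loop = np.array([data[i:i + 11].tolist() for i in range(144 - 10)])
assert np.allclose(d, d_loop)

X_train, y_train, X_test, y_test = split_windows(d, 0.9)
print(X_train.shape, y_train.shape, X_test.shape, y_test.shape)
# (120, 10, 1) (120, 1) (14, 10, 1) (14, 1)
```

The windowing only rearranges values that are already scaled, so it could replace the loop inside data_process without changing the returned shapes.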

4. Network model file air_model.py

import os

# Comma-separated list of visible GPU IDs; set it before TensorFlow initializes the GPUs
os.environ["CUDA_VISIBLE_DEVICES"] = "0,1"

import numpy as np
from keras.models import Sequential
from keras.layers import LSTM, Dense, Activation, Dropout
from keras.utils import plot_model

np.random.seed(1234)


def create_model():
    model = Sequential()
    # The input shape is (n_samples, timesteps, features).
    # The hidden layer has 256 units; in input_shape=(None, 1), None allows any
    # number of timesteps and the second element, 1, means one feature.
    # Another LSTM follows, so return_sequences is set to True.
    model.add(LSTM(units=256, input_shape=(None, 1), return_sequences=True))
    model.add(Dropout(0.2))
    # The last LSTM returns only its final output
    model.add(LSTM(units=256, return_sequences=False))
    model.add(Dropout(0.2))
    # The last layer is a fully connected Dense (feedforward) layer that outputs
    # a single value, so units=1. Since this is a regression, its activation is linear.
    model.add(Dense(units=1))
    model.add(Activation('linear'))
    model.compile(loss='mse', optimizer='adam')
    return model


if __name__ == "__main__":
    model = create_model()
    model.summary()
    # plot_model(model, "international-airline-passengers.png", show_shapes=True)  # save a diagram of the model
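As a sanity check on the architecture, the trainable parameter counts can be worked out by hand. The sketch assumes the standard Keras LSTM layout (an input kernel, a recurrent kernel and a bias, each covering the four gates) with the default use_bias=True; Dropout and Activation layers add no parameters. Under these assumptions the totals should agree with what model.summary() reports for create_model(). The helper names are hypothetical.

```python
def lstm_params(input_dim, units):
    # kernel (input_dim x 4*units) + recurrent_kernel (units x 4*units) + bias (4*units);
    # the factor 4 covers the input, forget, cell and output gates
    return 4 * units * (input_dim + units + 1)


def dense_params(input_dim, units):
    # weight matrix + bias vector
    return input_dim * units + units


# Layer sizes from create_model(); the labels are only for printing
layers = [
    ("LSTM(256), 1 input feature", lstm_params(1, 256)),
    ("LSTM(256), 256 inputs", lstm_params(256, 256)),
    ("Dense(1)", dense_params(256, 1)),
]
for name, n in layers:
    print(f"{name}: {n}")
print("total:", sum(n for _, n in layers))
# LSTM(256), 1 input feature: 264192
# LSTM(256), 256 inputs: 525312
# Dense(1): 257
# total: 789761
```

Most of the weights sit in the second LSTM, whose input and recurrent kernels are both 256 wide; next to 120 training windows this is a large model.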

5. Model training file train.py

import numpy as np

import air_model
import config
import data_provider

np.random.seed(1234)


def create_network(model=None, data=None):
    """Train the LSTM model and save it to config.save_path.

    model: an already built model; if None, air_model.create_model() is used.
    data:  a tuple (X_train, y_train, X_test, y_test); if None, it is loaded
           with data_provider.data_process().
    """
    if data is None:
        print('Loading data... ')
        X_train, y_train, X_test, y_test = data_provider.data_process(
            config.path_to_dataset, config.split_rate, config.infer_seq_length)
    else:
        X_train, y_train, X_test, y_test = data

    print('\nData Loaded. Compiling...\n')
    if model is None:
        model = air_model.create_model()

    # validation_split holds out the last part of the training windows for validation.
    # Alternative: validation_data=(X_test, y_test) to monitor the test set instead.
    history = model.fit(X_train, y_train,
                        batch_size=config.batch_size,
                        epochs=config.epochs,
                        validation_split=config.validation_split)

    # Save the model (architecture, weights and optimizer state)
    model.save(config.save_path)

    # MSE of the trained model on the test windows
    print("test loss (MSE):", model.evaluate(X_test, y_test, verbose=0))
    return model, history


if __name__ == "__main__":
    create_network()
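model.fit does not shuffle before carving out validation_split: the Keras documentation states that the validation data is taken from the last samples of x and y, before shuffling. Because the windows are in time order, the validation set is the most recent stretch of the training period. The arithmetic below is a hypothetical helper (fit_split is not part of Keras) that reproduces the split size and the batches per epoch for the values in config.py, assuming the cut is rounded down.

```python
import math


def fit_split(n_samples, validation_split, batch_size):
    """Hypothetical helper: (n_fit, n_val, batches_per_epoch) for model.fit."""
    n_fit = int(math.floor(n_samples * (1.0 - validation_split)))  # first part trains
    n_val = n_samples - n_fit                                         # last part validates
    batches_per_epoch = math.ceil(n_fit / batch_size)                 # final batch may be smaller
    return n_fit, n_val, batches_per_epoch


# 120 training windows, validation_split = 0.1, batch_size = 20 (from config.py)
print(fit_split(120, 0.1, 20))  # (108, 12, 6)
```

So each of the 100 epochs runs five full batches of 20 and one batch of 8, and val_loss is computed on the last 12 training windows.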

6. Prediction file inference.py

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
from keras.models import load_model

import config
import data_provider

np.random.seed(1234)


def inference(model=None, data=None):
    infer_seq_length = config.infer_seq_length
    split_rate = config.split_rate

    if data is None:
        print('Loading data... ')
        X_train, y_train, X_test, y_test = data_provider.data_process(
            config.path_to_dataset, split_rate, infer_seq_length)
    else:
        X_train, y_train, X_test, y_test = data

    # Use the model passed in, otherwise load the one saved by train.py
    if model is None:
        model = load_model(config.save_path)

    # Plot 1: test windows, still in normalized units
    predicted = model.predict(X_test).reshape(-1)
    print("y_test.shape:", y_test.shape)  # (14, 1)
    plt.figure()
    plt.plot(y_test, label='true data')
    plt.plot(predicted, 'r:', label='predict')
    plt.legend()
    plt.show()

    # Rebuild the same scaler and windows as data_provider.data_process,
    # so that predictions can be mapped back to passenger counts
    df = pd.read_csv(config.path_to_dataset, usecols=['passengers'])
    scaler_minmax = MinMaxScaler()
    data = scaler_minmax.fit_transform(df)
    d = np.array([data[i:i + infer_seq_length + 1].tolist()
                  for i in range(data.shape[0] - infer_seq_length)])

    # inverse_transform recovers the original values from the normalized ones
    # Plot 2: the whole series (training and test windows)
    plt.figure()
    plt.plot(scaler_minmax.inverse_transform(d[:, -1]), label='true data')
    plt.plot(scaler_minmax.inverse_transform(model.predict(d[:, :-1])), 'r:', label='predict')
    plt.legend()
    plt.show()

    # Plot 3: the test part only
    split_at = int(len(d) * split_rate)
    plt.figure()
    plt.plot(scaler_minmax.inverse_transform(d[split_at:, -1]), label='true data')
    plt.plot(scaler_minmax.inverse_transform(model.predict(d[split_at:, :-1])), 'r:', label='predict')
    plt.legend()
    plt.show()


if __name__ == "__main__":
    inference()
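The plots show the fit visually; a single error figure in the original units can be computed the same way. For feature_range=(0, 1), MinMaxScaler computes x_scaled = (x - min) / (max - min), so inverse_transform is x = x_scaled * (max - min) + min. The sketch below writes both steps out in NumPy and computes an RMSE; minmax_fit, minmax_inverse and rmse are hypothetical helpers, and the three-point series is made up for illustration. In inference.py, scaler_minmax.inverse_transform plays the role of minmax_inverse.

```python
import numpy as np


def minmax_fit(x):
    """Per-column min and range, as MinMaxScaler(feature_range=(0, 1)) uses them."""
    x = np.asarray(x, dtype=float)
    data_min = x.min(axis=0)
    return data_min, x.max(axis=0) - data_min


def minmax_inverse(x_scaled, data_min, data_range):
    """Undo x_scaled = (x - min) / (max - min)."""
    return np.asarray(x_scaled, dtype=float) * data_range + data_min


def rmse(y_true, y_pred):
    """Root mean squared error between two arrays of the same shape."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


# Made-up raw values (a column vector, like df[['passengers']])
raw = np.array([[100.0], [200.0], [300.0]])
data_min, data_range = minmax_fit(raw)
y_scaled = (raw - data_min) / data_range         # [[0.], [0.5], [1.]]
assert np.allclose(minmax_inverse(y_scaled, data_min, data_range), raw)  # round trip

pred_scaled = np.array([[0.05], [0.5], [0.95]])  # pretend model output
pred = minmax_inverse(pred_scaled, data_min, data_range)  # [[110.], [200.], [290.]]
print(round(rmse(raw, pred), 2))                          # 8.16
```

With the real data, applying rmse to scaler_minmax.inverse_transform(d[int(len(d) * split_rate):, -1]) and the inverse-transformed predictions for the same windows would give the test error in passenger counts rather than in scaled units.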