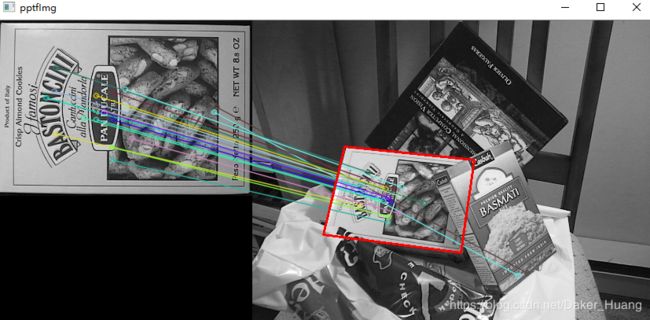

OpenCV C++: KAZE (AKAZE) Local Feature Matching (Part 2)

The previous post covered detecting KAZE (AKAZE) local features; the only step left is matching them.

So let's implement that now.

Here is the code:

#include <opencv2/opencv.hpp>
#include <algorithm>
#include <cstdio>
#include <vector>

using namespace cv;
using namespace std;

Mat img1, img2;

int main(int argc, char** argv)
{
    img1 = imread("D:/test/box.png", IMREAD_GRAYSCALE);
    img2 = imread("D:/test/box_in_scene.png", IMREAD_GRAYSCALE);
    if (img1.empty() || img2.empty())
    {
        printf("Image not found...\n");
        return -1;
    }
    imshow("input box", img1);
    imshow("input box_in_scene", img2);

    double t1 = static_cast<double>(getTickCount());

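    // Detect feature points (nonlinear scale space)
    Ptr<AKAZE> detector = AKAZE::create();
    //Ptr<KAZE> detector = KAZE::create(); // KAZE detection (KAZE descriptors are floating point, so the LSH matcher below would not apply)

    // Storage for the descriptors
    Mat descriptor_obj, descriptor_scene;

    // Detect keypoints in img1 and compute their descriptors
    vector<KeyPoint> keypoints_obj;
    detector->detectAndCompute(img1, Mat(), keypoints_obj, descriptor_obj);

    // Detect keypoints in img2 and compute their descriptors
    vector<KeyPoint> keypoints_scene;
    detector->detectAndCompute(img2, Mat(), keypoints_scene, descriptor_scene);

    double t2 = static_cast<double>(getTickCount());
    double t = (t2 - t1) * 1000 / getTickFrequency(); // convert the result to milliseconds
    printf("Time spent finding feature points (ms): %f\n", t);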

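    // Use a FLANN matcher to match the two sets of descriptors
    FlannBasedMatcher fbMatcher(makePtr<flann::LshIndexParams>(20, 10, 2)); // use LshIndexParams
    /* A default-constructed FlannBasedMatcher cannot be used here: its default KD-tree index
       expects CV_32F descriptors, while AKAZE's default descriptors are binary (CV_8UC1),
       so matching fails with a type error. The LSH index handles binary descriptors. */
    // Brute-force matching is an alternative (Hamming distance suits binary descriptors)
    BFMatcher bfmatches(NORM_HAMMING);
    vector<DMatch> matches;
    fbMatcher.match(descriptor_obj, descriptor_scene, matches);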

    // Draw the match lines
    Mat resultImg;
    drawMatches(img1, keypoints_obj, img2, keypoints_scene, matches, resultImg,
        Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    /* Passing DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS as the last argument hides the unmatched
       keypoints and draws only the matched ones. */
    imshow("AKAZE Matches", resultImg);

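    /* The result above contains a large number of matches. To discard the weak ones,
       filter them as follows. */
    vector<DMatch> goodmatches; // the best matches picked from all matches
    /*
       1. Traverse all matches to find the smallest and largest distance.
       2. Keep the matches whose distance is close to the smallest one.
    */
    double minDist = 1000; // initial values
    double maxDist = 0;
    // Iterate over matches.size() rather than descriptor_obj.rows:
    // the FLANN matcher may return fewer matches than there are query descriptors.
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist > maxDist)
        {
            maxDist = dist;
        }
        if (dist < minDist)
        {
            minDist = dist;
        }
    }
    // Hamming distances are integers, so the 0.02 floor only matters when minDist is 0
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < std::max(2 * minDist, 0.02))
        {
            goodmatches.push_back(matches[i]);
        }
    }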

    Mat goodmatchesImg;
    drawMatches(img1, keypoints_obj, img2, keypoints_scene, goodmatches, goodmatchesImg,
        Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("goodmatchesImg", goodmatchesImg);

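    // Match lines alone are not very intuitive, so use a perspective transform instead
    //------------------- Planar object recognition (outline the matched object with a rectangle) --------------------------
    // Collect the matched point pairs used to estimate the perspective transform
    vector<Point2f> obj;
    vector<Point2f> objinscene;
    for (size_t i = 0; i < goodmatches.size(); i++)
    {
        obj.push_back(keypoints_obj[goodmatches[i].queryIdx].pt);
        objinscene.push_back(keypoints_scene[goodmatches[i].trainIdx].pt);
    }
    // findHomography needs at least 4 point pairs
    if (obj.size() < 4)
    {
        printf("Not enough good matches to estimate a homography\n");
        waitKey(0);
        return -1;
    }
    Mat H = findHomography(obj, objinscene, RANSAC); // estimate the perspective transform matrix
    if (H.empty())
    {
        printf("Homography estimation failed\n");
        waitKey(0);
        return -1;
    }
    vector<Point2f> obj_corner(4); // the 4 corners of the source image
    vector<Point2f> objinscene_corner(4);
    obj_corner[0] = Point2f(0.0f, 0.0f);
    obj_corner[1] = Point2f(static_cast<float>(img1.cols), 0.0f);
    obj_corner[2] = Point2f(static_cast<float>(img1.cols), static_cast<float>(img1.rows));
    obj_corner[3] = Point2f(0.0f, static_cast<float>(img1.rows));
    //------------------ Perspective transform ---------------------
    perspectiveTransform(obj_corner, objinscene_corner, H);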

    Mat pptfImg = goodmatchesImg.clone();
    // drawMatches places img2 to the right of img1, so shift each corner by img1's width
    Point2f offset(static_cast<float>(img1.cols), 0.0f);
    for (int i = 0; i < 4; i++)
    {
        line(pptfImg, objinscene_corner[i] + offset, objinscene_corner[(i + 1) % 4] + offset, Scalar(0, 0, 255), 2, LINE_8, 0);
    }
    imshow("pptfImg", pptfImg);
    waitKey(0);
    return 0;
}
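
The good-match filtering step in the listing is easier to reason about in isolation. Below is a minimal sketch of the same rule (keep a match if its distance is below `max(2 * minDist, 0.02)`), written without OpenCV so it can be compiled on its own. `SimpleMatch` and `filterByMinDistance` are hypothetical names introduced for illustration only; they stand in for `cv::DMatch` and the two loops in `main`, and are not part of OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields this sketch needs.
struct SimpleMatch {
    int queryIdx;    // index of the descriptor in the object image
    int trainIdx;    // index of the descriptor in the scene image
    float distance;  // descriptor distance (Hamming distance for AKAZE)
};

// Keep the matches whose distance is below max(factor * minDist, floorDist).
// The defaults mirror the constants used in the listing (2 and 0.02).
std::vector<SimpleMatch> filterByMinDistance(const std::vector<SimpleMatch>& matches,
                                             double factor = 2.0,
                                             double floorDist = 0.02)
{
    assert(factor > 0.0);
    std::vector<SimpleMatch> good;
    if (matches.empty())
        return good;

    // Pass 1: find the smallest distance over all matches.
    double minDist = matches.front().distance;
    for (const SimpleMatch& m : matches)
        minDist = std::min(minDist, static_cast<double>(m.distance));

    // Pass 2: keep every match strictly below the threshold.
    const double threshold = std::max(factor * minDist, floorDist);
    for (const SimpleMatch& m : matches)
        if (m.distance < threshold)
            good.push_back(m);
    return good;
}
```

For example, with distances 10, 15, 20 and 42 the smallest distance is 10, the threshold is 20, and only the first two matches are kept because the comparison is strict. Note that the function walks the match vector itself rather than the descriptor row count, which stays safe when the matcher returns fewer matches than there are query descriptors.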