Coursera | Andrew Ng (03-week2-2.7)—迁移学习

该系列仅在原课程基础上部分知识点添加个人学习笔记,或相关推导补充等。如有错误,还请批评指教。在学习了 Andrew Ng 课程的基础上,为了更方便的查阅复习,将其整理成文字。因本人一直在学习英语,所以该系列以英文为主,同时也建议读者以英文为主,中文辅助,以便后期进阶时,为学习相关领域的学术论文做铺垫。- ZJ

Coursera 课程 |deeplearning.ai |网易云课堂

转载请注明作者和出处:ZJ 微信公众号-「SelfImprovementLab」

知乎:https://zhuanlan.zhihu.com/c_147249273

CSDN:http://blog.csdn.net/junjun_zhao/article/details/79179658

2.7 Transfer learning (迁移学习)

(字幕来源:网易云课堂)

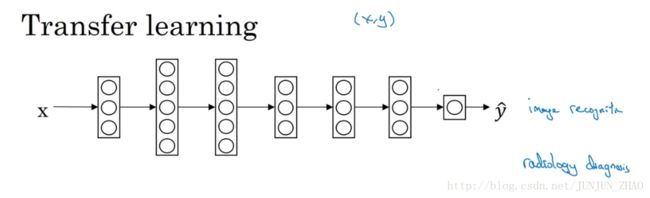

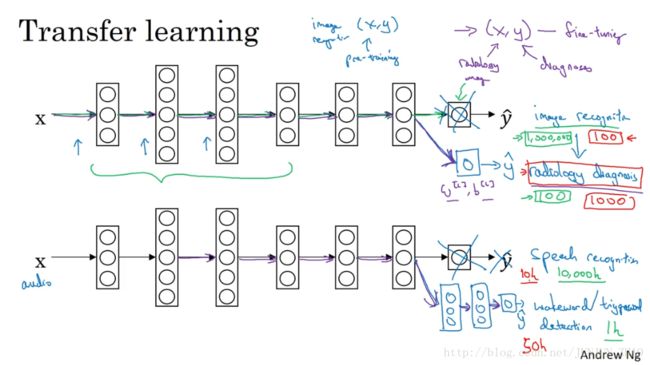

One of the most powerful ideas in deep learning is that sometimes you can take knowledge the neural network has learned from one task and apply that knowledge to a separate task.So for example, maybe you could have the neural network learn to recognize objects like cats and then use that knowledge or use part of that knowledge to help you do a better job reading x-ray scans.This is called transfer learning. Let’s take a look.Let’s say you’ve trained your neural network on image recognition.So you first take a neural network and train it on X Y pairs, where X is an image and Y is some object.An image is a cat or a dog or a bird or something else.If you want to take this neural network and adapt, or we say transfer, what is learned to a different task, such as radiology diagnosis, meaning really reading X-ray scans.

深度学习中最强大的理念之一就是,有的时候,神经网络可以从一个任务中习得知识, 并将这些知识应用到另一个独立的任务中。所以例如 也许你已经训练好一个神经网络,能够识别像猫这样的对象 然后使用,那些知识 或者部分习得的知识,去帮助您更好地阅读 x 射线扫描图,这就是所谓的迁移学习 我们来看看,假设你已经训练好一个图像识别神经网络,所以你首先用一个神经网络 并在 x y 对上训练,其中 x 是图像 y 是某些对象,图像是猫或狗 鸟或其他东西,如果你把这个神经网络拿来 然后让它适应,或者说迁移,在不同任务中学到的知识,比如放射科诊断,就是说阅读 X 射线扫描图。

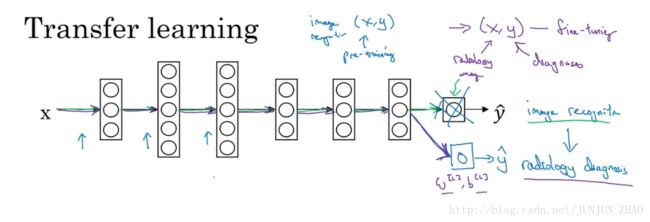

what you can do is take this last output layer of the neural network and just delete that and delete also the weights feeding into that last output layer and create a new set of randomly initialized weights just for the last layer and have that now output radiology diagnosis.So to be concrete, during the first phase of training when you’re training on an image recognition task, you train all of the usual parameters for the neural network, all the weights, all the layers and you have something that now learns to make image recognition predictions.Having trained that neural network, what you now do to implement transfer learning is swap in a new data set X Y, where now these are radiology images.And y are the diagnoses you want to predict and what you do is initialize the last layers’ weights.Let’s call that w[l] w [ l ] and b[l] b [ l ] . randomly.

你可以做的是 把神经网络最后的输出层拿走,就把它删掉 还有进入到最后一层的权重删掉,然后为最后一层重新赋予随机权重,然后让它在放射诊断数据上训练,具体来说 在第一阶段训练过程中,当你进行图像识别任务训练时,你可以训练神经网络的所有常用参数,所有的权重 所有的层,然后你就得到了一个能够做图像识别预测的网络,在训练了这个神经网络后,要实现迁移学习 你现在要做的是 把数据集换成新的 x y 对,现在这些变成放射科图像,而 y 是你想要预测的诊断,你要做的是初始化最后一层的权重,让我们称之为 w[l] w [ l ] 和 b[l] b [ l ] 随机初始化。

And now, retrain the neural network on this new data set, on the new radiology data set.You have a couple options of how you retrain neural network with radiology data.You might, if you have a small radiology dataset, you might want to just retrain the weights of the last layer, just w[l] w [ l ] and b[l] b [ l ] , and keep the rest of the parameters fixed.If you have enough data, you could also retrain all the layers of the rest of the neural network.And the rule of thumb is maybe if you have a small data set, then just retrain the one last layer at the output layer.Or maybe that last one or two layers.But if you have a lot of data, then maybe you can retrain all the parameters in the network.And if you retrain all the parameters in the neural network, then this initial phase of training on image recognition is sometimes called pre-training, because you’re using image recognitions data to pre-initialize or really pre-train the weights of the neural network.And then if you are updating all the weights afterwards, then training on the radiology data sometimes that’s called fine tuning.So you hear the words pre-training and fine tuning in a deep learning context, this is what they mean when they refer to pre-training and fine tuning weights in a transfer learning source.And what you’ve done in this example, is you’ve taken knowledge learned from image recognition and applied it or transferred it to radiology diagnosis.And the reason this can be helpful is that a lot of the low level features such as detecting edges, detecting curves, detecting positive objects.Learning from that, from a very large image recognition data set, might help your learning algorithm do better in radiology diagnosis.It’s just learned a lot about the structure and the nature of how images look like and some of that knowledge will be useful.So having learned to recognize images, it might have learned enough about you know, just what parts of different images look like, that that knowledge about lines, dots, curves, and so on, maybe small parts of objects, that knowledge could help your radiology diagnosis network learn a bit faster or learn with less data.

现在 我们在这个新数据集上重新训练网络,在新的放射科数据集上训练网络,要用放射科数据集从新训练神经网络有几种做法,你可能 如果你的放射科数据集很小,你可能只需要重新训练最后一层的权重,就是 w[l] w [ l ] b[l] b [ l ] , 并保持其他参数不变,如果你有足够多的数据,你可以重新训练神经网络中剩下的所有层,经验规则是 如果你有一个小数据集,就只训练输出层前的最后一层,或者也许是最后一两层,但是如果你有很多数据,那么也许你可以重新训练网络中的所有参数,如果你重新训练神经网络中的所有参数,那么这个在图像识别数据的初期训练阶段,有时称为预训练,因为你再用图像识别数据,去预先初始化 或者预训练神经网络的权重,然后 如果你以后更新所有权重,然后在放射科数据上训练 有时这个过程叫微调,如果你在深度学习文献中看到预训练和微调,你就知道它们说的是这个意思,预训练和微调迁移学习来源的权重,在这个例子中你做的是,把图像识别中学到的知识,应用或迁移到放射科诊断上来,为什么这样做有效果呢?有很多 低层次特征 比如说边缘检测,曲线检测 阳性对象检测,从非常大的图像识别数据库中习得这些能力,可能有助于你的学习算法在放射科诊断中做得更好,算法学到了很多结构信息 图像形状的信息,其中一些知识可能会很有用,所以学会了图像识别,它就可能学到足够多的信息 可以了解,不同图像的组成部分是怎样的,学到线条,点 曲线 这些知识,也许对象的一小部分,这些知识有可能帮助你的放射科诊断网络,学习更快一些 或者需要更少的学习数据。

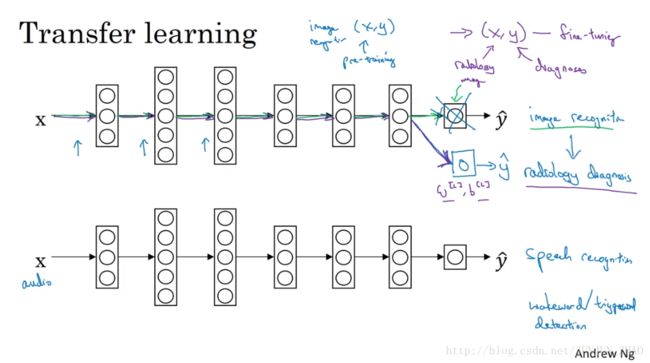

Here’s another example.Let’s say that you’ve trained a speech recognition system so now x is input of audio or audio snippets, and y is some ink transcript.So you’ve trained in speech recognition system to output your transcripts.And let’s say that you now want to build a “wake words” or a “trigger words”detection system.So, recall that a wake word or the trigger word are the words we say in order to wake up speech control devices in our houses such as saying”Alexa” to wake up an Amazon Echo or “OK Google” to wake up a Google device or”hey Siri” to wake up an Apple device or saying “Ni hao baidu” to wake up a baidu device.

这里是另一个例子,假设你已经训练出一个语音识别系统,现在 x 是音频或音频片段输入,而 y 是听写文本,所以你已经训练了语音识别系统 让它输出听写文本,现在我们说你想搭建一个“唤醒词”或“触发词”,检测系统,所谓唤醒词或触发词就是我们说的一句话,可以唤醒家里的语音控制设备 比如你说,“Alexa”可以唤醒一个亚马逊 Echo 设备 或用“OK Google”来唤醒 Google 设备,用” Hey Siri” 来唤醒苹果设备 用”你好百度”唤醒一个百度设备。

So in order to do this, you might take out the last layer of the neural network again and create a new output node.But sometimes another thing you could do is actually create not just a single new output, but actually create several new layers to your neural network to try to put the labels Y for your wake word detection problem.Then again, depending on how much data you have, you might just retrain the new layers of the network or maybe you could retrain even more layers of this neural network.So, when does transfer learning make sense?Transfer learning makes sense when you have a lot of data for the problem you’re transferring from and usually relatively less data for the problem you’re transferring to.

要做到这点,你可能需要去掉神经网络的最后一层,然后加入新的输出节点,但有时你可以不只加入一个新节点,或者甚至往你的神经网络加入几个新层,然后把唤醒词检测问题的标签 Y 喂进去训练,再次 这取决于你有多少数据,你可能只需要重新训练网络的新层 也许你需要,重新训练神经网络中更多的层,那么迁移学习什么时候是有意义的呢? 迁移学习起作用的场合是,迁移来源问题你有很多数据,但迁移目标问题你没有那么多数据。

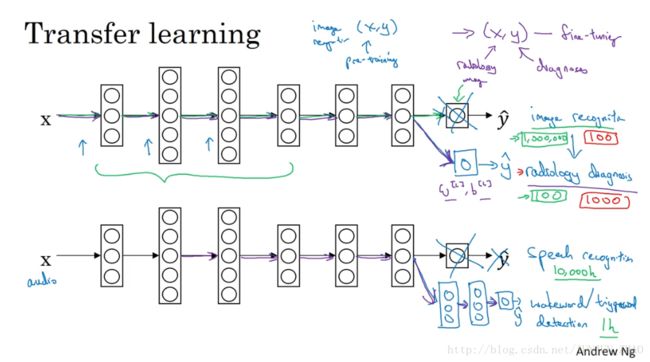

So for example, let’s say you have a million examples for image recognition task.So that’s a lot of data to learn a lot of low level features or to learn a lot of useful features in the earlier layers in neural network.But for the radiology task, maybe you have only a hundred examples.So you have very low data for the radiology diagnosis problem, maybe only 100 x-ray scans.So a lot of knowledge you learn from image recognition can be transferred and can really help you get going with radiology recognition even if you don’t have all the data for radiology.For speech recognition, maybe you’ve trained the speech recognition system on 10000 hours of data.So, you’ve learned a lot about what human voices sounds like from that 10000 hours of data, which really is a lot.But for your trigger word detection, maybe you have only one hour of data.So, that’s not a lot of data to fit a lot of parameters.So in this case, a lot of what you learn about what human voices sound like, what are components of human speech and so on, that can be really helpful for building a good wake word detector, even though you have a relatively small dataset or at least a much smaller dataset for the wake word detection task.So in both of these cases, you’re transferring from a problem with a lot of data to a problem with relatively little data.One case where transfer learning would not make sense, is if the opposite was true.So, if you had a hundred images for image recognition and you had100 images for radiology diagnosis or even a thousand images for radiology diagnosis, one would think about it is that to do well on radiology diagnosis, assuming what you really want to do well on this radiology diagnosis, having radiology images is much more valuable than having cat and dog and so on images.So each example here is much more valuable than each example there, at least for the purpose of building a good radiology system.

例如 假设图像识别任务中你有 1 百万个样本,所以这里数据相当多,可以学习低层次特征 可以在神经网络的,前面几层学到如何识别很多有用的特征,但是对于放射科任务,也许你只有一百个样本,所以你的放射学诊断问题数据很少,也许只有 100 次 X 射线扫描,所以你从图像识别训练中学到的很多知识可以迁移,并且真正帮你加强,放射科识别任务的性能 即使你的放射科数据很少,对于语音识别 也许你已经用 10000 小时数据,训练过你的语言识别系统,所以你从这 10000 小时数据学到了很多人类声音的特征,这数据量其实很多了,但对于触发字检测,也许你只有 1 小时数据,所以这数据太小 不能用来拟合很多参数,所以在这种情况下 预先学到很多人类声音的特征,人类语言的组成部分等等知识,可以帮你建立一个很好的唤醒字检测器,即使你的数据集相对较小,对于唤醒词任务来说 至少数据集要小得多,所以在这两种情况下,你从数据量很多的问题 迁移到,数据量相对小的问题,然后反过来的话,迁移学习可能就没有意义了,比如 你用 100 张图训练图像识别系统,然后有 100甚至 1000 张图用于训练放射科诊断系统,人们可能会想 为了提升放射科诊断的性能,假设你真的希望这个放射科诊断系统做得好,那么用放射科图像训练 可能比使用猫和狗的图像更有价值,所以这里的每个样本价值比这里要大得多,至少就建立性能良好的放射科系统而言是这样。

So, if you already have more data for radiology, it’s not that likely that having 100 images of your random objects of cats and dogs and cars and so on will be that helpful, because the value of one example of image from your image recognition task of cats and dogs is just less valuable than one example of an x-ray image for the task of building a good radiology system.So, this would be one example where transfer learning, well, it might not hurt but I wouldn’t expect it to give you any meaningful gain either.And similarly, if you’d built a speech recognition system on 10 hours of data and you actually have 10 hours or maybe even more, say 50 hours of data for wake word detection, you know it won’t, it may or may not hurt, maybe it won’t hurt to include that 10 hours of data to your transfer learning, but you just wouldn’t expect to get a meaningful gain.

所以 如果你的放射科数据更多,那么你这 100 张,猫猫狗狗或者随机物体的图片肯定不会有太大帮助,因为来自猫狗识别任务中,每一张图的价值,肯定不如一张 X 射线扫描图有价值,对于建立良好的放射科诊断系统而言是这样,所以 这是其中一个例子 说明迁移学习,可能不会有害 但也别指望这么做可以带来有意义的增益,同样 如果你用 10 小时数据训练出一个语音识别系统,然后你实际上有 10 个小时甚至更多,比如说 50 个小时唤醒字检测的数据,你知道迁移学习有可能会有帮助 也可能不会,也许把这10小时数据迁移学习不会有太大坏处,但是你也别指望会得到有意义的增益。

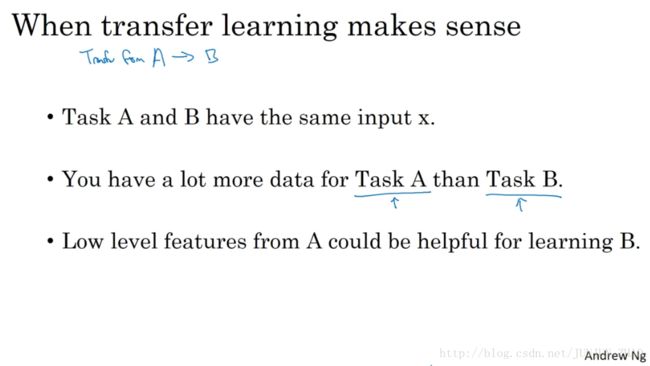

So to summarize, when does transfer learning make sense?If you’re trying to learn from some Task A and transfer some of the knowledge to some Task B, then transfer learning makes sense when Task A and B have the same input x.In the first example,A and B both have images as input.In the second example, both have audio clips as input.It tends to make sense when you have a lot more data for Task A than for Task B.All this is under the assumption that what you really want to do well on is Task B.And because data for Task B is more valuable for Task B, usually you just need a lot more data for Task A because you know, each example from Task A is just less valuable for Task B than each example for Task B.And then finally, transfer learning will tend to make more sense if you suspect that low level features from Task A could be helpful for learning Task B.And in both of the earlier examples, maybe learning image recognition teaches you enough about images to have a radiology diagnosis and maybe learning speech recognition teaches you about human speech to help you with trigger word or wake word detection.

所以总结一下 什么时候迁移学习是有意义的?如果你想从任务 A 学习,并迁移一些知识到任务 B,那么当任务 A 和任务 B 都有同样的输入 x 时 迁移学习是有意义的,在第一个例子中,A 和 B 的输入都是图像,在第二个例子中,两者输入都是音频,当任务 A 的数据比任务 B 多得多时 迁移学习意义更大,所有这些假设的前提都是 你希望提高任务 B 的性能,因为任务 B 每个数据更有价值 对任务 B 来说,通常任务 A 的数据量必须大得多 才有帮助,因为任务 A 里单个样本的价值比任务 B 单个样本价值更大,然后如果你觉得任务 A 的低层次特征,可以帮助任务 B 的学习 那迁移学习更有意义一些,而在这两个前面的例子中,也许学习图像识别教给系统足够多图像相关的知识,让它可以进行放射科诊断,也许学习语音识别教给系统,足够多人类语言信息 能帮助你开发触发字或唤醒字检测器。

So to summarize, transfer learning has been most useful if you’re trying to do well on some Task B, usually a problem where you have relatively little data.So for example, in radiology, you know it’s difficult to get that many x-ray scans to build a good radiology diagnosis system.So in that case, you might find a related but different task, such as image recognition, where you can get maybe a million images and learn a lot of low level features from that, so that you can then try to do well on Task B on your radiology task despite not having that much data for it.When transfer learning makes sense?It does help the performance of your learning task significantly.But I’ve also seen sometimes seen transfer learning applied in settings whereTask A actually has less data than Task B and in those cases, you kind of don’t expect to see much of a gain.

所以总结一下 迁移学习最有用的场合是,如果你尝试优化任务 B 的性能,通常这个任务数据相对较少,例如 在放射科中,你知道很难收集很多 x 射线扫描图,来搭建一个性能良好的放射科诊断系统,所以在这种情况下 你可能会找一个相关但不同的任务,如图像识别,其中你可能用1 百万张图片训练过了,并从中学到很多低层次特征,所以那也许能帮助网络在任务 B 在放射科任务上做得更好,尽管任务 B 没有这么多数据,迁移学习什么时候是有意义的?

So, that’s it for transfer learning where you learn from one task and try to transfer to a different task.There’s another version of learning from multiple tasks which is called multitask learning, which is when you try to learn from multiple tasks at the same time rather than learning from one and then sequentially, or after that, trying to transfer to a different task.So in the next video, let’s discuss multitasking learning.

它确实可以显著提高你的学习任务的性能,但我有时候也见过 有些场合使用迁移学习时,任务 A 实际上数据量比任务 B 要少 这种情况下,增益可能不多,好 这就是迁移学习,你从一个任务中学习 然后尝试迁移到另一个不同任务中,从多个任务中学习还有另外一个版本,就是所谓的多任务学习,当你尝试从多个任务中并行学习,而不是串行学习 在训练了一个任务之后,试图迁移到另一个任务,所以在下一个视频中,让我们来讨论多任务学习。

重点总结:

迁移学习

将从一个任务中学到的知识,应用到另一个独立的任务中。

迁移学习的意义:

迁移学习适合以下场合:迁移来源问题有很多数据,但是迁移目标问题却没有那么多的数据。

假设图像识别任务中有1百万个样本,里面的数据相当多;但对与一些特定的图像识别问题,如放射科图像,也许只有一百个样本,所以对于放射学诊断问题的数据很少。所以从图像识别训练中学到的很多知识可以迁移,来帮助我们提升放射科识别任务的性能。

同样一个例子是语音识别,可能在普通的语音识别中,我们有庞大的数据量来训练模型,所以模型从中学到了很多人类声音的特征。但是对于触发字检测任务,可能我们拥有的数据量很少,所以对于这种情况下,学习人类声音特征等知识就显得相当重要。所以迁移学习可以帮助我们建立一个很好的唤醒字检测系统。

迁移学习有意义的情况:

- 任务 A 和任务 B 有着相同的输入;

- 任务 A 所拥有的数据要远远大于任务 B(对于更有价值的任务 B,任务 A 所拥有的数据要比 B 大很多);

- 任务 A 的低层特征学习对任务 B 有一定的帮助;

参考文献:

[1]. 大树先生.吴恩达Coursera深度学习课程 DeepLearning.ai 提炼笔记(3-2)– 机器学习策略(2)

PS: 欢迎扫码关注公众号:「SelfImprovementLab」!专注「深度学习」,「机器学习」,「人工智能」。以及 「早起」,「阅读」,「运动」,「英语 」「其他」不定期建群 打卡互助活动。