Linux进程地址空间管理

目录

1. 重要数据结构说明 2

2. 进程地址空间概览 3

3. 地址区间操作 4

3.1 地址区间查找 4

3.2 地址区间合并 4

3.3 地址区间插入 6

3.4 地址区间创建 6

4. 映射的创建 8

4.1 mmap 8

4.2 munmap 9

4.3堆的管理 10

5. 缺页异常 12

5.1 缺页异常流程 12

5.2匿名映射 14

5.3文件映射 15

5.3.1读异常 16

5.3.2写时复制 16

5.3.3共享映射 16

6. 反向映射 17

6.1 文件映射页 17

6.2匿名页 18

1. 重要数据结构说明

结构体mm_struct管理整个进程地中空间

| struct mm_struct |

|

| struct vm_area_struct *mmap |

进程中所有地址区间(vma)按照升序排列成双向链表 |

| struct rb_root mm_rb |

进程中所有地址区间除了会放到链表mmap还会插入这个红黑树中,分别用于不同应用场景的查找 |

| unsigned long (*get_unmapped_area) () |

查找一个空闲的空间,与体系架构相关 |

| unsigned long mmap_base |

Mmap映射的基地址 |

| unsigned long task_size |

栈大小 |

| unsigned long highest_vm_end |

进程地址空间中没有被使用的highest位置 |

| pgd_t * pgd |

指向进程页表起始地址 |

| atomic_long_t nr_ptes |

进程中用于pte的页数 |

| atomic_long_t nr_pmds |

进程中用于pmd的页数 |

| int map_count |

Vma个数 |

| struct list_head mmlist |

系统中所有mm_struct都会链接到init_mm.mmlist中 |

| unsigned long start_code, end_code, start_data, end_data |

指向进程代码段和数据段的起止地址 |

| unsigned long start_brk, brk, start_stack |

指向堆的起始地址,增长点,栈顶 |

| unsigned long arg_start, arg_end, env_start, env_end |

指向进程的参数和环境变量的起止位置 |

| unsigned long saved_auxv[AT_VECTOR_SIZE] |

指向二进制文件本身的一些新,比如入口位置,程序段的个数等等 |

结构体vm_area_struct用于描述地址空间中一段连续的地址区间。

| struct vm_area_struct |

|

| unsigned long vm_start |

地址区间的起始位置 |

| unsigned long vm_end |

地址区间的结束位置 |

| struct vm_area_struct *vm_next, *vm_prev |

用于链表mm_struct-> mmap |

| struct rb_node vm_rb |

用于插入红黑树mm_struct-> mm_rb |

| unsigned long rb_subtree_gap |

红黑树中vma->left之下最大间隙大小 |

| struct mm_struct *vm_mm |

指向vma所属的mm_struct |

| pgprot_t vm_page_prot |

Vma的访问权限等属性 |

| struct list_head anon_vma_chain |

用于匿名映射,具体请看"反向映射"一节 |

| struct anon_vma *anon_vma |

每个vma都对应一个anon_vma结构,参考"反向映射"一节 |

| unsigned long vm_pgoff |

Vma在file中的偏移 |

| struct file * vm_file |

Vma所映射的文件 |

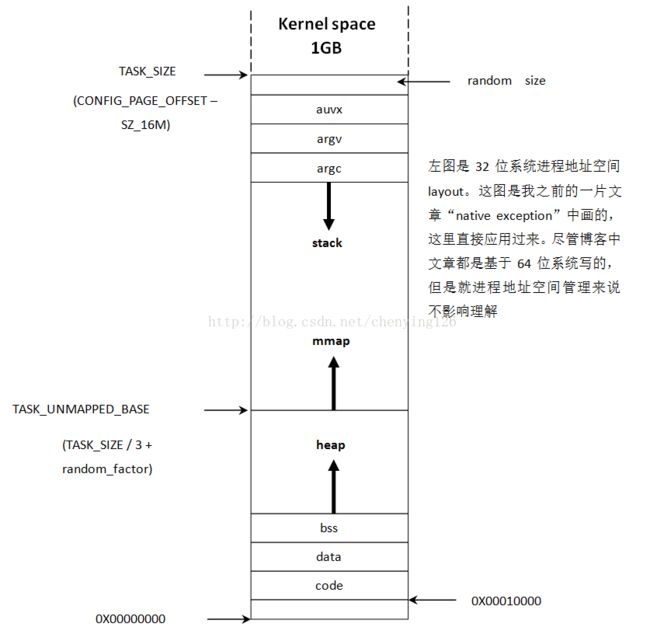

2. 进程地址空间概览

3. 地址区间操作

3.1 地址区间查找

查找包含指定地址addr的区间,并返回其对应的描述结构vm_area_struct。

struct vm_area_struct *find_vma(struct mm_struct *mm, unsigned long addr)

{

struct rb_node *rb_node;

struct vm_area_struct *vma;

//到进程缓存(current->vmacache.vmas[])中去查找,之前缓存的vma结构

vma = vmacache_find(mm, addr);

if (likely(vma))

return vma;

//从红黑树的根节点开始查找包含地址addr的区间

rb_node = mm->mm_rb.rb_node;

while (rb_node) {

struct vm_area_struct *tmp;

tmp = rb_entry(rb_node, struct vm_area_struct, vm_rb);

if (tmp->vm_end > addr) {

vma = tmp;

if (tmp->vm_start <= addr)

break;

rb_node = rb_node->rb_left;

} else

rb_node = rb_node->rb_right;

}

//将找到的地址区间缓存到current->vmacache.vmas[],方便下次快速查找

if (vma)

vmacache_update(addr, vma);

return vma;

}3.2 地址区间合并

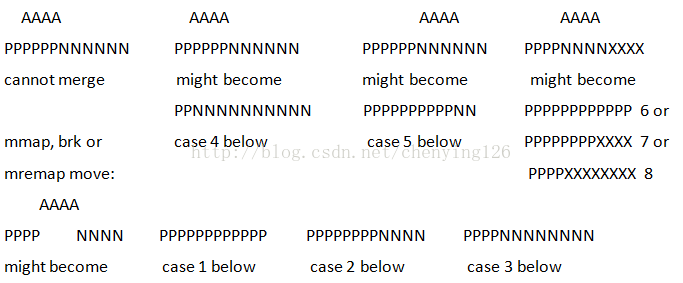

下图来至于源码注释,它描述了8中可以合并的场景。可合并的条件是:1),可以组成地址连续的区间;2),两个地址区间的各种属性相同。

映射请求 (addr,end,vm_flags,file,pgoff),函数vma_merge用于机算这个请求是否可以和它的前面或者后面或者两者合并。

struct vm_area_struct *vma_merge(struct mm_struct *mm,

struct vm_area_struct *prev, unsigned long addr,

unsigned long end, unsigned long vm_flags,

struct anon_vma *anon_vma, struct file *file,

pgoff_t pgoff, struct mempolicy *policy,

struct vm_userfaultfd_ctx vm_userfaultfd_ctx)

{

pgoff_t pglen = (end - addr) >> PAGE_SHIFT;

struct vm_area_struct *area, *next;

int err;

……

if (prev)

next = prev->vm_next;

else

next = mm->mmap;

area = next;

if (area && area->vm_end == end)//映射请求包含next vma的场景,这属于前面cases 6, 7, 8

next = next->vm_next;

/*检查看是否可以和它的前一个区间合并,合并条件是1),可以组成地址连续的区间;2),两个地址区间的各种属性相同。*/

if (prev && prev->vm_end == addr &&

mpol_equal(vma_policy(prev), policy) &&

can_vma_merge_after(prev, vm_flags,

anon_vma, file, pgoff,

vm_userfaultfd_ctx)) {

//到这里说明可以和前面的地址区间合并,再检查是否页可以和后一个地址区间合并

if (next && end == next->vm_start &&

mpol_equal(policy, vma_policy(next)) &&

can_vma_merge_before(next, vm_flags,

anon_vma, file,

pgoff+pglen,

vm_userfaultfd_ctx) &&

is_mergeable_anon_vma(prev->anon_vma,

next->anon_vma, NULL)) {

/*程序走到这里说明可以和前后两个区间合并,这属于前面的 cases 1, 6.

函数__vma_adjust 做实际的合并工作,做一些链表的删除与插入操作*/

err = __vma_adjust(prev, prev->vm_start,

next->vm_end, prev->vm_pgoff, NULL,

prev);

} else//只与前一个区间合并这属于 cases 2, 5, 7

err = __vma_adjust(prev, prev->vm_start,

end, prev->vm_pgoff, NULL, prev);

if (err)

return NULL;

khugepaged_enter_vma_merge(prev, vm_flags);

return prev;

}

//下面属于request区间与next相连接的情况

if (next && end == next->vm_start &&

mpol_equal(policy, vma_policy(next)) &&

can_vma_merge_before(next, vm_flags,

anon_vma, file, pgoff+pglen,

vm_userfaultfd_ctx)) {

if (prev && addr < prev->vm_end)//属于case4,将pre缩小,并让next与request合并

err = __vma_adjust(prev, prev->vm_start,

addr, prev->vm_pgoff, NULL, next);

else { //属于 cases 3, 8 ,将next与request合并

err = __vma_adjust(area, addr, next->vm_end,

next->vm_pgoff - pglen, NULL, next);

area = next;

}

if (err)

return NULL;

return area;

}

return NULL;

}3.3 地址区间插入

将vma插入进程地址空间,

int insert_vm_struct(struct mm_struct *mm, struct vm_area_struct *vma)

{

struct vm_area_struct *prev;

struct rb_node **rb_link, *rb_parent;

//查找vma是否已经被占用,如果是就返回ENOMEM,否者就返回vma的prev, rb_link, rb_parent

if (find_vma_links(mm, vma->vm_start, vma->vm_end,

&prev, &rb_link, &rb_parent))

return -ENOMEM;

………

if (vma_is_anonymous(vma)) {

vma->vm_pgoff = vma->vm_start >> PAGE_SHIFT;

}

//将vma插入地址空间链表和vma->vm_file->f_mapping

vma_link(mm, vma, prev, rb_link, rb_parent);

return 0;

}

(vma_link---->__vma_link_file)如果是文件映射就将vma插入其对应的address_space

static void __vma_link_file(struct vm_area_struct *vma)

{

struct file *file;

file = vma->vm_file;

if (file) {

struct address_space *mapping = file->f_mapping;

……

flush_dcache_mmap_lock(mapping);

vma_interval_tree_insert(vma, &mapping->i_mmap);

flush_dcache_mmap_unlock(mapping);

}

}将vma按照地址升序插入链表mm ->mmap,以及插入红黑树mm ->mm_rb

static void

__vma_link(struct mm_struct *mm, struct vm_area_struct *vma,

struct vm_area_struct *prev, struct rb_node **rb_link,

struct rb_node *rb_parent)

{

__vma_link_list(mm, vma, prev, rb_parent);

__vma_link_rb(mm, vma, rb_link, rb_parent);

}3.4 地址区间创建

从地址空间中查找一个长度为len的空闲地址区间,如果addr不为0,就检查addr处是否已经被占用如果没有就返回addr。

unsigned long get_unmapped_area(struct file *file, unsigned long addr, unsigned long len,

unsigned long pgoff, unsigned long flags)

{

unsigned long (*get_area)(struct file *, unsigned long,

unsigned long, unsigned long, unsigned long);

……

get_area = current->mm->get_unmapped_area;

……

addr = get_area(file, addr, len, pgoff, flags); //查找空闲区间的主要工作由get_area来实现

……

}在进程启动阶段会调用arch_pick_mmap_layout初始化地址空间layout

void arch_pick_mmap_layout(struct mm_struct *mm)

{

unsigned long random_factor = 0UL;

……

if (mmap_is_legacy()) {

mm->mmap_base = TASK_UNMAPPED_BASE + random_factor;

mm->get_unmapped_area = arch_get_unmapped_area; //用于获取地址空间中一个空闲区间

} else {

mm->mmap_base = mmap_base(random_factor);

mm->get_unmapped_area = arch_get_unmapped_area_topdown;

}

}arch_get_unmapped_area---->vm_unmapped_area----> unmapped_area

unsigned long unmapped_area(struct vm_unmapped_area_info *info)

{

struct mm_struct *mm = current->mm;

struct vm_area_struct *vma;

unsigned long length, low_limit, high_limit, gap_start, gap_end;

/************对齐和一些边界检查*******************/

//如果红黑树中最大的间隙也没有请求的length大,就从highest位置查找

vma = rb_entry(mm->mm_rb.rb_node, struct vm_area_struct, vm_rb);

if (vma->rb_subtree_gap < length)

goto check_highest;

/*这里说明有足够大的间隙可以容纳length大小的请求,查看红黑数中vma左侧是否由可容纳length大小的请求*/

while (true) {

gap_end = vm_start_gap(vma);

if (gap_end >= low_limit && vma->vm_rb.rb_left) {

struct vm_area_struct *left =

rb_entry(vma->vm_rb.rb_left,

struct vm_area_struct, vm_rb);

if (left->rb_subtree_gap >= length) {

vma = left;

continue;

}

}

gap_start = vma->vm_prev ? vm_end_gap(vma->vm_prev) : 0;

check_current:

……

//查看红黑数中vma右侧是否由可容纳length大小的请求

if (vma->vm_rb.rb_right) {

struct vm_area_struct *right =

rb_entry(vma->vm_rb.rb_right,

struct vm_area_struct, vm_rb);

if (right->rb_subtree_gap >= length) {

vma = right;

continue;

}

}

//从红黑树vma的上级查找可容纳length大小的请求

while (true) {

struct rb_node *prev = &vma->vm_rb;

if (!rb_parent(prev))

goto check_highest;

vma = rb_entry(rb_parent(prev),

struct vm_area_struct, vm_rb);

if (prev == vma->vm_rb.rb_left) {

gap_start = vm_end_gap(vma->vm_prev);

gap_end = vm_start_gap(vma);

goto check_current;

}

}

}

check_highest: //如果前面的查找都没有找到就从地址空间的highest位置查找

gap_start = mm->highest_vm_end;

gap_end = ULONG_MAX; /* Only for VM_BUG_ON below */

if (gap_start > high_limit)

return -ENOMEM;

found:

if (gap_start < info->low_limit)

gap_start = info->low_limit;

gap_start += (info->align_offset - gap_start) & info->align_mask;

return gap_start;

}4. 映射的创建

4.1 mmap

系统调用mmap会调用到内核中的函数mmap_pgoff,该函数完成映射的创建

unsigned long mmap_region(struct file *file, unsigned long addr,

unsigned long len, vm_flags_t vm_flags, unsigned long pgoff,

struct list_head *uf)

{

struct mm_struct *mm = current->mm;

struct vm_area_struct *vma, *prev;

int error;

struct rb_node **rb_link, *rb_parent;

unsigned long charged = 0;

…………

//查找addr到addr + len区间中重合的映射并解除这些映射,并且返回请求区间的prev和rb_parent

while (find_vma_links(mm, addr, addr + len, &prev, &rb_link,

&rb_parent)) {

if (do_munmap(mm, addr, len, uf))

return -ENOMEM;

}

//尝试将addr到addr + len这段区间合并到现有的映射中去

vma = vma_merge(mm, prev, addr, addr + len, vm_flags,

NULL, file, pgoff, NULL, NULL_VM_UFFD_CTX);

if (vma)

goto out;

//如果没有可以合并的区间就创建一个独立的区间

vma = kmem_cache_zalloc(vm_area_cachep, GFP_KERNEL);

if (!vma) {

error = -ENOMEM;

goto unacct_error;

}

//vma的初始化

vma->vm_mm = mm;

vma->vm_start = addr;

vma->vm_end = addr + len;

vma->vm_flags = vm_flags;

vma->vm_page_prot = vm_get_page_prot(vm_flags);

vma->vm_pgoff = pgoff;

INIT_LIST_HEAD(&vma->anon_vma_chain);

if (file) {

……

vma->vm_file = get_file(file);

/*如果是文件映射就调用对应的file->f_op->mmap(file, vma),mmap特定于文件系统,如果是ext2,主要做的事就是vma->vm_ops = &generic_file_vm_ops;*/

error = call_mmap(file, vma);

if (error)

goto unmap_and_free_vma;

addr = vma->vm_start;

vm_flags = vma->vm_flags;

} else if (vm_flags & VM_SHARED) {

error = shmem_zero_setup(vma); //如果是共享映射就打开dev/zero作为vma->vm_file

if (error)

goto free_vma;

}

/*将vma添加到相关链表中:mm ->mmap,红黑树mm ->mm_rb,和address space vma->vm_file->f_mapping*/

vma_link(mm, vma, prev, rb_link, rb_parent);

file = vma->vm_file;

out:

……

vma->vm_flags |= VM_SOFTDIRTY;

vma_set_page_prot(vma);

return addr;

……

return error;

}4.2 munmap

函数munmap用于解除start到start + len区间的映射,这个区间可能横跨多个vma,这中间可能会拆分其他vma。

munmap---->vm_munmap----> do_munmap

int do_munmap(struct mm_struct *mm, unsigned long start, size_t len,

struct list_head *uf)

{

unsigned long end;

struct vm_area_struct *vma, *prev, *last;

//查找start所在的vma

vma = find_vma(mm, start);

if (!vma)

return 0;

prev = vma->vm_prev;

end = start + len;

if (vma->vm_start >= end)

return 0;

//如果start在vma中间就将vma在start处拆分,保留vma->vm_start到start区间,剩余被删除

if (start > vma->vm_start) {

int error;

error = __split_vma(mm, vma, start, 0);

if (error)

return error;

prev = vma;

}

//查找start+len,所在的vma

last = find_vma(mm, end);

//如果start+len在vma中间就在start+len处拆分vma,将start+len到last->vm_end保留其余释放

if (last && end > last->vm_start) {

int error = __split_vma(mm, last, end, 1);

if (error)

return error;

}

vma = prev ? prev->vm_next : mm->mmap;

//从mm ->mmap,红黑树mm ->mm_rb中删除

detach_vmas_to_be_unmapped(mm, vma, prev, end);

//释放页表中映射

unmap_region(mm, vma, prev, start, end);

//从vma->vm_file->f_mapping中删除

remove_vma_list(mm, vma);

return 0;

}4.3堆的管理

系统调用brk用于控制堆的增长和堆的收缩,参数brk是堆生长到的位置

SYSCALL_DEFINE1(brk, unsigned long, brk)

{

unsigned long retval;

unsigned long newbrk, oldbrk;

struct mm_struct *mm = current->mm;

struct vm_area_struct *next;

unsigned long min_brk;

bool populate;

LIST_HEAD(uf);

min_brk = mm->start_brk; //获取堆的最低位置

if (brk < min_brk)

goto out;

newbrk = PAGE_ALIGN(brk);

oldbrk = PAGE_ALIGN(mm->brk);

……

// mm->brk是堆的当前位置,如果brk小于当前位置说明要释放堆的部分空间

if (brk <= mm->brk) {

if (!do_munmap(mm, newbrk, oldbrk-newbrk, &uf)) //释放掉部分堆空间

goto set_brk;

goto out;

}

/*查找进程地址空间中堆所对应的vma的next,如果vma到next中间没有足够的空间就返回,堆是一个连续的虚拟地址区间,区间顶点由mm->brk来指定。*/

next = find_vma(mm, oldbrk);

if (next && newbrk + PAGE_SIZE > vm_start_gap(next))

goto out;

//为堆空间分配地址区间,具体后面讲解

if (do_brk(oldbrk, newbrk-oldbrk, &uf) < 0)

goto out;

set_brk:

mm->brk = brk; //更新堆的增长点

populate = newbrk > oldbrk && (mm->def_flags & VM_LOCKED) != 0;

if (populate) //如果设置了populate,就为新增地址区间分配物理内存

mm_populate(oldbrk, newbrk - oldbrk);

return brk;

out:

retval = mm->brk;

up_write(&mm->mmap_sem);

return retval;

}前面提到的函数do_brk 实际工作由do_brk_flags来完成。

static int do_brk_flags(unsigned long addr, unsigned long request, unsigned long flags, struct list_head *uf)

{

struct mm_struct *mm = current->mm;

struct vm_area_struct *vma, *prev;

unsigned long len;

struct rb_node **rb_link, *rb_parent;

pgoff_t pgoff = addr >> PAGE_SHIFT;

int error;

//检查addr到addr+len区间是不是空闲

error = get_unmapped_area(NULL, addr, len, 0, MAP_FIXED);

if (offset_in_page(error))

return error;

//查找vma是否已经被其他区间覆盖,如果是就将其释放掉,并且返回vma的prev, rb_link, rb_parent

while (find_vma_links(mm, addr, addr + len, &prev, &rb_link,

&rb_parent)) {

if (do_munmap(mm, addr, len, uf))

return -ENOMEM;

}

//尝试与其他vma合并,如果不是进程创建阶段都会合并成功,因为堆只有一个vma。

vma = vma_merge(mm, prev, addr, addr + len, flags,

NULL, NULL, pgoff, NULL, NULL_VM_UFFD_CTX);

if (vma)

goto out;

//为堆创建vma

vma = kmem_cache_zalloc(vm_area_cachep, GFP_KERNEL);

if (!vma) {

vm_unacct_memory(len >> PAGE_SHIFT);

return -ENOMEM;

}

INIT_LIST_HEAD(&vma->anon_vma_chain);

vma->vm_mm = mm;

vma->vm_start = addr;

vma->vm_end = addr + len;

vma->vm_pgoff = pgoff;

vma->vm_flags = flags;

vma->vm_page_prot = vm_get_page_prot(flags);

vma_link(mm, vma, prev, rb_link, rb_parent);

out:

perf_event_mmap(vma);

mm->total_vm += len >> PAGE_SHIFT;

mm->data_vm += len >> PAGE_SHIFT;

if (flags & VM_LOCKED)

mm->locked_vm += (len >> PAGE_SHIFT);

vma->vm_flags |= VM_SOFTDIRTY;

return 0;

}5. 缺页异常

5.1 缺页异常流程

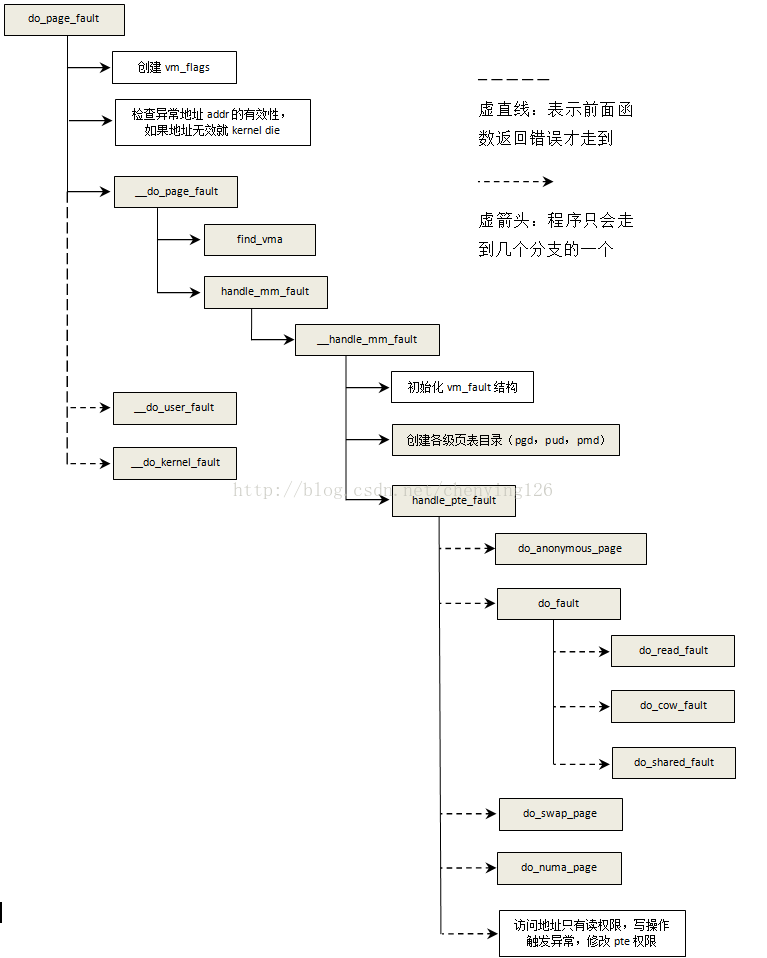

程序访问一个地址addr,但是在页表中没有找到,就会触发一个数据异常,这有两种情况:1)程序逻辑错误导致访问非法地址,这种情况会导致进程crash(异常发生在用户空间),也可能导致kernel panic(异常发生在内核空间);2)程序已经分配了相应的虚拟地址但是没有映射对应的物理页或者页已经交换出去。第一种情况在以后讲内核调试的时候再去详细分析其流程,这里只讲第二种情况。

这个流程图就不细讲了,后面直接到代码中去看起实现的主要逻辑。在进一步讲解之前先熟悉一个结构vm_fault,这个结构里面用于临时保存缺页异常的所有信息。

| struct vm_fault |

|

| struct vm_area_struct *vma |

触发异常的地址addr所属的vma |

| unsigned int flags |

包含FAULT_FLAG_xxx flags |

| gfp_t gfp_mask |

用于控制异常过程中的内存分配 |

| pgoff_t pgoff |

Addr在vma中的page offset |

| unsigned long address |

触发异常的地址 |

| pmd_t *pmd |

异常地址所在的pmd |

| pud_t *pud |

异常地址所在的pud |

| pte_t orig_pte |

异常时候的pte |

| struct page *cow_page |

写时复制用于映射到pte中的页 |

| struct page *page |

vma->vm_ops->fault返回的页 |

| pte_t *pte |

异常之后的pte |

| spinlock_t *ptl |

页表锁,操作页表的时候避免竞争 |

static int __handle_mm_fault(struct vm_area_struct *vma, unsigned long address,

unsigned int flags)

{

struct vm_fault vmf = { //暂存异常过程中的信息

.vma = vma,

.address = address & PAGE_MASK,

.flags = flags,

.pgoff = linear_page_index(vma, address),

.gfp_mask = __get_fault_gfp_mask(vma),

};

struct mm_struct *mm = vma->vm_mm;

pgd_t *pgd;

p4d_t *p4d;

int ret;

pgd = pgd_offset(mm, address); //获取pgd

p4d = p4d_alloc(mm, pgd, address); //我们只考虑三级页表的情况,这里的p4d就是pgd

if (!p4d)

return VM_FAULT_OOM;

vmf.pud = pud_alloc(mm, p4d, address); //在三级页表的系统中pud就是pgd

if (!vmf.pud)

return VM_FAULT_OOM;

......

vmf.pmd = pmd_alloc(mm, vmf.pud, address); //如果pmd在pud中不存在就分配一个,并且写到pud中

if (!vmf.pmd)

return VM_FAULT_OOM;

......

//前面都是为了构建页表路径,函数handle_pte_fault完成page fault的主要工作

return handle_pte_fault(&vmf);

}

static int handle_pte_fault(struct vm_fault *vmf)

{

pte_t entry;

if (unlikely(pmd_none(*vmf->pmd))) {

vmf->pte = NULL;

} else {

if (pmd_devmap_trans_unstable(vmf->pmd))

return 0;

vmf->pte = pte_offset_map(vmf->pmd, vmf->address); //获取pte在pmd中的entry地址

vmf->orig_pte = *vmf->pte; //获取pte本身的地址,这可能为NULL

}

if (!vmf->pte) { // vmf->pte为NULL表明没有对应的物理页

if (vma_is_anonymous(vmf->vma))

//如果是匿名映射就调用函数do_anonymous_page分配匿名页,匿名映射后面细讲

return do_anonymous_page(vmf);

else//如果是文件映射,就走这里

return do_fault(vmf);

}

/*程序走到这里说明pte是存在的,下面就是处理这种pte存在却仍然触发异常的情况的*/

if (!pte_present(vmf->orig_pte))

/*如果页被交换出去了,就调用函数do_swap_page将页从交换缓存或者交换分区中取出来,页的交换后面有专门的文章讲解,这里就不细讲了*/

return do_swap_page(vmf);

//如果pte是invalid的,这里使其重新valid

if (pte_protnone(vmf->orig_pte) && vma_is_accessible(vmf->vma))

return do_numa_page(vmf);

//下面处理的就是pte只读的,但是有写访问,触发异常的情况

vmf->ptl = pte_lockptr(vmf->vma->vm_mm, vmf->pmd);

spin_lock(vmf->ptl);

entry = vmf->orig_pte;

if (unlikely(!pte_same(*vmf->pte, entry)))

goto unlock;

if (vmf->flags & FAULT_FLAG_WRITE) {

if (!pte_write(entry))

return do_wp_page(vmf);

entry = pte_mkdirty(entry);

}

entry = pte_mkyoung(entry);

if (ptep_set_access_flags(vmf->vma, vmf->address, vmf->pte, entry,

vmf->flags & FAULT_FLAG_WRITE)) {

update_mmu_cache(vmf->vma, vmf->address, vmf->pte);

} else {

if (vmf->flags & FAULT_FLAG_WRITE)

flush_tlb_fix_spurious_fault(vmf->vma, vmf->address);

}

unlock:

pte_unmap_unlock(vmf->pte, vmf->ptl);

return 0;

}

5.2匿名映射

所有的映射区间都会按地址升序排列在mm_struct中,在进程这个层面来讲没有匿名映射或者文件映射的区分。因为文件映射的数据来源于文件,匿名映射数据是运行时产生,在底层处理中他们有区别,所以将映射分为匿名映射和文件映射,并提供一些各自的附加结构用于管理。堆、栈、私有映射、和没有提供fd的mmap映射都属于匿名映射。

static int do_anonymous_page(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

struct mem_cgroup *memcg;

struct page *page;

pte_t entry;

//分配pte页表,并且写到pmd对应entry

if (pte_alloc(vma->vm_mm, vmf->pmd, vmf->address))

return VM_FAULT_OOM;

//如果是读触发的缺页异常就将0页写到pte中

if (!(vmf->flags & FAULT_FLAG_WRITE) &&

!mm_forbids_zeropage(vma->vm_mm)) {

entry = pte_mkspecial(pfn_pte(my_zero_pfn(vmf->address),

vma->vm_page_prot));

vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd,

vmf->address, &vmf->ptl);

if (!pte_none(*vmf->pte))

goto unlock;

/* Deliver the page fault to userland, check inside PT lock */

if (userfaultfd_missing(vma)) {

pte_unmap_unlock(vmf->pte, vmf->ptl);

return handle_userfault(vmf, VM_UFFD_MISSING);

}

goto setpte;

}

//准备匿名映射自有的相关结构,anon_vma和anon_vma_chain,用于反向映射,后面细讲

if (unlikely(anon_vma_prepare(vma)))

goto oom;

//分配一个物理页

page = alloc_zeroed_user_highpage_movable(vma, vmf->address);

if (!page)

goto oom;

//标识页是更新了的

__SetPageUptodate(page);

//更具page物理地址和prot创建pte

entry = mk_pte(page, vma->vm_page_prot);

if (vma->vm_flags & VM_WRITE)

entry = pte_mkwrite(pte_mkdirty(entry));

//锁定页表,避免被其他地方修改

vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd, vmf->address,

&vmf->ptl);

//将页到添加反向映射,后面细讲

page_add_new_anon_rmap(page, vma, vmf->address, false);

//锁定页并且添加到unevictable_list中

lru_cache_add_active_or_unevictable(page, vma);

setpte:

set_pte_at(vma->vm_mm, vmf->address, vmf->pte, entry);

………

}

5.3文件映射

文件映射包含读触发的异常、写时复制异常、访问共享页触发异常

static int do_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

int ret;

if (!vma->vm_ops->fault)

ret = VM_FAULT_SIGBUS;

else if (!(vmf->flags & FAULT_FLAG_WRITE))

ret = do_read_fault(vmf); //读触发的异常

else if (!(vma->vm_flags & VM_SHARED))

ret = do_cow_fault(vmf);//写时复制触发异常

else

ret = do_shared_fault(vmf); //写异常和访问共享页触发异常

…….

return ret;

}5.3.1读异常

static int do_read_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

int ret = 0;

if (vma->vm_ops->map_pages && fault_around_bytes >> PAGE_SHIFT > 1) {

ret = do_fault_around(vmf); //将异常地址周围几页都读入内存

if (ret)

return ret;

}

/*调用vma->vm_ops->fault(vmf); (默认filemap_fault),分配一个新页或者在页缓存中查找相关页并且将文件中对应页读到页中,让vmf->page指向新的页*/

ret = __do_fault(vmf);

…….

ret |= finish_fault(vmf);//将vmf->page写到pte中完成物理页到虚拟地址的映射

………

return ret;

}5.3.2写时复制

static int do_cow_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

int ret;

if (unlikely(anon_vma_prepare(vma))) //准备匿名映射自有的相关结构,anon_vma和anon_vma_chain

return VM_FAULT_OOM;

/*之前页是共享的,现在页要分开了,分配一个页用于拷贝之前共享页的内容,比如进程创建子进程之后就会将页设置为只读,如果发生写请求就会触发写时复制*/

vmf->cow_page = alloc_page_vma(GFP_HIGHUSER_MOVABLE, vma, vmf->address);

// 分配一个新页或者到页缓存中查找对应页vmf->page并且将文件中对应页读到新页中

ret = __do_fault(vmf);

//将共享页内容拷贝到vmf->cow_page

copy_user_highpage(vmf->cow_page, vmf->page, vmf->address, vma);

__SetPageUptodate(vmf->cow_page); //设置页已经被更新标识

//将vmf->cow_page(与前面vmf->page不同)写到pte中完成物理页到虚拟地址的映射。

ret |= finish_fault(vmf);

………

return ret;

}5.3.3共享映射

static int do_shared_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

int ret, tmp;

//调用shmem_fault去find page in cache, or get from swap, or allocate

ret = __do_fault(vmf);

if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))

return ret;

if (vma->vm_ops->page_mkwrite) {//通知之前只读的页将要变为可写

unlock_page(vmf->page);

tmp = do_page_mkwrite(vmf);

……

}

//将vmf->page写到pte中完成物理页到虚拟地址的映射

ret |= finish_fault(vmf);

……

fault_dirty_shared_page(vma, vmf->page);

return ret;

}6. 反向映射

通常情况下的映射是从虚拟地址找到对应的物理页。反向映射是更具物理页找到映射了该页的所有虚拟地址区间的,有些页是被多个进程映射到自己地址空间的,根据反向映射可以找到所有进程中对应的虚拟地址区间vma。

6.1 文件映射页

用于文件映射的页建立反向映射的结构关系如下:

add_to_page_cache_lru ---->__add_to_page_cache_locked

static int __add_to_page_cache_locked(struct page *page,

struct address_space *mapping,

pgoff_t offset, gfp_t gfp_mask,

void **shadowp)

{

......

get_page(page);

page->mapping = mapping; //设置address_space到page->mapping

page->index = offset;

spin_lock_irq(&mapping->tree_lock);

error = page_cache_tree_insert(mapping, page, shadowp); //将页插入到页缓存中

......

return error;

}反向映射过程:1)根据page->mapping找到address_space;2)根据page->index找到address_space-> page_tree中所有的映射了该偏移位置的vma。3)从这些vma对应的页表中去查找映射了该page的vma

6.2匿名页

下面是匿名页建立反向映射所需结构之间的关系:

前面提到函数anon_vma_prepare用于准备匿名映射相关结构:

anon_vma_prepare---->__anon_vma_prepare

int __anon_vma_prepare(struct vm_area_struct *vma)

{

struct mm_struct *mm = vma->vm_mm;

struct anon_vma *anon_vma, *allocated;

struct anon_vma_chain *avc;

//分配avc用于链接vma和anon_vma

avc = anon_vma_chain_alloc(GFP_KERNEL);

if (!avc)

goto out_enomem;

//每个vma都有一个其对应的anon_vma,这里先尝试与相邻的anon_vma合并,如果不成功就分配

anon_vma = find_mergeable_anon_vma(vma);

allocated = NULL;

if (!anon_vma) {

anon_vma = anon_vma_alloc();

if (unlikely(!anon_vma))

goto out_enomem_free_avc;

allocated = anon_vma;

}

if (likely(!vma->anon_vma)) {

vma->anon_vma = anon_vma;

//让avc连接vma和anon_vma,并且将avc加入到其对应的anon_vma红黑树中

anon_vma_chain_link(vma, avc, anon_vma);

anon_vma->degree++;

allocated = NULL;

avc = NULL;

}

}static void anon_vma_chain_link(struct vm_area_struct *vma,

struct anon_vma_chain *avc,

struct anon_vma *anon_vma)

{

avc->vma = vma;

avc->anon_vma = anon_vma;

list_add(&avc->same_vma, &vma->anon_vma_chain); //avc添加到vma->anon_vma_chain

anon_vma_interval_tree_insert(avc, &anon_vma->rb_root);//将avc加入到其对应的anon_vma红黑树中

}函数page_add_new_anon_rmap用于建立反向映射:

page_add_new_anon_rmap ---->__page_set_anon_rmap

static void __page_set_anon_rmap(struct page *page,

struct vm_area_struct *vma, unsigned long address, int exclusive)

{

struct anon_vma *anon_vma = vma->anon_vma;

if (PageAnon(page))

return;

if (!exclusive)//如果页不是当前进程独享的,也即共享页,就用根anon_vma而不用当前区间anon_vma

anon_vma = anon_vma->root;

//让page->mapping指向anon_vma,并用最低位表示为匿名映射

anon_vma = (void *) anon_vma + PAGE_MAPPING_ANON;

page->mapping = (struct address_space *) anon_vma;

page->index = linear_page_index(vma, address);

}匿名页反向映射流程:1)根据page->mapping找到其所属的anon_vma;2)根据anon_vma->rb_root找到映射了页所属地址区间的所有vma;3)从这些vma对应的页表中去查找映射了该page的vma