Sqoop Installation, Import, and Export

Overview

Latest stable release is 1.4.7 (download, documentation). Latest cut of Sqoop2 is 1.99.7 (download, documentation). Note that 1.99.7 is not compatible with 1.4.7 and not feature complete, it is not intended for production deployment.

In other words: 1.99.7 is not suitable for production environments, so use version 1.4.7 for now.

Download

1.4.7 download link

Extract

sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

MySQL driver configuration

Place the MySQL JDBC driver jar in Sqoop's lib directory:

D:\Soft\sqoop-1.4.7.bin__hadoop-2.6.0\lib\mysql-connector-java-5.1.30-bin.jar

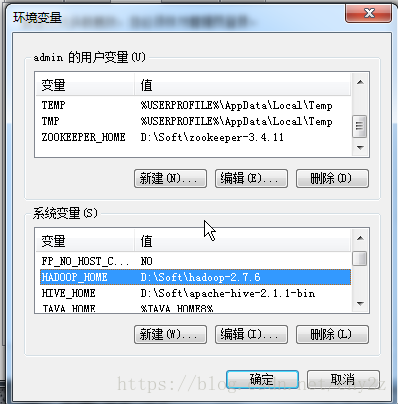
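
Before running any Sqoop command, it can help to confirm that the driver jar really is in the lib directory. The following is a minimal Python sketch, not part of Sqoop itself; the function name `find_mysql_driver_jars` is made up for illustration, and the file name pattern assumes the driver follows the `mysql-connector-java-*.jar` naming used above.

```python
from pathlib import Path


def find_mysql_driver_jars(sqoop_home):
    """Return the names of MySQL connector jars found under <sqoop_home>/lib."""
    lib_dir = Path(sqoop_home) / "lib"
    if not lib_dir.is_dir():
        return []
    # Matches names such as mysql-connector-java-5.1.30-bin.jar
    return sorted(p.name for p in lib_dir.glob("mysql-connector-java-*.jar"))


if __name__ == "__main__":
    # Install path taken from this post; adjust it to your own location.
    jars = find_mysql_driver_jars(r"D:\Soft\sqoop-1.4.7.bin__hadoop-2.6.0")
    print(jars or "no MySQL driver jar found in lib")
```

An empty result suggests the jar was not copied, or was copied under a different name than the pattern expects.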

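Environment variable configuration

Option 1: Set the variables as system environment variables.

Option 2: Copy \conf\sqoop-env-template.cmd to \conf\sqoop-env.cmd and set the variables there.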

:: Set Hadoop-specific environment variables here.

::Set path to where bin/hadoop is available

::set HADOOP_COMMON_HOME=

::Set path to where hadoop-*-core.jar is available

::set HADOOP_MAPRED_HOME=

::set the path to where bin/hbase is available

::set HBASE_HOME=

::Set the path to where bin/hive is available

::set HIVE_HOME=

::Set the path for where zookeper config dir is

::set ZOOCFGDIR=

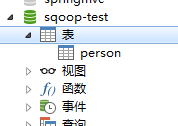
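
Whichever option is used, a quick check of which variables are actually set can save a failed run. The sketch below is illustrative only: the variable names come from the template above, but the split into `REQUIRED` and `OPTIONAL` is an assumption (the Hadoop paths are treated as mandatory, the rest as needed only for the matching integration), and `unset_variables` is a hypothetical helper.

```python
import os

# Names taken from sqoop-env-template.cmd. Treating the two Hadoop paths as
# required and the others as optional is an assumption made for this sketch.
REQUIRED = ("HADOOP_COMMON_HOME", "HADOOP_MAPRED_HOME")
OPTIONAL = ("HBASE_HOME", "HIVE_HOME", "ZOOCFGDIR")


def unset_variables(names, env=None):
    """Return the names that are missing or empty in the given environment."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


if __name__ == "__main__":
    print("required but unset:", unset_variables(REQUIRED))
    print("optional and unset:", unset_variables(OPTIONAL))
```

Note that this only sees variables set in the system environment (option 1); values set inside sqoop-env.cmd are applied by the Sqoop launcher and are not visible to a separate Python process.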

MySQL database

Connect to MySQL and list the databases

sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password wmzycn

Connect to MySQL and list the tables

sqoop list-tables --connect jdbc:mysql://localhost:3306/sqoop-test --username root --password wmzycn

Import data into HDFS

sqoop import --connect jdbc:mysql://localhost:3306/sqoop-test --username root --password wmzycn --table person --m 1 --target-dir /sqoop/import

D:\Soft\sqoop-1.4.7.bin__hadoop-2.6.0\bin>sqoop import --connect jdbc:mysql://localhost:3306/sqoop-test --username root --password wmzycn --table person --m 1 --target-dir /sqoop/import

Warning: HBASE_HOME and HBASE_VERSION not set.

Warning: HCAT_HOME not set

Warning: HCATALOG_HOME does not exist HCatalog imports will fail.

Please set HCATALOG_HOME to the root of your HCatalog installation.

Warning: ACCUMULO_HOME not set.

Warning: HBASE_HOME does not exist HBase imports will fail.

Please set HBASE_HOME to the root of your HBase installation.

Warning: ACCUMULO_HOME does not exist Accumulo imports will fail.

Please set ACCUMULO_HOME to the root of your Accumulo installation.

18/07/12 15:37:49 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/07/12 15:37:49 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/07/12 15:37:50 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/07/12 15:37:50 INFO tool.CodeGenTool: Beginning code generation

18/07/12 15:37:50 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `person` AS t LIMIT 1

18/07/12 15:37:50 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `person` AS t LIMIT 1

18/07/12 15:37:50 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is D:\Soft\hadoop-2.7.6

Note: \tmp\sqoop-admin\compile\e1c43c4f3d5d4ce4a76825d2a087c651\person.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/07/12 15:37:54 INFO orm.CompilationManager: Writing jar file: \tmp\sqoop-admin\compile\e1c43c4f3d5d4ce4a76825d2a087c651\person.jar

18/07/12 15:37:54 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/07/12 15:37:54 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/07/12 15:37:54 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/07/12 15:37:54 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/07/12 15:37:54 INFO mapreduce.ImportJobBase: Beginning import of person

18/07/12 15:37:55 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/07/12 15:37:55 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

18/07/12 15:37:55 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

18/07/12 15:37:55 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

18/07/12 15:38:03 INFO db.DBInputFormat: Using read commited transaction isolation

18/07/12 15:38:03 INFO mapreduce.JobSubmitter: number of splits:1

18/07/12 15:38:03 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local636586141_0001
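
The log above warns that putting the password on the command line is insecure and suggests -P, which makes Sqoop prompt for it instead. As a rough sketch, the same import command can be assembled in Python without the password; `build_sqoop_command` is a hypothetical helper, and the connection string, table, and target directory are simply the ones used in this post. The sketch uses -m, the documented short form of --num-mappers.

```python
def build_sqoop_command(tool, connect, username, extra=()):
    """Build an argument list for `sqoop <tool>` that prompts for the password (-P)."""
    args = ["sqoop", tool, "--connect", connect, "--username", username, "-P"]
    args.extend(extra)
    return args


import_cmd = build_sqoop_command(
    "import",
    "jdbc:mysql://localhost:3306/sqoop-test",
    "root",
    extra=["--table", "person", "-m", "1", "--target-dir", "/sqoop/import"],
)

# Print the command so it can be pasted into the console.
print(" ".join(import_cmd))
```

The sketch only prints the command. Running it from Python on Windows would additionally require pointing at the sqoop.cmd launcher, which is left out here.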

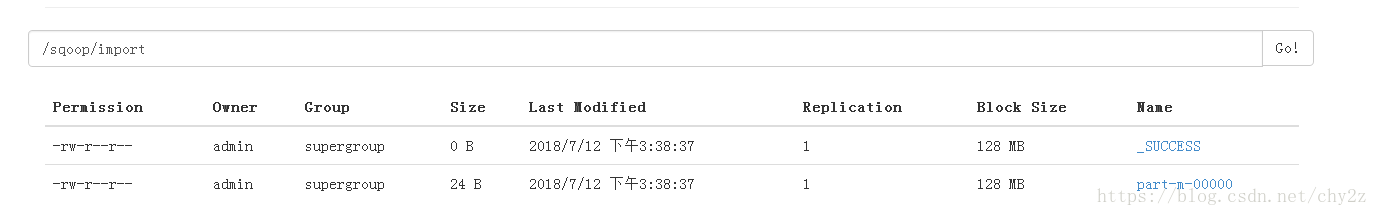

Import result

Export data to MySQL

sqoop export --connect jdbc:mysql://localhost:3306/sqoop-test --username root --password wmzycn --table person1 --m 1 --export-dir /sqoop/import

D:\Soft\sqoop-1.4.7.bin__hadoop-2.6.0\bin>sqoop export --connect jdbc:mysql://localhost:3306/sqoop-test --username root --password wmzycn --table person1 --m 1 --export-dir /sqoop/import

Warning: HBASE_HOME and HBASE_VERSION not set.

Warning: HCAT_HOME not set

Warning: HCATALOG_HOME does not exist HCatalog imports will fail.

Please set HCATALOG_HOME to the root of your HCatalog installation.

Warning: ACCUMULO_HOME not set.

Warning: HBASE_HOME does not exist HBase imports will fail.

Please set HBASE_HOME to the root of your HBase installation.

Warning: ACCUMULO_HOME does not exist Accumulo imports will fail.

Please set ACCUMULO_HOME to the root of your Accumulo installation.

18/07/12 16:45:33 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/07/12 16:45:33 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/07/12 16:45:33 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/07/12 16:45:33 INFO tool.CodeGenTool: Beginning code generation

18/07/12 16:45:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `person1` AS t LIMIT 1

18/07/12 16:45:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `person1` AS t LIMIT 1

18/07/12 16:45:34 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is D:\Soft\hadoop-2.7.6

Note: \tmp\sqoop-admin\compile\9599aa5cf923aa1f55607480ebcf7ae5\person1.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/07/12 16:45:36 INFO orm.CompilationManager: Writing jar file: \tmp\sqoop-admin\compile\9599aa5cf923aa1f55607480ebcf7ae5\person1.jar

18/07/12 16:45:36 INFO mapreduce.ExportJobBase: Beginning export of person1

18/07/12 16:45:36 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/07/12 16:45:37 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

18/07/12 16:45:37 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

18/07/12 16:45:37 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

18/07/12 16:45:37 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

18/07/12 16:45:37 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

18/07/12 16:45:46 INFO input.FileInputFormat: Total input paths to process : 1

18/07/12 16:45:46 INFO input.FileInputFormat: Total input paths to process : 1

18/07/12 16:45:46 INFO mapreduce.JobSubmitter: number of splits:1

18/07/12 16:45:46 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local384039619_0001

18/07/12 16:46:27 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

18/07/12 16:46:27 INFO mapreduce.Job: Running job: job_local384039619_0001

18/07/12 16:46:27 INFO mapred.LocalJobRunner: OutputCommitter set in config null

18/07/12 16:46:27 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.sqoop.mapreduce.NullOutputCommitter

18/07/12 16:46:27 INFO mapred.LocalJobRunner: Waiting for map tasks

18/07/12 16:46:27 INFO mapred.LocalJobRunner: Starting task: attempt_local384039619_0001_m_000000_0

18/07/12 16:46:27 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

18/07/12 16:46:27 INFO mapred.Task: Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@342b9f39

18/07/12 16:46:27 INFO mapred.MapTask: Processing split: Paths:/sqoop/import/part-m-00000:0+24

18/07/12 16:46:27 INFO Configuration.deprecation: map.input.file is deprecated. Instead, use mapreduce.map.input.file

18/07/12 16:46:27 INFO Configuration.deprecation: map.input.start is deprecated. Instead, use mapreduce.map.input.start

18/07/12 16:46:27 INFO Configuration.deprecation: map.input.length is deprecated. Instead, use mapreduce.map.input.length

18/07/12 16:46:27 INFO mapreduce.AutoProgressMapper: Auto-progress thread is finished. keepGoing=false

18/07/12 16:46:27 INFO mapred.LocalJobRunner:

18/07/12 16:46:27 INFO mapred.Task: Task:attempt_local384039619_0001_m_000000_0 is done. And is in the process of committing

18/07/12 16:46:27 INFO mapred.LocalJobRunner: map

18/07/12 16:46:27 INFO mapred.Task: Task 'attempt_local384039619_0001_m_000000_0' done.

18/07/12 16:46:27 INFO mapred.Task: Final Counters for attempt_local384039619_0001_m_000000_0: Counters: 20

File System Counters

FILE: Number of bytes read=19191645

FILE: Number of bytes written=19674868

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=30

HDFS: Number of bytes written=0

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=128

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=319815680

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

18/07/12 16:46:27 INFO mapred.LocalJobRunner: Finishing task: attempt_local384039619_0001_m_000000_0

18/07/12 16:46:27 INFO mapred.LocalJobRunner: map task executor complete.

18/07/12 16:46:28 INFO mapreduce.Job: Job job_local384039619_0001 running in uber mode : false

18/07/12 16:46:28 INFO mapreduce.Job: map 100% reduce 0%

18/07/12 16:46:28 INFO mapreduce.Job: Job job_local384039619_0001 completed successfully

18/07/12 16:46:28 INFO mapreduce.Job: Counters: 20

File System Counters

FILE: Number of bytes read=19191645

FILE: Number of bytes written=19674868

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=30

HDFS: Number of bytes written=0

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=128

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=319815680

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

18/07/12 16:46:28 INFO mapreduce.ExportJobBase: Transferred 30 bytes in 50.4022 seconds (0.5952 bytes/sec)

18/07/12 16:46:28 INFO mapreduce.ExportJobBase: Exported 2 records.
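
The last two lines of the log are the ones worth checking after an export: bytes transferred, elapsed time, and the number of records exported. A small Python sketch for pulling those values out of a saved log might look like the following. The regular expressions only cover the two line shapes shown above, and `summarize` is a name invented for this example.

```python
import re

# Patterns written against the two ExportJobBase lines shown in this post.
TRANSFER_RE = re.compile(r"Transferred (\d+) bytes in ([\d.]+) seconds")
RECORDS_RE = re.compile(r"Exported (\d+) records")


def summarize(log_text):
    """Extract bytes, seconds, and record count from Sqoop export log text."""
    summary = {}
    for line in log_text.splitlines():
        match = TRANSFER_RE.search(line)
        if match:
            summary["bytes"] = int(match.group(1))
            summary["seconds"] = float(match.group(2))
        match = RECORDS_RE.search(line)
        if match:
            summary["records"] = int(match.group(1))
    return summary


sample = """\
18/07/12 16:46:28 INFO mapreduce.ExportJobBase: Transferred 30 bytes in 50.4022 seconds (0.5952 bytes/sec)
18/07/12 16:46:28 INFO mapreduce.ExportJobBase: Exported 2 records.
"""
print(summarize(sample))
```

Comparing the record count with the row count of the source data is a simple sanity check; here it matches the "Map input records=2" counter earlier in the log.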