支持向量机SVM③——通过4种核函数进行波斯顿房价回归预测

文章目录:

导入库和数据

数据预处理

模型训练和评估

一、导入库和数据

本文采用dataset自带的Boston房价数据集,进行回归预测

# 导入库

from sklearn.datasets import load_boston

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.svm import LinearSVR

from sklearn import metrics

from sklearn.model_selection import GridSearchCV

from sklearn.svm import SVR

import seaborn as sns

import matplotlib.pyplot as plt

# 导入数据集

boston = load_boston()

data = boston.data

target = boston.target

二、数据预处理

先用train_test_split切割出70%的训练集和30%的测试集。由于该数据集各样本取值范围差异很大,直接将数据输入到SVM中的话,学习将会变得困难且容易被噪声干扰。解决方法是对每个特征做标准化或归一化或正则化,本次采用sklearn库自带的z_值标准化

# 数据预处理

train_data,test_data,train_target,test_target = train_test_split(data,target,test_size=0.3)

Stand_X = StandardScaler() # 特征进行标准化

Stand_Y = StandardScaler() # 标签也是数值,也需要进行标准化

train_data = Stand_X.fit_transform(train_data)

test_data = Stand_X.transform(test_data)

train_target = Stand_Y.fit_transform(train_target.reshape(-1,1)) # reshape(-1,1)指将它转化为1列,行自动确定

test_target = Stand_Y.transform(test_target.reshape(-1,1))

三、模型训练和评估

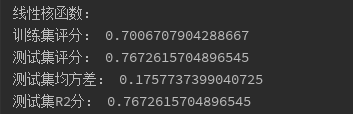

# ① 线性核函数

clf = LinearSVR(C=2)

clf.fit(train_data,train_target)

y_pred = clf.predict(test_data)

print("线性核函数:")

print("训练集评分:", clf.score(train_data,train_target))

print("测试集评分:", clf.score(test_data,test_target))

print("测试集均方差:",metrics.mean_squared_error(test_target,y_pred.reshape(-1,1)))

print("测试集R2分:",metrics.r2_score(test_target,y_pred.reshape(-1,1)))

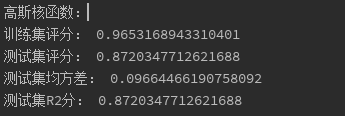

# ② 高斯核函数

clf = SVR(kernel='rbf',C=10,gamma=0.1,coef0=0.1)

clf.fit(train_data,train_target)

y_pred = clf.predict(test_data)

print("高斯核函数:")

print("训练集评分:", clf.score(train_data,train_target))

print("测试集评分:", clf.score(test_data,test_target))

print("测试集均方差:",metrics.mean_squared_error(test_target,y_pred.reshape(-1,1)))

print("测试集R2分:",metrics.r2_score(test_target,y_pred.reshape(-1,1)))

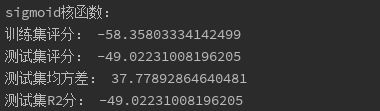

# ③ sigmoid核函数

clf = SVR(kernel='sigmoid',C=2)

clf.fit(train_data,train_target)

y_pred = clf.predict(test_data)

print("sigmoid核函数:")

print("训练集评分:", clf.score(train_data,train_target))

print("测试集评分:", clf.score(test_data,test_target))

print("测试集均方差:",metrics.mean_squared_error(test_target,y_pred.reshape(-1,1)))

print("测试集R2分:",metrics.r2_score(test_target,y_pred.reshape(-1,1)))

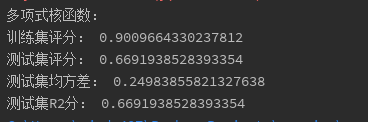

# ④ 多项式核函数

clf = SVR(kernel='poly',C=2)

clf.fit(train_data,train_target)

y_pred = clf.predict(test_data)

print("多项式核函数:")

print("训练集评分:", clf.score(train_data,train_target))

print("测试集评分:", clf.score(test_data,test_target))

print("测试集均方差:",metrics.mean_squared_error(test_target,y_pred.reshape(-1,1)))

print("测试集R2分:",metrics.r2_score(test_target,y_pred.reshape(-1,1)))

从结果上看,模型评分:高斯> 多项式>线性>sigmoid

因为SVM对参数十分敏感,不同的参数会导致模型的评分差距非常大,因此用GridSearchCV进行调参,看下是否高斯的得分依然最高

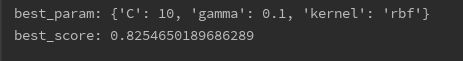

# 调参

clf = GridSearchCV(SVR(),param_grid={'kernel':['poly','sigmoid','rbf'],'C': [0.1,1,10],'gamma': [0.1,1,10]},cv=5)

clf.fit(train_data,train_target)

print("best_param:",clf.best_params_)

print("best_score:", clf.best_score_)