Hive in Practice (4): A Summary of Hive Syntax

Commonly used Hive syntax:

1. Creating tables in Hive

#Create a table named cwqsolo with two columns, id and name, whose fields are separated by ','. In the DDL the comma is written as the octal escape '\054' (ASCII code 44):

CREATE TABLE cwqsolo (id INT, name STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\054';
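The escape '\054' is an octal character code: 54 in base 8 is 44, which is the ASCII code of the comma. The plain-Python sketch below (not Hive itself) checks that arithmetic and shows how one line of a file stored in this table would be split into the id and name columns. The helper name split_row and the sample line are hypothetical.

```python
# '\054' in the DDL is an octal escape: 0o54 == 44, the ASCII code of ','
FIELD_DELIMITER = chr(0o54)

def split_row(line: str) -> tuple[int, str]:
    """Split one text line into (id, name), mimicking FIELDS TERMINATED BY ','.

    Assumes exactly two fields per line, which keeps the sketch short.
    """
    id_text, name = line.rstrip("\n").split(FIELD_DELIMITER)
    return int(id_text), name

print(0o54, repr(FIELD_DELIMITER))  # prints: 44 ','
print(split_row("1001,cwq\n"))      # prints: (1001, 'cwq')
```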

Creating a partitioned table (internal table)

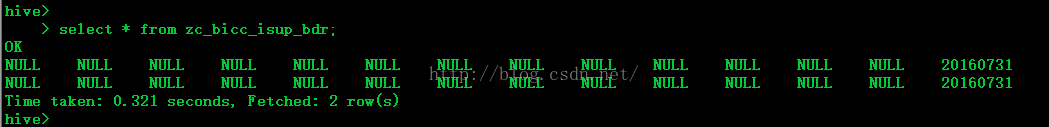

create table zc_bicc_isup_bdr (
  opc int,
  dpc int,
  calling string,
  calling_add_ind int,
  called string,
  ori_called string,
  start_time timestamp,
  alert_time int,
  talk_time int,
  call_result int,
  rel_cause int,
  rel_dir int,
  gen_num string)
partitioned by (ptDate string)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ',';

Creating a partitioned table (external table)

2. Modifying the table structure

Modifying table columns

#Rename the column id to myid and change its type to STRING

ALTER TABLE cwqsolo CHANGE id myid String;

#Add a column named sex (gender)

ALTER TABLE cwqsolo ADD COLUMNS ( sex STRING COMMENT 'sex type');
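Both statements change only the table's metadata; Hive does not rewrite the existing data files, and each line is interpreted against the current schema when it is read. The plain-Python sketch below models that schema-on-read behaviour (it is not Hive's SerDe): a line written before sex was added has only two fields, so the missing trailing column comes back as None, standing in for NULL. The column lists, the helper read_row and the sample line are illustrative assumptions, and all values are kept as strings for simplicity.

```python
from typing import Optional

# Column lists before and after the two ALTER TABLE statements (illustrative).
SCHEMA_BEFORE = ["id", "name"]
SCHEMA_AFTER = ["myid", "name", "sex"]

def read_row(line: str, schema: list[str]) -> dict[str, Optional[str]]:
    """Map a ','-delimited line onto the schema that is current at read time.

    Missing trailing fields become None (NULL); surplus fields are ignored.
    """
    fields = line.rstrip("\n").split(",")
    return {col: (fields[i] if i < len(fields) else None)
            for i, col in enumerate(schema)}

old_line = "1000,abc"  # hypothetical row written before the ALTER statements
print(read_row(old_line, SCHEMA_BEFORE))  # {'id': '1000', 'name': 'abc'}
print(read_row(old_line, SCHEMA_AFTER))   # {'myid': '1000', 'name': 'abc', 'sex': None}
```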

3. Loading data

You can create a text file whose fields are separated by commas and then load it with the LOAD DATA command.

First, create a text file:

[root@archive studydata]# vi test1.txt

1001,cwq,male

1101,lxj,female

Then load the file:

hive> LOAD DATA LOCAL INPATH '/home/hadoop/hive/studydata/test1.txt' INTO TABLE cwqsolo;

Loading data to table default.cwqsolo

OK

Time taken: 1.83 seconds

hive> select * from cwqsolo;

OK

1001 cwq male

1101 lxj female

Time taken: 0.173 seconds, Fetched: 2 row(s)
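LOAD DATA does not parse or convert the file: with LOCAL it copies the file from the local file system into the table's directory, and the text is only split into columns when a query reads it. The sketch below imitates that in plain Python, with a temporary directory standing in for the table's HDFS directory. The helper names load_data_local and select_all and all paths are hypothetical; no Hive or HDFS is involved.

```python
import shutil
import tempfile
from pathlib import Path

def load_data_local(local_file: Path, table_dir: Path) -> Path:
    """Imitate LOAD DATA LOCAL INPATH ... INTO TABLE: copy the file unchanged."""
    table_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy(local_file, table_dir))

def select_all(table_dir: Path, delimiter: str = ",") -> list[list[str]]:
    """Imitate SELECT *: split the lines of every file in the table directory."""
    rows = []
    for data_file in sorted(table_dir.iterdir()):
        rows.extend(line.split(delimiter) for line in data_file.read_text().splitlines())
    return rows

with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "test1.txt")  # plays the role of the local test1.txt
    src.write_text("1001,cwq,male\n1101,lxj,female\n")
    table_dir = Path(tmp, "warehouse", "cwqsolo")  # stands in for the table directory
    load_data_local(src, table_dir)
    for row in select_all(table_dir):
        print(row)
```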

4. Inserting data

1) Append: the new rows are added and the existing data is left unchanged.

insert into table cwqsolo values ('1005', 'ddd', 'male');

2) Overwrite: all existing data is removed and only the new data remains.

insert overwrite table test_insert select * from test_table;

insert OVERWRITE table cwqsolo values ( '1006', 'hhh','female' );

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

Query ID = root_20161007070952_4fc5c176-76da-40ff-8a4a-4522e2138441

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1475846838422_0002, Tracking URL = http://archive.cloudera.com:8088/proxy/application_1475846838422_0002/

Kill Command = /opt/hadoop/hadoop-2.6.4//bin/hadoop job -kill job_1475846838422_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2016-10-07 07:44:25,570 Stage-1 map = 0%, reduce = 0%

2016-10-07 07:44:33,415 Stage-1 map = 100%, reduce = 0%

Ended Job = job_1475846838422_0002

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to: hdfs://192.168.136.144:9000/user/hive/warehouse/cwqsolo/.hive-staging_hive_2016-10-07_07-44-15_130_2736379798501468939-1/-ext-10000

Loading data to table default.cwqsolo

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 2.6 sec HDFS Read: 4598 HDFS Write: 87 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 600 msec

OK

Time taken: 21.032 seconds

hive>

> select * from cwqsolo;

OK

1006 hhh female

Time taken: 0.159 seconds, Fetched: 1 row(s)

hive>
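The two modes can be modelled with a Python list standing in for the table's rows. This is a simplified, hypothetical sketch, not how Hive stores data: Hive works on the files in the table's directory, and for a partitioned table INSERT OVERWRITE replaces only the target partition, which the model ignores. Its final state matches the query result above, where only row 1006 is left.

```python
Row = tuple[str, str, str]  # (myid, name, sex)

def insert_into(table: list[Row], new_rows: list[Row]) -> list[Row]:
    """INSERT INTO: existing rows are kept and the new rows are appended."""
    return table + new_rows

def insert_overwrite(table: list[Row], new_rows: list[Row]) -> list[Row]:
    """INSERT OVERWRITE: existing rows are discarded; only the new rows remain."""
    return list(new_rows)

cwqsolo = [("1001", "cwq", "male"), ("1101", "lxj", "female")]  # after LOAD DATA
cwqsolo = insert_into(cwqsolo, [("1005", "ddd", "male")])
print(len(cwqsolo))  # 3
cwqsolo = insert_overwrite(cwqsolo, [("1006", "hhh", "female")])
print(cwqsolo)       # [('1006', 'hhh', 'female')]
```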

5. Running a SQL file with Hive

1) First, write a SQL file and upload it to the host.

2) Run hive -f path/xxx.sql

For example:

hive -f /home/hadoop/hive/sql/creat_table_biss.sql

6. Exporting data to a local file

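hive>

> insert overwrite local directory '/home/data/cwq/'

> select * from zc_bicc_isup_bdr where calling = '13003097698';

This creates a file named 000000_0 in the local directory. Unless a row format is specified, its columns are separated by Hive's default field delimiter, \001 (Ctrl-A), rather than by commas.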

You can also specify the field delimiter:

insert overwrite local directory '/home/data/cwq/output1' row format delimited fields terminated by ',' select * from zc_bicc_isup_bdr where calling = '13003097698';
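A program that consumes these exports has to split each line on whichever delimiter the export used: \x01 for the first statement, ',' for the second. The plain-Python sketch below assumes the output directory is readable on the local file system. The function name read_export is hypothetical, and it skips hidden files such as the checksum files that Hadoop may write next to the data.

```python
from pathlib import Path

HIVE_DEFAULT_DELIMITER = "\x01"  # Ctrl-A, used when no ROW FORMAT is specified

def read_export(directory: str, delimiter: str = HIVE_DEFAULT_DELIMITER) -> list[list[str]]:
    """Read every output file (such as 000000_0) written by
    INSERT OVERWRITE LOCAL DIRECTORY and split each line into columns."""
    rows = []
    for part in sorted(Path(directory).iterdir()):
        # Skip sub-directories and hidden files (e.g. checksum files).
        if part.is_file() and not part.name.startswith("."):
            rows.extend(line.split(delimiter) for line in part.read_text().splitlines())
    return rows

# Usage with the directories from the statements above:
# read_export("/home/data/cwq/")                        # default Ctrl-A delimiter
# read_export("/home/data/cwq/output1", delimiter=",")  # fields terminated by ','
```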