Overall Analysis of the Android Voice Remote Control: Underlying Implementation Mechanisms

As we saw earlier, the Writer class starts a thread that does the actual recording.

Writer.addSource() contains the following code:

const char *kHeader = isWide ? "#!AMR-WB\n" : "#!AMR\n";

ssize_t n = strlen(kHeader);

if (write(mFd, kHeader, n) != n) {

return ERROR_IO;

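}

In effect, before any audio is recorded, the writer first emits the file header: the magic string that marks the stream as AMR narrowband or AMR wideband.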
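As a rough sketch of this header step outside the Android tree, the snippet below writes the same magic string to an arbitrary file descriptor with plain POSIX write(). The helper names amrMagic and writeAmrMagic are invented for illustration and are not part of stagefright.

```cpp
#include <cassert>
#include <cstring>
#include <unistd.h>

// Hypothetical helper: returns the magic string that starts an AMR file.
// "#!AMR\n" marks narrowband, "#!AMR-WB\n" marks wideband.
const char *amrMagic(bool isWide) {
    return isWide ? "#!AMR-WB\n" : "#!AMR\n";
}

// Hypothetical helper: writes the magic string to fd before any frames.
// Returns true only if every byte of the header was written.
bool writeAmrMagic(int fd, bool isWide) {
    assert(fd >= 0);
    const char *header = amrMagic(isWide);
    const ssize_t n = static_cast<ssize_t>(std::strlen(header));
    return write(fd, header, n) == n;
}
```

A caller would open the output file, call writeAmrMagic(fd, isWide) once, and only then start appending encoded frames.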

StagefrightRecorder::createAudioSource() contains this code:

sp<MediaSource> audioEncoder =

OMXCodec::Create(client.interface(), encMeta,

true /* createEncoder */, audioSource);

mAudioSourceNode = audioSource;

return audioEncoder;

This creates an encoder.

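OMXCodec::Create() works as follows: it first calls findMatchingCodecs to look up matching codecs and returns if none match. If an encoder is being requested (createEncoder is true), it tries to instantiate a software encoder:

if (createEncoder) {

sp<MediaSource> softwareCodec =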

InstantiateSoftwareEncoder(componentName, source, meta);

if (softwareCodec != NULL) {

ALOGV("Successfully allocated software codec '%s'", componentName);

return softwareCodec;

}

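}

So this is the place where a new audio encoder would be added:

static sp<MediaSource> InstantiateSoftwareEncoder(

const char *name, const sp<MediaSource> &source,

const sp<MetaData> &meta) {

struct FactoryInfo {

const char *name;

sp<MediaSource> (*CreateFunc)(const sp<MediaSource> &, const sp<MetaData> &);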

};

static const FactoryInfo kFactoryInfo[] = {

FACTORY_REF(AACEncoder)

};

for (size_t i = 0;

i < sizeof(kFactoryInfo) / sizeof(kFactoryInfo[0]); ++i) {

if (!strcmp(name, kFactoryInfo[i].name)) {

return (*kFactoryInfo[i].CreateFunc)(source, meta);

}

}

return NULL;

}
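To make the table-driven lookup concrete, here is a minimal standalone sketch of the same pattern in standard C++. Everything in it is a stand-in: Encoder replaces MediaSource, std::shared_ptr replaces sp, and FakeAacEncoder and FakeAmrEncoder are hypothetical classes that exist only to populate the table.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>

// Stand-in for MediaSource; the real class lives in stagefright.
struct Encoder {
    virtual ~Encoder() {}
    virtual const char *name() const = 0;
};

// Hypothetical encoders used only to fill the table.
struct FakeAacEncoder : Encoder {
    const char *name() const override { return "AACEncoder"; }
};
struct FakeAmrEncoder : Encoder {
    const char *name() const override { return "AMRNBEncoder"; }
};

template <typename T>
std::shared_ptr<Encoder> makeEncoder() {
    return std::make_shared<T>();
}

struct FactoryInfo {
    const char *name;
    std::shared_ptr<Encoder> (*create)();
};

// Adding an encoder amounts to adding one row here.
static const FactoryInfo kFactoryInfo[] = {
    {"AACEncoder", &makeEncoder<FakeAacEncoder>},
    {"AMRNBEncoder", &makeEncoder<FakeAmrEncoder>},
};

// Linear search by component name; returns nullptr when nothing matches.
std::shared_ptr<Encoder> instantiateSoftwareEncoder(const char *name) {
    assert(name != nullptr);
    for (std::size_t i = 0; i < sizeof(kFactoryInfo) / sizeof(kFactoryInfo[0]); ++i) {
        if (std::strcmp(name, kFactoryInfo[i].name) == 0) {
            return kFactoryInfo[i].create();
        }
    }
    return nullptr;
}
```

Calling instantiateSoftwareEncoder("AACEncoder") yields an encoder object, while an unknown name yields nullptr, which mirrors the NULL fall-through in the original function.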

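From the matching done in Create(), we can tell that the codecs are kept in struct MediaCodecList, stored in a vector:

\\frameworks\av\media\libstagefright\MediaCodecList.h

Vector<CodecInfo> mCodecInfos;

Lookup by type walks that vector:

ssize_t MediaCodecList::findCodecByType(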

const char *type, bool encoder, size_t startIndex) const {

ssize_t typeIndex = mTypes.indexOfKey(type);

if (typeIndex < 0) {

return -ENOENT;

}

uint32_t typeMask = 1ul << typeIndex;

while (startIndex < mCodecInfos.size()) {

const CodecInfo &info = mCodecInfos.itemAt(startIndex);

if (info.mIsEncoder == encoder && (info.mTypes & typeMask)) {

return startIndex;

}

++startIndex;

}

return -ENOENT;

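}

The encoder is then used through the call err = mSource->read(&buffer); in the writer thread. Following read() shows that the audio data comes from a List<MediaBuffer *>, mBuffersReceived: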

status_t AudioSource::read(

MediaBuffer **out, const ReadOptions *options) {

Mutex::Autolock autoLock(mLock);

*out = NULL;

if (mInitCheck != OK) {

return NO_INIT;

}

while (mStarted && mBuffersReceived.empty()) {

mFrameAvailableCondition.wait(mLock);

}

if (!mStarted) {

return OK;

}

MediaBuffer *buffer = *mBuffersReceived.begin();

mBuffersReceived.erase(mBuffersReceived.begin());

++mNumClientOwnedBuffers;

buffer->setObserver(this);

buffer->add_ref();

// Mute/suppress the recording sound

int64_t timeUs;

CHECK(buffer->meta_data()->findInt64(kKeyTime, &timeUs));

int64_t elapsedTimeUs = timeUs - mStartTimeUs;

if (elapsedTimeUs < kAutoRampStartUs) {

memset((uint8_t *) buffer->data(), 0, buffer->range_length());

} else if (elapsedTimeUs < kAutoRampStartUs + kAutoRampDurationUs) {

int32_t autoRampDurationFrames =

(kAutoRampDurationUs * mSampleRate + 500000LL) / 1000000LL;

int32_t autoRampStartFrames =

(kAutoRampStartUs * mSampleRate + 500000LL) / 1000000LL;

int32_t nFrames = mNumFramesReceived - autoRampStartFrames;

rampVolume(nFrames, autoRampDurationFrames,

(uint8_t *) buffer->data(), buffer->range_length());

}

// Track the max recording signal amplitude.

if (mTrackMaxAmplitude) {

trackMaxAmplitude(

(int16_t *) buffer->data(), buffer->range_length() >> 1);

}

*out = buffer;

return OK;

}
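The blocking behaviour of read() is a classic producer/consumer queue. The sketch below reproduces just that part in standard C++, assuming std::mutex and std::condition_variable in place of Android's Mutex and Condition. ReceivedBufferQueue and PcmChunk are hypothetical names, and the muting, ramping and amplitude tracking are left out.

```cpp
#include <cassert>
#include <condition_variable>
#include <list>
#include <mutex>
#include <vector>

// Simplified stand-in for a MediaBuffer: one chunk of 16-bit PCM samples.
typedef std::vector<short> PcmChunk;

class ReceivedBufferQueue {
public:
    ReceivedBufferQueue() : mStarted(true) {}

    // Producer side (the role of the record callback): queue a chunk, wake a reader.
    void push(const PcmChunk &chunk) {
        std::lock_guard<std::mutex> lock(mLock);
        mBuffersReceived.push_back(chunk);
        mFrameAvailable.notify_one();
    }

    // Consumer side (the role of read()): wait while running and empty.
    // Returns false if the queue was stopped, true if *out was filled.
    bool read(PcmChunk *out) {
        assert(out != nullptr);
        std::unique_lock<std::mutex> lock(mLock);
        while (mStarted && mBuffersReceived.empty()) {
            mFrameAvailable.wait(lock);
        }
        if (!mStarted) {
            return false;
        }
        *out = mBuffersReceived.front();
        mBuffersReceived.pop_front();
        return true;
    }

    // Stops the queue and releases any reader that is still waiting.
    void stop() {
        std::lock_guard<std::mutex> lock(mLock);
        mStarted = false;
        mFrameAvailable.notify_all();
    }

private:
    std::mutex mLock;
    std::condition_variable mFrameAvailable;
    std::list<PcmChunk> mBuffersReceived;
    bool mStarted;
};
```

The while loop around wait() matters: a reader must re-check both conditions after every wake-up, because it may be woken by stop() rather than by new data.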

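Tracing mBuffersReceived shows that the AudioSource constructor takes an input source, inputSource, and creates an AudioRecord object to which it passes the callback AudioRecordCallbackFunction. During recording, AudioRecord delivers the data one buffer at a time through this callback.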

AudioSource::AudioSource(

audio_source_t inputSource, uint32_t sampleRate, uint32_t channelCount)

: mRecord(NULL),

mStarted(false),

mSampleRate(sampleRate),

mPrevSampleTimeUs(0),

mNumFramesReceived(0),

mNumClientOwnedBuffers(0) {

ALOGV("sampleRate: %d, channelCount: %d", sampleRate, channelCount);

CHECK(channelCount == 1 || channelCount == 2);

size_t minFrameCount;

status_t status = AudioRecord::getMinFrameCount(&minFrameCount,

sampleRate,

AUDIO_FORMAT_PCM_16_BIT,

audio_channel_in_mask_from_count(channelCount));

if (status == OK) {

// make sure that the AudioRecord callback never returns more than the maximum

// buffer size

int frameCount = kMaxBufferSize / sizeof(int16_t) / channelCount;

// make sure that the AudioRecord total buffer size is large enough

int bufCount = 2;

while ((bufCount * frameCount) < minFrameCount) {

bufCount++;

}

mRecord = new AudioRecord(

inputSource, sampleRate, AUDIO_FORMAT_PCM_16_BIT,

audio_channel_in_mask_from_count(channelCount),

bufCount * frameCount,

AudioRecordCallbackFunction,

this,

frameCount);

mInitCheck = mRecord->initCheck();

} else {

mInitCheck = status;

}

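}

To find out how data from the recording device, such as the MIC, gets written into the buffer through the callback, we have to keep following AudioRecordCallbackFunction, the callback passed into AudioRecord.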
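The buffer sizing done in the constructor is plain arithmetic and can be tried in isolation. In the sketch below the value of kMaxBufferSize is an assumption chosen for illustration (the real constant is defined in AudioSource.h), and RecordBufferPlan and planRecordBuffers are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed value, for illustration only.
const std::size_t kMaxBufferSize = 2048;

struct RecordBufferPlan {
    std::size_t frameCount;  // frames handed over per callback
    std::size_t bufCount;    // callback-sized chunks in the total buffer
    std::size_t totalFrames() const { return frameCount * bufCount; }
};

// Mirrors the sizing logic: cap one callback at kMaxBufferSize bytes of
// 16-bit PCM, then add chunks until the total reaches minFrameCount.
RecordBufferPlan planRecordBuffers(std::size_t minFrameCount, unsigned channelCount) {
    assert(channelCount == 1 || channelCount == 2);
    RecordBufferPlan plan;
    plan.frameCount = kMaxBufferSize / sizeof(std::int16_t) / channelCount;
    plan.bufCount = 2;
    while (plan.bufCount * plan.frameCount < minFrameCount) {
        ++plan.bufCount;
    }
    return plan;
}
```

Under the assumed constant, a mono stream gets 1024 frames per callback, so a minimum of 3000 frames needs three chunks (3072 frames in total).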

Reading AudioRecord shows that a thread is created when the AudioRecord object is set up:

if (cbf != NULL) {

mAudioRecordThread = new AudioRecordThread(*this, threadCanCallJava);

mAudioRecordThread->run("AudioRecord", ANDROID_PRIORITY_AUDIO);

}

The callback is invoked from this AudioRecordThread thread.
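The way a C-style callback plus an opaque user pointer reaches back into an object can be sketched without any Android code. In the single-threaded model below, Sink plays the role of AudioSource and Recorder the role of AudioRecord. Both classes, the Buffer struct and the fixed count of three chunks are simplifications invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum Event { EVENT_MORE_DATA = 0 };

// Simplified stand-in for the buffer descriptor passed to the callback.
struct Buffer {
    const short *data;
    std::size_t frameCount;
};

typedef void (*callback_t)(int event, void *user, void *info);

// Plays the role of AudioSource: collects the delivered samples.
class Sink {
public:
    // Static trampoline with the C callback signature; "user" carries `this`.
    static void callbackFunction(int event, void *user, void *info) {
        Sink *self = static_cast<Sink *>(user);
        if (event == EVENT_MORE_DATA) {
            self->dataCallback(*static_cast<Buffer *>(info));
        }
    }
    std::size_t framesReceived() const { return mSamples.size(); }

private:
    void dataCallback(const Buffer &b) {
        mSamples.insert(mSamples.end(), b.data, b.data + b.frameCount);
    }
    std::vector<short> mSamples;
};

// Plays the role of AudioRecord: stores the callback and the user pointer.
class Recorder {
public:
    Recorder(callback_t cbf, void *user) : mCbf(cbf), mUserData(user) {
        assert(cbf != nullptr);
    }
    // One pass of the loop: hand one chunk of fake audio to the client.
    // Returns false when nothing is left, the point where a loop would pause.
    bool processAudioBuffer() {
        if (mChunksLeft == 0) return false;
        static const short kSilence[4] = {0, 0, 0, 0};
        Buffer b = {kSilence, 4};
        mCbf(EVENT_MORE_DATA, mUserData, &b);
        --mChunksLeft;
        return true;
    }

private:
    callback_t mCbf;
    void *mUserData;
    int mChunksLeft = 3;
};
```

A driver constructs Recorder with &Sink::callbackFunction and a pointer to a Sink, then calls processAudioBuffer() in a loop until it returns false. In the real code that loop runs on its own thread.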

bool AudioRecord::AudioRecordThread::threadLoop()

{

{

AutoMutex _l(mMyLock);

if (mPaused) {

mMyCond.wait(mMyLock);

// caller will check for exitPending()

return true;

}

}

if (!mReceiver.processAudioBuffer(this)) {

pause();

}

return true;

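}

Each pass through processAudioBuffer(this) invokes the callback (that is, AudioRecord.mCbf):

mCbf(EVENT_MORE_DATA, mUserData, &audioBuffer);

Everything up to this point is initialization and setup. Now let's look at AudioRecord::start, which, as we know, calls start() on mAudioRecord, an object of type IAudioRecord.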

status_t AudioRecord::start(AudioSystem::sync_event_t event, int triggerSession)

{

status_t ret = NO_ERROR;

sp<AudioRecordThread> t = mAudioRecordThread;

ALOGV("start, sync event %d trigger session %d", event, triggerSession);

AutoMutex lock(mLock);

// acquire a strong reference on the IAudioRecord and IMemory so that they cannot be destroyed

// while we are accessing the cblk

sp<IAudioRecord> audioRecord = mAudioRecord;

sp<IMemory> iMem = mCblkMemory;

audio_track_cblk_t* cblk = mCblk;

if (!mActive) {

mActive = true;

cblk->lock.lock();

if (!(cblk->flags & CBLK_INVALID)) {

cblk->lock.unlock();

ALOGV("mAudioRecord->start()");

ret = mAudioRecord->start(event, triggerSession);

cblk->lock.lock();

if (ret == DEAD_OBJECT) {

android_atomic_or(CBLK_INVALID, &cblk->flags);

}

}

if (cblk->flags & CBLK_INVALID) {

audio_track_cblk_t* temp = cblk;

ret = restoreRecord_l(temp);

cblk = temp;

}

cblk->lock.unlock();

if (ret == NO_ERROR) {

mNewPosition = cblk->user + mUpdatePeriod;

cblk->bufferTimeoutMs = (event == AudioSystem::SYNC_EVENT_NONE) ? MAX_RUN_TIMEOUT_MS :

AudioSystem::kSyncRecordStartTimeOutMs;

cblk->waitTimeMs = 0;

if (t != 0) {

t->resume();

} else {

mPreviousPriority = getpriority(PRIO_PROCESS, 0);

get_sched_policy(0, &mPreviousSchedulingGroup);

androidSetThreadPriority(0, ANDROID_PRIORITY_AUDIO);

}

} else {

mActive = false;

}

}

return ret;

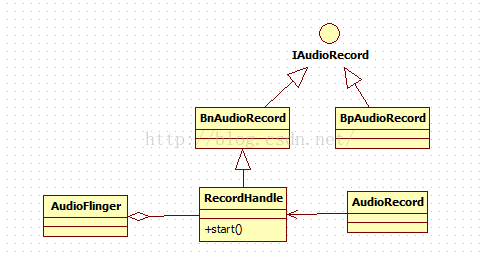

} 分析围绕IAudioRecord接口的类图

可以知道,最终调用的是AudioFinger中RecordHandler这个类的start()函数。

status_t AudioFlinger::RecordHandle::start(int /*AudioSystem::sync_event_t*/ event,

int triggerSession) {

ALOGV("RecordHandle::start()");

return mRecordTrack->start((AudioSystem::sync_event_t)event, triggerSession);

}

How AudioFlinger drives the hardware will be analyzed later.
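RecordHandle::start() is a pure forwarding call, and that handle-to-track delegation can be sketched in a few lines of standard C++. The classes below are simplified stand-ins: IRecord replaces the Binder interface IAudioRecord, std::shared_ptr replaces sp, and this RecordTrack only flips a flag instead of touching any audio thread.

```cpp
#include <cassert>
#include <memory>

typedef int status_t;
const status_t NO_ERROR = 0;

// Stand-in for the server-side track that holds the real recording state.
class RecordTrack {
public:
    status_t start(int event, int triggerSession) {
        (void)event;
        (void)triggerSession;
        mActive = true;
        return NO_ERROR;
    }
    bool active() const { return mActive; }

private:
    bool mActive = false;
};

// Stand-in for the interface the client sees.
class IRecord {
public:
    virtual ~IRecord() {}
    virtual status_t start(int event, int triggerSession) = 0;
};

// Thin handle: implements the interface and forwards to the track it wraps.
class RecordHandle : public IRecord {
public:
    explicit RecordHandle(const std::shared_ptr<RecordTrack> &track)
        : mRecordTrack(track) {
        assert(track != nullptr);
    }
    status_t start(int event, int triggerSession) override {
        return mRecordTrack->start(event, triggerSession);
    }

private:
    std::shared_ptr<RecordTrack> mRecordTrack;
};
```

The client only ever holds the interface, so the track's lifetime and internals stay on the server side.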

The following blog post describes how AudioFlinger handles multiple simultaneous audio outputs:

http://blog.csdn.net/hgl868/article/details/6888502