Installing Hive on Spark (Hive 2.3, Spark 2.1)

Introduction

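I previously wrote a guide to Hive on Spark that used Hive 2.0 and Spark 1.5. Since the upgrade to Spark 2.0, performance has improved and the layout of the distribution files has changed, so this post documents an up-to-date deployment procedure.

Spark 2.0 introduced the SparkSession concept, and Spark jobs are created differently than before. Earlier Hive releases are not compatible with Spark 2.0, so Hive 2.3 or later is recommended.

For the installation steps, see the official wiki: https://cwiki.apache.org/confluence/display/Hive/Hive+on+Spark%3A+Getting+Started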

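1. Cluster environment

Hive must be installed on a node of the Hadoop cluster. Setting up the Hadoop cluster itself is not covered here; both YARN and HDFS must be running.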

| Node | Components | Running processes |
|---|---|---|
| node1 | hadoop hive spark | resourcemanager,namenode,hive |
| node2 | hadoop | datanode,nodemanager |
| node3 | hadoop | datanode,nodemanager |

Following the steps below, Hive and Spark only need to be placed on the same node.
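Because YARN and HDFS must be running before Hive is set up, it can help to confirm that the expected daemons are alive on each node. The following is a minimal sketch, not part of the original procedure: `missing_daemons` is a hypothetical helper that takes the text printed by `jps` and reports which of the given main-class names are absent.

```shell
# missing_daemons JPS_OUTPUT NAME...
# Prints each expected daemon name that does not appear in the given
# `jps` output; prints nothing when all of them are present.
missing_daemons() {
    jps_output=$1
    shift
    for name in "$@"; do
        # jps prints "<pid> <MainClass>" per line; compare the class name
        # exactly so that SecondaryNameNode does not count as NameNode.
        if ! printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            printf '%s\n' "$name"
        fi
    done
}
```

On node1 this could be called as `missing_daemons "$(jps)" NameNode ResourceManager`, and on node2 and node3 with `DataNode NodeManager`; empty output means nothing is missing.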

2. Software to install

Download the Hive 2.3 binary package

Download the Spark 2.1 source code and compile it

Download Scala 2.11 (the Spark 2.1 jars copied into Hive in section 4.2 are built for Scala 2.11)

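3. Compiling Spark

Download the Spark 2.1 source code from GitHub: https://github.com/apache/spark/releases

Why recompile Spark? Spark bundles spark-sql, a component that couples Spark with a modified copy of Hive. That bundled Hive conflicts with the Hive 2.3 used here, so Spark is rebuilt from source with the Hive bundled in spark-sql left out. The resulting Spark build cannot use the spark-sql functionality.

Compiling Spark requires Maven 3.3+, Scala 2.10+, and JDK 1.7+.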
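The minimum versions above can be checked before starting a long build. This is a small sketch under stated assumptions: `version_ge` is a hypothetical helper, and it relies on `sort -V` (version sort from GNU coreutils) being available.

```shell
# version_ge ACTUAL MINIMUM
# Succeeds when ACTUAL is at least MINIMUM. Both arguments are dotted
# version strings; the smaller of the two sorts first under `sort -V`.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `version_ge "$(mvn -v | awk 'NR==1 {print $3}')" 3.3 || echo "Maven too old"` would flag an outdated Maven, assuming the first line of `mvn -v` has the usual `Apache Maven <version> ...` form.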

Go to the root of the Spark source tree and run the following command to build:

./dev/make-distribution.sh --name "hadoop2-without-hive" --tgz "-Pyarn,hadoop-provided,hadoop-2.7,parquet-provided"

When the build finishes, spark-2.1.1-bin-hadoop2-without-hive.tgz is generated in the root directory.

cp ./spark-2.1.1-bin-hadoop2-without-hive.tgz /opt

cd /opt

tar xzvf spark-2.1.1-bin-hadoop2-without-hive.tgz
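Since the point of the rebuild is to keep Spark's own Hive classes out of the distribution, the extracted directory can be inspected for leftover Hive jars. The sketch below is illustrative only: `bundled_hive_jars` is a hypothetical helper, and it assumes that any bundled Hive support would show up as a jar with "hive" in its file name (for example a `spark-hive_*` jar) under the `jars` directory.

```shell
# bundled_hive_jars SPARK_DIR
# Lists the jars directly under SPARK_DIR/jars whose file name contains
# "hive". Empty output is the expected result for a without-hive build.
bundled_hive_jars() {
    find "$1/jars" -maxdepth 1 -type f -name '*hive*.jar' | sort
}
```

Running `bundled_hive_jars /opt/spark-2.1.1-bin-hadoop2-without-hive` and getting no output suggests the build profile took effect.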

# Create a symlink

ln -s spark-2.1.1-bin-hadoop2-without-hive spark

Configure Spark

cd /opt/spark/conf

cp spark-env.sh.template spark-env.sh

vi spark-env.sh

## Add the following line to the file and save

export SPARK_DIST_CLASSPATH=$(/opt/hadoop/bin/hadoop classpath)

With this setting, the Hadoop classpath is also added whenever Hive launches a Spark job.
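When the setup is scripted rather than edited by hand in vi, the same line can be appended so that rerunning the script does not duplicate it. A minimal sketch, where `ensure_line` is a hypothetical helper and not something Spark provides:

```shell
# ensure_line FILE LINE
# Appends LINE to FILE unless an identical line is already present.
# FILE is created if it does not exist yet.
ensure_line() {
    touch "$1"
    # -x matches whole lines, -F treats LINE as a fixed string.
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}
```

A call such as `ensure_line /opt/spark/conf/spark-env.sh 'export SPARK_DIST_CLASSPATH=$(/opt/hadoop/bin/hadoop classpath)'` keeps the command substitution in single quotes, so it is evaluated when spark-env.sh is sourced rather than when the line is written.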

4. Installing Hive

4.1 Download Hive from the official site and extract it

mv apache-hive-2.3.0-bin.tar.gz /opt

cd /opt

tar xzvf apache-hive-2.3.0-bin.tar.gz

# Create a symlink

ln -s apache-hive-2.3.0-bin hive

4.2 Add the required jars

cp /opt/spark/jars/scala-library-2.11.8.jar /opt/hive/lib

cp /opt/spark/jars/spark-network-common_2.11-2.1.1.jar /opt/hive/lib

cp /opt/spark/jars/spark-core_2.11-2.1.1.jar /opt/hive/lib
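The three copy commands hard-code the Scala and Spark version suffixes, which change with a different build. As a sketch only, the hypothetical helper `copy_spark_jars` below matches the same three jars by file-name prefix and fails loudly if one of them is missing.

```shell
# copy_spark_jars SPARK_JARS_DIR HIVE_LIB_DIR
# Copies scala-library, spark-network-common and spark-core from the
# Spark jars directory into Hive's lib directory, matching by prefix so
# the version suffix does not have to be spelled out.
copy_spark_jars() {
    for prefix in scala-library- spark-network-common_ spark-core_; do
        found=0
        for jar in "$1"/"$prefix"*.jar; do
            # An unmatched glob stays literal; skip it.
            [ -e "$jar" ] || continue
            cp "$jar" "$2"/
            found=1
        done
        if [ "$found" -eq 0 ]; then
            echo "no jar matching $prefix*.jar in $1" >&2
            return 1
        fi
    done
}
```

With the paths used in this post, the call would be `copy_spark_jars /opt/spark/jars /opt/hive/lib`.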

# Download the MySQL JDBC driver and add it

cp xx/mysql-connector-java-5.1.38.jar /opt/hive/lib

4.3 Configure Hive

vi hive-site.xml

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.1.82:3306/hive2?createDatabaseIfNotExist=true&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
    <description>password to use against metastore database</description>
  </property>
  <property>
    <name>hive.execution.engine</name>
    <value>spark</value>
  </property>
  <property>
    <name>hive.enable.spark.execution.engine</name>
    <value>true</value>
  </property>
  <property>
    <name>spark.master</name>
    <value>yarn-cluster</value>
  </property>
  <property>
    <name>spark.serializer</name>
    <value>org.apache.spark.serializer.KryoSerializer</value>
  </property>
  <property>
    <name>spark.executor.instances</name>
    <value>3</value>
  </property>
  <property>
    <name>spark.executor.cores</name>
    <value>4</value>
  </property>
  <property>
    <name>spark.executor.memory</name>
    <value>10240m</value>
  </property>
  <property>
    <name>spark.driver.cores</name>
    <value>2</value>
  </property>
  <property>
    <name>spark.driver.memory</name>
    <value>4096m</value>
  </property>
  <property>
    <name>spark.yarn.queue</name>
    <value>default</value>
  </property>
  <property>
    <name>spark.app.name</name>
    <value>myInceptor</value>
  </property>
  <property>
    <name>hive.support.concurrency</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.enforce.bucketing</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.exec.dynamic.partition.mode</name>
    <value>nonstrict</value>
  </property>
  <property>
    <name>hive.txn.manager</name>
    <value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>
  </property>
  <property>
    <name>hive.compactor.initiator.on</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.compactor.worker.threads</name>
    <value>1</value>
  </property>
  <property>
    <name>spark.executor.extraJavaOptions</name>
    <value>-XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"</value>
  </property>
  <property>
    <name>hive.server2.enable.doAs</name>
    <value>false</value>
  </property>
</configuration>

4.4 hive-env.sh

Adjust Hive's JVM parameters to suit your environment, to prevent out-of-memory errors.

export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxPermSize=256m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"

export HADOOP_HEAPSIZE=4096

4.5 Initialize the database

/opt/hive/bin/schematool -initSchema -dbType mysql

### The following output indicates that initialization succeeded

Starting metastore schema initialization to 2.0.0

Initialization script hive-schema-2.0.0.mysql.sql

Initialization script completed

schemaTool completed

### At this point you can inspect the metastore tables in your MySQL database

5. Configure environment variables

vi /etc/profile

## Add the following settings

export SCALA_HOME=/opt/scala/

export PATH=$SCALA_HOME/bin:$PATH

export HIVE_HOME=/opt/hive

export PATH=$PATH:$HIVE_HOME/bin

export SPARK_HOME=/opt/spark

## Save and exit

source /etc/profile

6. Start Hive

/opt/hive/bin/hive --service hiveserver2

Connect to Hive with beeline

beeline -u jdbc:hive2://localhost:10000

Connecting to jdbc:hive2://localhost:10000

Connected to: Apache Hive (version 2.3.0)

Driver: Hive JDBC (version 2.3.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.3.0 by Apache Hive

## Once connected, create a table and insert data

0: jdbc:hive2://localhost:10000> create table test (f1 string,f2 string) stored as orc;

No rows affected (2.018 seconds)

0: jdbc:hive2://localhost:10000> insert into test values(1,2);

No rows affected (9.908 seconds)

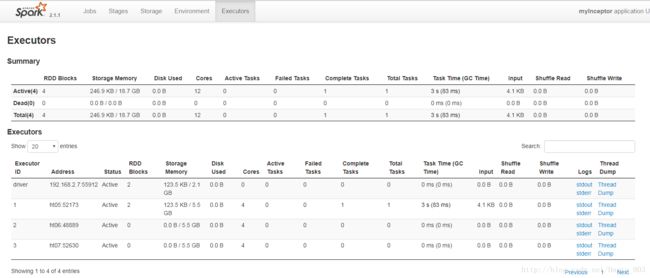

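0: jdbc:hive2://localhost:10000>

The statements above create an ORC table and insert a row. The insert launches Spark to execute the job, and the running Spark application is visible on the YARN web page.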

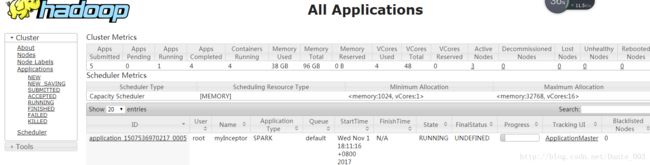

YARN page
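If the insert does not show up in YARN as a Spark application, one thing worth checking is which execution engine hive-site.xml actually sets. The following is a rough sketch rather than a real XML parser: `hive_site_value` is a hypothetical helper, and it assumes the file is well-formed with each `<name>` and `<value>` element on its own line.

```shell
# hive_site_value FILE PROPERTY
# Prints the text of the first <value> element that follows
# <name>PROPERTY</name>. Prints nothing if the property is not found.
hive_site_value() {
    awk -v prop="$2" '
        # index() does a literal match, so dots in the name are not wildcards.
        index($0, "<name>" prop "</name>") { want = 1; next }
        want && match($0, /<value>.*<\/value>/) {
            # Strip the 7-character opening and 8-character closing tag.
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$1"
}
```

For instance, `hive_site_value /opt/hive/conf/hive-site.xml hive.execution.engine` should print `spark` for the configuration in this post; the `/opt/hive/conf` location is an assumption based on the standard Hive layout.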