测试计划

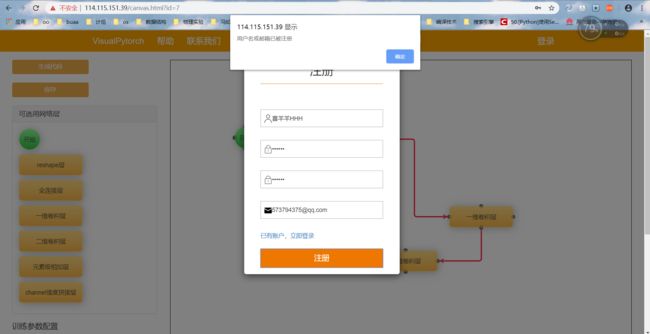

一、对新增加的用户注册、登录及访问控制的测试

注册信息的填写

- 用户名包含纯大小写字母、数字、中文、特殊字符及几种情况的混合

- 密码包含大小写字母、数字和特殊字符

- 用户名长度不大于150个字节

- 密码长度不大于128个字节

- 邮箱格式(由于未进行邮箱验证,故只检查简单格式)

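Invalid input during registration

- Registration information left incomplete

- The two password entries do not match

- Registering with a username that is already taken or invalid

- Registering with an email address that is already registered or incorrectly formatted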
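The registration rules and invalid cases above can be expressed as a single server-side check. The sketch below is an illustration under stated assumptions, not the project's code. The form field names (`username`, `email`, `password`, `password2`), the error codes and the in-memory sets of registered names are all hypothetical. Lengths are measured in UTF-8 bytes. Giving each failure its own code makes a mismatch like the one in the test results easier to spot: there, usernames with special or Chinese characters produced an "already registered" message.

```python
import re

# Limits from the test plan, measured in UTF-8 bytes (assumption: "bytes"
# means the UTF-8 encoding of the submitted text).
MAX_USERNAME_BYTES = 150
MAX_PASSWORD_BYTES = 128
# Deliberately simple "x@y.z" check, since addresses are not verified.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_registration(form, registered_usernames, registered_emails):
    """Return a list of error codes; an empty list means the form is valid.

    `form` is a hypothetical dict with keys username, email, password and
    password2. Every failure gets its own code, so an invalid username is
    never reported as "already registered".
    """
    fields = ("username", "email", "password", "password2")
    if any(not form.get(field) for field in fields):
        return ["incomplete"]
    errors = []
    username, email = form["username"], form["email"]
    if form["password"] != form["password2"]:
        errors.append("password_mismatch")
    if len(form["password"].encode("utf-8")) > MAX_PASSWORD_BYTES:
        errors.append("password_too_long")
    if len(username.encode("utf-8")) > MAX_USERNAME_BYTES:
        errors.append("username_too_long")
    elif username in registered_usernames:
        errors.append("username_taken")
    if not EMAIL_RE.match(email):
        errors.append("email_invalid")
    elif email in registered_emails:
        errors.append("email_taken")
    return errors
```

Because a Chinese character takes three bytes in UTF-8, the 150-byte limit allows at most 50 Chinese characters in a username. The boundary cases in the test data should reflect this.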

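Invalid input during login

- Clicking "Log in" without entering a username or password

- The username or email address has not been registered

- Wrong password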
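The three invalid login cases correspond to three distinct outcomes. Here is a minimal sketch of that classification. It assumes a hypothetical in-memory user table and assumes users may log in with either their username or their email address, as the cases above suggest. Plain-text password comparison is used only to keep the example short.

```python
def check_login(identifier, password, users):
    """Classify a login attempt as "ok" or one of the tested error cases.

    `users` is a hypothetical mapping of username -> (email, password).
    A real system would compare salted password hashes, never plain text.
    """
    if not identifier or not password:
        return "missing_field"
    for username, (email, stored_password) in users.items():
        if identifier in (username, email):
            return "ok" if password == stored_password else "wrong_password"
    return "not_registered"
```

Whether users should see different messages for "not registered" and "wrong password" is a separate design question. Many sites show one generic message so that they do not reveal which accounts exist.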

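Access control

- When not logged in, users cannot save the models they build

- After logging in, users can save the models they build, and can also view and modify previously saved models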

II. Component tests

Basic tests

- Dragging both new and existing components

- Connecting components

- Setting component parameters

- Deleting components
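One invariant worth checking in the deletion test: removing a component must also remove every connection attached to it. Otherwise the payload sent for code generation still refers to a component that no longer exists. The test results report that the element-wise addition layer could not be deleted in the internal test build, which makes this check relevant. Below is a sketch of the invariant on the payload layout used in the regression tests later in this document. The helper name is hypothetical, and this is not the site's front-end code.

```python
def delete_component(data, canvas_id):
    """Return a copy of `data` without `canvas_id` and its connections.

    `data` follows the regression-test payload layout: data["nets"] maps
    canvas ids to components and data["nets_conn"] lists
    {"source": {"id": ...}, "target": {"id": ...}} entries.
    The input dict is not modified.
    """
    if canvas_id not in data["nets"]:
        raise KeyError(canvas_id)
    nets = {cid: comp for cid, comp in data["nets"].items() if cid != canvas_id}
    # Drop every connection whose source or target is the deleted component.
    conns = [c for c in data["nets_conn"]
             if canvas_id not in (c["source"]["id"], c["target"]["id"])]
    return {**data, "nets": nets, "nets_conn": conns}
```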

Stability test

- Using automated tests, drag components of every type onto the canvas a total of 50,000 times
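The 50,000-drag stability run is essentially a seeded random loop around a single drag action. The sketch below shows that structure and rests on several assumptions:

- `drag(component, x, y)` is a hypothetical callback wrapping whatever browser-automation tool performs the actual drag.
- The canvas size is illustrative.
- The component list contains only the internal names that appear in the regression-test payloads.

```python
import random

# Component names seen in the regression-test payloads; the channel
# concatenation layer is omitted because its internal name is not given.
COMPONENTS = ["start", "view_layer", "conv1d_layer", "conv2d_layer",
              "linear_layer", "element_wise_add_layer"]


def run_stability_test(drag, iterations=50_000, seed=0, canvas=(800, 600)):
    """Drag a random component to a random position `iterations` times.

    `drag` is a hypothetical callback that performs one drag and raises on
    failure; `canvas` is an assumed (width, height) in pixels.
    Returns a list of (iteration, component, error) for failed drags.
    """
    rng = random.Random(seed)  # fixed seed, so a failing run can be replayed
    failures = []
    for i in range(iterations):
        component = rng.choice(COMPONENTS)
        x, y = rng.randrange(canvas[0]), rng.randrange(canvas[1])
        try:
            drag(component, x, y)
        except Exception as exc:  # record the failure and keep going
            failures.append((i, component, repr(exc)))
    return failures
```

With Selenium, `drag` could wrap `ActionChains(driver).drag_and_drop_by_offset(element, dx, dy).perform()`. Recording failures instead of stopping at the first one gives a failure rate over the whole run.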

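III. Tests of code generation, model saving and downloading

Code generation and download under normal conditions

- Components are connected correctly

- Parameters are configured properly

With both conditions above met, test whether code is generated correctly and whether the generated code files can be downloaded.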
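A cheap automated addition to "is the code generated correctly" is to check that every generated file is at least syntactically valid Python. Here is a sketch using the standard-library `ast` module. It assumes the generator returns a dict of line lists keyed by file name, as in the regression tests later in this document. The helper name is hypothetical.

```python
import ast


def check_generated_files(files):
    """Join each generated file's lines and check that the result parses.

    `files` maps a file name to a list of source lines, the shape used in the
    regression tests (keys "Main", "Model", "Ops").
    Returns {file_name: message} for files that fail to parse.
    """
    errors = {}
    for name, lines in files.items():
        source = "\n".join(lines) + "\n"
        try:
            ast.parse(source, filename=name)
        except SyntaxError as exc:  # IndentationError is a subclass
            errors[name] = f"line {exc.lineno}: {exc.msg}"
    return errors
```

This proves only that the files parse, not that the model runs. For example, the expected `Main` output in the regression tests ends with `for epo in range(epoch):` and no loop body, which this check would report as a syntax error.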

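Code generation under abnormal conditions

- No "Start" component has been placed

- Components are connected incorrectly

- Invalid parameter values are entered

Check how the website reports errors in each of the abnormal cases above.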
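The three abnormal cases can all be detected before code generation, using the payload format shown in the regression tests later in this document. The sketch below is a hypothetical validator, not the site's own logic. It treats the following as errors:

- no `start` component
- a connection to a missing component
- a non-start component without an incoming connection
- a cycle (found with Kahn's topological sort)
- a non-integer value in a selected set of integer attributes

Which rules the real back end enforces is an assumption.

```python
import re
from collections import deque

# Integer-valued attributes, named as in the regression-test payloads.
# Pooling fields are left out because unused ones are sent as "".
INT_ATTRS = {"in_channels", "out_channels", "kernel_size", "stride", "padding"}


def validate_model(data):
    """Return a list of human-readable problems found in a canvas payload."""
    nets, conns = data["nets"], data["nets_conn"]
    errors = []

    # Case 1: no "start" component on the canvas.
    starts = {cid for cid, comp in nets.items() if comp["name"] == "start"}
    if not starts:
        errors.append("no start component")

    # Case 2: connections that refer to components which do not exist.
    children = {cid: [] for cid in nets}
    indegree = {cid: 0 for cid in nets}
    for conn in conns:
        src, dst = conn["source"]["id"], conn["target"]["id"]
        if src not in nets or dst not in nets:
            errors.append(f"connection {src} -> {dst} refers to a missing component")
            continue
        children[src].append(dst)
        indegree[dst] += 1

    # Case 2 (cont.): every component except "start" needs an input.
    for cid in nets:
        if indegree[cid] == 0 and cid not in starts:
            errors.append(f"{cid} has no incoming connection")

    # Case 2 (cont.): Kahn's algorithm; nodes never visited are on,
    # or downstream of, a cycle.
    queue = deque(cid for cid in nets if indegree[cid] == 0)
    visited = 0
    while queue:
        cid = queue.popleft()
        visited += 1
        for nxt in children[cid]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    if visited < len(nets):
        errors.append("connections contain a cycle")

    # Case 3: parameter values that are not non-negative integers.
    for cid, comp in nets.items():
        for key, value in comp.get("attribute", {}).items():
            if key in INT_ATTRS and not re.fullmatch(r"[0-9]+", str(value)):
                errors.append(f"{cid}.{key} must be a non-negative integer, got {value!r}")
    return errors
```

Returning every problem at once, instead of stopping at the first one, lets the error-reporting test check that the site names the specific fault.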

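When logged in

- Build a model by dragging components, connecting them and adjusting parameters

- Generate code from the model

- Save the model

- View the model

- Delete the model

- Modify a saved model

- Save the modified model

- Download the generated code files

- Log out

When not logged in

- Build a model by dragging components, connecting them and adjusting parameters

- Generate code from the model

- Save the model

- Download the generated code files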

IV. Other tests

- Navigation between pages

- Drop-down lists and parameter input

- Tests on different platforms

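Test process and results

Test process

- Apr 30 to May 4: tested the newly added registration and login features, writing test cases for them and running them against the internal test build

- May 6 to May 10: tested the core features of the internal test build

- May 12 to May 16: tested the new version of the live website

- May 18: ran a stress test against the live website

- May 19 to May 22: ran overall testing

Several fairly serious bugs were found during testing, and some of them have already been fixed.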

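Test results

The main problems found in the internal test build were:

- When registering with a username that contains special characters such as `` ;.<>?/:"{}[]|'~`!#$%^&*() `` or Chinese characters, the site displays "Username or email already registered"

- The newly added element-wise addition layer component cannot be deleted

- Models cannot be saved after logging in

- The canvas is blank when viewing a model

- Changes made to a model while viewing it cannot be saved

- Saved models cannot be deleted

- The downloaded code files contain no line breaks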
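The missing line breaks fit the generator's output format. As the regression tests show, each file is returned as a list of lines, so whatever builds the download has to insert a separator between them. The sketch below uses a configurable separator, and its helper name is hypothetical. It assumes the `.py` files are named after the dict keys, which matches the `from Model import *` and `from Ops import *` lines in the generated main file. Older versions of Windows Notepad showed LF-only files on a single line, so `"\r\n"` may also be worth testing.

```python
def build_download(files, newline="\n"):
    """Turn generated line lists into downloadable file contents.

    `files` maps a file name to its list of lines, e.g.
    {"Main": [...], "Model": [...], "Ops": [...]}. Joining with `newline`
    restores the line breaks that plain concatenation would lose.
    """
    return {f"{name}.py": newline.join(lines) + newline
            for name, lines in files.items()}
```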

对部分bug进行修复之后,当前版本还存在的主要问题有:

针对Beta阶段的新功能、新特性发现的Bug

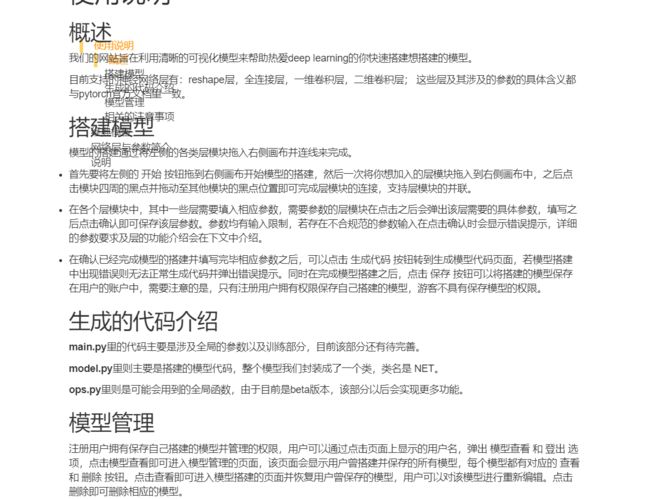

Beta阶段我们加入了注册、登录功能,用户可以保存自己搭建的模型,登录之后可以查看之前保存的模型,并在此基础上进行修改再保存;此外,我们还增加了代码文件下载的新功能,提升用户体验。以下是测试过程中针对新功能发现的问题:

- 注册时,用户名含有;.<>?/:"{}[]|'~`!#$%^&*()等特殊字符或者中文字符时,会弹出“用户名或邮箱已被注册”

- 帮助界面侧边栏与正文重叠

- 可以直接输入模型id查看他人的模型

- 用户搭好模型之后想登录保存,登录之后画布清空
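Being able to open another user's model by typing its ID suggests the back end checks only that a model exists, not who owns it. Below is a minimal sketch of the ownership check that the view, modify, delete and save paths would need. It uses a hypothetical in-memory model store and hypothetical function names. In the real site, the same comparison would run against the logged-in session user.

```python
class AccessDenied(Exception):
    """Raised when the current user may not access a model."""


def get_model(models, model_id, current_user):
    """Return a saved model's data only if it belongs to `current_user`.

    `models` is a hypothetical mapping of id -> {"owner": ..., "data": ...};
    `current_user` is None for visitors who are not logged in.
    """
    if current_user is None:
        raise AccessDenied("login required")
    model = models.get(model_id)
    # Answer "not found" for other users' models too, so ids cannot be probed.
    if model is None or model["owner"] != current_user:
        raise AccessDenied("model not found")
    return model["data"]


def save_model(models, model_id, current_user, data):
    """Create or update a model; anonymous users and non-owners are refused."""
    if current_user is None:
        raise AccessDenied("login required")
    existing = models.get(model_id)
    if existing is not None and existing["owner"] != current_user:
        raise AccessDenied("model not found")
    models[model_id] = {"owner": current_user, "data": data}
```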

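Scenario tests for the new features

Typical user 1

猿巨 (Yuanju): knows little about computers; a casual visitor who came across the website by chance

Needs and goals: find out what the website is for and have some fun in his spare time

Scenario:

Yuanju registers an account.

After registering, Yuanju logs in, reads the help documentation, builds a model on a whim and saves it.

To check whether the model was really saved, Yuanju logs out, logs in again and views the model.

Yuanju clicks "View", and the canvas shows the model he built earlier. He tweaks the model, saves it again, views it, and finds that the changes were saved.

Yuanju clicks "Generate code" and gets three code files, but he cannot understand them, so he goes back to the canvas, plays around with dragging components, and finally logs out.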

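Typical user 2

战锤 (Zhanchui): a third-year computer science undergraduate who has studied some deep learning and built simple models

Needs and goals: finds writing code by hand too tiring; wants an easy way to get model code and to adjust parameters at any time

Scenario:

Zhanchui registers an account.

He builds a model by dragging components, saves it, then views the model and generates code.

Zhanchui downloads the generated code files, trains and runs the model on his own data, and finds that the parameters are not well tuned.

He returns to the website, opens the saved model, adjusts its parameters, saves the modified model, then views it and generates code again.

After several rounds of tuning, Zhanchui has the model he wants without writing any code by hand.

Zhanchui builds and saves several more models. When he views the models in his account, all of them are there and can be modified at any time.

When he is done, Zhanchui logs out.

Some time later, Zhanchui logs in to view his models, finds that some of them are duplicates, deletes the duplicates and logs out.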

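Typical user 3

车夫 (Chefu): has studied deep learning in depth, is highly experienced, and wants to build fairly complex models quickly

Needs and goals: build fairly complex models quickly and modify them on the fly without rebuilding them from scratch

Scenario:

Chefu registers an account.

Chefu combines the newly added element-wise addition layer and channel-dimension concatenation layer with the site's existing components to build a fairly complex model with parallel branches.

Chefu views the saved model, generates code, downloads the generated code files, and trains and runs the model on his own data files.

While Chefu is making adjustments, his computer suddenly warns that it is about to shut down. He clicks "Save" to keep the model, planning to continue once he finds a power outlet, so his work is not lost.

After finding a power outlet, Chefu logs back in, finishes the model, gets the model code he wants, saves the latest version of the model and logs out.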

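Regression testing

In this phase we ran regression tests. The first payload sent to the back end does not use either of the two new network layers; it checks whether the new layers affect the existing ones.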

```python
# A method of the project's unittest.TestCase subclass; the test module is
# assumed to import NeuralNetwork.translate.ops.
def test_ops_five(self):
    """
    Regression test: a payload that uses only pre-existing layers (view,
    conv1d, conv2d, linear) must still produce the expected Main, Model
    and Ops code now that the two new layers have been added.
    """
    result = {
        "Main": ["'''", "", "Copyright @2019 buaa_huluwa. All rights reserved.", "",
                 "View more, visit our team's home page: https://home.cnblogs.com/u/1606-huluwa/", "", "",
                 "This code is the corresponding pytorch code generated from the model built by the user.", "",
                 " \"main.py\" mainly contains the code of the training and testing part, and you can modify it according to your own needs.",
                 "", "'''", "", "#standard library", "import os", "", "#third-party library", "import torch",
                 "import numpy", "import torchvision", "", "", "from Model import *", "from Ops import *", "", "",
                 "#Hyper Parameters", "epoch = 1", "optimizer = torch.optim.Adam", "learning_rate = 0.001",
                 "batch_size = 50", "data_dir = None", "data_set = None", "train = True", "", "",
                 "#initialize a NET object", "net = NET()", "#print net architecture", "print(net)", "", "",
                 "#load your own dataset and normalize", "", "", "",
                 "#you can add some functions for visualization here or you can ignore them", "", "", "",
                 "#training and testing, you can modify these codes as you expect", "for epo in range(epoch):", "", ""],
        "Model": ["'''", "", "This code is the corresponding pytorch code generated from the model built by the user.", "",
                  "\"model.py\" contains the complete model code, and you can modify it according to your own needs", "",
                  "'''", "", "#standard library", "import os", "", "#third-party library", "import torch", "import numpy",
                  "import torchvision", "", "", "class NET(torch.nn.Module):", " def __init__(self):",
                  " super(NET, self).__init__()", " self.conv1d_layer = torch.nn.Sequential(",
                  " torch.nn.Conv1d(", " in_channels = 1,", " out_channels = 16,",
                  " kernel_size = 5,", " stride = 1,", " padding = 2,",
                  " ),", " torch.nn.functional.relu(),",
                  " torch.nn.functional.max_pool2d(),", " )",
                  " self.conv2d_layer = torch.nn.Sequential(", " torch.nn.Conv2d(",
                  " in_channels = 16,", " out_channels = 32,",
                  " kernel_size = 5,", " stride = 1,", " padding = 2,",
                  " ),", " torch.nn.functional.relu(),",
                  " torch.nn.functional.max_pool2d(),", " )",
                  " self.linear_layer = torch.nn.Linear(32, 10)", " def forward(self, x_data):",
                  " view_layer_data = x_data.view(1)",
                  " conv1d_layer_data = self.conv1d_layer(view_layer_data)",
                  " conv2d_layer_data = self.conv2d_layer(conv1d_layer_data)",
                  " linear_layer_data = self.linear_layer(conv2d_layer_data)", " return linear_layer_data"],
        "Ops": ["'''", "", "This code is the corresponding pytorch code generated from the model built by the user.", "",
                "\"ops.py\" contains functions you might use", "", "'''", "", "#standard library", "import os", "",
                "#third-party library", "import torch", "import numpy", "import torchvision", "", "",
                "def element_wise_add(inputs):", " ans = inputs[0]", " for indx in range(1, len(inputs)):",
                " ans.add_(inputs[indx])", " return ans"],
    }
    data = {
        "nets": {
            "canvas_4": {"name": "start", "attribute": {"start": "true"},
                         "left": "151px", "top": "102px"},
            "canvas_5": {"name": "view_layer", "attribute": {"shape": "1"},
                         "left": "386px", "top": "98px"},
            "canvas_6": {"name": "conv1d_layer",
                         "attribute": {"in_channels": "1", "out_channels": "16", "kernel_size": "5",
                                       "stride": "1", "padding": "2",
                                       "activity": "torch.nn.functional.relu",
                                       "pool_way": "torch.nn.functional.max_pool2d",
                                       "pool_kernel_size": "3", "pool_stride": "1", "pool_padding": "2"},
                         "left": "268px", "top": "282px"},
            "canvas_7": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "16", "out_channels": "32", "kernel_size": "5",
                                       "stride": "1", "padding": "2",
                                       "activity": "torch.nn.functional.relu",
                                       "pool_way": "torch.nn.functional.max_pool2d",
                                       "pool_kernel_size": "3", "pool_stride": "1", "pool_padding": "2"},
                         "left": "494px", "top": "354px"},
            "canvas_8": {"name": "linear_layer", "attribute": {"in_channels": "32", "out_channels": "10"},
                         "left": "75px", "top": "209px"},
        },
        "nets_conn": [
            {"source": {"id": "canvas_4", "anchor_position": "Right"},
             "target": {"id": "canvas_5", "anchor_position": "Left"}},
            {"source": {"id": "canvas_5", "anchor_position": "Bottom"},
             "target": {"id": "canvas_6", "anchor_position": "Top"}},
            {"source": {"id": "canvas_6", "anchor_position": "Bottom"},
             "target": {"id": "canvas_7", "anchor_position": "Left"}},
            {"source": {"id": "canvas_7", "anchor_position": "Bottom"},
             "target": {"id": "canvas_8", "anchor_position": "Bottom"}},
        ],
        "static": {"epoch": "1", "learning_rate": "0.001", "batch_size": "50"},
    }
    res = NeuralNetwork.translate.ops.main_func(data)
    self.assertEqual(res, result)
```

The second payload sent to the back end contains both existing network layers and a new one, the element-wise addition layer.

```python
# A method of the project's unittest.TestCase subclass; the test module is
# assumed to import NeuralNetwork.translate.ops.
def test_ops_six(self):
    """
    Regression test: a payload that combines existing layers (conv2d,
    linear) with the new element-wise addition layer must produce the
    expected Main, Model and Ops code.
    """
    result = {
        "Main": ["'''", "", "Copyright @2019 buaa_huluwa. All rights reserved.", "",
                 "View more, visit our team's home page: https://home.cnblogs.com/u/1606-huluwa/", "", "",
                 "This code is the corresponding pytorch code generated from the model built by the user.", "",
                 " \"main.py\" mainly contains the code of the training and testing part, and you can modify it according to your own needs.",
                 "", "'''", "", "#standard library", "import os", "", "#third-party library", "import torch",
                 "import numpy", "import torchvision", "", "", "from Model import *", "from Ops import *", "", "",
                 "#Hyper Parameters", "epoch = 1", "optimizer = torch.optim.Adam", "learning_rate = 0.5", "batch_size = 1",
                 "data_dir = None", "data_set = None", "train = True", "", "", "#initialize a NET object", "net = NET()",
                 "#print net architecture", "print(net)", "", "", "#load your own dataset and normalize", "", "", "",
                 "#you can add some functions for visualization here or you can ignore them", "", "", "",
                 "#training and testing, you can modify these codes as you expect", "for epo in range(epoch):", "", ""],
        "Model": ["'''", "", "This code is the corresponding pytorch code generated from the model built by the user.", "",
                  "\"model.py\" contains the complete model code, and you can modify it according to your own needs", "",
                  "'''", "", "#standard library", "import os", "", "#third-party library", "import torch", "import numpy",
                  "import torchvision", "", "", "class NET(torch.nn.Module):", " def __init__(self):",
                  " super(NET, self).__init__()", " self.conv2d_layer = torch.nn.Sequential(",
                  " torch.nn.Conv2d(", " in_channels = 1,", " out_channels = 96,",
                  " kernel_size = 11,", " stride = 4,", " padding = 0,",
                  " ),", " torch.nn.functional.relu(),",
                  " torch.nn.functional.max_pool2d(),", " )",
                  " self.conv2d_layer_1 = torch.nn.Sequential(", " torch.nn.Conv2d(",
                  " in_channels = 96,", " out_channels = 256,",
                  " kernel_size = 5,", " stride = 1,", " padding = 2,",
                  " ),", " torch.nn.functional.relu(),",
                  " torch.nn.functional.max_pool2d(),", " )",
                  " self.conv2d_layer_2 = torch.nn.Sequential(", " torch.nn.Conv2d(",
                  " in_channels = 256,", " out_channels = 384,",
                  " kernel_size = 3,", " stride = 1,", " padding = 1,",
                  " ),", " torch.nn.functional.relu(),", " )",
                  " self.conv2d_layer_3 = torch.nn.Sequential(", " torch.nn.Conv2d(",
                  " in_channels = 384,", " out_channels = 384,",
                  " kernel_size = 3,", " stride = 1,", " padding = 1,",
                  " ),", " torch.nn.functional.relu(),", " )",
                  " self.conv2d_layer_4 = torch.nn.Sequential(", " torch.nn.Conv2d(",
                  " in_channels = 384,", " out_channels = 256,",
                  " kernel_size = 3,", " stride = 1,", " padding = 1,",
                  " ),", " )", " self.linear_layer = torch.nn.Linear(256, 4096)",
                  " self.linear_layer_1 = torch.nn.Linear(4096, 4096)",
                  " self.linear_layer_2 = torch.nn.Linear(4096, 1000)", " def forward(self, x_data):",
                  " conv2d_layer_data = self.conv2d_layer(x_data)",
                  " element_wise_add_layer_data = element_wise_add([x_data])",
                  " conv2d_layer_1_data = self.conv2d_layer_1(conv2d_layer_data)",
                  " conv2d_layer_2_data = self.conv2d_layer_2(conv2d_layer_1_data)",
                  " conv2d_layer_3_data = self.conv2d_layer_3(conv2d_layer_2_data)",
                  " conv2d_layer_4_data = self.conv2d_layer_4(conv2d_layer_3_data)",
                  " linear_layer_data = self.linear_layer(conv2d_layer_4_data)",
                  " linear_layer_1_data = self.linear_layer_1(linear_layer_data)",
                  " linear_layer_2_data = self.linear_layer_2(linear_layer_1_data)",
                  " return element_wise_add_layer_data, linear_layer_2_data"],
        "Ops": ["'''", "", "This code is the corresponding pytorch code generated from the model built by the user.", "",
                "\"ops.py\" contains functions you might use", "", "'''", "", "#standard library", "import os", "",
                "#third-party library", "import torch", "import numpy", "import torchvision", "", "",
                "def element_wise_add(inputs):", " ans = inputs[0]", " for indx in range(1, len(inputs)):",
                " ans.add_(inputs[indx])", " return ans"],
    }
    data = {
        "nets": {
            "canvas_1": {"name": "start", "attribute": {"start": "true"},
                         "left": "365px", "top": "20px"},
            "canvas_2": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "1", "out_channels": "96", "kernel_size": "11",
                                       "stride": "4", "padding": "0",
                                       "activity": "torch.nn.functional.relu",
                                       "pool_way": "torch.nn.functional.max_pool2d",
                                       "pool_kernel_size": "3", "pool_stride": "2", "pool_padding": "0"},
                         "left": "317px", "top": "109px"},
            "canvas_3": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "96", "out_channels": "256", "kernel_size": "5",
                                       "stride": "1", "padding": "2",
                                       "activity": "torch.nn.functional.relu",
                                       "pool_way": "torch.nn.functional.max_pool2d",
                                       "pool_kernel_size": "3", "pool_stride": "2", "pool_padding": "0"},
                         "left": "315px", "top": "203px"},
            "canvas_4": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "256", "out_channels": "384", "kernel_size": "3",
                                       "stride": "1", "padding": "1",
                                       "activity": "torch.nn.functional.relu", "pool_way": "None",
                                       "pool_kernel_size": "", "pool_stride": "", "pool_padding": "0"},
                         "left": "313px", "top": "306px"},
            "canvas_5": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "384", "out_channels": "384", "kernel_size": "3",
                                       "stride": "1", "padding": "1",
                                       "activity": "torch.nn.functional.relu", "pool_way": "None",
                                       "pool_kernel_size": "", "pool_stride": "", "pool_padding": "0"},
                         "left": "315px", "top": "355px"},
            "canvas_6": {"name": "conv2d_layer",
                         "attribute": {"in_channels": "384", "out_channels": "256", "kernel_size": "3",
                                       "stride": "1", "padding": "1",
                                       "activity": "None", "pool_way": "None",
                                       "pool_kernel_size": "3", "pool_stride": "2", "pool_padding": "0"},
                         "left": "316px", "top": "404px"},
            "canvas_7": {"name": "linear_layer", "attribute": {"in_channels": "256", "out_channels": "4096"},
                         "left": "316px", "top": "541px"},
            "canvas_8": {"name": "linear_layer", "attribute": {"in_channels": "4096", "out_channels": "4096"},
                         "left": "318px", "top": "672px"},
            "canvas_9": {"name": "linear_layer", "attribute": {"in_channels": "4096", "out_channels": "1000"},
                         "left": "319px", "top": "827px"},
            "canvas_10": {"name": "element_wise_add_layer",
                          "attribute": {"element_wise_add_layer": "true"},
                          "left": "91px", "top": "830px"},
        },
        "nets_conn": [
            {"source": {"id": "canvas_1", "anchor_position": "Bottom"},
             "target": {"id": "canvas_2", "anchor_position": "Top"}},
            {"source": {"id": "canvas_2", "anchor_position": "Bottom"},
             "target": {"id": "canvas_3", "anchor_position": "Top"}},
            {"source": {"id": "canvas_4", "anchor_position": "Bottom"},
             "target": {"id": "canvas_5", "anchor_position": "Top"}},
            {"source": {"id": "canvas_5", "anchor_position": "Bottom"},
             "target": {"id": "canvas_6", "anchor_position": "Top"}},
            {"source": {"id": "canvas_3", "anchor_position": "Bottom"},
             "target": {"id": "canvas_4", "anchor_position": "Top"}},
            {"source": {"id": "canvas_1", "anchor_position": "Left"},
             "target": {"id": "canvas_10", "anchor_position": "Top"}},
            {"source": {"id": "canvas_6", "anchor_position": "Bottom"},
             "target": {"id": "canvas_7", "anchor_position": "Top"}},
            {"source": {"id": "canvas_7", "anchor_position": "Bottom"},
             "target": {"id": "canvas_8", "anchor_position": "Top"}},
            {"source": {"id": "canvas_8", "anchor_position": "Bottom"},
             "target": {"id": "canvas_9", "anchor_position": "Top"}},
        ],
        "static": {"epoch": "1", "learning_rate": "0.5", "batch_size": "1"},
    }
    res = NeuralNetwork.translate.ops.main_func(data)
    self.assertEqual(res, result)
```

Test matrix

The columns from "Drag components" to "Download code" are functional tests. The columns from "Home page" to "Page switching" are page tests.

| Browser | Test environment (browser version) | Drag components | Delete components | Connect components | Parameter input | Clicks (components, buttons, links) and drop-down selection | Error reporting | Registration/login | Save model | View model | Delete model | Generate code | Download code | Home page | Contact Us page | Help page | Code generation page | Page switching |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Chrome | 74.0.3729.131 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar overlaps main text when zoomed out | OK | OK |
| Firefox | 67.0 (64-bit) | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar centered | OK | OK |
| Edge | 42.17134.1.0 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar centered | OK | OK |
| UC | 6.2.4098.3 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar overlaps main text | OK | OK |
| Opera | 60.0.3255.95 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar overlaps main text when zoomed out | OK | OK |
| Sogou | 8.5.10.30358 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar overlaps main text when zoomed out | OK | OK |
| Cheetah | 6.5.115.18552 | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | OK | Sidebar overlaps main text when zoomed out | OK | OK |

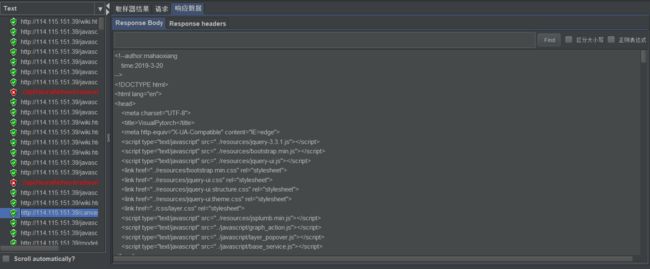

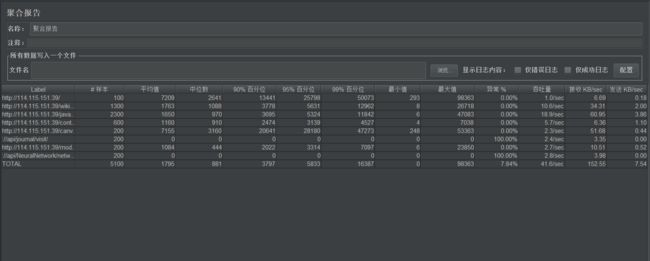

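Stress test

We stress-tested the website with Badboy and JMeter at 100 concurrent users. The scenarios included logging in to view models and viewing the help documentation.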
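JMeter's thread group does the real work in the stress test above. For a quick scripted check, the same idea can be sketched with Python's standard library: a fixed number of concurrent workers issue requests while latency and errors are recorded. `request` is a hypothetical callable standing in for one user action, such as logging in and opening a model. The request count is illustrative, and the helper names are not part of any tool.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(request, total_requests=1000, concurrency=100):
    """Issue `total_requests` calls to `request()` from `concurrency` threads.

    `request` is a hypothetical zero-argument callable that performs one user
    action and raises on failure.
    Returns (error_count, latencies in seconds sorted ascending).
    """
    def timed_call(_):
        start = time.perf_counter()
        try:
            request()
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    errors = sum(1 for ok, _ in results if not ok)
    latencies = sorted(elapsed for _, elapsed in results)
    return errors, latencies


def percentile(sorted_values, p):
    """Nearest-rank percentile (0 < p <= 100) of a sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]
```

A pool of 100 threads approximates 100 concurrent users only if the client machine can keep that many requests in flight. JMeter also reports throughput, which this sketch does not.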

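Exit criteria for the Beta version

In the Beta phase, our goal was to build on the previous version by adding registration and login. This lets users save their work so they do not have to rebuild models repeatedly, and lets them save their progress in an emergency. We also added support for more network layers so that users can build a wider variety of models. We therefore set the exit criteria for this version as follows:

Users can register and log in. After logging in, they can create, view, modify and delete their saved models. Users can combine the new network layers with the existing ones to build more kinds of models, and the generated code files can be downloaded.

The project's core features are now:

- registration and login
- building models by dragging components, connecting them and configuring parameters
- generating the corresponding code from a model and downloading it
- once logged in, saving, modifying and deleting one's own models

In the Beta version we addressed the Alpha version's limited range of supported models and its inability to modify models on the fly. The result is a reasonably complete product with much better usability and user experience. In the next versions we will spend more time enriching the product's content and improving the user experience.

Following agile development principles, once the exit criteria were met we turned to the user experience. We restyled the home page and reworked the help documentation: we improved the help page layout and expanded each section with more precise step-by-step instructions. The help documentation now also walks through building several classic models, which users can view and learn from.

Although we reached our planned goals, this version still has problems. For example, usernames cannot contain Chinese characters or certain special characters at registration, and the UI is not yet attractive enough. Issues like these hurt the product's overall quality.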

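Thanks to everyone's hard work, we achieved the main goals planned for the Beta version. After discussing it as a team, we set the following goals for the next version:

- Move each component's parameter boxes into a parameter panel on the right (similar to draw.io)

- Add the ability to copy components

- Add a feedback feature

- Add an onboarding guide for new users

- Improve the generated code by adding comments that help users understand what needs to be modified and how to modify it

In the time ahead we will keep working hard. Beyond completing the core features, we will give more thought to the user experience, so that we deliver the best product we can.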