阿里云ECS上搭建Hadoop集群环境——使用两台ECS服务器搭建“Cluster mode”的Hadoop集群环境

转载请注明出处:http://blog.csdn.net/dongdong9223/article/details/81275360

本文出自【我是干勾鱼的博客】

Ingredient:

-

Hadoop:hadoop-2.9.1.tar.gz(Apache Hadoop Releases Downloads, All previous releases of Hadoop are available from the Apache release archive site)

-

Java:Java SE Development Kit 8u162(Oracle Java Archive),Linux下安装JDK修改环境变量

之前在:

阿里云ECS上搭建Hadoop集群环境——启动时报错“java.net.BindException: Cannot assign requested address”问题的解决

阿里云ECS上搭建Hadoop集群环境——计算时出现“java.lang.IllegalArgumentException: java.net.UnknownHostException”错误的解决

阿里云ECS上搭建Hadoop集群环境——“启动Hadoop时,datanode没有被启动”问题的解决

阿里云ECS上搭建Hadoop集群环境——设置免密码ssh登陆

这4篇文章里讲述了搭建Hadoop环境时在本地“/etc/hosts”里面的ip域名配置上应该注意的事情,以及如何配置服务器之间的ssh免密码登录,启动Hadoop遇到的一些问题的解决等等,这些都是使用ECS服务器搭建Hadoop时一定要注意的,能够节省搭建过程中的很多精力。这些问题都注意了,就可以完整搭建Hadoop环境了。

1 节点环境介绍

1.1 环境介绍

-

服务器:2台阿里云ECS服务器:1台Master(test7972),1台Slave(test25572)

-

操作系统: Ubuntu 16.04.4 LTS

-

Hadoop:hadoop-2.9.1.tar.gz

-

Java:Java SE Development Kit 8u162

1.2 安装Java

将Java SE Development Kit 8u162在合适位置解压缩,本文中其路径为:

/opt/java/jdk1.8.0_162

设置Java在“/etc/profile”中的环境变量:

# set the java enviroment

export JAVA_HOME=/opt/java/jdk1.8.0_162

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/bin/dt.jar:$JAVA_HOME/lib/tools.jar

1.3 安装ssh与rsync

$sudo apt-get install ssh

$sudo apt-get install rsync

1.4 设置ssh免密码登陆

具体设置方式可以参考阿里云ECS上搭建Hadoop集群环境——设置免密码ssh登陆

2 Hadoop下载

首先下载hadoop-2.9.1.tar.gz,下载之后在合适的位置解压缩即可,笔者这里解压缩之后的路径为:

/opt/hadoop/hadoop-2.9.1

3 设置本地域名

设置本地域名这一步非常关键,ip的本地域名信息配置不好,即有可能造成Hadoop启动出现问题,又有可能造成在使用Hadoop的MapReduce进行计算时报错。如果是在ECS上搭建Hadoop集群环境,那么本文开头提到的之前写的这两篇文章:

阿里云ECS搭建Hadoop集群环境——启动时报错“java.net.BindException: Cannot assign requested address”问题的解决

阿里云ECS搭建Hadoop集群环境——计算时出现“java.lang.IllegalArgumentException: java.net.UnknownHostException”错误的解决

都应该看一下以作参考。

总结一下那就是,在“/etc/hosts”文件中进行域名配置时要遵从2个原则:

-

1 新加域名在前面: 将新添加的Master、Slave服务器ip域名(例如“test7972”),放置在ECS服务器原有本地域名(例如“iZuf67wb***************”)的前面。但是注意ECS服务器原有本地域名(例如“iZuf67wb***************”)不能被删除,因为操作系统别的地方还会使用到。

-

2 IP本机内网,其它外网: 在本机上的操作,都要设置成内网ip;其它机器上的操作,要设置成外网ip。

按照这两个原则,这里配置的两台服务器的域名信息:

- Master:test7972

- Slave:test25572

4 添加Hadoop环境变量

在“/etc/profile”中增加配置:

# hadoop

export HADOOP_HOME=/opt/hadoop/hadoop-2.9.1

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

5 添加Hadoop配置信息

目前共有2台服务器:

- Master:test7972

- Slave:test25572

需要添加的信息,有一些是2台服务器上共同配置的,还有一些是在特定服务器上单独设置的。

5.1 在Master节点的Slave文件中指定Slave节点

编辑文件:

vi vi etc/hadoop/slaves

添加内容:

test25572

5.2 Master、Slave上的共同配置

在Master、Slave上共同添加的配置:

5.2.1 “etc/hadoop/core-site.xml”

编辑文件:

vi etc/hadoop/core-site.xml

添加内容:

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://test7972:9000value>

property>

configuration>

5.2.2 “etc/hadoop/hdfs-site.xml”

编辑文件:

vi etc/hadoop/hdfs-site.xml

添加内容:

<configuration>

<property>

<name>dfs.replicationname>

<value>1value>

property>

configuration>

5.2.3 “etc/hadoop/mapred-site.xml”

编辑文件:

vi etc/hadoop/mapred-site.xml

添加内容:

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

configuration>

5.3 在各节点上指定HDFS文件的存储位置(默认为/tmp)

5.3.1 给Master节点namenode创建目录、赋予权限,编辑文件

Master节点: namenode

创建目录并赋予权限:

mkdir -p /opt/hadoop/hadoop-2.9.1/tmp/dfs/name

chmod -R 777 /opt/hadoop/hadoop-2.9.1/tmp

编辑文件:

vi etc/hadoop/hdfs-site.xml

添加内容:

<property>

<name>dfs.namenode.name.dirname>

<value>file:///opt/hadoop/hadoop-2.9.1/tmp/dfs/namevalue>

property>

5.3.2 给Slave节点datanode创建目录、赋予权限,编辑文件

Slave节点:datanode

创建目录并赋予权限:

mkdir -p /opt/hadoop/hadoop-2.9.1/tmp/dfs/data

chmod -R 777 /opt/hadoop/hadoop-2.9.1/tmp

编辑文件:

vi etc/hadoop/hdfs-site.xml

添加内容:

<property>

<name>dfs.datanode.data.dirname>

<value>file:///opt/hadoop/hadoop-2.9.1/tmp/dfs/datavalue>

property>

5.4 YARN设定

5.4.1 给Master节点resourcemanager信息编辑文件

Master节点: resourcemanager

编辑文件:

vi etc/hadoop/yarn-site.xml

添加内容:

<configuration>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>test7972value>

property>

configuration>

5.4.2 给Slave节点nodemanager信息编辑文件

编辑文件:

vi etc/hadoop/yarn-site.xml

添加内容:

<configuration>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>test7972value>

property>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

configuration>

5.4.3 为了在Master上启动“job history server”,需要Slave节点上添加配置信息

Slave节点:配置Master节点的“job history server”信息

编辑文件:

vi etc/hadoop/mapred-site.xml

添加内容:

<property>

<name>mapreduce.jobhistory.addressname>

<value>test7972:10020value>

property>

5.5 JAVA_HOME指定

本来在/etc/profile文件中指定了JAVA_HOME文件,按说Hadoop应该可以识别到JAVA命令了,但是运行时候还是会报错说找不到,这个时候需要在Master和Slave节点的:

etc/hadoop/hadoop-env.sh

文件中加入以下内容:

export JAVA_HOME=/opt/java/jdk1.8.0_162

5.6 确认一下Master、Slave上的配置文件内容

因为刚才确定修改文件的过程比较杂乱,我们来确认一下Master、Slave这2个服务器上的配置文件内容,如果对Hadoop的各文件配置意义都比较清楚了,可以直接根据这个步骤修改配置文件。

5.6.1 Master节点上的配置文件

Master节点(test7972)上共有4个文件添加了内容,分别为:

- 1 etc/hadoop/core-site.xml

vi etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://test7972:9000value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/opt/hadoop/hadoop-2.9.1/tmpvalue>

property>

configuration>

- 2 etc/hadoop/hdfs-site.xml

vi etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replicationname>

<value>1value>

property>

<property>

<name>dfs.namenode.name.dirname>

<value>file:///opt/hadoop/hadoop-2.9.1/tmp/dfs/namevalue>

property>

configuration>

- 3 etc/hadoop/mapred-site.xml

vi etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

configuration>

- 4 etc/hadoop/yarn-site.xml

vi etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>test7972value>

property>

configuration>

- 5 etc/hadoop/hadoop-env.sh

etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/java/jdk1.8.0_162

5.6.2 Slave节点上的配置文件

Master节点(test25572)上共有4个文件添加了内容,分别为:

- 1 etc/hadoop/core-site.xml

vi etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://test7972:9000value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/opt/hadoop/hadoop-2.9.1/tmpvalue>

property>

configuration>

- 2 etc/hadoop/hdfs-site.xml

vi etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replicationname>

<value>1value>

property>

<property>

<name>dfs.datanode.data.dirname>

<value>file:///opt/hadoop/hadoop-2.9.1/tmp/dfs/datavalue>

property>

configuration>

- 3 etc/hadoop/mapred-site.xml

vi etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

<property>

<name>mapreduce.jobhistory.addressname>

<value>test7972:10020value>

property>

configuration>

- 4 etc/hadoop/yarn-site.xml

vi etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>test7972value>

property>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

configuration>

- 5 etc/hadoop/hadoop-env.sh

etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/java/jdk1.8.0_162

6 格式化HDFS(Master,Slave)

在Master、Slave上均执行格式化命令:

hadoop namenode -format

注意: 有的时候会出现Hadoop启动时,没有启动datanode的情况。可以参考阿里云ECS搭建Hadoop集群环境——“启动Hadoop时,datanode没有被启动”问题的解决来解决。

7 启动Hadoop

7.1 在Master上启动Daemon,Slave上的服务会被同时启动

启动HDFS:

sbin/start-dfs.sh

启动YARN:

sbin/start-yarn.sh

当然也可以一个命令来执行这两个启动操作:

sbin/start-all.sh

执行结果:

root@iZ2ze72w***************:/opt/hadoop/hadoop-2.9.1# ./sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [test7972]

test7972: starting namenode, logging to /opt/hadoop/hadoop-2.9.1/logs/hadoop-root-namenode-iZ2ze72w7p5za2ax3zh81cZ.out

test25572: starting datanode, logging to /opt/hadoop/hadoop-2.9.1/logs/hadoop-root-datanode-iZuf67wbvlyduq07idw3pyZ.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /opt/hadoop/hadoop-2.9.1/logs/hadoop-root-secondarynamenode-iZ2ze72w7p5za2ax3zh81cZ.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop-2.9.1/logs/yarn-root-resourcemanager-iZ2ze72w7p5za2ax3zh81cZ.out

test25572: starting nodemanager, logging to /opt/hadoop/hadoop-2.9.1/logs/yarn-root-nodemanager-iZuf67wbvlyduq07idw3pyZ.out

效果是一样的。

7.2 启动job history server

在Master上执行:

sbin/mr-jobhistory-daemon.sh start historyserver

执行结果:

root@iZ2ze72w***************:/opt/hadoop/hadoop-2.9.1# ./sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /opt/hadoop/hadoop-2.9.1/logs/mapred-root-historyserver-iZ2ze72w7p5za2ax3zh81cZ.out

root@iZ2ze72w7p5za2ax3zh81cZ:/opt/hadoop/hadoop-2.9.1#

7.3 确定启动进程

7.3.1 确定Master上的进程

- 在Master节点上执行:

jps

显示信息:

root@iZ2ze72w***************:/opt/hadoop/hadoop-2.9.1# jps

867 JobHistoryServer

938 Jps

555 ResourceManager

379 SecondaryNameNode

32639 NameNode

可以看到不算jps自己,启动了4个进程。

7.3.2 确定Slave上的进程

- 在Slave节点上执行:

jps

显示信息:

root@iZuf67wb***************:/opt/hadoop/hadoop-2.9.1# jps

26510 Jps

26222 DataNode

26350 NodeManager

可以看到不算jps自己,启动了2个进程。

8 在Master节点上创建HDFS,并拷贝测试文件

当前文件夹路径:

root@iZ2ze72w7p5za2ax3zh81cZ:/opt/hadoop/hadoop-2.9.1# pwd

/opt/hadoop/hadoop-2.9.1

8.1 在Master节点上创建HDFS文件夹

./bin/hdfs dfs -mkdir -p /user/root/input

8.2 拷贝测试文件到HDFS文件夹下

拷贝测试文件:

./bin/hdfs dfs -put etc/hadoop/* /user/root/input

查看拷贝到HDFS下的文件:

./bin/hdfs dfs -ls /user/root/input

9 执行“Hadoop job”进行测试

9.1 监控系统

9.1.1 监听文件输出

虽然执行命令界面也有输出,但一般直接监控输出文件捕获的信息会更清晰,查看输出信息一般监控两个文件:

tail -f logs/hadoop-root-namenode-iZ2ze72w7p5za2ax3zh81cZ.log

tail -f logs/yarn-root-resourcemanager-iZ2ze72w7p5za2ax3zh81cZ.log

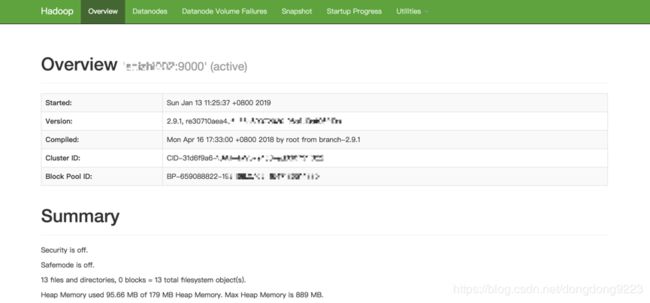

9.1.2 浏览器查看文件系统HDFS的使用情况

首先确保防火墙关闭,如:

[root@test7972 hadoop-2.9.1]# firewall-cmd --state

not running

浏览器输入:

http://test7972:50070/

会跳转至相应页面,如所示:

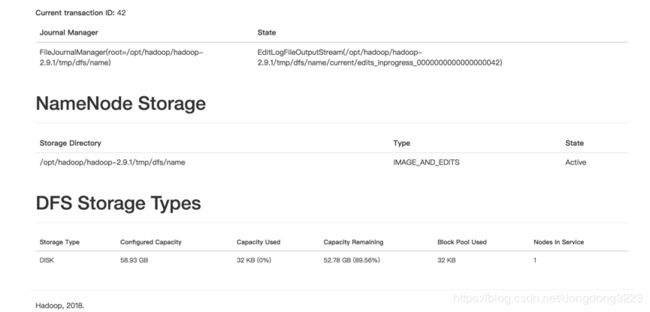

也能够看到HDFS的情况,如图所示:

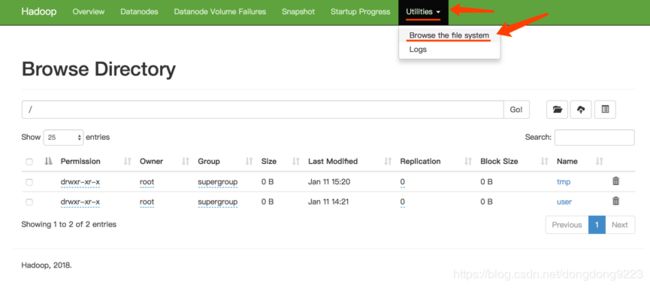

点击上方导航右侧的:

Utilities -> Browse the file system

能够看到HDFS的文件情况,如图所示:

9.2 执行Hadoop job

使用Hadoop自带的一个测试job

./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar grep /user/root/input output ‘dfs[a-z.]+’

这时候输出结果会被保存到“output”文件夹中,注意这里的output其实就是:

/user/{username}/output

由于当前登录用户是root,那么实际文件夹就是:

/user/root/output/

这个文件夹。

如果你的计算正常,是能够在执行命令的界面看到一些计算过程的:

......

18/07/30 16:04:32 INFO mapreduce.Job: The url to track the job: http://test7972: 8088/proxy/application_1532936445384_0006/

18/07/30 16:04:32 INFO mapreduce.Job: Running job: job_1532936445384_0006

18/07/30 16:04:57 INFO mapreduce.Job: Job job_1532936445384_0006 running in uber mode : false

18/07/30 16:04:57 INFO mapreduce.Job: map 0% reduce 0%

18/07/30 16:06:03 INFO mapreduce.Job: map 13% reduce 0%

18/07/30 16:06:04 INFO mapreduce.Job: map 20% reduce 0%

18/07/30 16:07:08 INFO mapreduce.Job: map 27% reduce 0%

18/07/30 16:07:09 INFO mapreduce.Job: map 40% reduce 0%

18/07/30 16:08:12 INFO mapreduce.Job: map 50% reduce 0%

18/07/30 16:08:13 INFO mapreduce.Job: map 57% reduce 0%

18/07/30 16:08:15 INFO mapreduce.Job: map 57% reduce 19%

18/07/30 16:09:11 INFO mapreduce.Job: map 60% reduce 19%

18/07/30 16:09:12 INFO mapreduce.Job: map 67% reduce 19%

18/07/30 16:09:13 INFO mapreduce.Job: map 73% reduce 19%

18/07/30 16:09:18 INFO mapreduce.Job: map 73% reduce 24%

18/07/30 16:10:04 INFO mapreduce.Job: map 77% reduce 24%

18/07/30 16:10:06 INFO mapreduce.Job: map 90% reduce 24%

18/07/30 16:10:07 INFO mapreduce.Job: map 90% reduce 26%

18/07/30 16:10:14 INFO mapreduce.Job: map 90% reduce 30%

18/07/30 16:10:40 INFO mapreduce.Job: map 93% reduce 30%

18/07/30 16:10:44 INFO mapreduce.Job: map 100% reduce 31%

18/07/30 16:10:45 INFO mapreduce.Job: map 100% reduce 100%

18/07/30 16:10:53 INFO mapreduce.Job: Job job_1532936445384_0006 completed successfully

......

18/07/30 16:10:56 INFO mapreduce.Job: The url to track the job: http://test7972: 8088/proxy/application_1532936445384_0007/

18/07/30 16:10:56 INFO mapreduce.Job: Running job: job_1532936445384_0007

18/07/30 16:11:21 INFO mapreduce.Job: Job job_1532936445384_0007 running in uber mode : false

18/07/30 16:11:21 INFO mapreduce.Job: map 0% reduce 0%

18/07/30 16:11:35 INFO mapreduce.Job: map 100% reduce 0%

18/07/30 16:11:48 INFO mapreduce.Job: map 100% reduce 100%

18/07/30 16:11:53 INFO mapreduce.Job: Job job_1532936445384_0007 completed successfully

18/07/30 16:11:53 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=362

FILE: Number of bytes written=395249

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=655

HDFS: Number of bytes written=244

HDFS: Number of read operations=7

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=11800

Total time spent by all reduces in occupied slots (ms)=10025

Total time spent by all map tasks (ms)=11800

Total time spent by all reduce tasks (ms)=10025

Total vcore-milliseconds taken by all map tasks=11800

Total vcore-milliseconds taken by all reduce tasks=10025

Total megabyte-milliseconds taken by all map tasks=12083200

Total megabyte-milliseconds taken by all reduce tasks=10265600

Map-Reduce Framework

Map input records=14

Map output records=14

Map output bytes=328

Map output materialized bytes=362

Input split bytes=129

Combine input records=0

Combine output records=0

Reduce input groups=5

Reduce shuffle bytes=362

Reduce input records=14

Reduce output records=14

Spilled Records=28

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=443

CPU time spent (ms)=1480

Physical memory (bytes) snapshot=367788032

Virtual memory (bytes) snapshot=3771973632

Total committed heap usage (bytes)=170004480

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=526

File Output Format Counters

Bytes Written=244

能够看到这里面有计算成功的提示:

mapreduce.Job: Job job_1532936445384_0007 completed successfully

说明我们的MapReduce计算成功了!

9.3 查看执行结果

执行查询命令:

./bin/hdfs dfs -cat output/*

输出计算结果:

root@iZ2ze72w7p5za2ax3zh81cZ:/opt/hadoop/hadoop-2.9.1# ./bin/hdfs dfs -cat output/*

6 dfs.audit.logger

4 dfs.class

3 dfs.logger

3 dfs.server.namenode.

2 dfs.audit.log.maxbackupindex

2 dfs.period

2 dfs.audit.log.maxfilesize

1 dfs.replication

1 dfs.log

1 dfs.file

1 dfs.servers

1 dfsadmin

1 dfsmetrics.log

1 dfs.namenode.name.dir

10 关闭Hadoop

10.1 Master上关闭Daemon

Master上执行:

sbin/stop-all.sh

10.2 Master上关闭job history server

Master上执行:

sbin/mr-jobhistory-daemon.sh stop historyserver

11 搭建完成

至此,在阿里云ECS上搭建1台Master、1台Slave的Hadoop集群环境就搭建完了!

12 参考

Hadoop系列之(二):Hadoop集群部署

解决Hadoop启动时,没有启动datanode

Hadoop: Setting up a Single Node Cluster.

Hadoop Cluster Setup