python之实战----朴素贝叶斯之手写数字位图

先导入数据,输出看看,在画画图吧

#-*- coding=utf-8 -*-

import numpy as np

from sklearn import datasets,naive_bayes

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

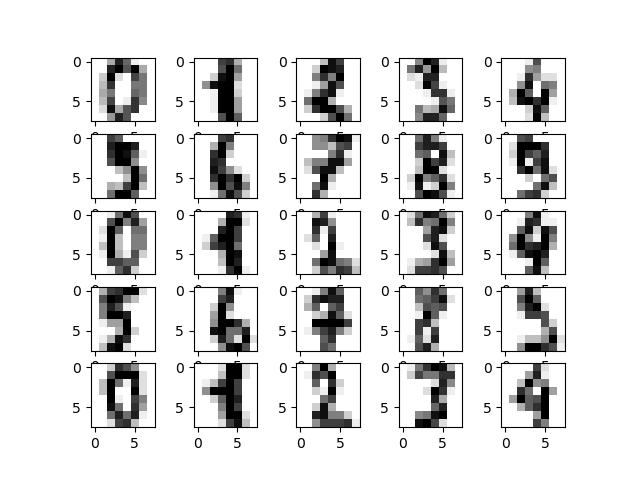

def show_digits():

'''

输出数据集里的数字

'''

digits=datasets.load_digits()

fig=plt.figure()

print("vector from image 0:",digits.data[0])

for i in range(25):

ax=fig.add_subplot(5,5,i+1)

ax.imshow(digits.images[i],cmap=plt.cm.gray_r,interpolation='nearest')#interpolation插值

plt.show()

def load_data():

iris=datasets.load_iris()

X_train=iris.data

y_train=iris.target

return train_test_split(X_train,y_train, test_size=0.25,random_state=0,stratify=y_train)

if __name__=='__main__':

show_digits()输出结果:

PS E:\p> python test.py

('vector from image 0:', array([ 0., 0., 5., 13., 9., 1., 0., 0., 0., 0., 13.,

15., 10., 15., 5., 0., 0., 3., 15., 2., 0., 11.,

8., 0., 0., 4., 12., 0., 0., 8., 8., 0., 0.,

5., 8., 0., 0., 9., 8., 0., 0., 4., 11., 0.,

1., 12., 7., 0., 0., 2., 14., 5., 10., 12., 0.,

0., 0., 0., 6., 13., 10., 0., 0., 0.]))1.特征的条件概率分布满足高斯分布,多项式分布,伯努利(二)

#-*- coding=utf-8 -*-

import numpy as np

from sklearn import datasets,naive_bayes

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

"""

def show_digits():

'''

输出数据集里的数字

'''

digits=datasets.load_digits()

fig=plt.figure()

print("vector from image 0:",digits.data[0])

for i in range(25):

ax=fig.add_subplot(5,5,i+1)

ax.imshow(digits.images[i],cmap=plt.cm.gray_r,interpolation='nearest')#interpolation插值

plt.show()

"""

def load_data():

iris=datasets.load_iris()

X_train=iris.data

y_train=iris.target

return train_test_split(X_train,y_train, test_size=0.25,random_state=0,stratify=y_train)

def test_GaussianNB(*data):

X_train,X_test,y_train,y_test=data

cls=naive_bayes.GaussianNB()

cls.fit(X_train,y_train)

print('GaussianNB Train score :%.2f'%cls.score(X_train,y_train))

print('GaussianNB Test score :%.2f'%cls.score(X_test,y_test))

def test_MultinomialNB(*data):

X_train,X_test,y_train,y_test=data

cls=naive_bayes.MultinomialNB()

cls.fit(X_train,y_train)

print('MultinomialNB Train score :%.2f'%cls.score(X_train,y_train))

print('MultinomialNB Test score :%.2f'%cls.score(X_test,y_test))

def test_BernoulliNB(*data):

X_train,X_test,y_train,y_test=data

cls=naive_bayes.BernoulliNB()

cls.fit(X_train,y_train)

print('BernoulliNB Train score :%.2f'%cls.score(X_train,y_train))

print('BernoulliNB Test score :%.2f'%cls.score(X_test,y_test))

if __name__=='__main__':

X_train,X_test,y_train,y_test=load_data()

test_GaussianNB(X_train,X_test,y_train,y_test)

test_MultinomialNB(X_train,X_test,y_train,y_test)

test_BernoulliNB(X_train,X_test,y_train,y_test)

其结果:

PS E:\p> python test.py

GaussianNB Train score :0.95

GaussianNB Test score :0.97

MultinomialNB Train score :0.96

MultinomialNB Test score :1.00

BernoulliNB Train score :0.34

BernoulliNB Test score :0.32换一个数据集就不一样了

def load_data():

digits=datasets.load_digits()

X_train=digits.data

y_train=digits.target

return train_test_split(X_train,y_train, test_size=0.25,random_state=0,stratify=y_train)对应输出

PS E:\p> python test.py

GaussianNB Train score :0.85

GaussianNB Test score :0.84

MultinomialNB Train score :0.91

MultinomialNB Test score :0.90

BernoulliNB Train score :0.87

BernoulliNB Test score :0.87