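- Reading notes: logic and reframing in reading methods

施吉涛

Let's talk about some reading methodology. When I first set out to write about someone else's reading method, I did not know what to write, because the author writes so "exquisitely" that I felt like a country bumpkin arriving in the city: faced with elegant plating and refined ingredients, I did not know where to begin. The book is 《阅读的方法》 (The Method of Reading). Let's try the "Powerful Brain" part, Section 1: logic. Put simply, logic is the arrangement and order of expression; one step further, it is causality and association. I had in fact read the book through roughly once, but only when I set out to write notes did I realize that the reasoning the author describes is mostly the logic presented in the thing being read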

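- Deep learning, click-through rate prediction: quick read of research papers, 2024-09-14

sp_fyf_2024

deep learning, artificial intelligence

Deep learning, click-through rate prediction: quick read of research papers, 2024-09-14. 1. Deep Target Session Interest Network for Click-Through Rate Prediction. H Zhong, J Ma, X Duan, S Gu, J Yao - 2024 International Joint Conference on Neural Networks, 2024. Abstract: this paper proposes a new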

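- 2022-02-26: today's entry (6)

张雅苑Momo

Consider the following must-have schedule: 1. regular sleep; 2. regular meals; 3. morning and afternoon tea; 4. morning exercise; 5. reading notes; 6. keeping in touch with loved ones and friends. What were today's joys on 2022-02-26? 1: Keeping up the video-channel livestream; today is already day 221/190. Today's speaker shared the ability to temper oneself through practical matters. 2: Keeping up guitar practice; today is already day 25. 3: Today I kept encouraging someone to tidy the house; keep going, keep it up. Today's process was fairly relaxed and pleasant. 4: Today is the fourth day since 老佛爷 was admitted to hospital; father and sons go into battle together, and I look forward to the three of them returning in triumph soon. How to become one's own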

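- Camp 24, Group 2, 锋妈: November 13 homework and reading notes

锋妈

Part 1: takeaways from the course. From the course 《时间管理目标模型课程》 (Time Management Goal Model Course) I mainly learned four points: 1. why set goals; 2. how to set goals; 3. what to do after setting goals; 4. act immediately. After listening, I checked the lecture outline against my own weaknesses and found that my biggest stumbling block is point 4, acting immediately. However grand the goal and however strong the drive, without action to carry it out everything is empty talk. To keep my execution from weakening, and given my current situation, I think goals should be kept as simple and clear, actionable, measurable, and reviewable as possible. Only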

- Semi-supervised and semi-weakly supervised ResNet models

Valar_Morghulis

Billion-scale semi-supervised learning for image classification https://arxiv.org/pdf/1905.00546.pdf https://github.com/facebookresearch/semi-supervised-ImageNet1K-models/ The weights are also available in timm: https://hub.fastgit.org/r

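- [NLP5: RNN, LSTM, and GRU models]

一蓑烟雨紫洛

nlp, rnn, lstm, gru

RNN, LSTM, and GRU models. 1. What is an RNN? An RNN (Recurrent Neural Network) generally takes sequence data as input, captures the relationships between sequence elements through its internal structure, and generally also produces its output as a sequence. The recurrence mechanism lets the hidden-layer result of the previous time step serve as part of the input to the current time step (besides the normal input, the current step's input also includes the previous step's hidden output) and so influence the current step's output. 2. R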

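- He worked to get rich for her; five years later she cost him his life: thoughts on 《了不起的盖茨比》 (The Great Gatsby)

一切来得及

《大亨小传》? Another translated title. During the Spring Festival I took part in the "7-day CP reading" event run by 网易蜗牛读书 (NetEase Snail Reading). The rule is to be paired up in the mini-program, with two people reading one book together. I chose The Great Gatsby, a book I had long heard of and long wanted to read but had never started. The old saying is right: work is less tiring when men and women team up. I did not expect the same to hold for reading. In under six days I finished the whole book and wrote nearly 30 reading notes. Meanwhile my partner seemed busy making money and never got started. Still, I thank her for giving me the strength to read! After finishing the book, I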

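- #LLM basics | Prompt #2.3: classifying query tasks | intent analysis (Classification)

向日葵花籽儿

LLM beginner tutorial notes, prompt, classification, database

In this chapter we focus on the importance of evaluating input tasks, which bears on the quality and safety of the whole system. When handling tasks that involve multiple independent instruction sets for different situations, it has many advantages to classify the query type first and use that classification to decide which instructions to apply. This can be done by defining fixed categories and hard-coding the instructions relevant to handling tasks in each category. For example, when building a customer service assistant, classifying the query type and choosing instructions based on that classification can be crucial. Specifically, if the user asks to close their account, the secondary instruction might be to add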
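The fixed-category idea in this excerpt can be sketched roughly in Java. This is only an illustration under stated assumptions: the category names, the keyword rules in `classify`, and the instruction strings are all hypothetical, and in the tutorial's setting the classification step would presumably be done by the LLM itself, not by keyword matching.

```java
import java.util.EnumMap;
import java.util.Locale;
import java.util.Map;

public class QueryRouter {
    // Hypothetical fixed categories for a customer-service assistant.
    enum Category { ACCOUNT_MANAGEMENT, BILLING, GENERAL_INQUIRY }

    // Hard-coded instructions per category (illustrative wording only).
    private static final Map<Category, String> INSTRUCTIONS = new EnumMap<>(Category.class);
    static {
        INSTRUCTIONS.put(Category.ACCOUNT_MANAGEMENT, "Follow the account-closure instructions.");
        INSTRUCTIONS.put(Category.BILLING, "Follow the billing instructions.");
        INSTRUCTIONS.put(Category.GENERAL_INQUIRY, "Answer using the general help instructions.");
    }

    // Stand-in classifier: keyword rules replace the LLM call purely for illustration.
    static Category classify(String query) {
        String q = query.toLowerCase(Locale.ROOT);
        if (q.contains("close") && q.contains("account")) return Category.ACCOUNT_MANAGEMENT;
        if (q.contains("invoice") || q.contains("charge")) return Category.BILLING;
        return Category.GENERAL_INQUIRY;
    }

    // Step 2: look up the hard-coded instructions for the classified category.
    static String instructionsFor(String query) {
        return INSTRUCTIONS.get(classify(query));
    }

    public static void main(String[] args) {
        System.out.println(instructionsFor("Please close my account"));
    }
}
```

Keeping the category set closed (an enum) means every query maps to exactly one known instruction set, which is the property the excerpt relies on.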

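- Exploring the mysteries of deep learning: a fantastic journey from theory to practice

小周不想卷

deep learning

Contents. Introduction: through the fog of intelligence. 1. The fantastical origins of deep learning: from the perceptron to neural networks. 1.1 The perceptron's first lesson. 1.2 The birth and evolution of neural networks. 1.3 The rise of deep learning. 2. The core magic of deep learning: neural network architectures. 2.1 Feedforward neural network (Feedforward Neural Network, FNN). 2.2 Convolutional neural network (CNN). 2.3 Recurrent neural network (RNN) and its variants (LSTM, GRU). 2.4 Generative adversarial network (GAN). 3. The spellbook of deep learning: algorithms and training. 3.1 Loss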

- A1/A2: S.O.S. Urgences, Chapitre 1

自观问渠

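Reading notes, Chapitre 1. 1. Allô! Hello; how a company operator answers the phone: Allô bonjour, followed by the company name. 2. S.O.S.: sends doctors out on home visits. 3. Go ahead! Je vous écoute. / Je t'écoute. Usage: I'm listening, go ahead; private conversation, formal occasions. 4. C'est pour qqn: use pour to express purpose. 5. Usage of Il faut: Je peux venir, mais il faut une adresse. il faut + noun: something is required. Il f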

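- CycleGAN study: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks, 2017.

屎山搬运工

deep learning, CycleGAN, GAN, style transfer

[Overview] Image-to-image translation generally needs large amounts of paired data, which are extremely time-consuming and laborious to collect. This article therefore introduces CycleGAN, an image translation technique that works without paired examples. The article has five parts, covering: the image translation problem; the principle behind CycleGAN's unpaired image translation; the CycleGAN architecture; applications of CycleGAN; and caveats. Image-to-image translation involves generating a new synthetic version of a given image with a specific modification, such as converting a summer landscape into a winter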

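- arXiv survey paper "Graph Neural Networks: A Review of Methods and Applications"

硅谷秋水

autonomous driving

The GNN survey paper "Graph Neural Networks: A Review of Methods and Applications", uploaded to arXiv on July 10, 2019. Abstract: many learning tasks need to handle graph data, which contains rich relational information among elements. Modeling physical systems, learning molecular fingerprints, predicting protein interfaces, and classifying diseases all require a model that learns from graph input. In other domains that learn from non-structural data such as text and images, reasoning over extracted structures, such as the dependency relations of sentences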

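- C# Ethernet port communication (via the Sockets classes)

萨达大

c#, server, network, Ethernet port communication, host computer

Contents: 1. Import Sockets 2. Define TcpClient 3. Connect to the port 4. Send data 5. Close the connection. 1. Import Sockets: using System.Net.Sockets; 2. Define TcpClient: private TcpClient tcpClient; // TcpClient instance private NetworkStream stream; // network stream used to communicate with the server 3. Connect to the port: tcpClient = new TcpClie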

- TextCNN: a convolutional neural network model for text

一只天蝎

programming languages: Python, cnn, deep learning, machine learning

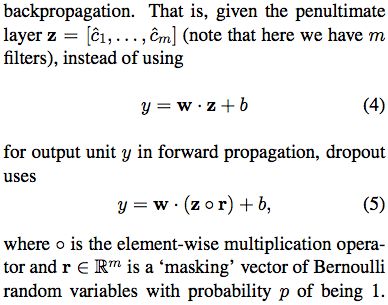

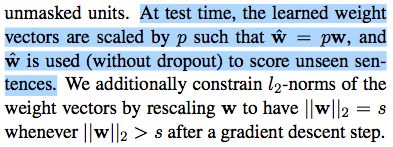

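Contents: What is TextCNN; Defining the TextCNN class; Initializing a model instance; Printing the model. What is TextCNN: TextCNN (Text Convolutional Neural Network) is a convolutional neural network (CNN) for processing text data. It applies convolutions over the text to extract local features, which capture local patterns such as n-grams (n consecutive words or characters). Defining the TextCNN class: import torch.nn as n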

- [Kaiming] Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification

MTandHJ

neural networks

Contents: Overview; Main content; PReLU; Kaiming initialization; Forward case; Backward case. He K, Zhang X, Ren S, et al. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification[C]. international conference on computer vision, 2015: 1

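- Reading notes: there is a kind of woman

张海佩happy

From @寒山说, with thanks! There is a kind of woman who at first glance seems gentle, modest, courteous, and approachable, yet on getting closer you find she carries a built-in sense of distance. That distance does not come from pride; it is because her inner world is so rich and she is overly sensitive. She feels that people's joys and sorrows are not alike, so she chooses to keep her distance. Her sympathy is strong and her empathy stronger; she deeply understands other people's pain and never hurts anyone lightly. She is courteous in her dealings, neither flattering those above her nor belittling those below, not pleased by external gain, not saddened by personal loss; she is gentle to the bone. She has a spiritual world of her own, and she may lose herself in a line from a novel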

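- Deep neural networks explained: principles, architectures, and applications

阿达C

event, dnn, computer networks, artificial intelligence, neural networks, machine learning, deep learning

The deep neural network (Deep Neural Network, DNN) is one of the most important and widely used techniques in machine learning. Modeled on the structure of neurons in the human brain, it handles complex nonlinear problems through multiple layers of connected neurons and training. Deep neural networks have shown strong performance in image recognition, natural language processing, speech recognition, and other fields. This article examines their basic principles, common architectures, and practical applications. 1. Basic principles of deep neural networks. 1.1 Neurons and perceptrons. The neuron is the basic building block of a deep neural network. A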

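- CentOS 9 network interface configuration files

码哝小鱼

linux, operations, linux networking

1. Introduction to networking in CentOS Stream 9. In earlier CentOS versions, NetworkManager stored network configuration files in ifcfg format in the /etc/sysconfig/network-scripts/ directory. CentOS Stream 9 has deprecated the ifcfg format, and by default NetworkManager no longer creates new configuration files in it. Starting with CentOS Stream 9, the keyfile format (based on INI files) is used, and NetworkManager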

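- Sentiment-lexicon-based sentiment analysis and emotion computing in Python

yava_free

python, big data, artificial intelligence

1. The Dalian University of Technology Chinese sentiment lexicon. Sentiment analysis (Sentiment Analysis) and emotion classification (Emotion Classification) are both important text-mining techniques. The basic sentiment analysis workflow, shown in the figure below, usually includes: crawling text with a custom crawler; Chinese word segmentation and part-of-speech tagging with Jieba; defining a sentiment lexicon and extracting the sentiment words in each line of text; building a sentiment matrix from the sentiment words and computing sentiment scores; and evaluating the results, which includes scaling the sentiment scores to between 0.5 and -0.5 and visualizing them.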

- Writing a C++ interface that calls a PyTorch model and building a DLL for use from .NET

编程日记✧

pytorch, artificial intelligence, python, .net, c#, c++

1. Save the PyTorch model in TorchScript format. 1) Create pytorch2TorchScript.py; sample code: import torch import torch.nn as nn import argparse from networks.seg_modeling import model as ViT_seg from networks.seg_modeling import CONFIGS as CONFIGS_

- Paper reading notes (19): YOLO9000: Better, Faster, Stronger

__Sunshine__

notes, YOLO9000, detection, classification

We introduce YOLO9000, a state-of-the-art, real-time object detection system that can detect over 9000 object categories. First we propose various improvements to the YOLO detection method, both novel and drawn from prior work. The im

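- Paper reading notes: DINOv2: Learning Robust Visual Features without Supervision

小夏refresh

papers, computer vision, deep learning, paper reading notes, artificial intelligence

DINOv2: Learning Robust Visual Features without Supervision. Paper: https://arxiv.org/abs/2304.07193 Code: https://github.com/facebookresearch/dinov2 Abstract: models pretrained on large amounts of data have brought breakthroughs in NLP and opened the way for similar foundation models in computer vision. These models can, by producing general-purpose visual features (i.e., without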

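- Applying deep learning algorithms to graph algorithms (graph convolutional networks, GCN, and graph autoencoders, GAE)

大嘤三喵军团

deep learning, algorithms, networks

Applying deep learning algorithms to graph algorithms. 1. Graph Convolutional Networks (GCN). A graph convolutional network (GCN) generalizes convolutional neural networks (Convolutional Neural Networks, CNN) to graph-structured data. GCNs are widely used for node classification, graph classification, link prediction, and similar tasks. Advantages and benefits. Flexibility: GCNs can handle irregular, non-uniform data structures such as social networks, molecular structures, and transportation networks. Efficiency: GCNs use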

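- Mark Minervini, 《动量大师 超级交易员圆桌访谈录》 (Momentum Masters: A Roundtable Interview with Super Traders): reading notes 59

小二菜园

Question 59: Do you use profit margins or return on equity (ROE) in your analysis? Mark Minervini: Yes. I like to see expanding margins. Sometimes this can be the catalyst behind a company whose results are improving while sales are negative. But as I said, without sales revenue you can only improve earnings for so long. Return on equity is something you should use to compare your stock with other stocks in the same industry; generally, the better stocks will have an ROE of 15-17% or higher. David Ryan: Both are metrics worth watching, and they are also what I look into further about a company's earnings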

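- SDN: A Systems Approach | 7. Leaf-spine fabric

DeepNoMind

With the explosive growth of Internet and data center traffic, SDN has gradually replaced static routing and switching equipment as the mainstream way to build networks. This series is the Chinese edition of the free e-book 《Software-Defined Networks: A Systems Approach》 and gives a complete account of the concepts, principles, architecture, and implementation of SDN. Original: Software-Defined Networks: A Systems Approach, Chapter 7: Leaf-Spine Fabric. This chapter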

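- 《以色列——一个民族的重生》 (Israel: The Rebirth of a Nation), chapters 4 to 6: reading notes

惠尔好我

Under Ottoman rule, Arabs already realized that the Jews living in Palestine would change the region's "Arab character". During the first wave of Jewish immigration, the ideas and modernity brought by European Jews clashed with the ideologies of local Jews and Arabs. It can be said that the different notions of state and society held by locals and newcomers, their different feelings about honor and memory, and the difficulty of communicating in many other respects became major causes of the later long-running conflict between Jews and Arabs. By contrast, the Chinese nation's strong inclusiveness, capacity for assimilation, and capacity for coexistence have given it great vitality. In order to build bridges among the parties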

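- Graph-based recommendation algorithms (12): Handling Information Loss of Graph Neural Networks for Session-based Recommendation

阿瑟_TJRS

Preface. KDD 2020. A GNN method for session-based recommendation that analyzes the shortcomings of existing GNN methods and improves on them. It targets two problems, lossy session encoding and ineffective long-range dependency capturing: GNN-based methods lose part of the sequence information, mainly when the session is converted to a graph and during the permutation-invariant aggregation in message propagation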

- ITU-T V-Series Recommendations

技术无疆

Other, compression, standards, protocols, interface, network, algorithm

The ITU-T V-Series Recommendations on Data communication over the telephone network specify the protocols that govern approved modem communication standards and interfaces.[1] Note: the bis and ter suffixes are ITU-T standard desig

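- 《少有人走的路》 (The Road Less Traveled), Part 3, belief and worldview: reading notes

芦絮

The essence of love is extending the self: one must enter unknown territory, give up the backward, outdated self, trample stale and obsolete ideas underfoot, and abandon a narrow outlook on life. To do all this we must question, doubt, and challenge the past; only then can we set out on the sacred road to freedom! The author illustrates this with three patients: all habits and religious beliefs basically come from one's parents and from an unhappy childhood, so a person's mind is closely bound up with family, friends, and environment. We should feed ourselves positive things, fill ourselves with positive energy, and be sunny people!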

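- Some thoughts on deep forest

Y.G Bingo

machine learning methods, machine learning, neural networks

In early 2017, Professor 周志华 (Zhou Zhihua) of Nanjing University uploaded a paper titled "Deep Forest: Towards An Alternative to Deep Neural Networks". It caused a great stir and the media debated it widely, as if the climate of machine learning were about to change. Not so: Professor Zhou explained on Weibo that the paper merely opens another window for machine learning, another way of thinking, and is not really meant to replace deep neural networks (DNN). Below I briefly summarize my understanding of the paper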

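- Multithreaded programming: depositing and withdrawing money

周凡杨

java, thread, multithreading, deposit, withdraw

The living-allowance problem goes like this: a student needs living expenses every month, and a parent deposits a period's worth in advance. The parent and the student share a single account. The student withdraws part of the money each time and, when the account is empty, notifies the parent to deposit. The parent does not deposit while the account still has money, and deposits only once it is empty.

Problem analysis: the problem has three entities (student, parent, and bank account), so the program needs three classes. There is only one bank account, and the student and the parent operate on that same account. The student's behavior is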
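The shared-account coordination in this problem can be sketched with `wait`/`notifyAll`. This is a minimal sketch, not the article's own code: the class and method names, the amounts, and the choice to model the parent and student as lambdas instead of separate classes are all assumptions made to keep the example short.

```java
public class AllowanceDemo {
    /** The single shared account; both threads synchronize on it. */
    static class Account {
        private int balance = 0;

        // Parent: deposit only when the account is empty.
        synchronized void deposit(int amount) throws InterruptedException {
            while (balance > 0) {
                wait();               // money is left, so do not deposit yet
            }
            balance = amount;
            notifyAll();              // wake the student
        }

        // Student: take part of the money; when empty, notify the parent and wait.
        synchronized int withdraw(int amount) throws InterruptedException {
            while (balance == 0) {
                notifyAll();          // tell the parent the account is empty
                wait();
            }
            int taken = Math.min(amount, balance);
            balance -= taken;
            if (balance == 0) notifyAll();
            return taken;
        }
    }

    // Runs the scenario and returns how many withdrawals the student made.
    static int simulate(int deposits, int depositAmount, int chunk) {
        Account account = new Account();
        int[] withdrawals = {0};
        Thread parent = new Thread(() -> {
            try {
                for (int i = 0; i < deposits; i++) account.deposit(depositAmount);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread student = new Thread(() -> {
            try {
                int remaining = deposits * depositAmount;
                while (remaining > 0) {
                    remaining -= account.withdraw(chunk);
                    withdrawals[0]++;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        parent.start();
        student.start();
        try {
            parent.join();
            student.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return withdrawals[0];
    }

    public static void main(String[] args) {
        System.out.println(simulate(2, 300, 100)); // prints 6
    }
}
```

Both waits sit inside `while` loops, so a thread rechecks the balance after every wake-up instead of assuming the condition it was waiting for now holds.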

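- Converting between arrays and Lists in Java

征客丶

JavaScript, java, jsonp

1. Converting a List to an array. (Here the List is actually an ArrayList.)

Call ArrayList's toArray method.

toArray

public <T> T[] toArray(T[] a) returns an array containing all of the elements in this list in proper sequence; the runtime type of the returned array is that of the specified array. If the list fits in the specified array, it is returned in that array. Otherwise, according to the runtime type of the specified array and the size of this list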
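A short Java sketch of both directions of the conversion follows. The class and helper names are made up for illustration; `List.toArray(T[])`, `Arrays.asList`, and the `ArrayList` copy constructor are standard library calls.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ListArrayDemo {
    // List -> array: a zero-length argument makes toArray allocate
    // a new array with the same runtime type and the list's size.
    static String[] toArray(List<String> list) {
        return list.toArray(new String[0]);
    }

    // Array -> List: copy into an ArrayList so the result can grow
    // (Arrays.asList alone returns a fixed-size view backed by the array).
    static List<String> toList(String[] array) {
        return new ArrayList<>(Arrays.asList(array));
    }

    public static void main(String[] args) {
        List<String> names = new ArrayList<>(Arrays.asList("a", "b", "c"));
        String[] asArray = toArray(names);
        System.out.println(Arrays.toString(asArray)); // prints [a, b, c]

        List<String> back = toList(asArray);
        back.add("d");
        System.out.println(back); // prints [a, b, c, d]
    }
}
```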

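- Shell flow control

daizj

flow control, if else, while, case, shell

Shell flow control

Unlike Java, PHP, and other languages, a flow-control branch in sh cannot be empty. For example (the following is PHP flow control):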

<?php

if (isset($_GET["q"])) {
    search($_GET["q"]);
} else {
    // do nothing
}

You cannot write it this way in sh/bash: if the else branch has no statements to execute, leave the else out altogether, like this. if else if

if statement

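- Linux server basics for beginners, part 2

周凡杨

Linux, simple, operations

1. Searching man pages by keyword: man -k keyword, where -k is the option and keyword is the term to search for. Suppose you want to use the whoami command but remember only its first three characters, who; you can run man -k who to search the man pages for the keyword who. [haself@HA5-DZ26 ~]$ man -k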

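- Socket chat room: building the server

朱辉辉33

socket

Because we are building a chat room, there will be multiple clients. We handle each client with its own thread, and set up a server that reads messages from and sends messages to every client.

Let's write the client thread first.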

public class ChatSocket extends Thread{

Socket socket;

public ChatSocket(Socket socket){

this.sock

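- Approach and method for building an insurance company decision analysis system with finereport

老A不折腾

finereport, finance, insurance, analysis system, reporting system, project development

A decision analysis system presents data pages, commonly called reports. Reports are linked to reports, and data to data, by defined logic. The system is a platform where business staff view and analyze data, and also one that supports leaders' operating decisions. The underlying data determines the analysis built on top of it, so building a decision analysis system generally includes data-layer processing (building a data warehouse).

Project background

Insurance companies are usually highly computerized, and nearly all have business processing systems (such as a group business processing system, a legacy business processing system, an individual agent system, and so on) and data service systems (through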

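- Keeping a page at the topmost level of the iframe

林鹤霄

index.jsp contains an iframe, but once the session expires, login.jsp must always be shown at the topmost level of the iframe. I could not get this working at first; after mulling it over repeatedly I found a solution, which I am sharing here.

index.jsp ---> mainly the highlighted line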

<html>

<iframe name="top" ></iframe>

<ifram

- Recovering data with the MySQL binlog

aigo

mysql

1. First make sure binlog is configured in my.ini:

# binlog

log_bin = D:/mysql-5.6.21-winx64/log/binlog/mysql-bin.log

log_bin_index = D:/mysql-5.6.21-winx64/log/binlog/mysql-bin.index

log_error = D:/mysql-5.6.21-win

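- Packaging an OCX into a CAB file with automatic installation and automatic upgrade

alxw4616

ocx, cab

Recently I have had a project that needs an ocx control

(What is ocx?

http://baike.baidu.com/view/393671.htm)

During production I ran into the following problems.

1. How can the ocx be installed automatically?

a) How to sign it?

b) How to package it?

c) How to install it to a specified directory?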

2.

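- Using HashMap and PriorityQueue

百合不是茶

HashMap, PriorityQueue

I had already studied HashMap, but I was rusty when using it recently, so I have just gone over it again.

HashMap stores K, V pairs: key, value

put() adds an element

// Below, HashMap is used to remove duplicates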

package com.hashMapandPriorityQueue;

import java.util.H

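- JDK 1.5 return value example

bijian1013

java, thread, java multithreading, return value

Callable interface:

A task that returns a result and may throw an exception. Implementors define a single method with no arguments called call.

The Callable interface is similar to Runnable, in that both are designed for classes whose instances may be executed by another thread. A Runnable, however, does not return a result and cannot throw a checked exception.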
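To make the contrast with Runnable concrete, here is a small Java sketch. It is an illustration under assumptions: the class name and the summing task are invented, and the lambda syntax needs Java 8 or later (on JDK 1.5 the same task would be written as an anonymous `Callable<Integer>` class).

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class CallableDemo {
    // Sums 1..n on a worker thread and hands the result back through a Future.
    static int sumOnWorker(int n) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Callable<Integer> task = () -> {
                // Unlike Runnable.run(), call() may throw a checked exception.
                if (n < 0) throw new Exception("n must be non-negative");
                int sum = 0;
                for (int i = 1; i <= n; i++) sum += i;
                return sum;           // and it returns a value
            };
            Future<Integer> future = pool.submit(task);
            return future.get();      // blocks until call() has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            // An exception thrown inside call() arrives wrapped in ExecutionException.
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOnWorker(100)); // prints 5050
    }
}
```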

ExecutorService interface

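- Dynamic compilation in AngularJS directives (for cases with asynchronous requests): nested directives have no effect

bijian1013

JavaScript, AngularJS

In a directive's link function there is an $http request. When the request completes, I dynamically call element.append('......') based on the returned value. The content displays without any problem, but the HTML I assemble dynamically contains ng-click, and the displayed content never runs the method bound by ng-click!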

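- [Java Generics 2] Java generics explained: restricting generic parameter types with extends

bit1129

extend

In the first article, the generic class was defined as follows: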

public class Generics<M, S, N> {

// M, S, N are generic type parameters

}

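A generic class defined this way has two basic problems:

1. Instance fields declared with a generic parameter, such as private M m = null;: because the concrete type of M is unknown inside the class, the class's methods cannot call anything on m beyond what Object provides, which is just like declaring pri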
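A brief Java sketch of the difference an `extends` bound makes is given here. The class names `Box` and `ComparableBox` are hypothetical and not taken from the article; `Comparable` is used only as a convenient standard bound.

```java
public class BoundedGenerics {
    // Unbounded parameter: inside the class, value can only be used as an Object.
    static class Box<M> {
        private final M value;
        Box(M value) { this.value = value; }
        String describe() { return value.toString(); } // only Object methods compile here
    }

    // Bounded parameter: the extends clause tells the compiler that M has compareTo.
    static class ComparableBox<M extends Comparable<M>> {
        private final M value;
        ComparableBox(M value) { this.value = value; }
        boolean isGreaterThan(M other) { return value.compareTo(other) > 0; }
    }

    public static void main(String[] args) {
        System.out.println(new Box<>(42).describe());                // prints 42
        System.out.println(new ComparableBox<>(5).isGreaterThan(3)); // prints true
    }
}
```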

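- [HBase 13] HBase key points summary

bit1129

hbase

1. What conditions trigger a flush of data from the MemStore to disk?

a. An explicit flush call, for example flush 'mytable'

b. The amount of data in the MemStore exceeds the configured flush size, hbase.hregion.memstore.flush.size (default: 64M)

2. What makes up a Region?

A Region consists of several Stores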

- Shell script for defending a server against DDoS attacks

ronin47

mkdir /root/bin

vi /root/bin/dropip.sh

#!/bin/bash
/bin/netstat -na|grep ESTABLISHED|awk '{print $5}'|awk -F: '{print $1}'|sort|uniq -c|sort -rn|head -10|grep -v -E '192.168|127.0'|awk '{if($2!=null&a

- Java programmer's survival handbook: craps, a simple game

bylijinnan

java

import java.util.Random;

public class CrapsGame {

/**

*

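* A simple gambling game with the following rules:

* The player rolls two dice, each showing 1 to 6. If the first roll totals 7 or 11, the player wins;

* if it totals 2, 3, or 12, the player loses;

* for any other total, record that first total and keep rolling until the total equals the first total rolled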

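- Fixing the Tomcat startup message "NB: JAVA_HOME should point to a JDK not a JRE"

开窍的石头

JAVA_HOME

With an unzipped copy of Tomcat, starting it from Eclipse works normally, but starting it by clicking startup.bat fails with an error.

The error is as follows: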

The JAVA_HOME environment variable is not defined correctly

This environment variable is needed to run this program

NB: JAVA_HOME shou

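- [Operating system kernels] Operating systems and the Internet

comsci

operating systems

Let me state up front: the issues I raise here are not aimed at any particular vendor; I am only describing some of my views on operating system technology

An operating system is system software that is very closely tied to the hardware layer. In principle, such system software should be developed by the vendors that design CPUs and hardware boards, with no direct relationship to software companies; that is, operating systems should be designed and developed by hardware vendors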

- Rich text editor ckeditor_4.4.7: basic usage, with IE11 support

cuityang

rich text editor

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8" />

<title>知识库内容编辑</tit

- Property null not found

darrenzhu

datagrid, Flex, Advanced, property null

When you get an error message like "Property null not found ***", try fixing it as follows:

1) If you are using AdvancedDataGrid, make sure you only update the data in the data prov

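- Using the string replacement function in MySQL

dcj3sjt126com

mysql, functions, replace

Requirement: replace every . with _ in the values of one column of a table

The original data is site.title site.keywords ....

After replacement it should be site_title site_keywords

The SQL statement used is: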

updat

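- Starting MySQL from the terminal on a Mac

dcj3sjt126com

mysql, mac

First download it from the official site: http://www.mysql.com/downloads/

I downloaded the 5.6.11 dmg and installed it. After installation, if you want to work with SQL from the terminal, you first have to type the long path: /usr/local/mysql/bin/mysql

That is inconvenient; I wanted to type mysql -uroot -p1 just as in cmd on Windows. I looked it up online, and it can be done.

Open the terminal and type: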

1

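- Using Gson, part 1 (Gson)

eksliang

json, gson

If you repost, please credit the source: http://eksliang.iteye.com/blog/2175401

1. Overview

Structurally, all data in JSON can ultimately be broken down into three types:

The first type is the scalar, that is, a single string or number, such as the string "ickes".

The second type is the sequence, also called an array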

- Android tidbits 4

gundumw100

android

47 Android tips

http://www.open-open.com/lib/view/open1422676091314.html

Seven useful Android code snippets (part 1)

http://www.cnblogs.com/over140/archive/2012/09/26/2611999.html

http://www.cnblogs.com/over140/arch

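- JavaWeb: basic JSP syntax

ihuning

javaweb

Contents

JSP template elements

JSP expressions

JSP scriptlets

EL expressions

JSP comments

Escaping special character sequences

How to find errors in a JSP page

JSP template elements

Static HTML content in a JSP page is called JSP template elements; within the static HTML content you can embed JSP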

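- App Extension Programming Guide (iOS8/OS X v10.10), Chinese edition

啸笑天

ext

When iOS 8.0 and OS X v10.10 were released, a brand-new concept appeared: the app extension. As the name suggests, app extensions let developers extend an app's custom functionality and content so that users can access it while using other apps. You can build an app extension to perform a specific task, and once users enable the extension they can perform that task in multiple contexts. For example, you provide one that lets users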

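- Implementing an unlimited-depth tree structure in SQL Server

macroli

oracle, sql, SQL Server

The table structure is as follows:

| id | path | title | sort (order) |
| --- | --- | --- | --- |
| 1 | 0 | Home | 0 |
| 2 | 0,1 | News | 1 |
| 3 | 0,2 | JAVA | 2 |
| 4 | 0,3 | JSP | 3 |
| 5 | 0,2,3 | Industry news | 2 |
| 6 | 0,2,3 | Domestic news | 1 |

Create a stored procedure to implement this; to use it on a page, you can set a return variable to pass the value back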

create procedure test

as

begin

decla

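- Centering a div, an img, and text with CSS; vertically centering a div with CSS

qiaolevip

众观千象, learning never ends, a little progress every day, css

/********** Centering a div with CSS **********/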

div.center {

width: 100px;

margin: 0 auto;

}

/********** Centering an img with CSS **********/

img.center {

display: block;

margin-left: auto;

margin-right: auto;

}

- Common Oracle operations (practical)

吃猫的鱼

oracle

SQL>select text from all_source where owner=user and name=upper('&plsql_name');

SQL>select * from user_ind_columns where index_name=upper('&index_name');

Restore table records to before a specified point in time

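- Encrypting and decrypting data with RSA on iOS

witcheryne

ios, rsa, iPhone, objective c

RSA is an asymmetric encryption algorithm, commonly used to encrypt data in transit. Combined with a message digest algorithm, it can also be used to sign files.

This article discusses how to transmit encrypted data using RSA on iOS.

Environment for this article

mac os

openssl-1.0.1j; openssl 1.x is required, and installing it with [homebrew](http://brew.sh/) is recommended.

Java 8

How RSA works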

RS