tensorflow+XGBoost 人脸识别

1 训练集提取特征值 把特征值保存于 df_train.csv 中

# -*- coding: utf-8 -*-

from os import listdir

from os.path import isfile, join

from scipy import misc

import tensorflow as tf

import numpy as np

import pandas as pd

import facenet

import pickle

from sklearn.svm import SVC

import os

from sklearn import svm

tf.Graph().as_default()

sess = tf.Session()

with sess.as_default():

# Load the model

facenet.load_model('20170512-110547/20170512-110547.pb')

# Get input and output tensors

images_placeholder = tf.get_default_graph().get_tensor_by_name("input:0")

embeddings = tf.get_default_graph().get_tensor_by_name("embeddings:0")

phase_train_placeholder = tf.get_default_graph().get_tensor_by_name("phase_train:0")

print(images_placeholder,'--------------',embeddings,'-------------',phase_train_placeholder)

#f1path = 'D:/faceRecognition/real_time_face_recognition/faceimg/zhang/f9.jpg'

#f1img = misc.imread(f1path)

#prewhiten_face = facenet.prewhiten(f1img)

#feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

#f1emb = sess.run(embeddings, feed_dict=feed_dict)[0]

#

#mypath = 'D:/faceRecognition/real_time_face_recognition/faceimg/li'

#

#for f in listdir(mypath):

# fn = join(mypath,f)

# if isfile(fn):

# img = misc.imread(fn)

# prewhiten_face = facenet.prewhiten(img)

# feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

# f2emb = sess.run(embeddings, feed_dict=feed_dict)[0]

# dist = np.sqrt(np.sum(np.square(np.subtract(f1emb, f2emb))))

# print('%s: %f' %(f, dist))

faceimgpath = 'faceimg2'

emb_array = []

labels = []

class_names = []

classifier_filename_exp = 'faceimg2/c.pkl'

detecter_filename_exp = 'faceimg2/d.pkl'

classimgspath = []

train = []

test =[]

train_embs = []

train_labels = []

#test_embs = []

#test_labels = []

test_set = []

i = 0

for d in listdir(faceimgpath):

dn = join(faceimgpath,d)

if os.path.isdir(dn):

for f in listdir(dn):

fn = join(dn, f)

if isfile(fn):

# img = misc.imread(fn)

# prewhiten_face = facenet.prewhiten(img)

# feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

# emb = sess.run(embeddings, feed_dict=feed_dict)[0]

# emb_array.append(emb)

# labels.append(i)

classimgspath.append((fn, i))

np.random.shuffle(classimgspath)

#train.append(classimgspath[:int(len(classimgspath)*0.8)])

train += classimgspath[:int(len(classimgspath)*0.8)]

test += classimgspath[int(len(classimgspath)*0.8):]

i += 1

class_names.append(d)

print(d)

# print('classimgspath:',classimgspath,'\ntrain',train,'\ntest',test)

#for item in classimgspath:

# print(item[0])

#print(train)

#for item in train:

# print(item[0])

for item in train:

img = misc.imread(item[0])

prewhiten_face = facenet.prewhiten(img)

feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

emb = sess.run(embeddings, feed_dict=feed_dict)[0]

train_embs.append(emb)

train_labels.append(item[1])

# data_lable={'lable':train_labels}

df_TZ=pd.DataFrame(train_embs)

df_TZ['label']=train_labels

df_TZ.to_csv('df_train.csv',index=None)

# print(df_TZ)

2 测试集提取特征值保存于 df_test.csv 中

# -*- coding: utf-8 -*-

import os

import pickle

import facenet

import numpy as np

import pandas as pd

from os import listdir

from scipy import misc

from sklearn import svm

import tensorflow as tf

from sklearn.svm import SVC

from os.path import isfile, join

tf.Graph().as_default()

sess = tf.Session()

with sess.as_default():

# Load the model

facenet.load_model('20170512-110547/20170512-110547.pb')

# Get input and output tensors

images_placeholder = tf.get_default_graph().get_tensor_by_name("input:0")

embeddings = tf.get_default_graph().get_tensor_by_name("embeddings:0")

phase_train_placeholder = tf.get_default_graph().get_tensor_by_name("phase_train:0")

faceimgpath = 'faceimg2'

emb_array = []

labels = []

class_names = []

classifier_filename_exp = 'faceimg2/c.pkl'

detecter_filename_exp = 'faceimg2/d.pkl'

classimgspath = []

train = []

test =[]

train_embs = []

train_labels = []

test_embs = []

test_labels = []

test_set = []

i = 0

for d in listdir(faceimgpath):

dn = join(faceimgpath,d)

if os.path.isdir(dn):

for f in listdir(dn):

fn = join(dn, f)

if isfile(fn):

# img = misc.imread(fn)

# prewhiten_face = facenet.prewhiten(img)

# feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

# emb = sess.run(embeddings, feed_dict=feed_dict)[0]

# emb_array.append(emb)

# labels.append(i)

classimgspath.append((fn, i))

np.random.shuffle(classimgspath)

#train.append(classimgspath[:int(len(classimgspath)*0.8)])

train += classimgspath[:int(len(classimgspath)*0.8)]

test += classimgspath[int(len(classimgspath)*0.8):]

i += 1

class_names.append(d)

print(d)

for item in test:

img = misc.imread(item[0])

prewhiten_face = facenet.prewhiten(img)

feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

emb = sess.run(embeddings, feed_dict=feed_dict)[0]

test_embs.append(emb)

test_labels.append(item[1])

df_test=pd.DataFrame(test_embs)

df_test['label']=test_labels

df_test.to_csv('df_test.csv',index=None)

'''

testimg = 'faceimg/zhang/f30.jpg'

img = misc.imread(testimg)

prewhiten_face = facenet.prewhiten(img)

feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

emb = sess.run(embeddings, feed_dict=feed_dict)[0]

print(emb)

'''

3 训练 XGBoost 模型并保存模型于 xgb.model 中

import pandas as pd

import xgboost as xgb

from sklearn.preprocessing import MinMaxScaler

# 训练集1

train0 = pd.read_csv('df_train.csv')

# train0.label.replace(-1,0,inplace=True)

train0.drop_duplicates(inplace=True)

# 读取测试数据

test = pd.read_csv('df_test.csv')

train_y = train0.label

train_x = train0.drop(['label'],axis=1)

test_x = test.drop(['label'],axis=1)

# print(train0.shape,train_x.shape,train_y.shape)

# print('-------------------------------------')

# print(test_x.shape)

# test_preds = test[['user_id','coupon_id','date_received']]

test_preds = test.drop(['label'],axis=1)

xgb_train = xgb.DMatrix(train_x,label=train_y)

test = xgb.DMatrix(test_x)

# print(train_x.shape,train_y.shape,test_x.shape)

params={'booster':'gbtree',

#gblinear

'silent':1,

# 输出日志 默认0为输出较全信息,1输出部分日志

'objective': 'multi:softprob',

'num_class':3,

# 'eval_metric':'auc',

'gamma':0.1,

#-----------调参-------------

# 模型在默认情况下,对于一个节点的划分只有在其loss function 得到结果大于0的情况下才进行,而gamma 给定了所需的最低loss function的值

# gamma值使得算法更conservation,且其值依赖于loss function ,在模型中应该进行调参

'min_child_weight':1.1,

#-----------调参-------------

# 孩子节点中最小的样本权重和。如果一个叶子节点的样本权重和小于min_child_weight则拆分过程结束。在现行回归模型中,这个参数是指建立每个模型所需要的最小样本数。该成熟越大算法越conservative。即调大这个参数能够控制过拟合

'max_depth':6,

#----------调参--------------

# 树的深度越大,则对数据的拟合程度越高(过拟合程度也越高)。即该参数也是控制过拟合。通常取值:3-10

'lambda':10,

#lambda [default=0] L2 正则的惩罚系数。用于处理XGBoost的正则化部分。通常不使用,但可以用来降低过拟合

'subsample':0.8,

# 用于训练模型的子样本占整个样本集合的比例。如果设置为0.5则意味着XGBoost将随机的从整个样本集合中抽取出50%的子样本建立树模型,这能够防止过拟合。

'colsample_bytree':0.7,

#在建立树时对特征随机采样的比例。缺省值为1,取值范围:(0,1]

# 'colsample_bylevel':0.7,#决定每次节点划分时子样例的比例,通常不使用,因为subsample和colsample_bytree已经可以起到相同的作用了

'eta': 0.01,

#为了防止过拟合,更新过程中用到的收缩步长,通常最后设置eta为0.01~0.2

'tree_method':'exact',

'seed':0

#随机数的种子。缺省值为0可以用于产生可重复的结果(每次取一样的seed即可得到相同的随机划分)

# 'nthread':12

#XGBoost运行时的线程数。缺省值是当前系统可以获得的最大线程数

}

# 训练并保存模型

watchlist = [(xgb_train,'train')]

model = xgb.train(params,xgb_train,num_boost_round=10000,evals=watchlist)

model.save_model('xgb.model')

# 读取模型 查看预测效果

model_bst = xgb.Booster({'nthread':4}) #init model

model_bst.load_model("xgb.model") # load data

#predict test set

df_pre = pd.DataFrame(model_bst.predict(test))

print(df_pre)

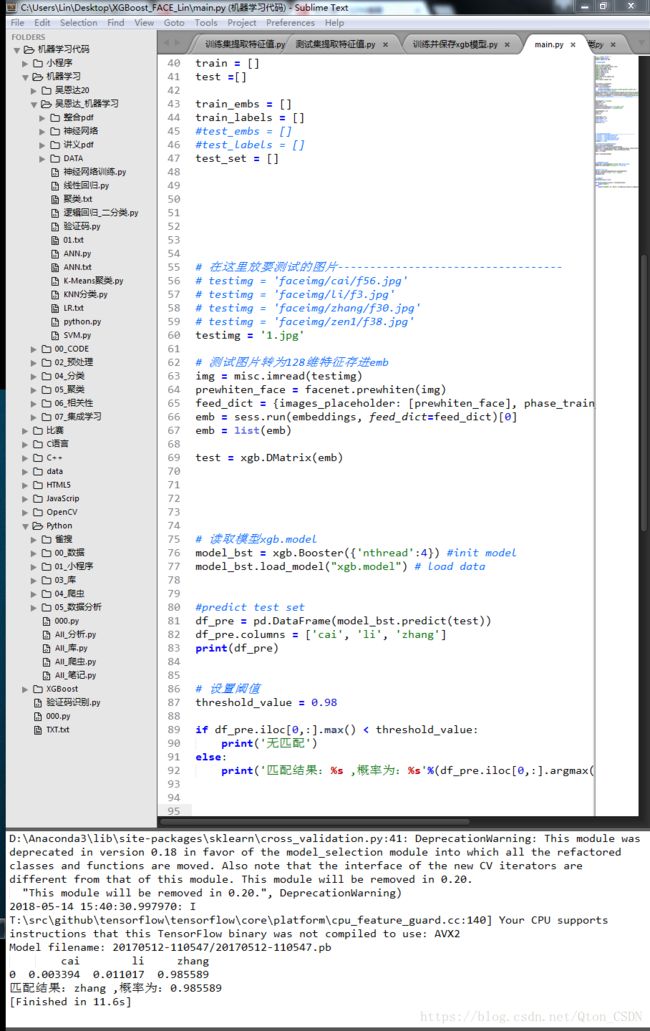

4 读取 xgb.model 模型进行预测 (main.py)

# -*- coding: utf-8 -*-

import pandas as pd

import xgboost as xgb

# 读取测试数据

from os import listdir

from os.path import isfile, join

from scipy import misc

import tensorflow as tf

import numpy as np

import pandas as pd

import facenet

import pickle

from sklearn.svm import SVC

import os

from sklearn import svm

tf.Graph().as_default()

sess = tf.Session()

with sess.as_default():

# Load the model

facenet.load_model('20170512-110547/20170512-110547.pb')

# Get input and output tensors

images_placeholder = tf.get_default_graph().get_tensor_by_name("input:0")

embeddings = tf.get_default_graph().get_tensor_by_name("embeddings:0")

phase_train_placeholder = tf.get_default_graph().get_tensor_by_name("phase_train:0")

# print(images_placeholder,'--------------',embeddings,'-------------',phase_train_placeholder)

faceimgpath = 'faceimg2'

emb_array = []

labels = []

class_names = []

classifier_filename_exp = 'faceimg2/c.pkl'

detecter_filename_exp = 'faceimg2/d.pkl'

classimgspath = []

train = []

test =[]

train_embs = []

train_labels = []

#test_embs = []

#test_labels = []

test_set = []

# 在这里放要测试的图片-----------------------------------

# testimg = 'faceimg/cai/f56.jpg'

# testimg = 'faceimg/li/f3.jpg'

# testimg = 'faceimg/zhang/f30.jpg'

# testimg = 'faceimg/zen1/f38.jpg'

testimg = '1.jpg'

# 测试图片转为128维特征存进emb

img = misc.imread(testimg)

prewhiten_face = facenet.prewhiten(img)

feed_dict = {images_placeholder: [prewhiten_face], phase_train_placeholder: False}

emb = sess.run(embeddings, feed_dict=feed_dict)[0]

emb = list(emb)

test = xgb.DMatrix(emb)

# 读取模型xgb.model

model_bst = xgb.Booster({'nthread':4}) #init model

model_bst.load_model("xgb.model") # load data

#predict test set

df_pre = pd.DataFrame(model_bst.predict(test))

df_pre.columns = ['cai', 'li', 'zhang']

print(df_pre)

# 设置阈值

threshold_value = 0.98

if df_pre.iloc[0,:].max() < threshold_value:

print('无匹配')

else:

print('匹配结果:%s ,概率为:%s'%(df_pre.iloc[0,:].argmax(),df_pre.iloc[0,:].max()))

运行结果: