[OpenCV] Stereo Matching Algorithms: StereoBM / StereoSGBM / StereoVar

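1. Summary of OpenCV's three stereo matching algorithms for computing disparity maps

2. Stereo matching algorithms

3. Introduction to the basic principles of stereo matching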

http://www.cnblogs.com/Crazod/p/5326756.html

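This is my first blog post. I want to turn some things I wrote earlier into technical posts and move them over here bit by bit. Here is an introduction to the basic principles of stereo matching, which I have been working on: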


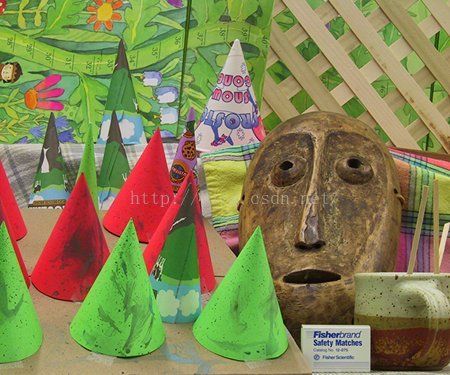

| Figure 1.1 cones_left.jpg | Figure 1.2 cones_left.jpg |

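To mimic the way human eyes capture a three-dimensional scene and judge how near or far objects are, stereo matching uses two cameras in place of the eyes. From two very similar images it recovers the disparity of each point, from which the distance between each object and the cameras is computed, yielding a depth map.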


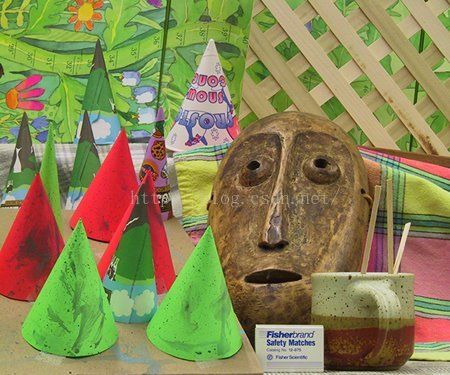

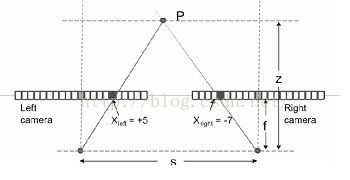

| Figure 2: Position of the same point in the two cameras | Figure 3: Similar-triangles principle |

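The calculation works as follows:

When two cameras placed on the same horizontal line capture a scene, the same object appears in both images, but at different coordinates relative to each camera's center, as shown in Figure 2. Xleft is the object's position relative to the left camera's center and Xright is its position relative to the right camera's center. The two cameras are a distance s apart, the focal length is f, and the object P lies at distance z from the cameras; z is the depth. If the two images are overlaid, the projection of P in the left camera and its projection in the right camera are separated by |Xleft| + |Xright|. This separation is called the disparity d, and the similar triangles in Figure 3 give z = sf/d. In other words, once d is known for every pixel, the depth map follows.

Computing d requires matching the two images, that is, finding where the object P appears in each of them. Matching relies on cost computation: among points on the same horizontal line of the two images, the pair with the smallest error is taken to be the images of the same object. Because a single pixel carries too little information, the comparison is usually widened to a neighborhood around each pixel.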
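As a rough illustration of the cost computation and the z = sf/d relation described above, the sketch below matches one pixel along a single scanline using a sum of absolute differences (SAD) over a small window, then converts the resulting disparity to depth. The names `sadCost`, `bestDisparity` and `depthFromDisparity` are made up for this example, the two rows are assumed to be rectified and of equal length, and window-based SAD is only one possible cost. It is not how OpenCV implements its matchers.

```cpp
#include <cassert>
#include <cstdlib>
#include <limits>
#include <vector>

// One image row of grayscale intensities (hypothetical helper type).
using Row = std::vector<int>;

// Sum of absolute differences between a window centred at xl in the left row
// and a window of the same size centred at xr in the right row.
int sadCost(const Row& left, const Row& right, int xl, int xr, int half) {
    int cost = 0;
    for (int k = -half; k <= half; ++k)
        cost += std::abs(left[xl + k] - right[xr + k]);
    return cost;
}

// Disparity of left pixel x: the shift d in [0, maxDisp] with the lowest SAD.
// A point at column x in the left row is searched at column x - d in the right row.
int bestDisparity(const Row& left, const Row& right, int x, int half, int maxDisp) {
    assert(left.size() == right.size());
    assert(x - half >= 0 && x + half < static_cast<int>(left.size()));
    int best = 0;
    int bestCost = std::numeric_limits<int>::max();
    // Stop early when the shifted window would leave the right row.
    for (int d = 0; d <= maxDisp && x - d - half >= 0; ++d) {
        int cost = sadCost(left, right, x, x - d, half);
        if (cost < bestCost) {
            bestCost = cost;
            best = d;
        }
    }
    return best;
}

// Depth from disparity, z = s * f / d, with baseline s and focal length f in pixels.
// Returns a negative value when d <= 0, where the depth is undefined.
double depthFromDisparity(double s, double f, double d) {
    return d > 0 ? s * f / d : -1.0;
}
```

With s = 0.5, f = 600 pixels and d = 3, `depthFromDisparity` returns 100 in the units of s. A larger window (`half`) makes the match more robust in textured areas at the cost of blurring depth edges, which is the trade-off behind the block-size parameters in the listing that follows.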

OpenCV 2 source code:

```cpp
// OpenCVTest.cpp : Defines the entry point for the console application.
//

#include "stdafx.h"

// The header name on the original include line was lost; these standard
// headers cover what the code below uses.
#include <cstdio>   // printf, fopen, fprintf, fclose
#include <cmath>    // fabs
#include <cfloat>   // FLT_EPSILON

/*
 *  stereo_match.cpp
 *  calibration
 *
 *  Created by Victor Eruhimov on 1/18/10.
 *  Copyright 2010 Argus Corp. All rights reserved.
 *
 */

#include "opencv2/calib3d/calib3d.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/contrib/contrib.hpp"

using namespace cv;

static void print_help()
{
    printf("\nDemo stereo matching converting L and R images into disparity and point clouds\n");
    printf("\nUsage: stereo_match <left_image> <right_image> [--algorithm=bm|sgbm|hh|var] [--blocksize=<block_size>]\n"
           "[--max-disparity=<max_disparity>] [--scale=<scale_factor>] [-i <intrinsic_filename>] [-e <extrinsic_filename>]\n"
           "[--no-display] [-o <disparity_image>] [-p <point_cloud_file>]\n");
}

// Write a 3-channel float point cloud to a text file, one "x y z" per line.
static void saveXYZ(const char* filename, const Mat& mat)
{
    const double max_z = 1.0e4;
    FILE* fp = fopen(filename, "wt");
    if (!fp)
        return; // could not open the output file
    for (int y = 0; y < mat.rows; y++)
    {
        for (int x = 0; x < mat.cols; x++)
        {
            Vec3f point = mat.at<Vec3f>(y, x);
            // Skip points with missing or implausibly large depth.
            if (fabs(point[2] - max_z) < FLT_EPSILON || fabs(point[2]) > max_z) continue;
            fprintf(fp, "%f %f %f\n", point[0], point[1], point[2]);
        }
    }
    fclose(fp);
}

int _tmain(int argc, _TCHAR* argv[])
{
    // Option strings from the original sample; unused here because the
    // inputs are hard-coded below.
    const char* algorithm_opt = "--algorithm=";
    const char* maxdisp_opt = "--max-disparity=";
    const char* blocksize_opt = "--blocksize=";
    const char* nodisplay_opt = "--no-display";
    const char* scale_opt = "--scale=";

    //if (argc < 3)
    //{
    //    print_help();
    //    return 0;
    //}

    const char* img1_filename = 0;
    const char* img2_filename = 0;
    const char* intrinsic_filename = 0;
    const char* extrinsic_filename = 0;
    const char* disparity_filename = 0;
    const char* point_cloud_filename = 0;

    enum { STEREO_BM = 0, STEREO_SGBM = 1, STEREO_HH = 2, STEREO_VAR = 3 };
    int alg = STEREO_SGBM;  // change this to select another algorithm
    int SADWindowSize = 0, numberOfDisparities = 0;
    bool no_display = false;
    float scale = 1.f;

    StereoBM bm;
    StereoSGBM sgbm;
    StereoVar var;

    // Hard-coded input images.
    /*img1_filename = "tsukuba_l.png";
    img2_filename = "tsukuba_r.png";*/
    img1_filename = "01.jpg";
    img2_filename = "02.jpg";

    // StereoBM needs grayscale input (0); the others load the image as-is (-1).
    int color_mode = alg == STEREO_BM ? 0 : -1;
    Mat img1 = imread(img1_filename, color_mode);
    Mat img2 = imread(img2_filename, color_mode);
    if (img1.empty() || img2.empty())
    {
        printf("Could not load %s or %s\n", img1_filename, img2_filename);
        return -1;
    }

    Size img_size = img1.size();
    Rect roi1, roi2;
    Mat Q;

    // Default search range: width / 8, rounded up to a multiple of 16.
    numberOfDisparities = numberOfDisparities > 0 ? numberOfDisparities : ((img_size.width / 8) + 15) & -16;

    // Block matching (StereoBM) parameters.
    bm.state->roi1 = roi1;
    bm.state->roi2 = roi2;
    bm.state->preFilterCap = 31;
    bm.state->SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 9;
    bm.state->minDisparity = 0;
    bm.state->numberOfDisparities = numberOfDisparities;
    bm.state->textureThreshold = 10;
    bm.state->uniquenessRatio = 15;
    bm.state->speckleWindowSize = 100;
    bm.state->speckleRange = 32;
    bm.state->disp12MaxDiff = 1;

    // Semi-global block matching (StereoSGBM) parameters.
    sgbm.preFilterCap = 63;
    sgbm.SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 3;
    int cn = img1.channels();
    sgbm.P1 = 8 * cn * sgbm.SADWindowSize * sgbm.SADWindowSize;
    sgbm.P2 = 32 * cn * sgbm.SADWindowSize * sgbm.SADWindowSize;
    sgbm.minDisparity = 0;
    sgbm.numberOfDisparities = numberOfDisparities;
    sgbm.uniquenessRatio = 10;
    sgbm.speckleWindowSize = bm.state->speckleWindowSize;
    sgbm.speckleRange = bm.state->speckleRange;
    sgbm.disp12MaxDiff = 1;
    sgbm.fullDP = alg == STEREO_HH;

    // Variational matching (StereoVar) parameters.
    var.levels = 3;                                 // ignored with USE_AUTO_PARAMS
    var.pyrScale = 0.5;                             // ignored with USE_AUTO_PARAMS
    var.nIt = 25;
    var.minDisp = -numberOfDisparities;
    var.maxDisp = 0;
    var.poly_n = 3;
    var.poly_sigma = 0.0;
    var.fi = 15.0f;
    var.lambda = 0.03f;
    var.penalization = var.PENALIZATION_TICHONOV;   // ignored with USE_AUTO_PARAMS
    var.cycle = var.CYCLE_V;                        // ignored with USE_AUTO_PARAMS
    var.flags = var.USE_SMART_ID | var.USE_AUTO_PARAMS | var.USE_INITIAL_DISPARITY | var.USE_MEDIAN_FILTERING;

    Mat disp, disp8;
    //Mat img1p, img2p, dispp;
    //copyMakeBorder(img1, img1p, 0, 0, numberOfDisparities, 0, IPL_BORDER_REPLICATE);
    //copyMakeBorder(img2, img2p, 0, 0, numberOfDisparities, 0, IPL_BORDER_REPLICATE);

    // Run the selected matcher and time it.
    int64 t = getTickCount();
    if (alg == STEREO_BM)
        bm(img1, img2, disp);
    else if (alg == STEREO_VAR)
        var(img1, img2, disp);
    else if (alg == STEREO_SGBM || alg == STEREO_HH)
        sgbm(img1, img2, disp);
    t = getTickCount() - t;
    printf("Time elapsed: %fms\n", t * 1000 / getTickFrequency());

    //disp = dispp.colRange(numberOfDisparities, img1p.cols);
    // BM/SGBM disparities are scaled by 16; rescale them to 0..255 for display.
    if (alg != STEREO_VAR)
        disp.convertTo(disp8, CV_8U, 255 / (numberOfDisparities * 16.));
    else
        disp.convertTo(disp8, CV_8U);

    if (!no_display)
    {
        namedWindow("left", 1);
        imshow("left", img1);
        namedWindow("right", 1);
        imshow("right", img2);
        namedWindow("disparity", 0);
        imshow("disparity", disp8);
        imwrite("result.bmp", disp8);
        printf("press any key to continue...");
        fflush(stdout);
        waitKey();
        printf("\n");
    }

    return 0;
}
```
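Two expressions in the listing above are easy to misread: the default value of `numberOfDisparities`, `((img_size.width / 8) + 15) & -16`, and the scale factor `255 / (numberOfDisparities*16.)` passed to `convertTo`. The sketch below restates both in plain C++ without OpenCV. The function names are hypothetical, it assumes the matcher output is the 16-bit fixed-point format with four fractional bits that StereoBM and StereoSGBM produce, and its rounding only approximates what `convertTo` does for exact .5 ties.

```cpp
#include <cassert>
#include <cstdint>

// Round n (n >= 0) up to the next multiple of 16. In two's complement, -16 has
// the same bit pattern as ~15, so "& -16" clears the low four bits.
int roundUpTo16(int n) {
    assert(n >= 0);
    return (n + 15) & -16;
}

// Default search range used in the listing: one eighth of the image width,
// rounded up to a multiple of 16.
int defaultNumDisparities(int imageWidth) {
    return roundUpTo16(imageWidth / 8);
}

// Convert a raw fixed-point disparity (scaled by 16) to pixels.
double rawToPixels(std::int16_t raw) {
    return raw / 16.0;
}

// Map a raw disparity to an 8-bit gray level: raw * 255 / (numDisparities * 16),
// rounded to nearest and clamped to [0, 255].
unsigned char rawToGray(std::int16_t raw, int numDisparities) {
    assert(numDisparities > 0);
    double v = raw * 255.0 / (numDisparities * 16.0);
    if (v < 0.0) return 0;
    if (v > 255.0) return 255;
    return static_cast<unsigned char>(v + 0.5);
}
```

For a 640-pixel-wide image, `defaultNumDisparities(640)` gives 80, and a raw value of 40 corresponds to a disparity of 2.5 pixels. Negative raw values, which the block matchers use to mark pixels without a valid match, map to black.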


https://blog.csdn.net/taily_duan/article/details/81214815