A Second Neural Network with tensorflow_gpu 1.9.0: Binary Classification of Movie Reviews

This example comes from the official TensorFlow tutorial.

The code for this binary classifier of movie reviews, built on tensorflow_gpu 1.9.0, is as follows:

#!/usr/bin/env python
import tensorflow as tf
from tensorflow import keras
import numpy as np
import matplotlib.pyplot as plt

print(tf.__version__)

# Download the IMDB dataset
imdb = keras.datasets.imdb
(train_data, train_labels), (test_data,
                             test_labels) = imdb.load_data(num_words=10000)

# Explore the data
print("Training entries: {}, labels: {}".format(
    len(train_data), len(train_labels)))
print(train_data[0])
# Notebook-style expression; it prints nothing when run as a script
len(train_data[0]), len(train_data[1])

# A dictionary mapping words to an integer index
word_index = imdb.get_word_index()

# The first indices are reserved
word_index = {k: (v + 3) for k, v in word_index.items()}
word_index["<PAD>"] = 0
word_index["<START>"] = 1
word_index["<UNK>"] = 2  # unknown
word_index["UNUSED"] = 3

reverse_word_index = dict([(value, key)
                           for (key, value) in word_index.items()])


def decode_review(text):
    return ' '.join([reverse_word_index.get(i, '?') for i in text])


decode_review(train_data[0])

# Prepare the data
# The reviews, arrays of integers, must be converted to tensors before being
# fed into the neural network.
# Since the movie reviews must be the same length, we use the pad_sequences
# function to standardize the lengths.
train_data = keras.preprocessing.sequence.pad_sequences(train_data,
                                                        value=word_index["<PAD>"],
                                                        padding='post',
                                                        maxlen=256)
test_data = keras.preprocessing.sequence.pad_sequences(test_data,
                                                       value=word_index["<PAD>"],
                                                       padding='post',
                                                       maxlen=256)
len(train_data[0]), len(train_data[1])
print(train_data[0])

# Build the model
# Input shape is the vocabulary count used for the movie reviews (10,000 words)
vocab_size = 10000

model = keras.Sequential()
model.add(keras.layers.Embedding(vocab_size, 16))
model.add(keras.layers.GlobalAveragePooling1D())
model.add(keras.layers.Dense(16, activation=tf.nn.relu))
model.add(keras.layers.Dense(1, activation=tf.nn.sigmoid))

model.summary()

# Loss function and optimizer
model.compile(optimizer=tf.train.AdamOptimizer(),
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Create a validation set
x_val = train_data[:10000]
partial_x_train = train_data[10000:]

y_val = train_labels[:10000]
partial_y_train = train_labels[10000:]

# Train the model
history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=40,
                    batch_size=512,
                    validation_data=(x_val, y_val),
                    verbose=1)

# Evaluate the model
results = model.evaluate(test_data, test_labels)
print(results)

# Create a graph of accuracy and loss over time
history_dict = history.history
history_dict.keys()

acc = history_dict['acc']
val_acc = history_dict['val_acc']
loss = history_dict['loss']
val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

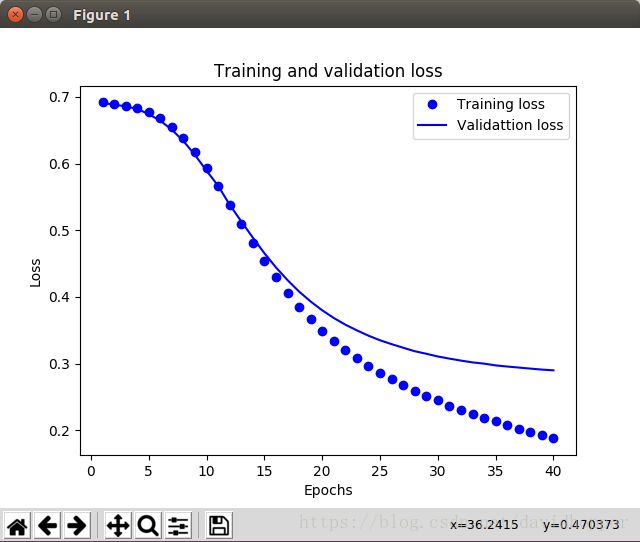

plt.figure()
# "bo" is for "blue dot"
plt.plot(epochs, loss, 'bo', label='Training loss')
# "b" is for "solid blue line"
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()

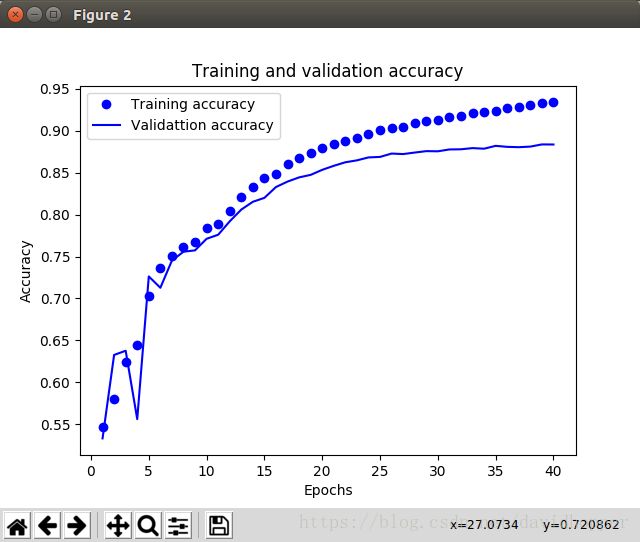

plt.figure()
# "bo" is for "blue dot"
plt.plot(epochs, acc, 'bo', label='Training accuracy')
# "b" is for "solid blue line"
plt.plot(epochs, val_acc, 'b', label='Validation accuracy')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()

plt.show()
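The index shift applied to `word_index` in the listing can be hard to picture, so here is a minimal, self-contained sketch of it. It assumes a made-up three-word vocabulary, `toy_word_index`, in place of the real mapping returned by `imdb.get_word_index()`; the reserved token names `<PAD>`, `<START>` and `<UNK>` are the ones used in the official tutorial.

```python
# Toy vocabulary standing in for imdb.get_word_index(); the words and ranks
# are invented for illustration only.
toy_word_index = {"the": 1, "film": 2, "great": 3}

# Shift every index by 3 so that 0..3 are free for the reserved tokens
# (index 3 is reserved but not used in this sketch).
word_index = {word: rank + 3 for word, rank in toy_word_index.items()}
word_index["<PAD>"] = 0
word_index["<START>"] = 1
word_index["<UNK>"] = 2

reverse_word_index = {index: word for word, index in word_index.items()}


def decode_review(encoded):
    """Map a list of integer indices back to a space-separated string."""
    return " ".join(reverse_word_index.get(i, "?") for i in encoded)


encoded = [1, 4, 5, 2, 6, 0, 0]
decoded = decode_review(encoded)
print(decoded)
```

Every encoded review starts with index 1, rare words outside the 10,000-word vocabulary appear as index 2, and index 0 only shows up after padding.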

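Before running this program, install tensorflow_gpu 1.9.0 by following my other blog post, "How to Install tensorflow_gpu 1.9.0 on Ubuntu 16.04", and then install the numpy and matplotlib packages. You also need to install python-tk. The commands are as follows: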

sudo pip install numpy
sudo pip install matplotlib
sudo apt-get install python-tk

The output is as follows:

1.9.0

Training entries: 25000, labels: 25000

[1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32]

[   1   14   22   16   43  530  973 1622 1385   65  458 4468   66 3941
    4  173   36  256    5   25  100   43  838  112   50  670    2    9
   35  480  284    5  150    4  172  112  167    2  336  385   39    4
  172 4536 1111   17  546   38   13  447    4  192   50   16    6  147
 2025   19   14   22    4 1920 4613  469    4   22   71   87   12   16
   43  530   38   76   15   13 1247    4   22   17  515   17   12   16
  626   18    2    5   62  386   12    8  316    8  106    5    4 2223
 5244   16  480   66 3785   33    4  130   12   16   38  619    5   25
  124   51   36  135   48   25 1415   33    6   22   12  215   28   77
   52    5   14  407   16   82    2    8    4  107  117 5952   15  256
    4    2    7 3766    5  723   36   71   43  530  476   26  400  317
   46    7    4    2 1029   13  104   88    4  381   15  297   98   32
 2071   56   26  141    6  194 7486   18    4  226   22   21  134  476
   26  480    5  144   30 5535   18   51   36   28  224   92   25  104
    4  226   65   16   38 1334   88   12   16  283    5   16 4472  113
  103   32   15   16 5345   19  178   32    0    0    0    0    0    0
    0    0    0    0    0    0    0    0    0    0    0    0    0    0
    0    0    0    0    0    0    0    0    0    0    0    0    0    0
    0    0    0    0]
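The zeros at the end of the array come from `pad_sequences` with `padding='post'`: the first review has 218 tokens, so 38 zeros are appended to reach `maxlen=256`. The sketch below reproduces that behaviour in plain Python. `pad_post` is a hypothetical helper written for illustration, not part of Keras, and it assumes the Keras default `truncating='pre'`, under which a sequence longer than `maxlen` keeps its last `maxlen` entries.

```python
def pad_post(seq, maxlen, value=0):
    """Pad at the end, truncate from the front (hypothetical helper)."""
    trimmed = list(seq)[-maxlen:]  # keep at most the last maxlen items
    return trimmed + [value] * (maxlen - len(trimmed))


short = pad_post([1, 14, 22], maxlen=5)
long_ = pad_post(range(10), maxlen=4)
# A 218-token review with no zeros in it, padded to 256 entries
n_zeros = pad_post(range(1, 219), maxlen=256).count(0)
print(short, long_, n_zeros)
```

Because the script does not pass a `truncating` argument, reviews longer than 256 tokens lose their opening words rather than their closing ones.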

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (None, None, 16)          160000
_________________________________________________________________
global_average_pooling1d (Gl (None, 16)                0
_________________________________________________________________
dense (Dense)                (None, 16)                272
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 17
=================================================================
Total params: 160,289
Trainable params: 160,289
Non-trainable params: 0
_________________________________________________________________
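The parameter counts in the summary can be checked by hand. The embedding layer stores one 16-dimensional vector per vocabulary entry, each dense layer has one weight per input-output pair plus one bias per output, and the pooling layer has no weights. A short sketch of the arithmetic, with variable names chosen here for illustration:

```python
vocab_size, embed_dim = 10000, 16

embedding_params = vocab_size * embed_dim  # one 16-d vector per word
dense_params = embed_dim * 16 + 16         # 16x16 weights + 16 biases
output_params = 16 * 1 + 1                 # 16 weights + 1 bias

total = embedding_params + dense_params + output_params
print(embedding_params, dense_params, output_params, total)
```

Almost all of the model's capacity sits in the embedding table; the two dense layers together account for fewer than 300 parameters.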

Train on 15000 samples, validate on 10000 samples

Epoch 1/40

2018-07-26 16:23:47.699236: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2018-07-26 16:23:47.754868: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:897] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2018-07-26 16:23:47.755179: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1392] Found device 0 with properties:

name: GeForce GTX 1050 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.392

pciBusID: 0000:01:00.0

totalMemory: 3.94GiB freeMemory: 2.92GiB

2018-07-26 16:23:47.755192: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1471] Adding visible gpu devices: 0

2018-07-26 16:23:47.927017: I tensorflow/core/common_runtime/gpu/gpu_device.cc:952] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-07-26 16:23:47.927050: I tensorflow/core/common_runtime/gpu/gpu_device.cc:958] 0

2018-07-26 16:23:47.927060: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0: N

2018-07-26 16:23:47.927246: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1084] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 2635 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1050 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)

15000/15000 [==============================] - 1s 57us/step - loss: 0.6918 - acc: 0.5469 - val_loss: 0.6910 - val_acc: 0.5332

Epoch 2/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.6896 - acc: 0.5797 - val_loss: 0.6885 - val_acc: 0.6327

Epoch 3/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.6869 - acc: 0.6238 - val_loss: 0.6859 - val_acc: 0.6377

Epoch 4/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.6829 - acc: 0.6443 - val_loss: 0.6821 - val_acc: 0.5562

Epoch 5/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.6767 - acc: 0.7030 - val_loss: 0.6741 - val_acc: 0.7263

Epoch 6/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.6678 - acc: 0.7368 - val_loss: 0.6643 - val_acc: 0.7128

Epoch 7/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.6551 - acc: 0.7505 - val_loss: 0.6508 - val_acc: 0.7452

Epoch 8/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.6381 - acc: 0.7613 - val_loss: 0.6337 - val_acc: 0.7558

Epoch 9/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.6176 - acc: 0.7673 - val_loss: 0.6128 - val_acc: 0.7574

Epoch 10/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.5929 - acc: 0.7845 - val_loss: 0.5891 - val_acc: 0.7712

Epoch 11/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.5659 - acc: 0.7891 - val_loss: 0.5666 - val_acc: 0.7760

Epoch 12/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.5382 - acc: 0.8040 - val_loss: 0.5381 - val_acc: 0.7920

Epoch 13/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.5087 - acc: 0.8211 - val_loss: 0.5133 - val_acc: 0.8060

Epoch 14/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.4810 - acc: 0.8326 - val_loss: 0.4889 - val_acc: 0.8153

Epoch 15/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.4544 - acc: 0.8431 - val_loss: 0.4655 - val_acc: 0.8200

Epoch 16/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.4299 - acc: 0.8486 - val_loss: 0.4442 - val_acc: 0.8329

Epoch 17/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.4061 - acc: 0.8603 - val_loss: 0.4252 - val_acc: 0.8394

Epoch 18/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.3851 - acc: 0.8675 - val_loss: 0.4080 - val_acc: 0.8444

Epoch 19/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.3664 - acc: 0.8734 - val_loss: 0.3931 - val_acc: 0.8474

Epoch 20/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.3494 - acc: 0.8794 - val_loss: 0.3799 - val_acc: 0.8534

Epoch 21/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.3341 - acc: 0.8845 - val_loss: 0.3684 - val_acc: 0.8582

Epoch 22/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.3203 - acc: 0.8880 - val_loss: 0.3584 - val_acc: 0.8624

Epoch 23/40

15000/15000 [==============================] - 0s 18us/step - loss: 0.3080 - acc: 0.8917 - val_loss: 0.3498 - val_acc: 0.8647

Epoch 24/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2963 - acc: 0.8957 - val_loss: 0.3418 - val_acc: 0.8682

Epoch 25/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2859 - acc: 0.9005 - val_loss: 0.3349 - val_acc: 0.8688

Epoch 26/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2762 - acc: 0.9036 - val_loss: 0.3292 - val_acc: 0.8728

Epoch 27/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2676 - acc: 0.9045 - val_loss: 0.3238 - val_acc: 0.8722

Epoch 28/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2588 - acc: 0.9087 - val_loss: 0.3185 - val_acc: 0.8740

Epoch 29/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2512 - acc: 0.9119 - val_loss: 0.3147 - val_acc: 0.8757

Epoch 30/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2447 - acc: 0.9129 - val_loss: 0.3106 - val_acc: 0.8755

Epoch 31/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2369 - acc: 0.9167 - val_loss: 0.3075 - val_acc: 0.8777

Epoch 32/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2310 - acc: 0.9175 - val_loss: 0.3045 - val_acc: 0.8779

Epoch 33/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2241 - acc: 0.9216 - val_loss: 0.3018 - val_acc: 0.8793

Epoch 34/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.2184 - acc: 0.9229 - val_loss: 0.2999 - val_acc: 0.8786

Epoch 35/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.2133 - acc: 0.9237 - val_loss: 0.2973 - val_acc: 0.8820

Epoch 36/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.2073 - acc: 0.9269 - val_loss: 0.2956 - val_acc: 0.8808

Epoch 37/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.2022 - acc: 0.9286 - val_loss: 0.2940 - val_acc: 0.8804

Epoch 38/40

15000/15000 [==============================] - 0s 20us/step - loss: 0.1974 - acc: 0.9303 - val_loss: 0.2924 - val_acc: 0.8811

Epoch 39/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.1923 - acc: 0.9327 - val_loss: 0.2910 - val_acc: 0.8837

Epoch 40/40

15000/15000 [==============================] - 0s 19us/step - loss: 0.1878 - acc: 0.9338 - val_loss: 0.2898 - val_acc: 0.8836

25000/25000 [==============================] - 0s 20us/step

[0.3051770512676239, 0.87472]
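The two numbers returned by `model.evaluate` are the binary cross-entropy loss and the accuracy on the 25,000 test reviews. As a rough guide to reading the loss values in the log, the sketch below computes binary cross-entropy in plain Python. The helper name `binary_crossentropy` and the sample predictions are illustrative and not taken from the run. A model that always predicts 0.5 scores ln 2 ≈ 0.693, which is about where training starts in epoch 1 (0.6918).

```python
import math


def binary_crossentropy(labels, probs):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    losses = [-(y * math.log(p) + (1 - y) * math.log(1 - p))
              for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)


labels = [1, 0, 1, 0]
# Predicting 0.5 everywhere carries no information about the label
uninformed = binary_crossentropy(labels, [0.5, 0.5, 0.5, 0.5])
# Predictions that lean the right way give a lower loss
confident = binary_crossentropy(labels, [0.9, 0.1, 0.8, 0.2])
print(round(uninformed, 4), round(confident, 4))
```

Read this way, the final test loss of about 0.305 shows the model is well past the uninformed baseline, while the gap between training accuracy (0.9338) and validation accuracy (0.8836) at epoch 40 suggests some overfitting.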