Python Data Analysis (Analyzing Text Data and Social Media)

1. Installing NLTK

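```shell
pip install nltk
```

The installation is not finished yet. We still need to download the NLTK corpora, which are very large (about 1.8 GB). They can be downloaded directly by running the following code: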

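```python
import nltk

# Opens the interactive downloader; a single package can be fetched with e.g. nltk.download('stopwords')
nltk.download()
```

2. Filtering Out Stopwords, Names, and Numbers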

In text analysis we often need to remove stopwords. Stopwords are words that are extremely common but carry little information, such as "the", "of", or "and".
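The idea can be shown without downloading any corpus. Below is a minimal sketch in plain Python. The tiny `STOPWORDS` set is a hypothetical stand-in for NLTK's much longer list. Like the NLTK code in this section, the sketch lowercases each token before checking it against a set, and a set gives fast membership tests.

```python
# Hypothetical, hand-picked stopword set for illustration only;
# NLTK's stopwords corpus contains far more entries.
STOPWORDS = {"the", "by", "of", "and", "a", "is"}

def remove_stopwords(tokens, stopwords=STOPWORDS):
    """Keep tokens whose lowercase form is not in the stopword set."""
    return [t for t in tokens if t.lower() not in stopwords]

tokens = ["Paradise", "Lost", "by", "John", "Milton"]
print(remove_stopwords(tokens))  # ['Paradise', 'Lost', 'John', 'Milton']
```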

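Code:

```python
import nltk

# Load the French stopword list into a set for fast membership tests
sw = set(nltk.corpus.stopwords.words('french'))
print("Stop words", list(sw)[:7])  # set order is arbitrary, so the 7 words shown may vary
```

Output (recorded under Python 2, which is why the strings carry a u prefix):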

Stop words [u'e\xfbtes', u'\xeates', u'aient', u'auraient', u'aurions', u'auras', u'serait']

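NLTK also provides a Gutenberg corpus. Project Gutenberg is a digital library that collects books whose copyright has expired and makes them freely available online. The following code loads the Gutenberg corpus and prints some of its file names (book titles):

```python
gb = nltk.corpus.gutenberg
print("Gutenberg files", gb.fileids()[-5:])
```

Output:

Gutenberg files [u'milton-paradise.txt', u'shakespeare-caesar.txt', u'shakespeare-hamlet.txt', u'shakespeare-macbeth.txt', u'whitman-leaves.txt']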

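Code:

```python
sw = set(nltk.corpus.stopwords.words('english'))  # English stopwords for this English text
text_sent = gb.sents("milton-paradise.txt")[:2]  # take the first two sentences
print("Unfiltered:", text_sent)
for sent in text_sent:  # remove stopwords from each sentence
    filtered = [w for w in sent if w.lower() not in sw]
    print("Filtered:", filtered)
```

Output:

Filtered: [u'[', u'Paradise', u'Lost', u'John', u'Milton', u'1667', u']']

Filtered: [u'Book']

The complete script below also tags each remaining word with its part of speech. It then drops proper nouns (tag NNP) and cardinal numbers (tag CD), which removes names and numbers. Code: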

```python
import nltk

sw = set(nltk.corpus.stopwords.words('english'))
print("Stop words", list(sw)[:7])

gb = nltk.corpus.gutenberg
print("Gutenberg files", gb.fileids()[-5:])

text_sent = gb.sents("milton-paradise.txt")[:2]  # take the first two sentences
print("Unfiltered:", text_sent)

for sent in text_sent:
    # Remove stopwords
    filtered = [w for w in sent if w.lower() not in sw]
    print("Filtered:", filtered)

    # Attach a part-of-speech tag to each word (needs NLTK's tagger model)
    tagged = nltk.pos_tag(filtered)
    print("Tagged:", tagged)

    # Drop proper nouns (NNP) and cardinal numbers (CD)
    words = [word for word, tag in tagged if tag not in ('NNP', 'CD')]
    print(words)
```

Output:

Stop words [u'all', u'just', u'being', u'over', u'both', u'through', u'yourselves']

Gutenberg files [u'milton-paradise.txt', u'shakespeare-caesar.txt', u'shakespeare-hamlet.txt', u'shakespeare-macbeth.txt', u'whitman-leaves.txt']

Unfiltered: [[u'[', u'Paradise', u'Lost', u'by', u'John', u'Milton', u'1667', u']'], [u'Book', u'I']]

Filtered: [u'[', u'Paradise', u'Lost', u'John', u'Milton', u'1667', u']']

Tagged: [(u'[', 'JJ'), (u'Paradise', 'NNP'), (u'Lost', 'NNP'), (u'John', 'NNP'), (u'Milton', 'NNP'), (u'1667', 'CD'), (u']', 'NN')]

[u'[', u']']

Filtered: [u'Book']

Tagged: [(u'Book', 'NN')]

[u'Book']

3. The Bag-of-Words Model

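The bag-of-words model treats a document as a collection of the words it contains and ignores word order entirely. For each word in a document we count how many times it occurs (the word count). These counts can then be used for statistical analyses such as spam detection.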

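From the counts of all words we can build a feature vector for each document. If a word occurs somewhere in the corpus but not in a particular document, the value of that feature for the document is 0. NLTK has no utility for creating such feature vectors. The CountVectorizer class from the Python machine learning library scikit-learn creates them easily.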
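To see what such a feature vector contains, here is a minimal plain-Python sketch of the bag-of-words idea. It assumes simple whitespace tokenization, which is simpler than CountVectorizer's regex tokenizer, and uses two made-up documents. Each column corresponds to one vocabulary word. A word missing from a document gets 0.

```python
from collections import Counter

def bag_of_words(docs):
    """Build count vectors over a shared vocabulary (sorted for a stable column order)."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenization
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Words in the vocabulary but absent from this document get 0
        vectors.append([counts.get(word, 0) for word in vocabulary])
    return vocabulary, vectors

vocab, vecs = bag_of_words(["the witch and the king", "the king is dead"])
print(vocab)  # ['and', 'dead', 'is', 'king', 'the', 'witch']
print(vecs)   # [[1, 0, 0, 1, 2, 1], [0, 1, 1, 1, 1, 0]]
```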

First install scikit-learn:

```shell
pip install scikit-learn
```

Then we can load the documents, remove stopwords, and create the vectors:

```python
import nltk
from sklearn.feature_extraction.text import CountVectorizer

# Load two documents
gb = nltk.corpus.gutenberg
hamlet = gb.raw("shakespeare-hamlet.txt")
macbeth = gb.raw("shakespeare-macbeth.txt")

# Remove English stopwords and build one count vector per document
cv = CountVectorizer(stop_words="english")
print("Feature Vector:", cv.fit_transform([hamlet, macbeth]).toarray())
```

Output:

Feature Vector: [[ 1 0 1 ..., 14 0 1]
 [ 0 1 0 ..., 1 1 0]]

4. Word Frequency Analysis

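NLTK's FreqDist class wraps words in a dictionary-like object and counts how many times each word occurs in a given word list. Below we load the text of Shakespeare's Julius Caesar from Project Gutenberg. Code: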

```python
import string
import nltk

gb = nltk.corpus.gutenberg
sw = set(nltk.corpus.stopwords.words('english'))
words = gb.words("shakespeare-caesar.txt")  # load the document
punctuation = set(string.punctuation)  # punctuation characters to remove

filtered = [w.lower() for w in words
            if w.lower() not in sw and w.lower() not in punctuation]

fd = nltk.FreqDist(filtered)  # word frequency counts
# In NLTK 3, FreqDist is a Counter: keys() are NOT sorted by frequency
# (fd.most_common(5) returns the five most frequent words)
print("Words:", list(fd.keys())[:5])
print("Counts:", list(fd.values())[:5])
print("Max:", fd.max())
print("Count", fd['pardon'])

# Bigrams: count two-word sequences (nltk.trigrams does the same for three-word sequences)
fd = nltk.FreqDist(nltk.bigrams(filtered))
print("Bigrams:", list(fd.keys())[:5])
print("Counts:", list(fd.values())[:5])
print("Bigram Max:", fd.max())
print("Bigram Count", fd[('decay', 'vseth')])
```

Output:

Words: [u'fawn', u'writings', u'legacies', u'pardon', u'hats']

Counts: [1, 1, 1, 10, 1]

Max: caesar

Count 10

Bigrams: [(u'bru', u'must'), (u'bru', u'patient'), (u'angry', u'flood'), (u'decay', u'vseth'), (u'cato', u'braue')]

Counts: [1, 1, 1, 1, 1]

Bigram Max: (u'let', u'vs')

Bigram Count 1

5. Naive Bayes Classification

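Naive Bayes classification is a common machine learning algorithm that is often used to study text documents. It is a probabilistic algorithm based on Bayes' theorem from probability theory and statistics. It is "naive" because it assumes that the features are independent of each other given the class.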
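The mechanics can be seen in a from-scratch sketch of a categorical naive Bayes classifier with add-one (Laplace) smoothing. It is not NLTK's implementation; NLTK's NaiveBayesClassifier applies its own smoothing estimator, so its probabilities differ in detail. The toy training words and labels are invented for illustration. As in the NLTK example in this section, the only feature is word length, and the label says whether the word is a stopword.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(samples):
    """Count labels and (feature, value) pairs per label from (features, label) samples."""
    label_counts = Counter(label for _, label in samples)
    value_counts = defaultdict(Counter)  # label -> Counter of (name, value)
    seen_values = defaultdict(set)       # name -> values seen in training
    for features, label in samples:
        for name, value in features.items():
            value_counts[label][(name, value)] += 1
            seen_values[name].add(value)
    return label_counts, value_counts, seen_values

def classify(model, features):
    """Return the label maximizing log P(label) + sum of log P(value | label)."""
    label_counts, value_counts, seen_values = model
    total = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior
        for name, value in features.items():
            # Add-one smoothing; the extra +1 reserves mass for a value never seen in training
            hits = value_counts[label][(name, value)]
            score += math.log((hits + 1) / (count + len(seen_values[name]) + 1))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Toy data (hypothetical): is a word a stopword, judged only by its length?
labels = {"the": True, "and": True, "was": True, "of": True, "to": True, "in": True,
          "behold": False, "brutus": False, "caesar": False, "noble": False, "house": False}
samples = [({"len": len(w)}, is_stop) for w, is_stop in labels.items()]
model = train_naive_bayes(samples)
print(classify(model, {"len": 6}))  # False
print(classify(model, {"len": 3}))  # True
```

Summing logarithms instead of multiplying probabilities avoids numeric underflow when there are many features.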

Code:

```python
import random
import string
import nltk

gb = nltk.corpus.gutenberg
sw = set(nltk.corpus.stopwords.words('english'))
punctuation = set(string.punctuation)  # punctuation characters

def word_features(word):
    # The only feature: the length of the word
    return {'len': len(word)}

def is_stop_word(word):
    # Label: is the word a stopword or a punctuation mark?
    return word in sw or word in punctuation

words = gb.words("shakespeare-caesar.txt")  # load the document
labeled_words = [(word.lower(), is_stop_word(word.lower())) for word in words]
random.seed(42)
random.shuffle(labeled_words)  # shuffle the (word, label) tuples
print(labeled_words[:5])

featuresets = [(word_features(w), label) for (w, label) in labeled_words]
cutoff = int(.9 * len(featuresets))
train_set, test_set = featuresets[:cutoff], featuresets[cutoff:]  # 90% train, 10% test

classifier = nltk.NaiveBayesClassifier.train(train_set)
print("'behold' class:", classifier.classify(word_features('behold')))
print("'the' class:", classifier.classify(word_features('the')))
print("Accuracy:", nltk.classify.accuracy(classifier, test_set))  # model accuracy
classifier.show_most_informative_features(5)  # prints the most influential features itself
```

Output:

[(u'was', True), (u'greeke', False), (u'cause', False), (u'but', True), (u'house', False)]

'behold' class: False

'the' class: True

Accuracy: 0.857585139319

Most Informative Features

len = 7 False : True = 65.7 : 1.0

len = 1 True : False = 52.0 : 1.0

len = 6 False : True = 51.4 : 1.0

len = 5 False : True = 10.9 : 1.0

len = 2 True : False = 10.4 : 1.0

6. Sentiment Analysis

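Social media, product review sites, and forums have grown rapidly. As a result, opinion mining (also called sentiment analysis), which extracts opinions automatically, has become a hot new research area. Usually we want to know whether an opinion is positive, neutral, or negative. We have already met this kind of classification, so plenty of classification algorithms are available. Another approach is to compile a word list semi-automatically, with some manual editing, and assign each word a sentiment score. For example, "good" might score 5 and "bad" might score -5. With such a table, we can score every word in a text document and add the scores up to get an overall sentiment score. The number of categories can also be larger than three, as in a five-star rating scheme.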
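Here is a minimal sketch of the word-list approach. Only the scores for "good" (5) and "bad" (-5) come from the text above; the other lexicon entries are made up. Whitespace tokenization and a zero-width neutral band are also assumptions.

```python
# Hypothetical sentiment lexicon: only "good" and "bad" follow the text's example
LEXICON = {"good": 5, "great": 4, "bad": -5, "boring": -3}

def sentiment_score(text, lexicon=LEXICON):
    """Sum the scores of all words found in the lexicon; unknown words count as 0."""
    return sum(lexicon.get(word, 0) for word in text.lower().split())

def polarity(score, neutral_band=0):
    """Map a total score to positive / neutral / negative."""
    if score > neutral_band:
        return "positive"
    if score < -neutral_band:
        return "negative"
    return "neutral"

review = "good acting but a boring and bad plot"
score = sentiment_score(review)
print(score, polarity(score))  # -3 negative
```

A five-star scheme could be obtained by cutting the score range into five bands instead of three.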

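We will apply naive Bayes classification to NLTK's movie review corpus to classify each review as positive or negative. First, load the movie review corpus and filter out stopwords and punctuation. We will not explain these steps again because they were covered earlier. More refined filtering schemes are possible, but filtering too aggressively can hurt accuracy. Code: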

```python
import random
import string
from nltk.corpus import movie_reviews, stopwords
from nltk import FreqDist, NaiveBayesClassifier
from nltk.classify import accuracy

# One (word list, category) pair per review, using the corpus categories
labeled_docs = [(list(movie_reviews.words(fid)), cat)
                for cat in movie_reviews.categories()
                for fid in movie_reviews.fileids(cat)]
random.seed(42)
random.shuffle(labeled_docs)

review_words = movie_reviews.words()
print("# Review Words:", len(review_words))

sw = set(stopwords.words('english'))
punctuation = set(string.punctuation)

def is_stop_word(word):
    return word in sw or word in punctuation

filtered = [w.lower() for w in review_words if not is_stop_word(w.lower())]
print("#After filter:", len(filtered))  # number of words left after filtering

words = FreqDist(filtered)  # word frequency counts
N = int(0.05 * len(words))  # use 5% of the vocabulary as features
# NLTK 3: keys() follow insertion order, not frequency;
# [w for w, _ in words.most_common(N)] would pick the N most frequent words
word_features = list(words.keys())[:N]

def doc_features(doc):
    # Feature: how often each selected word occurs in this review
    doc_words = FreqDist(w for w in doc if not is_stop_word(w))
    return {'count (%s)' % word: doc_words.get(word, 0) for word in word_features}

featuresets = [(doc_features(d), c) for (d, c) in labeled_docs]
train_set, test_set = featuresets[200:], featuresets[:200]  # hold out 200 reviews for testing
classifier = NaiveBayesClassifier.train(train_set)
print("Accuracy", accuracy(classifier, test_set))
classifier.show_most_informative_features()
```

Output:

# Review Words: 1583820

#After filter: 710579

Accuracy 0.695

Most Informative Features

count (nature) = 2 pos : neg = 8.5 : 1.0

count (ugh) = 1 neg : pos = 8.2 : 1.0

count (sans) = 1 neg : pos = 8.2 : 1.0

count (effortlessly) = 1 pos : neg = 6.3 : 1.0

count (mediocrity) = 1 neg : pos = 6.2 : 1.0

count (dismissed) = 1 pos : neg = 5.8 : 1.0

count (wits) = 1 pos : neg = 5.8 : 1.0

count (also) = 6 pos : neg = 5.8 : 1.0

count (want) = 3 neg : pos = 5.5 : 1.0

count (caan) = 1 neg : pos = 5.5 : 1.0

7. Creating a Word Cloud

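You can create a word cloud online on the Wordle website: http://www.wordle.net/advanced. The site requires the Java plugin, and Safari on a Mac works best. To generate a word cloud with Wordle, you supply a list of words and their weights in the following format:

word1 : weight
word2 : weight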

Building on the earlier code, we generate the word frequencies:

#coding:utf8

import random

from nltk.corpus import movie_reviews

from nltk.corpus import stopwords

from nltk import FreqDist

from nltk import NaiveBayesClassifier

from nltk.classify import accuracy

import string

sw=set(stopwords.words('english'))

punctuation=set(string.punctuation) #去除标点符号

def isStopWord(word): #判断是否是停用词

return word in sw or word in punctuation

review_words=movie_reviews.words()

filtered=[w.lower() for w in review_words if not isStopWord(w.lower())]

#print filtered

words=FreqDist(filtered) #词频统计

N=int(0.01*len(words.keys()))

tags=words.keys()[:N]

for tag in tags:

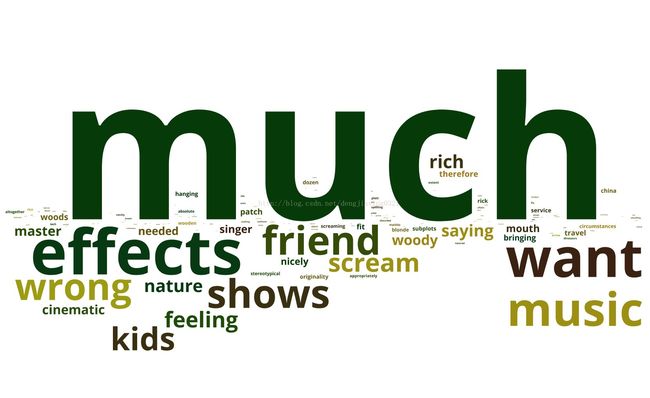

print tag,":",words[tag]将上面运行结果复制粘贴到wordle页面,就可以得到如下词云图:

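A closer look shows that this word cloud is far from perfect and leaves plenty of room for improvement. We can improve it as follows:

Further filtering: remove words that contain digits and words that are names, using NLTK's names corpus. In addition, ignore words that occur only once, since they are unlikely to carry useful information.

Use a better metric: term frequency-inverse document frequency (TF-IDF).

The TF-IDF metric ranks the words of a corpus and weights them accordingly. A word's weight grows with the number of times it occurs in a particular document (the term frequency). It shrinks as the number of documents in the corpus containing the word grows (the inverse document frequency, usually the logarithm of the total number of documents divided by the number of documents containing the word). The TF-IDF value is the product of the term frequency and the inverse document frequency. If you implement TF-IDF yourself, you also have to handle the logarithm. Fortunately, scikit-learn already provides a TfidfVectorizer class that implements TF-IDF efficiently.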

Code:


#coding:utf8

import random

from nltk.corpus import movie_reviews

from nltk.corpus import stopwords

from nltk.corpus import names

from nltk import FreqDist

from nltk import NaiveBayesClassifier

from nltk.classify import accuracy

from sklearn.feature_extraction.text import TfidfVectorizer

import itertools

import pandas as pd

import numpy as np

import string

sw=set(stopwords.words('english'))

punctuation=set(string.punctuation) #去除标点符号

all_names=set([name.lower() for name in names.words()]) #得到所有名字信息

def isStopWord(word): #判断是否是停用词 isalpha函数判断字符是否都是由字母组成

return (word in sw or word in punctuation or word in all_names or not word.isalpha())

review_words=movie_reviews.words()

filtered=[w.lower() for w in review_words if not isStopWord(w.lower())]

#print filtered

words=FreqDist(filtered) #词频统计

texts=[]

for fid in movie_reviews.fileids():

#print fid fid表示文件

texts.append(" ".join([w.lower() for w in movie_reviews.words(fid) if not isStopWord(w.lower()) and words[w.lower()]>1]))

vectorizer=TfidfVectorizer(stop_words='english')

matrix=vectorizer.fit_transform(texts) #计算TD-IDF

#print matrix

sums=np.array(matrix.sum(axis=0)).ravel() #每个单词的TF-IDF值求和,并将结果存在numpy数组

ranks=[]

#itertools.izip把不同的迭代器的元素聚合到一个迭代器中。类似zip()方法,但是返回的是一个迭代器而不是一个list

for word, val in itertools.izip(vectorizer.get_feature_names(),sums):

ranks.append((word,val))

df=pd.DataFrame(ranks,columns=["term","tfidf"])

df=df.sort_values(['tfidf'])

#print df.head()

N=int(0.01*len(df)) #得到排名靠前的1%

df=df.tail(N)

for term,tfidf in itertools.izip(df["term"].values,df["tfidf"].values):

print term,":",tfidf

# tags=words.keys()[:N]

# for tag in tags:

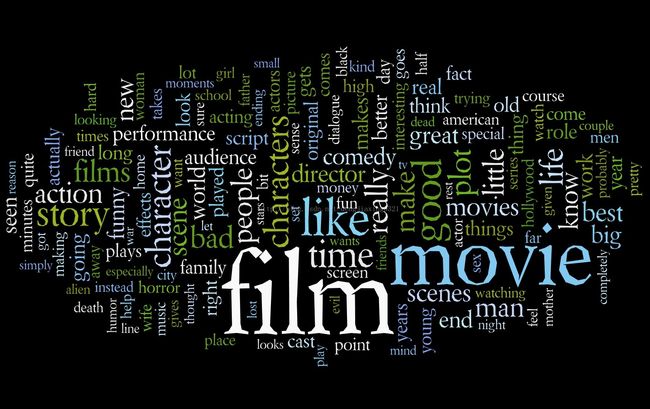

# print tag,":",words[tag]同样放到wordle中生成词云图:

8. Social Network Analysis

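Social network analysis applies network theory to the study of social relationships. The nodes of the network represent the participants, and the edges between nodes represent the relationships between participants. This section shows how to analyze simple graphs with the Python library NetworkX and how to visualize them with matplotlib.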
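Before turning to NetworkX, a plain-Python sketch shows what "nodes and edges" means in practice. It stores a small, made-up undirected friendship network as an adjacency map. From that it computes each node's degree, the number of relationships it has, and the degree distribution. For a NetworkX Graph built from the same edges, `G.degree` gives the same numbers.

```python
from collections import Counter, defaultdict

# A small, made-up friendship network: each pair is an undirected edge
edges = [("Ann", "Bob"), ("Ann", "Cid"), ("Bob", "Cid"), ("Cid", "Dee")]

adjacency = defaultdict(set)
for a, b in edges:
    adjacency[a].add(b)  # undirected: record the tie in both directions
    adjacency[b].add(a)

degree = {node: len(neighbors) for node, neighbors in adjacency.items()}
print(degree)  # {'Ann': 2, 'Bob': 2, 'Cid': 3, 'Dee': 1}

# Degree distribution: how many nodes have each degree (what a degree histogram shows)
print(sorted(Counter(degree.values()).items()))  # [(1, 1), (2, 2), (3, 1)]
```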

Install NetworkX (plus matplotlib, which the plots below use):

```shell
pip install networkx matplotlib
```

networkx提供许多示例图,可以列出,具体代码如下:

#coding:utf8

import networkx as nx

#networkx提供许多示例图,可以列出

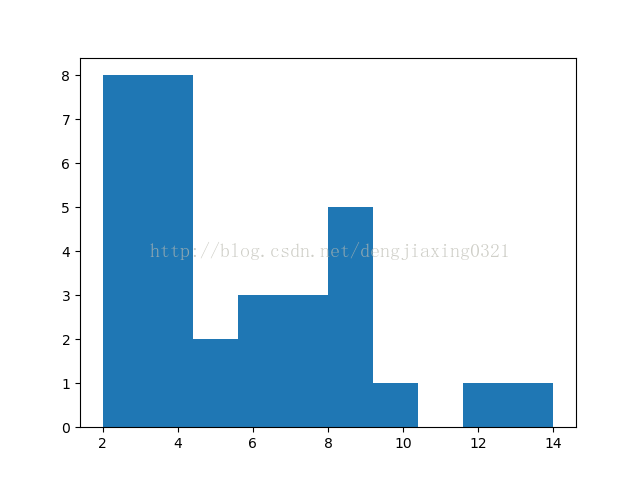

print [s for s in dir(nx) if s.endswith('graph')]['LCF_graph', 'adjacency_graph', 'barabasi_albert_graph', 'barbell_graph', 'binomial_graph', 'bull_graph', 'caveman_graph', 'chordal_cycle_graph', 'chvatal_graph', 'circulant_graph', 'circular_ladder_graph', 'complete_bipartite_graph', 'complete_graph', 'complete_multipartite_graph', 'connected_caveman_graph', 'connected_watts_strogatz_graph', 'cubical_graph', 'cycle_graph', 'cytoscape_graph', 'davis_southern_women_graph', 'dense_gnm_random_graph', 'desargues_graph', 'diamond_graph', 'digraph', 'directed_havel_hakimi_graph', 'dodecahedral_graph', 'dorogovtsev_goltsev_mendes_graph', 'duplication_divergence_graph', 'edge_subgraph', 'ego_graph', 'empty_graph', 'erdos_renyi_graph', 'expected_degree_graph', 'extended_barabasi_albert_graph', 'fast_gnp_random_graph', 'florentine_families_graph', 'frucht_graph', 'gaussian_random_partition_graph', 'general_random_intersection_graph', 'geographical_threshold_graph', 'gn_graph', 'gnc_graph', 'gnm_random_graph', 'gnp_random_graph', 'gnr_graph', 'graph', 'grid_2d_graph', 'grid_graph', 'havel_hakimi_graph', 'heawood_graph', 'hexagonal_lattice_graph', 'hoffman_singleton_graph', 'house_graph', 'house_x_graph', 'hypercube_graph', 'icosahedral_graph', 'induced_subgraph', 'is_directed_acyclic_graph', 'jit_graph', 'joint_degree_graph', 'json_graph', 'k_random_intersection_graph', 'karate_club_graph', 'kl_connected_subgraph', 'krackhardt_kite_graph', 'ladder_graph', 'line_graph', 'lollipop_graph', 'make_max_clique_graph', 'make_small_graph', 'margulis_gabber_galil_graph', 'moebius_kantor_graph', 'multidigraph', 'multigraph', 'navigable_small_world_graph', 'newman_watts_strogatz_graph', 'node_link_graph', 'null_graph', 'nx_agraph', 'octahedral_graph', 'pappus_graph', 'partial_duplication_graph', 'path_graph', 'petersen_graph', 'planted_partition_graph', 'powerlaw_cluster_graph', 'projected_graph', 'quotient_graph', 'random_clustered_graph', 'random_degree_sequence_graph', 
'random_geometric_graph', 'random_k_out_graph', 'random_kernel_graph', 'random_partition_graph', 'random_regular_graph', 'random_shell_graph', 'relabel_gexf_graph', 'relaxed_caveman_graph', 'scale_free_graph', 'sedgewick_maze_graph', 'star_graph', 'stochastic_graph', 'subgraph', 'tetrahedral_graph', 'to_networkx_graph', 'tree_graph', 'triad_graph', 'triangular_lattice_graph', 'trivial_graph', 'truncated_cube_graph', 'truncated_tetrahedron_graph', 'turan_graph', 'tutte_graph', 'uniform_random_intersection_graph', 'watts_strogatz_graph', 'waxman_graph', 'wheel_graph', 'windmill_graph']导入davis_southern_women_graph,并绘制各个节点的度的柱状图,代码如下:

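```python
import matplotlib.pyplot as plt
import networkx as nx

G = nx.davis_southern_women_graph()

plt.figure(1)
degrees = dict(nx.degree(G))  # node -> degree
plt.hist(list(degrees.values()))  # histogram of node degrees

plt.figure(2)
pos = nx.spring_layout(G)  # compute node positions once
nx.draw(G, pos, node_size=9)  # draw nodes and edges at those positions
nx.draw_networkx_labels(G, pos)  # labels use the same positions, so they line up
plt.show()
```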