Recognizing handwritten letters with a two-layer convolutional network (based on TensorFlow)

For comparison, see this article: https://blog.csdn.net/fanzonghao/article/details/81489049. The dataset comes from the same code and link used there.

# This script uses the TensorFlow 1.x API (tf.placeholder, tf.Session, tf.contrib).
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import read_pickle_dataset

# Load the train / validation / test splits from the pickled dataset.
train_dataset, train_label, valid_dataset, valid_label, test_dataset, test_label = read_pickle_dataset.pickle_dataset()
print('Training:', train_dataset.shape, train_label.shape)
print('Validation:', valid_dataset.shape, valid_label.shape)
print('Testing:', test_dataset.shape, test_label.shape)
print(valid_label[0])

"""

Reshape the images to (N, 28, 28, 1) and cast them to float32
"""
def reformat(dataset):
    dataset = dataset.reshape(-1, 28, 28, 1).astype(np.float32)
    return dataset
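As a quick check of what this reshape does, here is a minimal sketch on a made-up array. `fake_images` is a hypothetical stand-in for the real dataset, which is assumed here to hold 28x28 grayscale images.

```python
import numpy as np

# Hypothetical batch of 5 grayscale images, 28x28 pixels each.
fake_images = np.zeros((5, 28, 28), dtype=np.uint8)

# -1 lets NumPy infer the number of images; the trailing 1 is the channel axis
# that tf.nn.conv2d expects for single-channel input.
reshaped = fake_images.reshape(-1, 28, 28, 1).astype(np.float32)
print(reshaped.shape, reshaped.dtype)  # (5, 28, 28, 1) float32
```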

"""

Compute the accuracy
"""
def accuracy(predictions, labels):
    # Fraction of samples whose predicted class matches the one-hot label.
    acc = np.sum(np.argmax(predictions, 1) == np.argmax(labels, 1)) / labels.shape[0]
    return acc
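To make the accuracy computation concrete, here is a small NumPy check on hand-made data. The arrays `toy_predictions` and `toy_labels` are invented for illustration and do not come from the dataset.

```python
import numpy as np

def accuracy(predictions, labels):
    # Fraction of rows whose arg-max class matches the one-hot label.
    return np.sum(np.argmax(predictions, 1) == np.argmax(labels, 1)) / labels.shape[0]

# Hypothetical softmax outputs for 4 samples and 3 classes.
toy_predictions = np.array([[0.7, 0.2, 0.1],
                            [0.1, 0.8, 0.1],
                            [0.3, 0.3, 0.4],
                            [0.6, 0.3, 0.1]])
# One-hot labels for classes 0, 1, 2, 1.
toy_labels = np.array([[1, 0, 0],
                       [0, 1, 0],
                       [0, 0, 1],
                       [0, 1, 0]])

toy_acc = accuracy(toy_predictions, toy_labels)
print(toy_acc)  # 3 of the 4 rows match, so 0.75
```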

train_dataset = reformat(train_dataset)
valid_dataset = reformat(valid_dataset)
test_dataset = reformat(test_dataset)
print('Training:', train_dataset.shape, train_label.shape)
print('Validation:', valid_dataset.shape, valid_label.shape)
print('Testing:', test_dataset.shape, test_label.shape)

tf_valid_dataset = tf.constant(valid_dataset)
tf_test_dataset = tf.constant(test_dataset)
batch_size = 128

def creat_placeholder():
    # X holds a batch of 28x28 single-channel images; Y holds one-hot labels for 10 classes.
    X = tf.placeholder(dtype=tf.float32, shape=(None, 28, 28, 1))
    Y = tf.placeholder(dtype=tf.float32, shape=(None, 10))
    return X, Y

"""

Initialize the weights: two convolutional layers
"""
def initialize_parameters():
    # CNN weight initialization: stddev = sqrt(2 / (kernel_h * kernel_w * in_channels))
    W1 = tf.Variable(tf.truncated_normal(shape=[5, 5, 1, 16], stddev=np.sqrt(2.0 / (5 * 5 * 1))))
    b1 = tf.Variable(tf.zeros(16))
    W2 = tf.Variable(tf.truncated_normal(shape=[5, 5, 16, 16], stddev=np.sqrt(2.0 / (5 * 5 * 16))))
    b2 = tf.Variable(tf.constant(1.0, shape=[16]))
    parameters = {'W1': W1,
                  'b1': b1,
                  'W2': W2,
                  'b2': b2
                  }
    return parameters
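The `stddev` values passed to `tf.truncated_normal` have the form sqrt(2 / fan_in), which matches the scaling commonly called He initialization. Reading it that way is an assumption on my part, since the code does not name the scheme. The helper `he_stddev` below is hypothetical and only reproduces the arithmetic.

```python
import math

def he_stddev(kernel_h, kernel_w, in_channels):
    # fan_in is the number of inputs feeding each output unit of the filter.
    fan_in = kernel_h * kernel_w * in_channels
    return math.sqrt(2.0 / fan_in)

std_w1 = he_stddev(5, 5, 1)    # first layer: 5x5 kernel, 1 input channel
std_w2 = he_stddev(5, 5, 16)   # second layer: 5x5 kernel, 16 input channels
print(round(std_w1, 4), round(std_w2, 4))  # 0.2828 0.0707
```

The second layer gets a smaller spread because each of its outputs sums over 16 times as many inputs.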

def forward_propagation(X, parameters):
    W1 = parameters['W1']
    b1 = parameters['b1']
    W2 = parameters['W2']
    b2 = parameters['b2']

    # First block: conv -> ReLU -> 2x2 max pool
    Z1 = tf.nn.conv2d(X, W1, strides=[1, 1, 1, 1], padding='SAME')
    print('Z1.shape', Z1.shape)   # (?, 28, 28, 16)
    A1 = tf.nn.relu(Z1 + b1)
    P1 = tf.nn.max_pool(A1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    print(P1.shape)               # (?, 14, 14, 16)

    # Second block: conv -> ReLU -> 2x2 max pool
    Z2 = tf.nn.conv2d(P1, W2, strides=[1, 1, 1, 1], padding='SAME')
    print(Z2.shape)               # (?, 14, 14, 16)
    A2 = tf.nn.relu(Z2 + b2)
    P2 = tf.nn.max_pool(A2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    print(P2.shape)               # (?, 7, 7, 16)

    # Flatten and map to 10 logits (softmax is applied later, in the loss).
    P_flatten = tf.contrib.layers.flatten(P2)
    output = tf.contrib.layers.fully_connected(P_flatten, 10, activation_fn=None)
    return output
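The shapes printed by `forward_propagation` can be worked out by hand. With `padding='SAME'`, the output height and width are ceil(input / stride), regardless of kernel size. The sketch below traces the spatial size through both blocks; `same_out` is a hypothetical helper name.

```python
import math

def same_out(size, stride):
    # Output size along one spatial axis for padding='SAME'.
    return math.ceil(size / stride)

h = 28
h = same_out(h, 1)   # conv1, stride 1 -> 28
h = same_out(h, 2)   # pool1, stride 2 -> 14
h = same_out(h, 1)   # conv2, stride 1 -> 14
h = same_out(h, 2)   # pool2, stride 2 -> 7

# 16 output channels after the second conv layer.
flat_features = h * h * 16
print(h, flat_features)  # 7 784
```

So the fully connected layer receives 784 features per image and maps them to 10 logits.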

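def compute_cost(Y, Y_pred):
    # Compute the loss: mean softmax cross-entropy over the batch.
    # Labels and logits have the same shape, e.g. (10000, 10) and (10000, 10).
    loss = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits_v2(labels=Y, logits=Y_pred))
    return loss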
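For readers who want to see what the loss computes, here is a NumPy sketch of softmax cross-entropy on raw logits. It is written for illustration and is not TensorFlow's implementation. `toy_logits` and `toy_labels` are invented values.

```python
import numpy as np

def softmax_cross_entropy(labels, logits):
    # Subtract the row-wise max first so that exp() cannot overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Per-example loss: negative log-probability of the true class.
    return -(labels * log_probs).sum(axis=1)

toy_logits = np.array([[2.0, 1.0, 0.1],
                       [0.0, 0.0, 0.0]])
toy_labels = np.array([[1.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0]])

per_example = softmax_cross_entropy(toy_labels, toy_logits)
mean_loss = per_example.mean()  # the batch mean, as tf.reduce_mean does
print(per_example, mean_loss)
```

The second row has equal logits for all three classes, so its loss is ln(3), the loss of a uniform guess.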

def model():
    X, Y = creat_placeholder()
    parameters = initialize_parameters()
    Y_pred = forward_propagation(X, parameters)
    loss = compute_cost(Y, Y_pred)
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.05).minimize(loss)

    # Prediction
    train_prediction = tf.nn.softmax(Y_pred)
    init = tf.global_variables_initializer()
    correct_prediction = tf.equal(tf.argmax(Y_pred, 1), tf.argmax(Y, 1))
    accuracy_op = tf.reduce_mean(tf.cast(correct_prediction, 'float'))

    with tf.Session() as sess:
        print('initialize')
        sess.run(init)
        costs = []
        for step in range(1001):
            # Slide a window of batch_size samples over the training set, wrapping around.
            offset = (step * batch_size) % (train_label.shape[0] - batch_size)
            batch_data = train_dataset[offset:(offset + batch_size), :, :, :]
            #batch_data = train_dataset[offset:(offset + batch_size), :]
            batch_label = train_label[offset:(offset + batch_size), :]
            feed = {X: batch_data, Y: batch_label}
            _, train_predictions, cost = sess.run([optimizer, train_prediction, loss], feed_dict=feed)
            if step % 100 == 0:
                # Every 100 steps: record the loss and report train / validation accuracy.
                costs.append(cost)
                print('loss ={},at step {}'.format(cost, step))
                print('train accuracy={}'.format(accuracy(train_predictions, batch_label)))
                feed = {X: valid_dataset, Y: valid_label}
                valid_accuracy = sess.run(accuracy_op, feed_dict=feed)
                print('valid accuracy={}'.format(valid_accuracy))

        # After training: evaluate once on the test set.
        feed = {X: test_dataset, Y: test_label}
        test_accuracy = sess.run(accuracy_op, feed_dict=feed)
        print('Test accuracy={}'.format(test_accuracy))

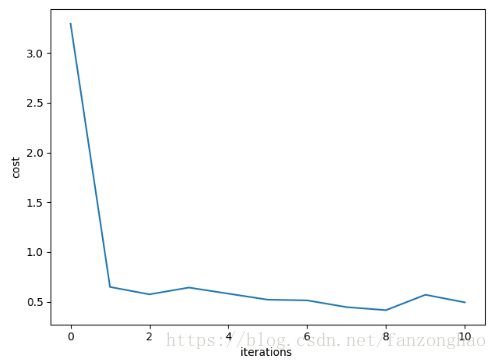

    # Plot the loss values recorded every 100 steps.
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('iterations')
    plt.savefig('ELU.jpg')
    plt.show()

if __name__ == '__main__':
    model()
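The training loop picks each mini-batch with `offset = (step * batch_size) % (train_label.shape[0] - batch_size)`. The sketch below shows how that offset wraps around. The dataset size of 1000 is a made-up number chosen to keep the output short, and `batch_offset` is a hypothetical helper.

```python
def batch_offset(step, batch_size, num_examples):
    # Same formula as in the training loop; the modulus keeps
    # offset + batch_size from running past the end of the data.
    return (step * batch_size) % (num_examples - batch_size)

num_examples = 1000   # hypothetical training-set size
batch_size = 128

offsets = [batch_offset(s, batch_size, num_examples) for s in range(8)]
print(offsets)  # [0, 128, 256, 384, 512, 640, 768, 24]
```

At step 7 the raw offset 896 exceeds 872 (that is, 1000 - 128), so it wraps to 24 and the next pass over the data starts from a shifted position.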