[Flume] Near-Real-Time Incremental Collection of MySQL Data into an Integrated HBase and Hive Table

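This article collects incremental data from MySQL in near real time into an integrated HBase and Hive table. In essence, a Hive table is created as a mapping of an HBase table, so the two tables stay in sync. HBase is suited to online, real-time data storage, while Hive is suited to offline data processing (it translates SQL statements into MapReduce jobs). Flume has no official source for collecting data from MySQL in near real time, so I first tried a custom open-source source: https://github.com/keedio/flume-ng-sql-source

However, after starting Flume, the DEBUG log reported an error on the statement select * from student limit ?,? even though my query contained no limit clause. I was stuck on this for a day, and nothing I tried worked.

Below is a source I developed myself. It is functionally similar to flume-ng-sql-source and clearly less powerful, but it meets my needs. Unlike flume-ng-sql-source, it stores the current index in a MySQL table (flume_meta).

Let's walk through the steps. Corrections are welcome.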

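First, create the student table in MySQL and insert two rows

The student table must have an incremental column; here id is set as an auto-increment primary key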

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| flume |

| hive |

| mysql |

| performance_schema |

| sys |

+--------------------+

6 rows in set (0.00 sec)

mysql> use flume;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> create table student(id int primary key auto_increment,name varchar(20),age int );

Query OK, 0 rows affected (0.36 sec)

mysql> show tables;

+-----------------+

| Tables_in_flume |

+-----------------+

| student |

+-----------------+

1 row in set (0.00 sec)

mysql> insert into student values(1,"wang",20);

Query OK, 1 row affected (0.05 sec)

mysql> insert into student values(2,"zhang",18);

Query OK, 1 row affected (0.12 sec)

mysql> select *from student;

+----+-------+------+

| id | name | age |

+----+-------+------+

| 1 | wang | 20 |

| 2 | zhang | 18 |

+----+-------+------+

2 rows in set (0.00 sec)

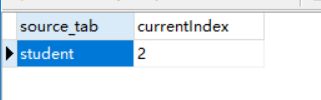
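
Why the incremental column matters can be sketched as follows. This is only an illustration, not the code of the custom source: Python's built-in sqlite3 stands in for MySQL, and the function name fetch_new_rows is hypothetical. The idea is that each poll selects only the rows whose id is greater than the last value already collected.

```python
import sqlite3

def fetch_new_rows(conn, last_id):
    """Return rows with id greater than last_id, ordered by id (hypothetical helper)."""
    cur = conn.execute(
        "SELECT id, name, age FROM student WHERE id > ? ORDER BY id", (last_id,)
    )
    return cur.fetchall()

# In-memory SQLite stands in for the MySQL `flume` database (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE student (id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT, age INTEGER)"
)
conn.executemany(
    "INSERT INTO student (id, name, age) VALUES (?, ?, ?)",
    [(1, "wang", 20), (2, "zhang", 18)],
)

first_poll = fetch_new_rows(conn, 0)          # starting value 0 picks up every row
last_id = first_poll[-1][0]                   # remember the highest id seen
second_poll = fetch_new_rows(conn, last_id)   # no rows were added since the first poll
print(first_poll, last_id, second_poll)
```

Without a monotonically increasing column such as id, there would be no simple way to tell already-collected rows from new ones.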

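Next, create a metadata table that stores the name of the collected table and its current index. source_tab must be declared as the primary key; otherwise Flume fails at runtime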

mysql> create table flume_meta (source_tab varchar(255) NOT NULL,currentIndex varchar(255) NOT NULL,PRIMARY KEY (source_tab));

Query OK, 0 rows affected (0.41 sec)
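
One plausible reason for the primary-key requirement (an assumption, since the source's code is not shown here) is that the source saves its progress with an upsert keyed on source_tab, and an upsert needs a unique key to decide between inserting and updating. The sketch below illustrates that pattern with sqlite3 standing in for MySQL; save_index and load_index are hypothetical names. In MySQL the equivalent statement would typically be INSERT ... ON DUPLICATE KEY UPDATE.

```python
import sqlite3

def save_index(conn, table, index):
    """Insert or overwrite the saved index for one source table (hypothetical helper)."""
    conn.execute(
        "INSERT OR REPLACE INTO flume_meta (source_tab, currentIndex) VALUES (?, ?)",
        (table, str(index)),
    )

def load_index(conn, table, default="0"):
    """Read the saved index, falling back to a default when no row exists yet."""
    row = conn.execute(
        "SELECT currentIndex FROM flume_meta WHERE source_tab = ?", (table,)
    ).fetchone()
    return row[0] if row else default

# In-memory SQLite stands in for MySQL (assumption); same columns as flume_meta.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE flume_meta ("
    "source_tab VARCHAR(255) NOT NULL, "
    "currentIndex VARCHAR(255) NOT NULL, "
    "PRIMARY KEY (source_tab))"
)

start = load_index(conn, "student")   # table is empty, so the default is used
save_index(conn, "student", 2)        # first save inserts a row
save_index(conn, "student", 4)        # second save overwrites the same row
row_count = conn.execute("SELECT COUNT(*) FROM flume_meta").fetchone()[0]
current = load_index(conn, "student")
print(start, row_count, current)
```

Because the key is the table name, the metadata table keeps exactly one row per collected table no matter how many polls run.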

Create the HBase table

hbase(main):001:0> list_namespace

NAMESPACE

default

hbase

2 row(s) in 0.1510 seconds

hbase(main):002:0> create_namespace 'testdb'

0 row(s) in 1.2280 seconds

hbase(main):003:0> create 'testdb:student','info'

0 row(s) in 2.4050 seconds

=> Hbase::Table - testdb:student

hbase(main):004:0> list_namespace_tables 'testdb'

TABLE

student

1 row(s) in 0.0070 seconds

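Use Flume to import the MySQL data into HBase

Put the custom source jar and the MySQL driver jar into the lib directory of the Flume installation

Create student.conf in Flume's conf directory

with the following contents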

#student.conf

#name

agent.sources = sql-source

agent.channels = ch1

agent.sinks = hbase-sink

#source

agent.sources.sql-source.type = com.soou.source.SQLSource

agent.sources.sql-source.connection.url = jdbc:mysql://192.168.1.12:3306/flume

agent.sources.sql-source.connection.user = root

agent.sources.sql-source.connection.password = root506

agent.sources.sql-source.table = student

agent.sources.sql-source.columns.to.select = *

agent.sources.sql-source.incremental.column.name = id

agent.sources.sql-source.incremental.value = 0

#Query interval in ms; the default is 10 s

agent.sources.sql-source.run.query.delay = 5000

#channel

agent.channels.ch1.type = memory

#Enable these for large data volumes

#agent.channels.ch1.capacity = 1000

#agent.channels.ch1.transactionCapacity = 100

#sink

#logger sink

#agent.sinks.hbase-sink.type = logger

agent.sinks.hbase-sink.type = org.apache.flume.sink.hbase.HBaseSink

agent.sinks.hbase-sink.table = testdb:student

agent.sinks.hbase-sink.columnFamily = info

#Serialize with RegexHbaseEventSerializer

agent.sinks.hbase-sink.serializer = org.apache.flume.sink.hbase.RegexHbaseEventSerializer

#agent.sinks.hbase-sink.serializer.regex = ^\"(.*?)\",\"(.*?)\",\"(.*?)\",$

#Split each event with a regular expression

agent.sinks.hbase-sink.serializer.regex = ^(.*?),(.*?),(.*?),$

#Column names to use under the info column family

agent.sinks.hbase-sink.serializer.colNames = id,name,age,

#Bind the source and the sink to the channel

agent.sources.sql-source.channels = ch1

agent.sinks.hbase-sink.channel = ch1

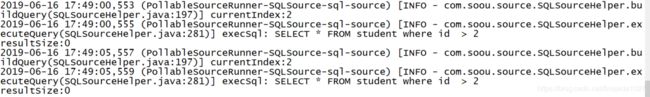
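
To see what the serializer.regex and serializer.colNames settings do together, here is a rough Python imitation of the splitting step. It is not the Flume serializer itself. It assumes the source emits each row as a comma-terminated line such as 1,wang,20, (inferred from the regex, not confirmed from the source's code), and split_event is a hypothetical helper.

```python
import re

# Same pattern as serializer.regex in student.conf.
REGEX = re.compile(r"^(.*?),(.*?),(.*?),$")

# Same value as serializer.colNames; the filter drops the empty name
# that Python's split produces for the trailing comma.
COL_NAMES = [c for c in "id,name,age,".split(",") if c]

def split_event(body):
    """Map each capture group to the column name at the same position."""
    match = REGEX.match(body)
    if match is None:
        return None  # body does not fit the pattern, so there is nothing to map
    return dict(zip(COL_NAMES, match.groups()))

cells = split_event("1,wang,20,")
unmatched = split_event("1,wang,20")  # missing trailing comma
print(cells, unmatched)
```

The number of capture groups has to equal the number of column names, so adding a column to the table means changing both settings.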

Start Flume; the console prints the relevant information

flume-ng agent --name agent --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/student.conf -Dflume.root.logger=INFO,console

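Check the status table (flume_meta), which was empty before the run

Check the HBase table; the data has been stored in HBase in the specified format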

hbase(main):051:0> scan 'testdb:student'

ROW COLUMN+CELL

1560678526500-GnZMhll4wD-0 column=info:age, timestamp=1560678529624, value=20

1560678526500-GnZMhll4wD-0 column=info:id, timestamp=1560678529624, value=1

1560678526500-GnZMhll4wD-0 column=info:name, timestamp=1560678529624, value=wang

1560678526506-GnZMhll4wD-1 column=info:age, timestamp=1560678529624, value=18

1560678526506-GnZMhll4wD-1 column=info:id, timestamp=1560678529624, value=2

1560678526506-GnZMhll4wD-1 column=info:name, timestamp=1560678529624, value=zhang

2 row(s) in 0.0310 seconds
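
The row keys in the scan output above appear to follow the pattern epoch milliseconds, random string, counter, joined by hyphens, presumably generated by the sink because no column was designated as the row key. The sketch below only takes such a key apart for inspection. parse_row_key is a hypothetical helper, and the meaning of the three parts is read off the output rather than taken from the Flume documentation.

```python
from datetime import datetime, timezone

def parse_row_key(key):
    """Split a generated row key into (UTC write time, random token, counter)."""
    millis, token, counter = key.split("-")
    written_at = datetime.fromtimestamp(int(millis) / 1000, tz=timezone.utc)
    return written_at, token, int(counter)

written_at, token, counter = parse_row_key("1560678526500-GnZMhll4wD-0")
print(written_at.isoformat(), token, counter)
```

Since these keys are not derived from id, collecting the same MySQL row twice would likely create a second HBase row instead of overwriting the first.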

Create a Hive table mapped to testdb:student and check the data

hive (default)> show databases;

OK

database_name

default

Time taken: 2.966 seconds, Fetched: 1 row(s)

hive (default)> create database testdb;

OK

Time taken: 0.226 seconds

hive (default)> CREATE EXTERNAL TABLE testdb.hivestu(key string, id int,name string,age int) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,info:id,info:name,info:age") TBLPROPERTIES("hbase.table.name" = "testdb:student");

OK

Time taken: 1.453 seconds

hive (default)> use testdb;

OK

Time taken: 0.017 seconds

hive (testdb)> show tables;

OK

tab_name

hivestu

Time taken: 0.019 seconds, Fetched: 1 row(s)

hive (testdb)> select * from hivestu;

OK

hivestu.key hivestu.id hivestu.name hivestu.age

1560678526500-GnZMhll4wD-0 1 wang 20

1560678526506-GnZMhll4wD-1 2 zhang 18

Time taken: 0.15 seconds, Fetched: 2 row(s)
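
The hbase.columns.mapping string in the CREATE EXTERNAL TABLE statement is matched to the Hive column list by position: the first entry (:key) maps to the HBase row key, and the others map to family:qualifier cells. A small sketch of that pairing, with pair_columns as a hypothetical helper written only for illustration:

```python
def pair_columns(hive_columns, mapping):
    """Pair each Hive column with the mapping entry at the same position."""
    entries = [entry.strip() for entry in mapping.split(",")]
    if len(entries) != len(hive_columns):
        raise ValueError("mapping needs exactly one entry per Hive column")
    return dict(zip(hive_columns, entries))

pairs = pair_columns(
    ["key", "id", "name", "age"],
    ":key,info:id,info:name,info:age",
)
print(pairs)
```

Because the table is EXTERNAL, Hive only reads through this mapping; the data itself stays in HBase.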

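Test near-real-time collection

Insert two more rows into MySQL and watch the console, which prints the relevant information

Check the HBase table; the incremental data has been collected and stored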

hbase(main):052:0> scan 'testdb:student'

ROW COLUMN+CELL

1560678526500-GnZMhll4wD-0 column=info:age, timestamp=1560678529624, value=20

1560678526500-GnZMhll4wD-0 column=info:id, timestamp=1560678529624, value=1

1560678526500-GnZMhll4wD-0 column=info:name, timestamp=1560678529624, value=wang

1560678526506-GnZMhll4wD-1 column=info:age, timestamp=1560678529624, value=18

1560678526506-GnZMhll4wD-1 column=info:id, timestamp=1560678529624, value=2

1560678526506-GnZMhll4wD-1 column=info:name, timestamp=1560678529624, value=zhang

1560678815764-GnZMhll4wD-2 column=info:age, timestamp=1560678818789, value=26

1560678815764-GnZMhll4wD-2 column=info:id, timestamp=1560678818789, value=3

1560678815764-GnZMhll4wD-2 column=info:name, timestamp=1560678818789, value=tom

1560678833785-GnZMhll4wD-3 column=info:age, timestamp=1560678836804, value=26

1560678833785-GnZMhll4wD-3 column=info:id, timestamp=1560678836804, value=4

1560678833785-GnZMhll4wD-3 column=info:name, timestamp=1560678836804, value=jerry

4 row(s) in 0.0320 seconds

Check the Hive table

hive (testdb)> select * from hivestu;

OK

hivestu.key hivestu.id hivestu.name hivestu.age

1560678526500-GnZMhll4wD-0 1 wang 20

1560678526506-GnZMhll4wD-1 2 zhang 18

1560678815764-GnZMhll4wD-2 3 tom 26

1560678833785-GnZMhll4wD-3 4 jerry 26

Time taken: 0.131 seconds, Fetched: 4 row(s)

Data can now be collected incrementally from MySQL into the integrated HBase and Hive table