goharbor harbor-helm setup notes

Start with a local install.

Docker is already available locally.

Install docker-compose: yum install docker-compose

Download the offline installer from https://github.com/goharbor/harbor/releases

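Installed this way, Helm chart support was not available (the offline installer enables the chart repository only when run with --with-chartmuseum), so I reinstalled on k8s using the Helm chart instead.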

https://github.com/goharbor/harbor-helm

Add the repo

helm repo add harbor https://helm.goharbor.io

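helm repo update

Now start the installation. For the persistent volumes I chose NFS yesterday but could not get it working. The reason: a PV and a PVC bind one-to-one, and I had statically created only one PV, which the mysql PVC had already claimed, so none of the later PVCs could obtain a PV. I therefore switched to dynamic provisioning to create the PVs.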
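With dynamic provisioning, each PVC names a StorageClass and the provisioner creates a matching PV on demand, so claims no longer compete for a single pre-created volume. A minimal sketch of such a claim, written from the shell; the claim name example-claim, the size, and the temporary file are illustrative assumptions, and nfs-client is the class name that appears in the install commands of these notes.

```shell
# Write an example PVC manifest to a temporary file.
# The claim name and size are illustrative, not taken from the Harbor chart.
pvc_file="$(mktemp)"
cat > "$pvc_file" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# Print the manifest; on a real cluster it would be applied with:
#   kubectl apply -f "$pvc_file"
cat "$pvc_file"
```

The Harbor chart creates its own claims; the persistence.persistentVolumeClaim.*.storageClass values only tell it which class to request.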

Reference for the external storage provisioner: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

External storage deployment reference:

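The storageClass should be managed-nfs-storage, the name defined in that deployment's manifests. (The install commands below use nfs-client, the class name present in this cluster.)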

First uninstall the previous release cleanly

helm del --purge harbor

Delete the PVCs

kubectl delete pvc harbor-harbor-chartmuseum

kubectl delete pvc harbor-harbor-jobservice

kubectl delete pvc harbor-harbor-registry

kubectl delete pvc data-harbor-harbor-redis-0

kubectl delete pvc database-data-harbor-harbor-database-0

Backslash line continuations need care. The following form does not run and fails with Error: This command needs 1 argument: chart name

helm install --name harbor --set \

expose.type=nodePort,expose.tls.enabled=false,expose.tls.commonName=harbor,\

persistence.persistentVolumeClaim.registry.storageClass=nfs-client, \

persistence.persistentVolumeClaim.registry.size=2Gi, \

persistence.persistentVolumeClaim.chartmuseum.storageClass=nfs-client, \

persistence.persistentVolumeClaim.chartmuseum.size=1Gi, \

persistence.persistentVolumeClaim.jobservice.storageClass=nfs-client,\

persistence.persistentVolumeClaim.database.storageClass=nfs-client, \

persistence.persistentVolumeClaim.redis.storageClass=nfs-client \

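harbor/harbor

The cause is the space between some of the commas and the backslash. Once the shell joins the continued lines, the --set value ends at that space and the next key=value piece becomes a separate positional argument, so helm no longer receives exactly one chart name. The corrected command further down puts the whole --set value on one line and runs.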
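The argument splitting can be reproduced without helm. A minimal sketch in plain shell: count_args is a hypothetical stand-in for helm that only prints how many arguments it received, and a=1, b=2 and chart/name are placeholder values.

```shell
# Hypothetical stand-in for helm: prints the number of arguments it received.
count_args() {
  echo "$#"
}

# Space before the backslash: "a=1," and "b=2" arrive as two separate words.
broken=$(count_args --set a=1, \
b=2 \
chart/name)

# No space before the backslash: the lines join into the single word "a=1,b=2".
fixed=$(count_args --set a=1,\
b=2 \
chart/name)

echo "with space after comma: $broken arguments"     # 4
echo "without space after comma: $fixed arguments"   # 3
```

In the first call the extra word is what helm would treat as a second chart name. Putting the settings in a values file and passing it with helm install -f avoids long --set strings altogether.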

helm install --name harbor \

--set expose.type=nodePort,expose.tls.enabled=false,expose.tls.commonName=harbor,persistence.persistentVolumeClaim.registry.storageClass=nfs-client,persistence.persistentVolumeClaim.registry.size=2Gi,persistence.persistentVolumeClaim.chartmuseum.storageClass=nfs-client,persistence.persistentVolumeClaim.chartmuseum.size=1Gi,persistence.persistentVolumeClaim.jobservice.storageClass=nfs-client,persistence.persistentVolumeClaim.database.storageClass=nfs-client,persistence.persistentVolumeClaim.redis.storageClass=nfs-client \

harbor/harbor

The output is as follows

[root@node0 ~]# helm install --name harbor \

> --set expose.type=nodePort,expose.tls.enabled=false,expose.tls.commonName=harbor,persistence.persistentVolumeClaim.registry.storageClass=nfs-client,persistence.persistentVolumeClaim.registry.size=2Gi,persistence.persistentVolumeClaim.chartmuseum.storageClass=nfs-client,persistence.persistentVolumeClaim.chartmuseum.size=1Gi,persistence.persistentVolumeClaim.jobservice.storageClass=nfs-client,persistence.persistentVolumeClaim.database.storageClass=nfs-client,persistence.persistentVolumeClaim.redis.storageClass=nfs-client \

> harbor/harbor

NAME: harbor

LAST DEPLOYED: Tue Jun 25 11:33:46 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME DATA AGE

harbor-harbor-chartmuseum 23 1s

harbor-harbor-clair 1 1s

harbor-harbor-core 34 1s

harbor-harbor-jobservice 1 1s

harbor-harbor-nginx 1 1s

harbor-harbor-notary-server 5 1s

harbor-harbor-registry 2 1s

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

harbor-harbor-chartmuseum 0/1 1 0 1s

harbor-harbor-clair 0/1 1 0 1s

harbor-harbor-core 0/1 1 0 1s

harbor-harbor-jobservice 0/1 0 0 1s

harbor-harbor-notary-server 0/1 0 0 1s

harbor-harbor-notary-signer 0/1 0 0 1s

harbor-harbor-portal 0/1 0 0 1s

harbor-harbor-registry 0/1 0 0 1s

==> v1/PersistentVolumeClaim

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

harbor-harbor-chartmuseum Pending nfs-client 1s

harbor-harbor-jobservice Bound pvc-0ab3ab52-96fa-11e9-837b-000c2915f050 1Gi RWO nfs-client 1s

harbor-harbor-registry Bound pvc-0ab41cac-96fa-11e9-837b-000c2915f050 2Gi RWO nfs-client 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

harbor-harbor-chartmuseum-7ff65b9699-f8s5x 0/1 Pending 0 1s

harbor-harbor-clair-54c47dfdfc-nxxkf 0/1 ContainerCreating 0 1s

harbor-harbor-core-5884f5fc7d-bj88g 0/1 ContainerCreating 0 1s

harbor-harbor-database-0 0/1 Pending 0 0s

harbor-harbor-jobservice-788bc864b6-9zmsz 0/1 Pending 0 1s

harbor-harbor-nginx-dbdb9d7ff-tcfks 0/1 Pending 0 1s

harbor-harbor-notary-server-6d85446444-mvtxj 0/1 Pending 0 1s

harbor-harbor-notary-signer-7794d76ff-mm7cm 0/1 Pending 0 1s

harbor-harbor-redis-0 0/1 Pending 0 0s

==> v1/Secret

NAME TYPE DATA AGE

harbor-harbor-chartmuseum Opaque 1 1s

harbor-harbor-core Opaque 7 1s

harbor-harbor-database Opaque 1 1s

harbor-harbor-jobservice Opaque 1 1s

harbor-harbor-registry Opaque 2 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

harbor NodePort 10.1.42.97 80:30002/TCP,4443:30004/TCP 1s

harbor-harbor-chartmuseum ClusterIP 10.1.29.63 80/TCP 1s

harbor-harbor-clair ClusterIP 10.1.213.52 6060/TCP,6061/TCP 1s

harbor-harbor-core ClusterIP 10.1.42.246 80/TCP 1s

harbor-harbor-database ClusterIP 10.1.237.0 5432/TCP 1s

harbor-harbor-jobservice ClusterIP 10.1.77.55 80/TCP 1s

harbor-harbor-notary-server ClusterIP 10.1.254.26 4443/TCP 1s

harbor-harbor-notary-signer ClusterIP 10.1.16.142 7899/TCP 1s

harbor-harbor-portal ClusterIP 10.1.231.103 80/TCP 1s

harbor-harbor-redis ClusterIP 10.1.117.105 6379/TCP 1s

harbor-harbor-registry ClusterIP 10.1.40.234 5000/TCP,8080/TCP 1s

==> v1/StatefulSet

NAME READY AGE

harbor-harbor-database 0/1 1s

harbor-harbor-redis 0/1 0s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

harbor-harbor-nginx 0/1 0 0 1s

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at https://core.harbor.domain.

For more details, please visit https://github.com/goharbor/harbor.

Next, check the status in the k8s dashboard

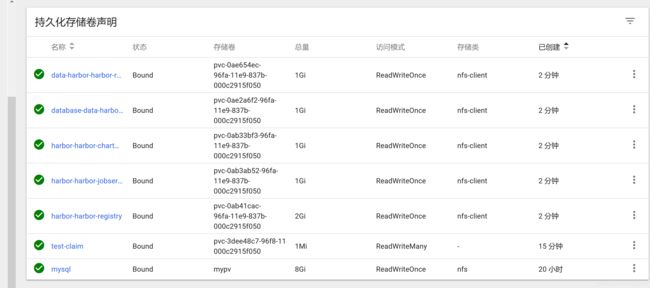

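All persistent volume claims succeeded

Each persistent volume claim is bound to a volume

Harbor is now deployed. How do we access its service?

Recall the four ways to expose the service

The way how to expose the service: ingress, clusterIP, nodePort or loadBalancer

We used expose.type=nodePort

So look at the port mapping of the service

The HTTP port should be 30002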

http://192.168.220.128:30002/harbor/sign-in?redirect_url=%2Fharbor%2Fprojects
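The NodePort can also be read from the PORT(S) column of the service listing in the install output. A small sketch in POSIX shell; node_port_for is a hypothetical helper, not part of kubectl or helm, and the input string is copied from the harbor service row of that output.

```shell
# Hypothetical helper: given a PORT(S) string such as "80:30002/TCP,4443:30004/TCP"
# and a service port, print the NodePort mapped to it.
node_port_for() {
  ports="$1"
  want="$2"
  old_ifs=$IFS
  IFS=,
  for entry in $ports; do
    IFS=$old_ifs
    svc_port=${entry%%:*}     # text before the first colon
    rest=${entry#*:}          # text after the first colon
    node_port=${rest%%/*}     # strip the "/TCP" suffix
    if [ "$svc_port" = "$want" ]; then
      echo "$node_port"
      return 0
    fi
  done
  IFS=$old_ifs
  return 1
}

http_port=$(node_port_for "80:30002/TCP,4443:30004/TCP" 80)
echo "Harbor HTTP NodePort: $http_port"
```

Entries without a colon, such as the 80/TCP of a ClusterIP service, never match, so the function returns a non-zero status for them.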

Default account: admin, password: Harbor12345

This port is also listed among the default values in the official documentation (https://github.com/goharbor/harbor-helm)

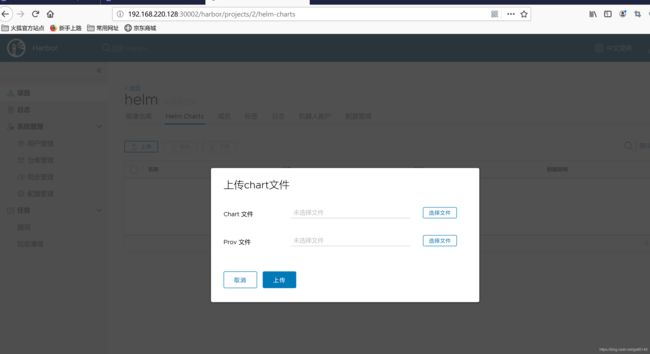

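Login succeeds and the Helm Charts tab appears

The usage process is recorded below

With such a good tool for storing and managing charts, how do we upload and use them?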

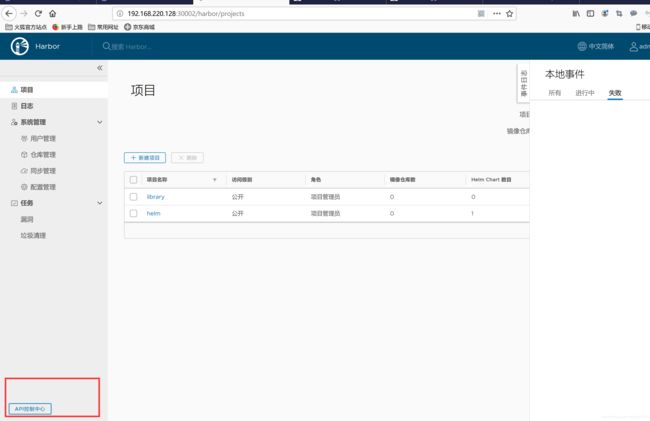

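The first step is to find the repo address. Everything I saw online made it look trivial and simply wrote the address out, but the address I wrote did not work

The API control center at the bottom left shows the API URL: http://192.168.220.128:30002/devcenter

This interface is handy and can be called directly

Rather than searching the web for the address, it is better to check the official site, so I looked there next

I found nothing useful on GitHub

Then I found a similar form online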

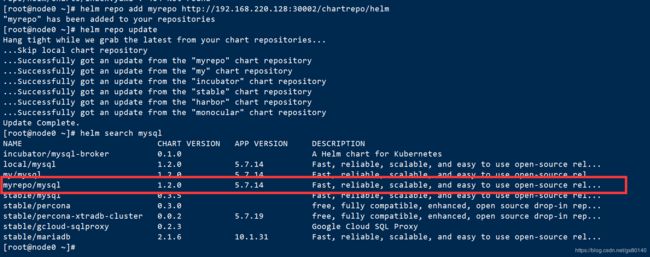

helm repo add myrepo http://192.168.220.128:30002/chartrepo/helm

chartrepo is a fixed path segment and helm is the project name I defined. The repo was added successfully
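The address pattern can be captured in a small shell function. This is only a sketch of the pattern used in these notes; chart_repo_url is a hypothetical helper, and the host, port and project are the values from this setup.

```shell
# Hypothetical helper: build the chart repo URL for a Harbor project.
# $1 = host, $2 = HTTP NodePort, $3 = Harbor project name
chart_repo_url() {
  printf 'http://%s:%s/chartrepo/%s\n' "$1" "$2" "$3"
}

repo_url=$(chart_repo_url 192.168.220.128 30002 helm)
echo "$repo_url"

# On a machine with helm installed, the repo would then be added with:
#   helm repo add myrepo "$repo_url"
```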

The mysql chart uploaded to the helm project in Harbor can now be found by searching

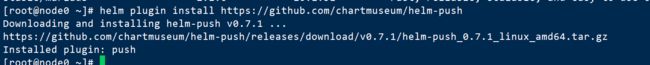

Next, practice pushing. The helm push plugin has to be installed beforehand

Try the command helm plugin install https://github.com/chartmuseum/helm-push

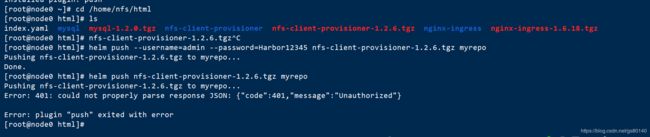

After installation, try a push. Pushing without a username and password fails

cd /home/nfs/html

helm push --username=admin --password=Harbor12345 nfs-client-provisioner-1.2.6.tgz myrepo