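A code walkthrough of a sequence-to-sequence language translation model (TensorFlow)

A walkthrough of the TensorFlow code for a sequence-to-sequence RNN machine translation model.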

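0x00. Preface

(Originally published on my blog; you are welcome to visit.)

I first saw this code in the article 基于RNN的语言模型与机器翻译NMT (RNN-based language models and neural machine translation). Wanting to trace it back to its source, I searched for the code and found Brok-Bucholtz/P4-Beta/language_translation/dlnd_language_translation.ipynb.

(A more complete version is in Language Translation.)

I need to understand this code and its network structure for work. It relies on many seq2seq functions I had not used before, so this post goes through the code piece by piece.

P.S. The code appears to target TensorFlow 1.0, whereas TensorFlow has since reached 1.3. More importantly, many of the functions it uses have changed since then. As a learning exercise, though, there is still a lot to take from it. For more recent code, see 从Encoder到Decoder实现Seq2Seq模型 - 知乎专栏.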

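0x01. Code walkthrough

1. Data preprocessing

Two functions are defined: text_to_ids parses the text, and sentence_to_ids converts the words of a sentence into their corresponding ids.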
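The post does not reproduce these two functions, so here is a minimal sketch of what they might look like. The signatures, the whitespace tokenization, the newline-separated sentences, and the '<EOS>' token appended to each target sentence are all assumptions made for illustration, not details confirmed by the source.

```python
def sentence_to_ids(sentence, vocab_to_int, append_eos=False):
    """Map each whitespace-separated word in a sentence to its id."""
    ids = [vocab_to_int[word] for word in sentence.split()]
    if append_eos:
        # Assumption: the vocabulary contains an '<EOS>' end-of-sentence token.
        ids.append(vocab_to_int['<EOS>'])
    return ids


def text_to_ids(source_text, target_text, source_vocab_to_int, target_vocab_to_int):
    """Convert newline-separated source and target text into lists of id lists."""
    source_ids = [sentence_to_ids(s, source_vocab_to_int)
                  for s in source_text.split('\n')]
    target_ids = [sentence_to_ids(s, target_vocab_to_int, append_eos=True)
                  for s in target_text.split('\n')]
    return source_ids, target_ids


# Toy vocabularies, purely illustrative.
source_vocab = {'new': 0, 'jersey': 1, 'is': 2, 'cold': 3}
target_vocab = {'<EOS>': 0, 'new': 1, 'jersey': 2, 'est': 3, 'froid': 4}
src, tgt = text_to_ids('new jersey is cold', 'new jersey est froid',
                       source_vocab, target_vocab)
print(src)  # [[0, 1, 2, 3]]
print(tgt)  # [[1, 2, 3, 4, 0]]
```

Appending an end marker only on the target side gives the decoder a token it can learn to emit when the translation is finished.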

2. Inputs

The function model_inputs creates the tf.placeholder objects.

3. Encoding

The RNN encoding layer:

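import tensorflow as tf  # the code targets the TensorFlow 1.0-era tf.contrib API

def encoding_layer(rnn_inputs, rnn_size, num_layers, keep_prob):
    """
    Create encoding layer
    :param rnn_inputs: Inputs for the RNN
    :param rnn_size: RNN Size
    :param num_layers: Number of layers
    :param keep_prob: Dropout keep probability
    :return: RNN state
    """
    # Encoder: first build a multi-layer LSTM. Each layer gets its own cell object;
    # the original [cell] * num_layers reuses one object and can fail on releases after 1.0.
    enc_cell = tf.contrib.rnn.MultiRNNCell(
        [tf.contrib.rnn.BasicLSTMCell(rnn_size) for _ in range(num_layers)])
    # Add dropout
    enc_cell = tf.contrib.rnn.DropoutWrapper(enc_cell, output_keep_prob=keep_prob)
    # Run it with dynamic_rnn; only the final state is passed on to the decoder
    _, state = tf.nn.dynamic_rnn(enc_cell, rnn_inputs, dtype=tf.float32)
    return state

4. Decoding: training layer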

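A few of the functions used here:

tf.contrib.seq2seq.simple_decoder_fn_train(encoder_state, name=None) is a simple decoder function for sequence-to-sequence models, meant to be used with dynamic_rnn_decoder. It is the simple training function and should be used when dynamic_rnn_decoder is in training mode.

tf.contrib.seq2seq.dynamic_rnn_decoder(cell, decoder_fn, inputs=None, sequence_length=None, parallel_iterations=None, swap_memory=False, time_major=False, scope=None, name=None) is a dynamic RNN decoder for sequence-to-sequence models, specified by an RNNCell and a decoder function. It has two modes, training and inference.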
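To make the training mode more concrete, here is a conceptual NumPy sketch of what decoding in training mode amounts to: the decoder starts from the encoder state and, at every step, reads the embedded ground-truth target rather than its own prediction. This is not the TensorFlow implementation; the plain tanh cell, the weight names, and the sizes are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, steps, embed_dim, hidden, vocab = 2, 4, 3, 5, 7

# Hypothetical parameters of a plain tanh RNN cell and an output projection.
W_x = rng.normal(size=(embed_dim, hidden))
W_h = rng.normal(size=(hidden, hidden))
W_out = rng.normal(size=(hidden, vocab))


def train_decode(encoder_state, dec_embed_input):
    """Run the decoder over the ground-truth inputs, one time step at a time."""
    state = encoder_state
    outputs = []
    for t in range(dec_embed_input.shape[1]):
        # The input at step t is the embedded target token, not a prediction.
        state = np.tanh(dec_embed_input[:, t] @ W_x + state @ W_h)
        outputs.append(state)
    return np.stack(outputs, axis=1)  # (batch, steps, hidden)


encoder_state = rng.normal(size=(batch, hidden))
dec_embed_input = rng.normal(size=(batch, steps, embed_dim))
train_pred = train_decode(encoder_state, dec_embed_input)
train_logits = train_pred @ W_out  # plays the role of output_fn
print(train_logits.shape)  # (2, 4, 7)
```

The projection to vocabulary size is applied after the loop, mirroring how the code in this post applies output_fn to the decoder outputs.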

def decoding_layer_train(encoder_state, dec_cell, dec_embed_input, sequence_length, decoding_scope,
                         output_fn, keep_prob):
    """
    Create a decoding layer for training
    :param encoder_state: Encoder State
    :param dec_cell: Decoder RNN Cell
    :param dec_embed_input: Decoder embedded input
    :param sequence_length: Sequence Length
    :param decoding_scope: TensorFlow Variable Scope for decoding
    :param output_fn: Function to apply the output layer
    :param keep_prob: Dropout keep probability
    :return: Train Logits
    """
    # Training decoder function, initialised with the encoder state
    train_decoder_fn = tf.contrib.seq2seq.simple_decoder_fn_train(encoder_state)
    train_pred, _, _ = tf.contrib.seq2seq.dynamic_rnn_decoder(
        dec_cell, train_decoder_fn, dec_embed_input, sequence_length, scope=decoding_scope)
    # Apply output function
    train_logits = output_fn(train_pred)
    # Dropout layer
    train_logits = tf.nn.dropout(train_logits, keep_prob)
    return train_logits

5. Decoding: inference layer

tf.contrib.seq2seq.simple_decoder_fn_inference(output_fn, encoder_state, embeddings, start_of_sequence_id, end_of_sequence_id, maximum_length, num_decoder_symbols, dtype=tf.int32, name=None) is a simple decoder function for sequence-to-sequence models, meant to be used with dynamic_rnn_decoder.

simple_decoder_fn_inference is the simple inference function for sequence-to-sequence models. It should be used when dynamic_rnn_decoder is in inference mode.
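In inference mode there are no target tokens to read, so the decoder has to feed its own predictions back in. The following NumPy sketch shows that idea for a single sequence: start from the start-of-sequence id, take the argmax at each step, and stop at the end-of-sequence id or after maximum_length steps. It is a conceptual illustration only; the tanh cell, the weights, and the GO_ID and EOS_ID values are assumptions, not the library's internals.

```python
import numpy as np

rng = np.random.default_rng(1)
embed_dim, hidden, vocab = 3, 5, 6
GO_ID, EOS_ID = 0, 1  # hypothetical ids for the start and end tokens

# Hypothetical embedding table, RNN cell weights and output projection.
embeddings = rng.normal(size=(vocab, embed_dim))
W_x = rng.normal(size=(embed_dim, hidden))
W_h = rng.normal(size=(hidden, hidden))
W_out = rng.normal(size=(hidden, vocab))


def greedy_decode(encoder_state, maximum_length):
    """Decode one sequence, feeding each argmax prediction back as the next input."""
    state = encoder_state
    token = GO_ID
    decoded = []
    for _ in range(maximum_length):
        state = np.tanh(embeddings[token] @ W_x + state @ W_h)
        logits = state @ W_out
        token = int(np.argmax(logits))
        decoded.append(token)
        if token == EOS_ID:
            break  # the model signalled the end of the sentence
    return decoded


tokens = greedy_decode(rng.normal(size=hidden), maximum_length=10)
print(tokens)
```

This explains why the inference function needs the embedding matrix and the start and end ids as arguments, while the training function only needs the encoder state.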

def decoding_layer_infer(encoder_state, dec_cell, dec_embeddings, start_of_sequence_id, end_of_sequence_id,
                         maximum_length, vocab_size, decoding_scope, output_fn, keep_prob):
    """
    Create a decoding layer for inference
    :param encoder_state: Encoder state
    :param dec_cell: Decoder RNN Cell
    :param dec_embeddings: Decoder embeddings
    :param start_of_sequence_id: GO ID
    :param end_of_sequence_id: EOS Id
    :param maximum_length: The maximum allowed time steps to decode
    :param vocab_size: Size of vocabulary
    :param decoding_scope: TensorFlow Variable Scope for decoding
    :param output_fn: Function to apply the output layer
    :param keep_prob: Dropout keep probability
    :return: Inference Logits
    """
    # Inference decoder function: feeds each prediction back in as the next input
    infer_decoder_fn = tf.contrib.seq2seq.simple_decoder_fn_inference(
        output_fn, encoder_state, dec_embeddings, start_of_sequence_id, end_of_sequence_id,
        maximum_length, vocab_size)
    inference_logits, _, _ = tf.contrib.seq2seq.dynamic_rnn_decoder(dec_cell, infer_decoder_fn, scope=decoding_scope)
    # Dropout layer (the training loop later feeds keep_prob = 1.0 at inference time)
    inference_logits = tf.nn.dropout(inference_logits, keep_prob)
    return inference_logits

6. Building the decoding layer

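Quoting the original notebook:

Build the decoding layer

- Implement decoding_layer() to create a decoder RNN layer.
- Create an RNN cell for decoding using rnn_size and num_layers.
- Create the output function using a lambda to transform its input, logits, to class logits.
- Use your decoding_layer_train(encoder_state, dec_cell, dec_embed_input, sequence_length, decoding_scope, output_fn, keep_prob) function to get the training logits.
- Use your decoding_layer_infer(encoder_state, dec_cell, dec_embeddings, start_of_sequence_id, end_of_sequence_id, maximum_length, vocab_size, decoding_scope, output_fn, keep_prob) function to get the inference logits.

(Note: you need to use tf.variable_scope to share variables between training and inference.)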

def decoding_layer(dec_embed_input, dec_embeddings, encoder_state, vocab_size, sequence_length, rnn_size,
                   num_layers, target_vocab_to_int, keep_prob):
    """
    Create decoding layer
    :param dec_embed_input: Decoder embedded input
    :param dec_embeddings: Decoder embeddings
    :param encoder_state: The encoded state
    :param vocab_size: Size of vocabulary
    :param sequence_length: Sequence Length
    :param rnn_size: RNN Size
    :param num_layers: Number of layers
    :param target_vocab_to_int: Dictionary to go from the target words to an id
    :param keep_prob: Dropout keep probability
    :return: Tuple of (Training Logits, Inference Logits)
    """
    # Decoder RNNs: a multi-layer LSTM, one cell object per layer
    dec_cell = tf.contrib.rnn.MultiRNNCell(
        [tf.contrib.rnn.BasicLSTMCell(rnn_size) for _ in range(num_layers)])

    with tf.variable_scope("decoding") as decoding_scope:
        # Output layer: a fully connected layer that projects to vocab_size
        output_fn = lambda x: tf.contrib.layers.fully_connected(x, vocab_size, None, scope=decoding_scope)
        # Decoding: training
        train_logits = decoding_layer_train(encoder_state, dec_cell, dec_embed_input, sequence_length, decoding_scope,
                                            output_fn, keep_prob)

    # Reopen the same scope with reuse=True so inference shares the trained variables
    with tf.variable_scope("decoding", reuse=True) as decoding_scope:
        # Decoding: inference
        infer_logits = decoding_layer_infer(encoder_state, dec_cell, dec_embeddings, target_vocab_to_int['<GO>'], target_vocab_to_int['<EOS>'],
                                            sequence_length, vocab_size, decoding_scope, output_fn, keep_prob)

    return train_logits, infer_logits

7. Building the neural network

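Quoting the original notebook:

Apply the functions implemented above to:

- Apply embedding to the input data for the encoder.
- Encode the input using encoding_layer(rnn_inputs, rnn_size, num_layers, keep_prob).
- Process the target data using the process_decoding_input(target_data, target_vocab_to_int, batch_size) function.
- Apply embedding to the target data for the decoder.
- Decode the encoded input using decoding_layer(dec_embed_input, dec_embeddings, encoder_state, vocab_size, sequence_length, rnn_size, num_layers, target_vocab_to_int, keep_prob).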
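The post does not show process_decoding_input. A common way to prepare decoder inputs, and my assumption about what this step does, is to drop the last id of every target row and prepend the start token, so that the decoder input at step t is the target at step t-1. The NumPy sketch below illustrates that idea; the function name process_decoding_input_np and the id values are hypothetical.

```python
import numpy as np


def process_decoding_input_np(target_data, go_id):
    """Drop the last id of every row and prepend the start-of-sequence id."""
    target_data = np.asarray(target_data)
    batch_size = target_data.shape[0]
    go_column = np.full((batch_size, 1), go_id, dtype=target_data.dtype)
    return np.concatenate([go_column, target_data[:, :-1]], axis=1)


# Toy batch: 1 stands for an end token, 0 for padding, 3 for the start token.
targets = np.array([[10, 11, 12, 1],
                    [20, 21, 1, 0]])
decoder_inputs = process_decoding_input_np(targets, go_id=3)
print(decoder_inputs.tolist())  # [[3, 10, 11, 12], [3, 20, 21, 1]]
```

The output keeps the same shape as the input, which is what lets the shifted rows line up one-to-one with the unshifted targets in the loss.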

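tf.contrib.layers.embed_sequence maps a sequence of symbols (for example, word ids) to a sequence of embeddings. A typical use case is reusing embeddings between the encoder and the decoder.

tf.nn.embedding_lookup looks up ids in a list of embedding tensors.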
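For a single embedding matrix, the lookup is simply row selection: each id picks out one row. A small NumPy sketch, with sizes and ids chosen arbitrarily for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)
target_vocab_size, dec_embedding_size = 6, 4

# One row per vocabulary entry, analogous to dec_embeddings in the code.
dec_embeddings = rng.uniform(size=(target_vocab_size, dec_embedding_size))

# A batch of 2 sequences with 3 ids each.
target_ids = np.array([[3, 5, 1],
                       [3, 2, 0]])

# Fancy indexing gathers the row for every id.
target_embed = dec_embeddings[target_ids]
print(target_embed.shape)  # (2, 3, 4)
```

Keeping the matrix as an explicit variable, as the code in this post does for the decoder, makes it possible to pass the same matrix to the inference decoder, which has to embed its own predictions.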

def seq2seq_model(input_data, target_data, keep_prob, batch_size, sequence_length, source_vocab_size, target_vocab_size,
                  enc_embedding_size, dec_embedding_size, rnn_size, num_layers, target_vocab_to_int):
    """
    Build the Sequence-to-Sequence part of the neural network
    :param input_data: Input placeholder
    :param target_data: Target placeholder
    :param keep_prob: Dropout keep probability placeholder
    :param batch_size: Batch Size
    :param sequence_length: Sequence Length
    :param source_vocab_size: Source vocabulary size
    :param target_vocab_size: Target vocabulary size
    :param enc_embedding_size: Encoder embedding size
    :param dec_embedding_size: Decoder embedding size
    :param rnn_size: RNN Size
    :param num_layers: Number of layers
    :param target_vocab_to_int: Dictionary to go from the target words to an id
    :return: Tuple of (Training Logits, Inference Logits)
    """
    # Embed the source ids and encode them
    enc_embed_input = tf.contrib.layers.embed_sequence(input_data, source_vocab_size, enc_embedding_size)
    encoder_state = encoding_layer(enc_embed_input, rnn_size, num_layers, keep_prob)

    # Prepare the decoder input from the target data
    target_data = process_decoding_input(target_data, target_vocab_to_int, batch_size)

    # The embedding matrix is kept as an explicit variable so the inference decoder can reuse it
    #target_embed = tf.contrib.layers.embed_sequence(target_data, target_vocab_size, dec_embedding_size)
    dec_embeddings = tf.Variable(tf.random_uniform([target_vocab_size, dec_embedding_size]))
    target_embed = tf.nn.embedding_lookup(dec_embeddings, target_data)

    return decoding_layer(target_embed, dec_embeddings, encoder_state, target_vocab_size, sequence_length, rnn_size,
                          num_layers, target_vocab_to_int, keep_prob)

8. Training the neural network

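- Define the hyperparameters

- Build the graph

The graph-building code uses the following functions:

- tf.identity returns a tensor with the same shape and contents as the input tensor or value.
- tf.contrib.seq2seq.sequence_loss computes the weighted cross-entropy loss for a sequence of logits.
- tf.train.Optimizer.compute_gradients(loss, var_list=None, gate_gradients=1, aggregation_method=None, colocate_gradients_with_ops=False, grad_loss=None) computes the gradients of loss for the variables in var_list. It is the first part of minimize().
- tf.train.Optimizer.apply_gradients(grads_and_vars, global_step=None, name=None) applies gradients to variables. It is the second part of minimize().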
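To make the loss and the clipping step more concrete, here is a NumPy sketch. sequence_loss_np is a hypothetical helper that mimics a weighted cross-entropy averaged over all weighted positions, which is my understanding of the default behaviour of sequence_loss; np.clip stands in for the element-wise clipping that tf.clip_by_value applies to each gradient between compute_gradients and apply_gradients.

```python
import numpy as np


def sequence_loss_np(logits, targets, weights):
    """Weighted softmax cross-entropy averaged over all weighted positions."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    batch_idx, time_idx = np.indices(targets.shape)
    crossent = -log_probs[batch_idx, time_idx, targets]  # (batch, time)
    return (crossent * weights).sum() / (weights.sum() + 1e-12)


logits = np.zeros((2, 3, 4))  # a uniform prediction over 4 classes
targets = np.array([[0, 1, 2], [3, 0, 1]])
weights = np.ones((2, 3))     # all positions count equally
loss = sequence_loss_np(logits, targets, weights)
print(round(float(loss), 4))  # 1.3863, i.e. ln(4)

# Element-wise clipping of a gradient to the range [-1, 1].
grad = np.array([-3.0, -0.5, 0.2, 7.0])
clipped = np.clip(grad, -1.0, 1.0)
print(clipped.tolist())  # [-1.0, -0.5, 0.2, 1.0]
```

Splitting minimize() into its two halves is what makes room for the clipping: the gradients can be modified after they are computed and before they are applied.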

train_graph = tf.Graph()
with train_graph.as_default():
    input_data, targets, lr, keep_prob = model_inputs()
    sequence_length = tf.placeholder_with_default(max_source_sentence_length, None, name='sequence_length')
    input_shape = tf.shape(input_data)

    # The model built above; note that the source sequence is fed in reversed
    train_logits, inference_logits = seq2seq_model(
        tf.reverse(input_data, [-1]), targets, keep_prob, batch_size, sequence_length, len(source_vocab_to_int), len(target_vocab_to_int),
        encoding_embedding_size, decoding_embedding_size, rnn_size, num_layers, target_vocab_to_int)

    # Give the inference output a name so it can be looked up later
    tf.identity(inference_logits, 'logits')

    with tf.name_scope("optimization"):
        # Loss function
        cost = tf.contrib.seq2seq.sequence_loss(
            train_logits,
            targets,
            tf.ones([input_shape[0], sequence_length]))

        # Optimizer
        optimizer = tf.train.AdamOptimizer(lr)

        # Gradient Clipping
        gradients = optimizer.compute_gradients(cost)
        capped_gradients = [(tf.clip_by_value(grad, -1., 1.), var) for grad, var in gradients if grad is not None]
        train_op = optimizer.apply_gradients(capped_gradients)

- Training

import time

import numpy as np

import helper  # the project's own helper module (pad_sentence_batch, batch_data)

def get_accuracy(target, logits):
    """
    Calculate accuracy
    """
    # Pad whichever of target/logits is shorter along the time axis
    max_seq = max(target.shape[1], logits.shape[1])
    if max_seq - target.shape[1]:
        target = np.pad(
            target,
            [(0,0),(0,max_seq - target.shape[1])],
            'constant')
    if max_seq - logits.shape[1]:
        logits = np.pad(
            logits,
            [(0,0),(0,max_seq - logits.shape[1]), (0,0)],
            'constant')

    return np.mean(np.equal(target, np.argmax(logits, 2)))

# The first batch is held out for validation; the rest is used for training
train_source = source_int_text[batch_size:]
train_target = target_int_text[batch_size:]

valid_source = helper.pad_sentence_batch(source_int_text[:batch_size])
valid_target = helper.pad_sentence_batch(target_int_text[:batch_size])

with tf.Session(graph=train_graph) as sess:
    sess.run(tf.global_variables_initializer())

    for epoch_i in range(epochs):
        for batch_i, (source_batch, target_batch) in enumerate(
                helper.batch_data(train_source, train_target, batch_size)):
            start_time = time.time()

            # One optimisation step
            _, loss = sess.run(
                [train_op, cost],
                {input_data: source_batch,
                 targets: target_batch,
                 lr: learning_rate,
                 sequence_length: target_batch.shape[1],
                 keep_prob: keep_probability})

            # Evaluate with dropout disabled
            batch_train_logits = sess.run(
                inference_logits,
                {input_data: source_batch, keep_prob: 1.0})
            batch_valid_logits = sess.run(
                inference_logits,
                {input_data: valid_source, keep_prob: 1.0})

            train_acc = get_accuracy(target_batch, batch_train_logits)
            valid_acc = get_accuracy(np.array(valid_target), batch_valid_logits)
            end_time = time.time()
            print('Epoch {:>3} Batch {:>4}/{} - Train Accuracy: {:>6.3f}, Validation Accuracy: {:>6.3f}, Loss: {:>6.3f}'
                  .format(epoch_i, batch_i, len(source_int_text) // batch_size, train_acc, valid_acc, loss))
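The padding inside get_accuracy is easy to misread, so here is a small worked example on made-up data. The function is copied from the code above so the block runs on its own; the target ids and logits are invented for illustration.

```python
import numpy as np


def get_accuracy(target, logits):
    """Pad the shorter of target/logits along the time axis, then compare ids."""
    max_seq = max(target.shape[1], logits.shape[1])
    if max_seq - target.shape[1]:
        target = np.pad(target, [(0, 0), (0, max_seq - target.shape[1])], 'constant')
    if max_seq - logits.shape[1]:
        logits = np.pad(logits, [(0, 0), (0, max_seq - logits.shape[1]), (0, 0)], 'constant')
    return np.mean(np.equal(target, np.argmax(logits, 2)))


# Two target sentences of length 3; the model only produced 2 time steps.
target = np.array([[2, 1, 0],
                   [3, 3, 1]])
logits = np.zeros((2, 2, 4))
logits[0, 0, 2] = 1.0  # predicts 2 (correct)
logits[0, 1, 1] = 1.0  # predicts 1 (correct)
logits[1, 0, 3] = 1.0  # predicts 3 (correct)
logits[1, 1, 0] = 1.0  # predicts 0 (wrong, the target is 3)

# The padded third step is all zeros, so argmax yields id 0 there.
acc = get_accuracy(target, logits)
print(round(float(acc), 4))  # 0.6667, i.e. 4 matches out of 6 positions
```

A padded position therefore counts as correct only when the target id at that position is also 0, so the reported accuracy depends on how much padding a batch contains.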

0x02. Network structure

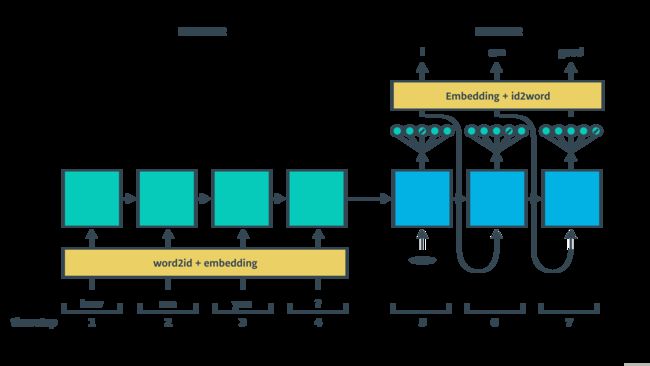

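The network in this code can be viewed as two stages, an encoder and a decoder. The encoder is a multi-layer LSTM run through dynamic_rnn. The decoder splits into a training path and an inference path, and both take the encoder's final state and a second multi-layer LSTM as inputs.

A seq2seq model consists of an encoder and a decoder: the encoder compresses the input sequence into a state vector, and the decoder takes that state vector as its input and produces the output sequence. A common choice, used here as well, is an LSTM (RNN) for both the encoder and the decoder. (I hope to write a fuller summary of seq2seq later.)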

0x03. References

从Encoder到Decoder实现Seq2Seq模型