Caffe First Steps 3: Training with the Dataset and the Designed Network Model

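Continuing from Caffe First Steps 1 and 2, we have already made some progress: the dataset is generated, and the mean file and the network definition file are ready. That means we can now train the model. First, let's take stock of everything training needs.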

Here is the training checklist:

(1) Dataset: ready, at ./caffe/forkrecognition/train_lmdb and ./caffe/forkrecognition/test_lmdb

(2) Network definition file: ready, ./caffe/forkrecognition/train_val.prototxt

(3) Mean file: ready, ./caffe/forkrecognition/imagenet_mean.binaryproto

(4) Solver (training parameter) file: not yet prepared

(5) Training script: not yet prepared
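Before moving on, it can help to confirm that the items marked "ready" really exist. The following is a minimal sketch that assumes you run it from the caffe root directory, since the training script later in this post uses paths relative to that directory. `check_inputs` is a hypothetical helper name, not part of Caffe.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Caffe): report every path that does not exist
# and return non-zero if anything is missing.
check_inputs() {
  local missing=0 path
  for path in "$@"; do
    if [ ! -e "$path" ]; then
      echo "missing: $path" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, from the caffe root: `check_inputs forkrecognition/train_lmdb forkrecognition/test_lmdb forkrecognition/train_val.prototxt forkrecognition/imagenet_mean.binaryproto && echo "all inputs present"`.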

With the checklist in hand, the next step should be clearer. Let's prepare the solver file first. In ./caffe/forkrecognition/, create a file named solver.prototxt and put the following in it:

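```
net: "forkrecognition/train_val.prototxt"   # path to the network definition file (relative to the caffe root)
test_iter: 10                               # number of test batches run in each test pass
test_interval: 100                          # run a test pass every 100 training iterations
base_lr: 0.0001                             # base learning rate
lr_policy: "step"                           # lr = base_lr * gamma ^ floor(iter / stepsize)
gamma: 0.1                                  # factor applied to the learning rate at each step
stepsize: 100                               # iterations between learning-rate drops
display: 20                                 # print training information every 20 iterations
max_iter: 10000                             # total number of training iterations
momentum: 0.9                               # SGD momentum
weight_decay: 0.0005                        # L2 regularization strength
snapshot: 2000                              # save a snapshot every 2000 iterations
snapshot_prefix: "forkrecognition/fork_alexnet_train"   # file-name prefix for snapshots
solver_mode: GPU                            # train on the GPU
```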
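With `lr_policy: "step"`, Caffe multiplies the learning rate by `gamma` every `stepsize` iterations, so lr = base_lr × gamma^floor(iter / stepsize). The sketch below uses two hypothetical helpers that are not part of Caffe. The first evaluates that formula with awk so you can check the schedule before a long run. With the values above, the rate is already about 1e-06 by iteration 200 and about 1e-14 by iteration 1000, so most of the 10000 iterations would make only negligible updates. A larger `stepsize` may be worth considering. The second helper shows how many test images each evaluation covers: `test_iter` × the TEST-phase `batch_size`, which is 5 in our train_val.prototxt, giving 50 images.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Caffe): learning rate under lr_policy "step",
# lr = base_lr * gamma ^ floor(iter / stepsize)
step_lr() {
  local base_lr=$1 gamma=$2 stepsize=$3 iter=$4
  awk -v b="$base_lr" -v g="$gamma" -v s="$stepsize" -v i="$iter" \
    'BEGIN { printf "%g\n", b * g ^ int(i / s) }'
}

# Hypothetical helper: images evaluated per test pass = test_iter * TEST batch_size
images_per_test() {
  echo $(( $1 * $2 ))
}

# Print the schedule implied by the solver above at a few iterations
for it in 0 100 200 500 1000; do
  printf 'iter %5d  lr %s\n' "$it" "$(step_lr 0.0001 0.1 100 "$it")"
done
echo "images per test: $(images_per_test 10 5)"
```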

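With the training parameters settled, we write the training script. Create train_caffenet.sh in ./caffe/forkrecognition/ with the following content:

```sh
#!/usr/bin/env sh
set -e   # stop at the first failing command

echo "begin:"
./build/tools/caffe train --solver=forkrecognition/solver.prototxt   # specify the solver (training parameter) file
echo "end"
```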

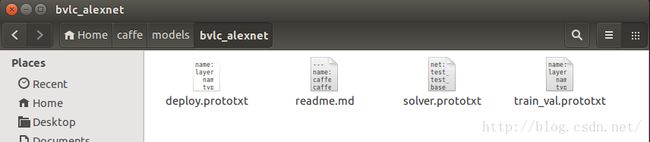

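Rather than training from scratch, we will build on the official pretrained AlexNet. Open the readme.md file under ./caffe/models/bvlc_alexnet/ and you will see: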

---

name: BVLC AlexNet Model

caffemodel: bvlc_alexnet.caffemodel

caffemodel_url: http://dl.caffe.berkeleyvision.org/bvlc_alexnet.caffemodel #official model download URL

license: unrestricted

sha1: 9116a64c0fbe4459d18f4bb6b56d647b63920377

caffe_commit: 709dc15af4a06bebda027c1eb2b3f3e3375d5077

---

This model is a replication of the model described in the [AlexNet](http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks) publication.

Differences:

- not training with the relighting data-augmentation;

- initializing non-zero biases to 0.1 instead of 1 (found necessary for training, as initialization to 1 gave flat loss).

The bundled model is the iteration 360,000 snapshot.

The best validation performance during training was iteration 358,000 with validation accuracy 57.258% and loss 1.83948.

This model obtains a top-1 accuracy 57.1% and a top-5 accuracy 80.2% on the validation set, using just the center crop.

(Using the average of 10 crops, (4 + 1 center) * 2 mirror, should obtain a bit higher accuracy.)

This model was trained by Evan Shelhamer @shelhamer

## License

This model is released for unrestricted use.
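The `sha1` field in the README lets you confirm that the downloaded weights are intact. The following is a minimal sketch that assumes GNU coreutils' `sha1sum` is available; on macOS, `shasum -a 1` does the same job. `verify_sha1` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Caffe): compare a file's SHA-1 digest with an expected value.
verify_sha1() {
  local file=$1 expected=$2 actual
  actual=$(sha1sum "$file" | awk '{ print $1 }')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got $actual, expected $expected)" >&2
    return 1
  fi
}
```

For example, after downloading from the caffe root with `wget -O forkrecognition/bvlc_alexnet.caffemodel http://dl.caffe.berkeleyvision.org/bvlc_alexnet.caffemodel`, run `verify_sha1 forkrecognition/bvlc_alexnet.caffemodel 9116a64c0fbe4459d18f4bb6b56d647b63920377`.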

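Now download bvlc_alexnet.caffemodel and save it in ./caffe/forkrecognition. Here is something worth knowing: the network definition train_val.prototxt that I wrote in Caffe First Steps 2 was adapted from ./caffe/models/bvlc_alexnet/train_val.prototxt, and I did not even change the file name. However, our classification task has different inputs and outputs from the classic AlexNet. To reuse the classic model during training, we rename the layers that differ from the classic network. That sounds a bit convoluted, so let's look concretely at what we changed. First, open ./caffe/models/bvlc_alexnet/train_val.prototxt, reproduced below: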

name: "AlexNet"

layer {

name: "data" #name of the data layer used for training

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: true

crop_size: 227

mean_file: "data/ilsvrc12/imagenet_mean.binaryproto" #mean file used for training

}

data_param {

source: "examples/imagenet/ilsvrc12_train_lmdb" #training set

batch_size: 256 #training batch size

backend: LMDB

}

}

layer {

name: "data" #name of the data layer used for testing

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

mirror: false

crop_size: 227

mean_file: "data/ilsvrc12/imagenet_mean.binaryproto" #mean file used for testing

}

data_param {

source: "examples/imagenet/ilsvrc12_val_lmdb" #test set

batch_size: 50 #test batch size

backend: LMDB

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "norm1"

type: "LRN"

bottom: "conv1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool1"

type: "Pooling"

bottom: "norm1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "pool1"

top: "conv2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "norm2"

type: "LRN"

bottom: "conv2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool2"

type: "Pooling"

bottom: "norm2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "pool2"

top: "conv3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8" #eighth fully connected layer

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 1000 #number of output neurons

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "fc8"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc8"

bottom: "label"

top: "loss"

}

Next, open ./caffe/forkrecognition/train_val.prototxt. This is the file I wrote in Caffe First Steps 2, and it is reproduced below as well:

name: "AlexNet"

layer {

name: "forkdata" #name of the data layer used to train the fork classifier

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: true

crop_size: 227

mean_file: "forkrecognition/imagenet_mean.binaryproto" #mean file used to train the fork classifier

}

data_param {

source: "forkrecognition/train_lmdb" #training set for the fork classifier

batch_size: 5 #training batch size for the fork classifier

backend: LMDB

}

}

layer {

name: "forkdata" #name of the data layer used to test the fork classifier

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

mirror: false

crop_size: 227

mean_file: "forkrecognition/imagenet_mean.binaryproto" #mean file used to test the fork classifier

}

data_param {

source: "forkrecognition/test_lmdb" #test set for the fork classifier

batch_size: 5 #test batch size

backend: LMDB

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "norm1"

type: "LRN"

bottom: "conv1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool1"

type: "Pooling"

bottom: "norm1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "pool1"

top: "conv2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "norm2"

type: "LRN"

bottom: "conv2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool2"

type: "Pooling"

bottom: "norm2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "pool2"

top: "conv3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "forkfc8" #name of the eighth fully connected layer

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 2 #number of output neurons of the fork classifier: 0 = no fork, 1 = fork

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "fc8"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc8"

bottom: "label"

top: "loss"

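}

Sharp-eyed readers will already have spotted it: the two files differ in only three places. Those places are marked with comments in the code, and everything without a comment is identical.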

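Difference 1: the name and parameters of the data layer used for training

Difference 2: the name and parameters of the data layer used for testing

Difference 3: the name and parameters of the eighth fully connected layer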

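The reason the parameters differ is straightforward: they are tailored to our own task. But why change the names when the parameters change? During training, we want to reuse the weights of the classic model's layers, but not for the layers whose parameters differ from it. Caffe matches pretrained weights to layers by name, so we give those layers new names of our own.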
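To see which layers this affects, the sketch below lists the layer names in two prototxt files. It then splits them into names the files share and names that exist only in the new file. The sketch relies on how Caffe treats `--weights`. Parameters are copied into layers whose names match a layer in the pretrained model, and a name match with a different parameter shape (such as a 1000-output versus a 2-output fc8) aborts with an error. Layers with new names keep their filler initialization. Data layers have no learnable parameters, so renaming them does not change what gets copied; the rename that matters is fc8 → forkfc8. There are several assumptions here. `layer_names` and `compare_layers` are hypothetical helpers. The classic train_val.prototxt stands in for the layer names stored in bvlc_alexnet.caffemodel. The awk parsing only handles the simple layout used above, with one `name:` line per `layer {` block.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the layer names in a prototxt file,
# skipping the top-level net name that appears before the first "layer {".
layer_names() {
  awk '
    /^[ \t]*layer[ \t]*[{]/ { in_layers = 1 }
    in_layers && /^[ \t]*name:/ {
      split($0, parts, "\"")
      print parts[2]
    }
  ' "$1"
}

# Hypothetical helper: names shared by both files (candidates for pretrained weights)
# and names only in the new file (these keep their filler initialization).
compare_layers() {
  local old_names new_names
  old_names=$(mktemp)
  new_names=$(mktemp)
  layer_names "$1" | sort -u > "$old_names"
  layer_names "$2" | sort -u > "$new_names"
  echo "shared:"
  comm -12 "$old_names" "$new_names"
  echo "new only:"
  comm -13 "$old_names" "$new_names"
  rm -f "$old_names" "$new_names"
}
```

From the caffe root, run `compare_layers models/bvlc_alexnet/train_val.prototxt forkrecognition/train_val.prototxt`. For the two files above, the "new only" list should contain just forkdata and forkfc8.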

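Next, modify the training script as follows:

```sh
#!/usr/bin/env sh
set -e   # stop at the first failing command

echo "begin:"
# --solver:  the solver (training parameter) file
# --weights: the pretrained classic model to initialize from
./build/tools/caffe train \
    --solver=forkrecognition/solver.prototxt \
    --weights=forkrecognition/bvlc_alexnet.caffemodel
echo "end"
```

Now, from the caffe directory, run the following command to start training. If the shell reports "permission denied", first make the script executable with `chmod +x forkrecognition/train_caffenet.sh`.

```sh
./forkrecognition/train_caffenet.sh
```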

Watching the loss fall step by step is quite satisfying, isn't it?
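To keep a record of that curve, one option is to save the console output and extract the training loss. The following is a minimal sketch with two assumptions. First, the `caffe` tool writes its log to stderr, hence `2>&1`. Second, training progress lines contain `Iteration N` followed later by `loss = X`. That format is assumed, so check it against your own log. `loss_curve` is a hypothetical helper. The Caffe source tree also ships its own log parser under tools/extra, which may be more robust.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a Caffe training log on stdin and print "iteration loss" pairs.
# Assumes progress lines look like "... Iteration 20, loss = 0.69"; some versions insert
# "(x iter/s, ...)" between the two parts, which the field scan below tolerates.
loss_curve() {
  awk '
    /Iteration [0-9]+.*loss = / {
      iter = ""; loss = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Iteration") { iter = $(i + 1); gsub(/[^0-9]/, "", iter) }
        if ($i == "loss" && $(i + 1) == "=") { loss = $(i + 2) }
      }
      if (iter != "" && loss != "") print iter, loss
    }
  '
}
```

For example, run `./forkrecognition/train_caffenet.sh 2>&1 | tee forkrecognition/train.log`, then `loss_curve < forkrecognition/train.log`.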

Once you reach the point shown below, training is complete!

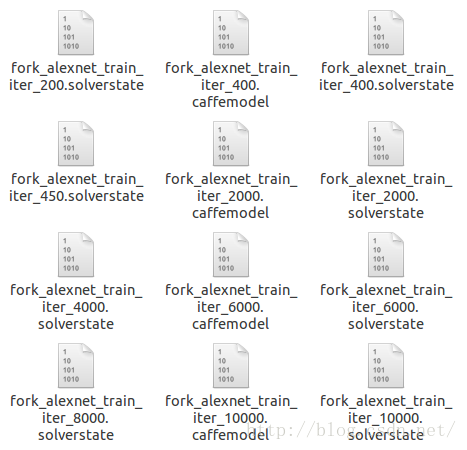

Take a look in ./caffe/forkrecognition/: there should now be a number of model snapshots. At last we have a model of our own!
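Caffe names each snapshot `<snapshot_prefix>_iter_<N>.caffemodel`, plus a matching `.solverstate` file for resuming. With the solver above, the files are therefore forkrecognition/fork_alexnet_train_iter_2000.caffemodel, ..._iter_4000.caffemodel, and so on. When picking one to test, note that a plain alphabetical sort puts `_iter_10000` before `_iter_2000`. The hypothetical helper below compares the iteration numbers numerically instead. It assumes the default binary snapshot format.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the .caffemodel snapshot with the highest iteration number
# for a given snapshot_prefix; returns non-zero if none exists.
latest_snapshot() {
  local prefix=$1 best_iter=-1 best_file="" f n
  for f in "$prefix"_iter_*.caffemodel; do
    [ -e "$f" ] || continue            # the glob matched nothing
    n=${f##*_iter_}                     # strip everything up to the last "_iter_"
    n=${n%.caffemodel}                  # strip the extension, leaving N
    case $n in ''|*[!0-9]*) continue ;; esac
    if [ "$n" -gt "$best_iter" ]; then  # numeric, not alphabetical, comparison
      best_iter=$n
      best_file=$f
    fi
  done
  [ -n "$best_file" ] || return 1
  printf '%s\n' "$best_file"
}
```

After training finishes, `latest_snapshot forkrecognition/fork_alexnet_train` should print forkrecognition/fork_alexnet_train_iter_10000.caffemodel.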

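Now that we have a model of our own, the next step is to test it!

Look out for my follow-up post on testing the model. Comments and suggestions from readers are very welcome!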

written by jiong

Science and technology are the primary productive force!