A Gentle Introduction to TensorFlow 5 - The TensorBoard Visualization Tool

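1. Introduction to TensorBoard

TensorFlow results can be visualized with Python's matplotlib or with TensorBoard, which ships with TensorFlow. TensorBoard is the recommended choice, because the visualization then does not depend on TensorFlow's Python interface.

The content that can be visualized includes: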

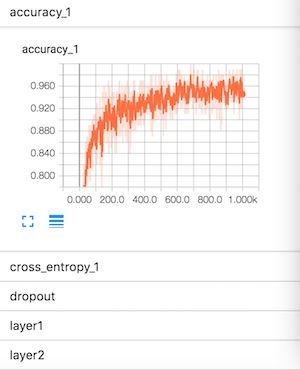

Event: statistics collected during training, mainly Loss, Accuracy, and similar metrics

Image: recorded image data

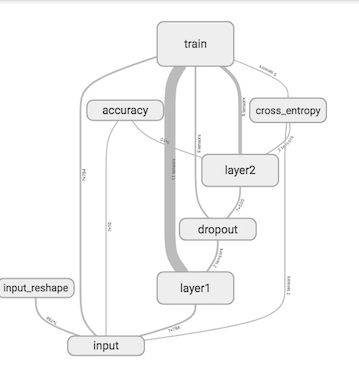

Graphs: the network structure graph

Audio: recorded audio data

Histogram: statistics presented as histograms

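2. Generating the Data

The key to understanding TensorBoard is the Summary. The Summary workflow is exactly the workflow we follow when using it:

a) Call the summary interfaces of the TensorFlow API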

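    tf.scalar_summary(tags, values, collections=None, name=None)              # scalar data
    tf.histogram_summary(tag, values, collections=None, name=None)            # histogram data
    tf.image_summary(tag, tensor, max_images=3, collections=None, name=None)  # image data

Each summary op above outputs a ProtoBuf containing the corresponding data. The usual practice is to merge these outputs first and then write them out. Note that these are the pre-1.0 names; since TensorFlow 1.0 the same ops are exposed as tf.summary.scalar, tf.summary.histogram and tf.summary.image, which is what the complete example later in this article uses.

b) Merge the Summary information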

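The protobuf data output by the functions above is then merged. Two interfaces are provided (the second one is usually all we need):

    merged_summary_op = tf.merge_summary(inputs, collections=None, name=None)  # tf.summary.merge since 1.0
    merged_summary_op = tf.merge_all_summaries(key='summaries')                # tf.summary.merge_all since 1.0

c) Specify the output path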

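Key class: tf.train.SummaryWriter (tf.summary.FileWriter since TensorFlow 1.0), which generates the corresponding event files under the given directory.

    train_writer = tf.train.SummaryWriter(summary_dir + '/train', session.graph)
    test_writer = tf.train.SummaryWriter(summary_dir + '/test')

d) Run training and write out a summary at selected steps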

    total_step = 0
    while training:
        total_step += 1
        session.run(training_op)                               # run one training step
        if total_step % 100 == 0:
            summary_step = session.run(merged_summary_op)      # evaluate the merged summary op once
            train_writer.add_summary(summary_step, total_step) # write this step to the event file
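The cadence in step d) (train on every step, write a summary every 100 steps) can be tried without TensorFlow. The following is only a minimal sketch in plain Python: `InMemoryWriter` and `fake_loss` are hypothetical stand-ins invented for illustration and are not part of the TensorFlow API; only the `add_summary(summary, global_step)` call shape mirrors the writer used above.

```python
class InMemoryWriter:
    """Hypothetical stand-in for a summary writer: keeps (step, value) pairs in a list."""

    def __init__(self):
        self.events = []

    def add_summary(self, summary, global_step):
        # A real writer would append a serialized record to an event file on disk.
        self.events.append((global_step, summary))


def fake_loss(step):
    """Hypothetical training signal that shrinks as the step count grows."""
    return 1.0 / step


def train(num_steps, summary_every, writer):
    """Run num_steps fake training steps and record a summary every summary_every steps."""
    for total_step in range(1, num_steps + 1):
        loss = fake_loss(total_step)  # stands in for session.run(training_op)
        if total_step % summary_every == 0:
            writer.add_summary(loss, total_step)


writer = InMemoryWriter()
train(num_steps=500, summary_every=100, writer=writer)
print([step for step, _ in writer.events])  # [100, 200, 300, 400, 500]
```

Passing the step number as the second argument is what lets TensorBoard place each point on the horizontal axis; a smaller `summary_every` gives smoother curves at the cost of larger event files.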

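3. Visualization

TensorBoard mainly visualizes the network and its parameters. It does so by reading the log files generated during the run, and its input argument is the path of the log directory.

    tensorboard --logdir="/…"    # log file directory

4. Code Example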

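The example code below demonstrates how to use TensorBoard. The with tf.name_scope() blocks in it are worth understanding: they mainly establish a hierarchy (similar to a C++ namespace). For example:

    with tf.name_scope('hidden'):
        a = tf.constant(5, name='alpha')
        W = tf.Variable(tf.random_uniform([1, 2], -1.0, 1.0), name='weights')
        b = tf.Variable(tf.zeros([1]), name='biases')

This produces the following hierarchical node names (see the TensorFlow Chinese manual v1.2):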

• hidden/alpha

• hidden/weights

• hidden/biases

The complete MNIST training example:

    #coding=utf-8
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # define W & b
    def weight_variable(para):
        # truncated normal distribution with standard deviation stddev=0.1
        initial = tf.truncated_normal(para, stddev=0.1)
        return tf.Variable(initial)

    def bias_variable(para):
        initial = tf.constant(0.1, shape=para)
        return tf.Variable(initial)

    # define the summaries recorded for a variable
    def variable_summaries(var):
        with tf.name_scope('summaries'):  # scope that groups these summaries
            mean = tf.reduce_mean(var)
            tf.summary.scalar('mean', mean)
            with tf.name_scope('stddev'):
                stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
            # record the mean, standard deviation, maximum and minimum of var
            tf.summary.scalar('stddev', stddev)
            tf.summary.scalar('max', tf.reduce_max(var))
            tf.summary.scalar('min', tf.reduce_min(var))
            tf.summary.histogram('histogram', var)  # record the histogram of var

    # define a fully connected layer - takes the input data and its dimension
    #                                - takes the output dimension
    #                                - uses relu as the default activation function
    def nn_layer(in_data, in_dim, out_dim, layer_name, act=tf.nn.relu):
        with tf.name_scope(layer_name):  # record data for each layer
            with tf.name_scope('weights'):
                weights = weight_variable([in_dim, out_dim])  # create the weights
                variable_summaries(weights)                   # record the weights of this layer
            with tf.name_scope('biases'):
                biases = bias_variable([out_dim])             # create the biases
                variable_summaries(biases)                    # record the biases of this layer
            with tf.name_scope('Wx_plus_b'):
                Wx_plus_b = tf.matmul(in_data, weights) + biases  # compute wx+b
                tf.summary.histogram('Wx_plus_b', Wx_plus_b)      # record the wx+b result - histogram
            activations = act(Wx_plus_b, name='activation')       # apply the activation
            tf.summary.histogram('activations', activations)      # record the activation output - histogram
            return activations

    # prepare the training data and create the session
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
    sess = tf.InteractiveSession()

    # define the raw data inputs
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, [None, 784], name='x_input')  # 28*28=784 dim; the name attribute is used for display
        y_ = tf.placeholder(tf.float32, [None, 10], name='y_input')  # label - 10 dim

    # reshape the raw data
    with tf.name_scope('input_reshape'):
        x_shaped = tf.reshape(x, [-1, 28, 28, 1])  # reshape to images; -1 means a variable batch size, 1 is the channel count
        tf.summary.image('input', x_shaped, 10)    # record the input data for display - image

    # create the first layer
    layer1 = nn_layer(x, 784, 500, 'layer1')

    with tf.name_scope('dropout'):  # layer1 is followed by a dropout layer
        keep_prob = tf.placeholder(tf.float32)
        tf.summary.scalar('dropout_keep_probability', keep_prob)
        dropped = tf.nn.dropout(layer1, keep_prob)

    # create the second layer; it outputs raw logits, softmax is applied inside the loss
    y = nn_layer(dropped, 500, 10, 'layer2', act=tf.identity)

    # compute the cross entropy
    with tf.name_scope('cross_entropy'):
        diff = tf.nn.softmax_cross_entropy_with_logits(logits=y, labels=y_)  # per-example loss
        with tf.name_scope('total'):
            cross_entropy = tf.reduce_mean(diff)
    tf.summary.scalar('cross_entropy', cross_entropy)

    # training
    with tf.name_scope('train'):
        train_step = tf.train.AdamOptimizer(0.001).minimize(cross_entropy)

    with tf.name_scope('accuracy'):
        with tf.name_scope('correct_prediction'):
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        with tf.name_scope('accuracy'):
            accuracy_op = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    tf.summary.scalar('accuracy', accuracy_op)

    # merge all summaries
    merge_op = tf.summary.merge_all()
    train_writer = tf.summary.FileWriter('./logs/mnist/train', sess.graph)  # log path for training
    test_writer = tf.summary.FileWriter('./logs/mnist/test', sess.graph)    # log path for testing

    # start training
    tf.global_variables_initializer().run()  # initialize all variables

    # create the model saver with tf.train.Saver()
    saver = tf.train.Saver()

    for i in range(1000):
        if i % 10 == 0:  # test: evaluate and record once every 10 steps
            # read the test data
            xs, ys = mnist.test.images, mnist.test.labels
            # run merge_op (summary collection) and accuracy_op (accuracy test)
            summary, acc = sess.run([merge_op, accuracy_op], feed_dict={x: xs, y_: ys, keep_prob: 1.0})
            test_writer.add_summary(summary, i)          # write the test summary
            print('Accuracy at step %s: %s' % (i, acc))  # print the result
        else:  # train
            if i == 99:
                # tf.RunOptions defines the run options;
                # tf.RunMetadata() holds run metadata such as compute time and memory usage
                run_options = tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)
                run_metadata_op = tf.RunMetadata()
                xs, ys = mnist.train.next_batch(100)
                summary, _ = sess.run([merge_op, train_step], feed_dict={x: xs, y_: ys, keep_prob: 0.6},
                                      options=run_options, run_metadata=run_metadata_op)
                train_writer.add_run_metadata(run_metadata_op, 'step%03d' % i)
                train_writer.add_summary(summary, i)
                saver.save(sess, './logs/model.ckpt', i)
                print('Adding run metadata for', i)
            else:
                xs, ys = mnist.train.next_batch(100)
                # run merge_op and train_step, and record the summary
                summary, _ = sess.run([merge_op, train_step], feed_dict={x: xs, y_: ys, keep_prob: 0.6})
                train_writer.add_summary(summary, i)

    # training finished, close the writers
    train_writer.close()
    test_writer.close()

5. Results

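Now run the command described above:

    tensorboard --logdir=./logs/mnist/train
    Starting TensorBoard 47 at http://0.0.0.0:6006
    (Press CTRL+C to quit)

Open the address shown in the output in a browser (note: use Chrome; IE or Safari may show a blank page because of compatibility issues):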