Installing and Deploying Hive on Linux

Contents

- Hive overview

- Hive official links

- Installing and configuring Hive

- Hive service startup scripts

Hive overview

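Hive is a data warehouse infrastructure tool for processing structured data in Hadoop. It sits on top of Hadoop to summarize big data and makes querying and analysis easy. It provides a simple SQL query capability and can convert SQL statements into MapReduce jobs for execution.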

Hive is not:

- A relational database

- Designed for online transaction processing (OLTP)

- A language for real-time queries and row-level updates

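Hive features:

- Built on top of Hadoop

- Processes structured data

- Storage depends on HDFS: the data in Hive tables is stored in HDFS

- SQL statements are executed as MapReduce jobs (the default engine)

- What Hive does: it gives Hadoop a SQL interface; in practice it translates SQL statements into MapReduce programs

- In essence, Hive is a client of Hadoop

- Execution engines supported by Hive: MapReduce, Spark, and Tez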

Hive official links

Hive download (Tsinghua University TUNA mirror of the Apache site): https://mirrors.tuna.tsinghua.edu.cn/apache/hive/

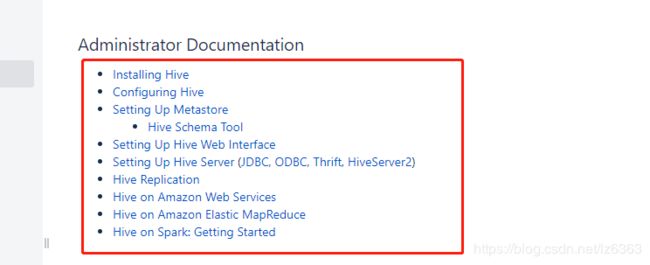

Hive configuration guide: https://cwiki.apache.org/confluence/display/Hive/AdminManual+Configuration

Hive wiki home: https://cwiki.apache.org/confluence/display/Hive/Home#Home-ResourcesforContributors

Installing and configuring Hive

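Before configuring Hive, make sure Java, Hadoop, and MySQL are already installed and deployed.

Why: Hive is written in Java; it maps files stored in Hadoop's HDFS and uses Hadoop's MapReduce as its execution engine; and the metastore database is switched from the default embedded Derby to MySQL.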

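Installing Java, Hadoop, and MySQL on CentOS 7 was covered in earlier posts, so I won't repeat it here:

Installing MySQL 5.7.20 with yum on CentOS 7 and enabling it at boot

Installing Hadoop on Linux and setting up the development environment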
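Because everything below depends on those three tools, a quick check can save time. The sketch below assumes they are exposed as the commands java, hadoop and mysql on PATH; check_prereqs is a hypothetical helper name, not part of any of these products.

```shell
#!/bin/sh
# Sketch: report which prerequisite commands are missing from PATH.
# Assumes Java, Hadoop and MySQL provide the commands java, hadoop, mysql.
check_prereqs() {
    missing=0
    for cmd in java hadoop mysql; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "found:   $cmd"
        else
            echo "missing: $cmd"
            missing=$((missing + 1))
        fi
    done
    # Return status = number of missing commands (0 means all present)
    return "$missing"
}
```

Run check_prereqs after sourcing your profile; a non-zero status is the number of tools that were not found.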

Download the package, then extract and install it on Linux

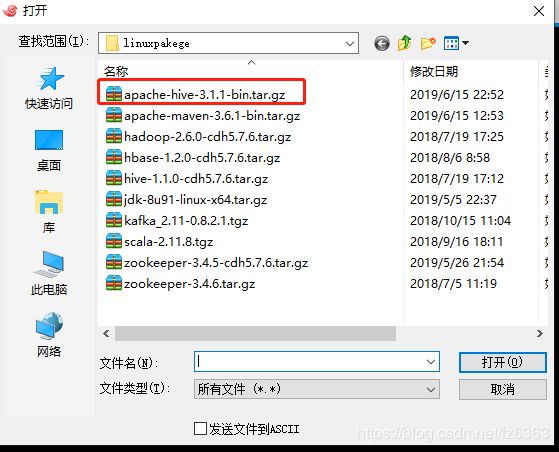

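First download Hive from the official site (I downloaded Hive 3.1.1). Note that the Apache Hive downloads page describes the 3.1.x releases as working with Hadoop 3.x.y, while the Hadoop used in this post is 2.6.0-cdh5.7.6, so check version compatibility before you start.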

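After downloading it to the local Windows machine, upload the package to Linux with the rz command.

Extract it: tar -zxvf apache-hive-3.1.1-bin.tar.gz -C /opt/software/

Rename the directory: cd /opt/software/ && mv apache-hive-3.1.1-bin hive-3.1.1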
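The extract-and-rename step can be wrapped in a small function so the paths are stated once. This is a sketch; install_hive_tarball is a hypothetical helper, and the archive, target directory and version are passed in rather than hard-coded.

```shell
#!/bin/sh
# Sketch: extract the Hive tarball and rename apache-hive-<ver>-bin to hive-<ver>.
install_hive_tarball() {
    tarball=$1      # e.g. apache-hive-3.1.1-bin.tar.gz
    target_dir=$2   # e.g. /opt/software
    version=$3      # e.g. 3.1.1

    mkdir -p "$target_dir" || return 1
    # -z gunzip, -x extract, -f read from file, -C extract into target_dir
    tar -zxf "$tarball" -C "$target_dir" || return 1
    mv "$target_dir/apache-hive-$version-bin" "$target_dir/hive-$version"
}
```

For this post the call would be install_hive_tarball apache-hive-3.1.1-bin.tar.gz /opt/software 3.1.1.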

Edit the configuration files under /opt/software/hive-3.1.1/conf/

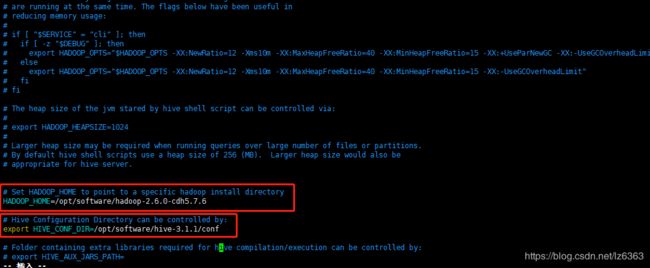

Edit hive-env.sh (set the Hadoop home directory and the Hive configuration directory)

cd /opt/software/hive-3.1.1/conf/

mv hive-env.sh.template hive-env.sh

vi hive-env.sh

HADOOP_HOME=/opt/software/hadoop-2.6.0-cdh5.7.6

export HIVE_CONF_DIR=/opt/software/hive-3.1.1/conf
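If you prefer not to edit hive-env.sh by hand, the same two lines can be appended with a script that is safe to re-run. A sketch: configure_hive_env is a hypothetical helper, and unlike the mv above it copies the template so the original stays in place.

```shell
#!/bin/sh
# Sketch: create hive-env.sh from its template (first run only) and append
# HADOOP_HOME and HIVE_CONF_DIR unless those exact lines are already present.
configure_hive_env() {
    conf_dir=$1      # e.g. /opt/software/hive-3.1.1/conf
    hadoop_home=$2   # e.g. /opt/software/hadoop-2.6.0-cdh5.7.6

    env_file="$conf_dir/hive-env.sh"
    if [ ! -f "$env_file" ]; then
        cp "$conf_dir/hive-env.sh.template" "$env_file" || return 1
    fi
    for line in "HADOOP_HOME=$hadoop_home" "export HIVE_CONF_DIR=$conf_dir"; do
        # -x whole-line match, -F fixed string, -q quiet
        grep -qxF "$line" "$env_file" || printf '%s\n' "$line" >> "$env_file"
    done
}
```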

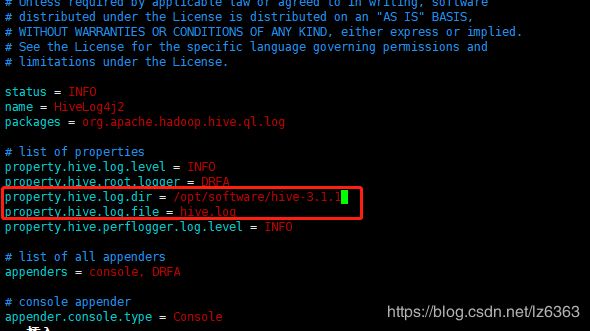

Edit hive-log4j2.properties (change the log directory and file name)

mv hive-log4j2.properties.template hive-log4j2.properties

vi hive-log4j2.properties

property.hive.log.dir = /opt/software/hive-3.1.1/logs

property.hive.log.file = hive.log
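The two property edits can also be done non-interactively with sed. This is a sketch assuming GNU sed (sed -i with no suffix, as on CentOS 7); configure_hive_log is a hypothetical helper that also creates the log directory.

```shell
#!/bin/sh
# Sketch: set property.hive.log.dir / property.hive.log.file with sed.
# log_dir must not contain '|' or '&' (they are special in the sed command).
configure_hive_log() {
    conf_dir=$1   # e.g. /opt/software/hive-3.1.1/conf
    log_dir=$2    # e.g. /opt/software/hive-3.1.1/logs

    props="$conf_dir/hive-log4j2.properties"
    [ -f "$props" ] || cp "$props.template" "$props" || return 1
    mkdir -p "$log_dir" || return 1
    # Replace the whole "key = value" line; '|' is the delimiter since paths contain '/'
    sed -i "s|^property\.hive\.log\.dir *=.*|property.hive.log.dir = $log_dir|" "$props"
    sed -i "s|^property\.hive\.log\.file *=.*|property.hive.log.file = hive.log|" "$props"
}
```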

Configure the Hive metastore

cd /opt/software/hive-3.1.1/conf

cp hive-default.xml.template hive-site.xml

Clear the file contents with the shell command : > hive-site.xml

vi hive-site.xml

<configuration>

<property>

<name>hive.exec.dynamic.partition</name>

<value>false</value>

<description>Whether or not to allow dynamic partitions in DML/DDL.</description>

</property>

<property>

<name>hive.exec.dynamic.partition.mode</name>

<value>strict</value>

<description>In strict mode, the user must specify at least one static partition in case the user accidentally overwrites all partitions. In nonstrict mode all partitions are allowed to be dynamic.</description>

</property>

<property>

<name>hive.metastore.db.type</name>

<value>mysql</value>

<description>

Expects one of [derby, oracle, mysql, mssql, postgres].

Type of database used by the metastore. Information schema & JDBCStorageHandler depend on it.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://bigdata.fuyun:3306/hive_3__metastore?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Mysql@123</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>bigdata.fuyun</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://bigdata.fuyun:9083</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse-3.1.1</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<property>

<name>datanucleus.metadata.validate</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.execution.engine</name>

<value>mr</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

<description>Whether to print the names of the columns in query output.</description>

</property>

</configuration>
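A common pitfall in this file is the JDBC URL: in XML a bare & is invalid and must be written as &amp;, otherwise Hive fails to parse hive-site.xml. The sketch below generates just the metastore connection properties with that escaping applied; xml_escape and write_metastore_site are hypothetical helpers, and the values you pass in are your own (the driver class is the one used in this post).

```shell
#!/bin/sh
# Sketch: write the metastore connection part of hive-site.xml with XML escaping.
xml_escape() {
    # Escape '&' first so the entities added for '<' and '>' are not re-escaped
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

write_metastore_site() {
    out=$1; url=$2; user=$3; password=$4; uris=$5
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<configuration>'
        for pair in \
            "javax.jdo.option.ConnectionURL=$url" \
            "javax.jdo.option.ConnectionDriverName=com.mysql.jdbc.Driver" \
            "javax.jdo.option.ConnectionUserName=$user" \
            "javax.jdo.option.ConnectionPassword=$password" \
            "hive.metastore.uris=$uris"; do
            name=${pair%%=*}   # text before the first '='
            value=${pair#*=}   # text after the first '=' (the URL itself contains '=')
            echo '  <property>'
            printf '    <name>%s</name>\n' "$name"
            printf '    <value>%s</value>\n' "$(xml_escape "$value")"
            echo '  </property>'
        done
        echo '</configuration>'
    } > "$out"
}
```

Properties such as hive.server2.thrift.port can be appended to the list in the same name=value form.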

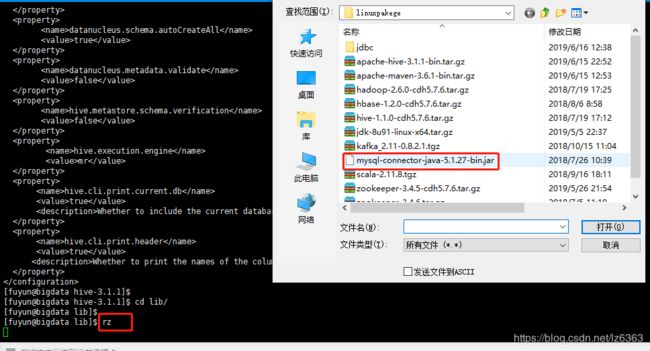

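Put the MySQL JDBC driver jar into Hive's lib directory

My MySQL server is version 5.7. Connector/J 8.0 also supports MySQL 5.7, so I used the 8.0.13 driver. (In Connector/J 8.0 the driver class is com.mysql.cj.jdbc.Driver; the old name com.mysql.jdbc.Driver still works but is deprecated.)

rz

tar -zxvf mysql-connector-java-8.0.13.tar.gz

cp mysql-connector-java-8.0.13/mysql-connector-java-8.0.13.jar /opt/software/hive-3.1.1/lib/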
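Hive only loads .jar files from lib, so the archive has to be unpacked and the jar copied on its own. A sketch, assuming the archive contains mysql-connector-java-<version>/mysql-connector-java-<version>.jar; install_jdbc_driver is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: unpack Connector/J into a scratch dir and copy only the jar to Hive's lib.
install_jdbc_driver() {
    archive=$1    # e.g. mysql-connector-java-8.0.13.tar.gz
    hive_lib=$2   # e.g. /opt/software/hive-3.1.1/lib
    version=$3    # e.g. 8.0.13

    work=$(mktemp -d) || return 1
    tar -zxf "$archive" -C "$work" &&
        cp "$work/mysql-connector-java-$version/mysql-connector-java-$version.jar" "$hive_lib/"
    status=$?
    rm -rf "$work"   # always remove the scratch directory
    return "$status"
}
```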

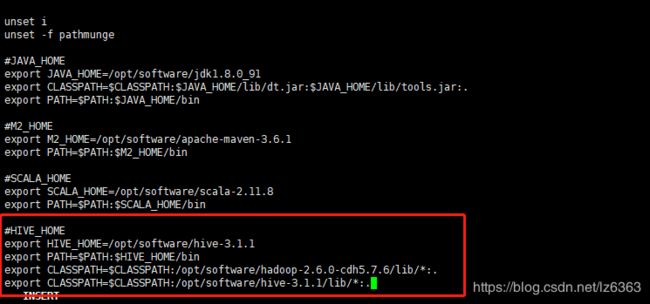

Set global environment variables (skip this step if you don't want them to be global)

sudo vi /etc/profile

#HIVE_HOME

export HIVE_HOME=/opt/software/hive-3.1.1

export PATH=$PATH:$HIVE_HOME/bin

export CLASSPATH=$CLASSPATH:/opt/software/hadoop-2.6.0-cdh5.7.6/lib/*:.

export CLASSPATH=$CLASSPATH:/opt/software/hive-3.1.1/lib/*:.

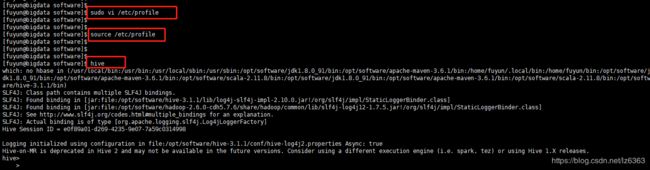

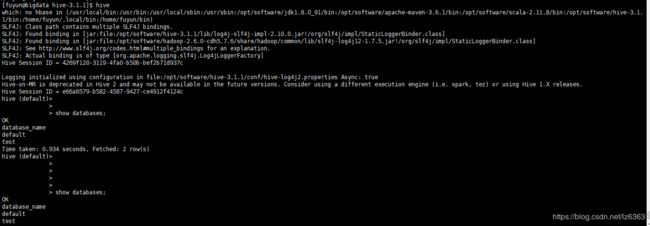

Reload the environment variables: source /etc/profile
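Appending to /etc/profile twice leaves duplicate PATH entries, so a guard on the #HIVE_HOME marker helps if the step is repeated. A sketch: add_hive_profile is a hypothetical helper, it writes only HIVE_HOME and PATH (not the CLASSPATH lines), and writing /etc/profile itself needs root.

```shell
#!/bin/sh
# Sketch: append the HIVE_HOME block to a profile file only if the
# "#HIVE_HOME" marker line is not already there.
add_hive_profile() {
    profile=$1     # e.g. /etc/profile
    hive_home=$2   # e.g. /opt/software/hive-3.1.1

    grep -qx '#HIVE_HOME' "$profile" 2>/dev/null && return 0
    # Unquoted heredoc: $hive_home expands now; \$PATH and \$HIVE_HOME stay literal
    cat >> "$profile" <<EOF
#HIVE_HOME
export HIVE_HOME=$hive_home
export PATH=\$PATH:\$HIVE_HOME/bin
EOF
}
```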

Test it by running a command, for example hive --version
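If hive is not found, or an older Hive runs instead, the profile change usually has not taken effect in the current shell. A small sketch of such a check; check_hive_env is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: verify HIVE_HOME is set and that 'hive' on PATH is $HIVE_HOME/bin/hive.
check_hive_env() {
    if [ -z "$HIVE_HOME" ]; then
        echo "HIVE_HOME is not set" >&2
        return 1
    fi
    hive_bin=$(command -v hive) || { echo "hive not found on PATH" >&2; return 1; }
    if [ "$hive_bin" != "$HIVE_HOME/bin/hive" ]; then
        echo "hive resolves to $hive_bin, not \$HIVE_HOME/bin/hive" >&2
        return 1
    fi
    echo "ok: $hive_bin"
}
```

Usage: check_hive_env && hive --version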

Hive service startup scripts

cat start-hiveserver2.sh

#!/bin/sh

HIVE_HOME=/opt/software/hive-3.1.1

## Service start time

DATE_STR=`/bin/date '+%Y%m%d%H%M%S'`

# Log file name (including path)

HIVE_SERVER2_LOG=${HIVE_HOME}/logs/hiveserver2-${DATE_STR}.log

## Start the service

/usr/bin/nohup ${HIVE_HOME}/bin/hiveserver2 > ${HIVE_SERVER2_LOG} 2>&1 &
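The two scripts differ only in the command and log prefix, so they can share one launcher. A sketch: start_hive_service is a hypothetical helper; it also creates the logs directory (the redirect fails if that directory is missing) and writes a PID file so the service can be stopped later.

```shell
#!/bin/sh
# Sketch: start a Hive service in the background with a timestamped log and a PID file.
# Requires HIVE_HOME to be set.
start_hive_service() {
    name=$1; shift    # log/PID file prefix, e.g. hiveserver2
    log_dir="$HIVE_HOME/logs"
    mkdir -p "$log_dir" || return 1
    log_file="$log_dir/$name-$(date '+%Y%m%d%H%M%S').log"
    # Remaining arguments are the command to run, e.g. "$HIVE_HOME/bin/hiveserver2"
    nohup "$@" > "$log_file" 2>&1 &
    echo $! > "$log_dir/$name.pid"
    echo "$log_file"
}
```

For example, start_hive_service hivemetastore "$HIVE_HOME/bin/hive" --service metastore starts the metastore, and start_hive_service hiveserver2 "$HIVE_HOME/bin/hiveserver2" starts HiveServer2.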

cat start-metastore.sh

#!/bin/sh

HIVE_HOME=/opt/software/hive-3.1.1

## Service start time

DATE_STR=`/bin/date '+%Y%m%d%H%M%S'`

# Log file name (including path)

HIVE_METASTORE_LOG=${HIVE_HOME}/logs/hivemetastore-${DATE_STR}.log

## Start the service

/usr/bin/nohup ${HIVE_HOME}/bin/hive --service metastore > ${HIVE_METASTORE_LOG} 2>&1 &
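Since hive.metastore.uris points at thrift://bigdata.fuyun:9083, HiveServer2 needs the metastore to be up, so start the metastore first and wait for its port. The sketch below is bash-only (it relies on bash's /dev/tcp redirection); wait_for_port is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: wait until host:port accepts TCP connections, or give up after max_wait seconds.
wait_for_port() {
    local host=$1 port=$2 max_wait=${3:-60}
    local waited=0
    # The subshell opens a connection on fd 3 and closes it on exit;
    # it fails while nothing is listening on host:port
    until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
        if [ "$waited" -ge "$max_wait" ]; then
            echo "timed out waiting for $host:$port" >&2
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
}
```

Usage: ./start-metastore.sh && wait_for_port bigdata.fuyun 9083 120 && ./start-hiveserver2.sh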

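Postscript: I ran into a lot of pitfalls along the way and nearly stayed up all night, but I finally sorted them out. The main problems were the metastore configuration and the JDBC jar. I've forgotten exactly how I fixed them; I'll add notes if I remember.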