Working with HDFS files from Eclipse through the HDFS API

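The previous article covered setting up a pseudo-distributed environment on a local Windows machine. This one shows how to read, write and otherwise manipulate HDFS files from Eclipse.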

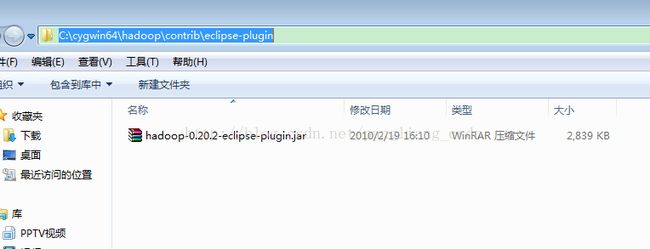

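The first step is to get the plugin that adds Hadoop development support to Eclipse. Hadoop 1 and Hadoop 2 differ here: Hadoop 2 does not seem to ship the plugin, so you have to find it online, while Hadoop 1 does include it. Where do you get it? You are probably wondering, and I was puzzled at first too. I downloaded a copy from the internet and dropped it in, but it did not work, and I never found out why. After some digging I learned that the Hadoop 1 installation package already contains the plugin.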

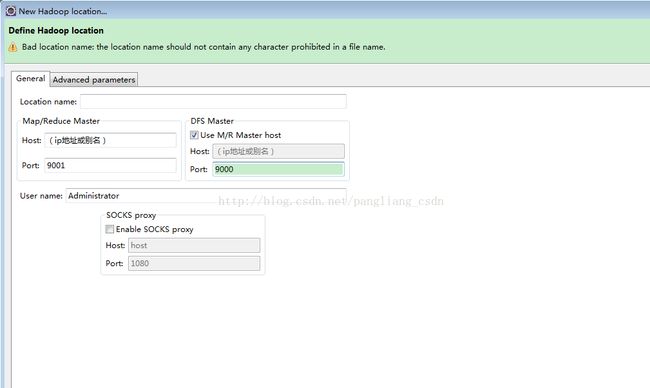

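Copy the jar into the plugins folder of the Eclipse installation directory and restart Eclipse. You will now see a blue elephant icon, which lets you browse the files on HDFS directly inside Eclipse. Click it to create a new connection.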

Next, write the code that performs the operations.

```java
package com.qqw.hadoop;

import java.io.BufferedOutputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hdfs.DistributedFileSystem;
import org.apache.hadoop.hdfs.protocol.DatanodeInfo;

public class HdfsUtil {

    private Configuration conf = new Configuration();

    private FileSystem coreSys = null;

    // NameNode address of the local pseudo-distributed cluster
    private String rootPath = "hdfs://localhost:9000/";

    /**
     * Create a directory on HDFS.
     * @throws IOException
     */
    public void createDirOnHDFS() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path demoDir = new Path(rootPath + "demoDir");
        boolean isSuccess = true;
        try {
            isSuccess = coreSys.mkdirs(demoDir);
        } catch (IOException e) {
            isSuccess = false;
        }
        System.out.println(isSuccess ? "Directory created!" : "Failed to create directory!");
    }

    /**
     * Create a file on HDFS and write some text into it.
     * @throws IOException
     */
    public void createFileOnHDFS() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path hdfsPath = new Path(rootPath + "demoDir/createDemoFile1.txt");
        System.out.println(coreSys.getHomeDirectory());
        String content = "Hello hadoop,this is first time that I create file on hdfs";
        // Closing the buffered stream also closes the underlying FSDataOutputStream
        try (BufferedOutputStream bout = new BufferedOutputStream(coreSys.create(hdfsPath))) {
            byte[] bytes = content.getBytes(StandardCharsets.UTF_8);
            bout.write(bytes, 0, bytes.length);
        }
        System.out.println("File created!");
    }

    /**
     * Download a file from HDFS to the local disk.
     * @throws IOException
     */
    public void downLoadFromHdfs() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path hdfsPath = new Path(rootPath + "demoDir/createDemoFile1.txt");
        // Both streams are closed even if reading or writing fails
        try (FSDataInputStream hdfsInStream = coreSys.open(hdfsPath);
             OutputStream out = new FileOutputStream("D:\\createDemoFile1.txt")) {
            byte[] ioBuffer = new byte[1024];
            int readLen;
            // read() returns -1 at end of stream
            while ((readLen = hdfsInStream.read(ioBuffer)) != -1) {
                out.write(ioBuffer, 0, readLen);
            }
        }
        coreSys.close();
    }

    /**
     * Upload a local file to HDFS.
     * @throws IOException
     */
    public void upLoadFromHdfs() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path remotePath = new Path(rootPath + "demoDir/");
        coreSys.copyFromLocalFile(new Path("D:\\qq.txt"), remotePath);
        // Print the URI of the file system actually used (conf alone may not know the NameNode address)
        System.out.println("Upload to:" + coreSys.getUri());
        FileStatus[] files = coreSys.listStatus(remotePath);
        for (FileStatus file : files) {
            System.out.println(file.getPath().toString());
        }
    }

    /**
     * Rename a file.
     * @throws IOException
     */
    public void rename() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path oldFileName = new Path(rootPath + "demoDir/createDemoFile1.txt");
        Path newFileName = new Path(rootPath + "demoDir/newDemoFile.txt");
        boolean isSuccess = true;
        try {
            isSuccess = coreSys.rename(oldFileName, newFileName);
        } catch (IOException e) {
            isSuccess = false;
        }
        System.out.println(isSuccess ? "Renamed!" : "Rename failed!");
    }

    /**
     * Delete a file.
     * @throws IOException
     */
    public void deleteFile() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path deleteFile = new Path(rootPath + "demoDir/qq.txt");
        boolean isSuccess = true;
        try {
            // false = non-recursive delete
            isSuccess = coreSys.delete(deleteFile, false);
        } catch (IOException e) {
            isSuccess = false;
        }
        System.out.println(isSuccess ? "Deleted!" : "Delete failed!");
    }

    /**
     * Check whether a file exists.
     * @throws IOException
     */
    public void exitFile() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path checkFile = new Path(rootPath + "demoDir/qq.txt");
        boolean isSuccess = true;
        try {
            isSuccess = coreSys.exists(checkFile);
        } catch (IOException e) {
            isSuccess = false;
        }
        System.out.println(isSuccess ? "Exists!" : "Does not exist!");
    }

    /**
     * List all files under a directory.
     * @throws IOException
     */
    public void allFile() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path targetDir = new Path(rootPath + "demoDir/");
        FileStatus[] files = coreSys.listStatus(targetDir);
        for (FileStatus file : files) {
            System.out.println(file.getPath() + "--" + file.getGroup() + "--" + file.getBlockSize()
                    + "--" + file.getLen() + "--" + file.getModificationTime() + "--" + file.getOwner());
        }
    }

    /**
     * Show where a file is stored in the HDFS cluster.
     * @throws IOException
     */
    public void findLocationOnHadoop() throws IOException {
        coreSys = FileSystem.get(URI.create(rootPath), conf);
        Path targetFile = new Path(rootPath + "demoDir/qq.txt");
        FileStatus fileStaus = coreSys.getFileStatus(targetFile);
        BlockLocation[] bloLocations = coreSys.getFileBlockLocations(fileStaus, 0, fileStaus.getLen());
        // Hosts (DataNodes) holding a replica of each block
        for (int i = 0; i < bloLocations.length; i++) {
            String[] hosts = bloLocations[i].getHosts();
            System.out.println("block_" + i + "_location: " + Arrays.toString(hosts));
        }
        // DataNode statistics; the cast only works because rootPath is an hdfs:// URI
        DistributedFileSystem hdfs = (DistributedFileSystem) coreSys;
        DatanodeInfo[] dataInfos = hdfs.getDataNodeStats();
        for (int j = 0; j < dataInfos.length; j++) {
            System.out.println("DataNode_" + j + "_Name: " + dataInfos[j].getHostName() + "-->"
                    + dataInfos[j].getDatanodeReport() + "-->"
                    + dataInfos[j].getDfsUsedPercent() + "-->" + dataInfos[j].getLevel());
        }
    }
}
```

Then write a main method or a JUnit test that calls these methods and run it against Hadoop. You can watch the files on HDFS change in real time, either in Eclipse or in the web UI at http://localhost:50070.
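The read loop in `downLoadFromHdfs` is plain `java.io`: `FSDataInputStream` is a `java.io.InputStream`, so the loop itself does not depend on Hadoop. A minimal sketch, assuming you want to check that logic locally without a running cluster: the hypothetical `StreamCopyDemo.copy` helper below is the same loop factored out and fed an in-memory stream instead of an HDFS one. Hadoop also ships `org.apache.hadoop.io.IOUtils.copyBytes(in, out, bufferSize, close)` for the same job.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper class, not part of the original HdfsUtil
public class StreamCopyDemo {

    /**
     * Copies everything from in to out through a fixed-size buffer,
     * the same read/write loop as downLoadFromHdfs. Returns the number of bytes copied.
     */
    static long copy(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int readLen;
        // read() may return fewer bytes than the buffer holds; -1 means end of stream
        while ((readLen = in.read(buffer)) != -1) {
            out.write(buffer, 0, readLen);
            total += readLen;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        String content = "Hello hadoop,this is first time that I create file on hdfs";
        // An in-memory stream stands in for coreSys.open(hdfsPath)
        try (InputStream in = new ByteArrayInputStream(content.getBytes(StandardCharsets.UTF_8));
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long n = copy(in, out, 16);
            System.out.println(n + " bytes: " + out.toString("UTF-8"));
        }
    }
}
```

A buffer smaller than the input (16 bytes here) forces several loop iterations, which is the case worth exercising.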
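`findLocationOnHadoop` asks `getFileBlockLocations` for the byte range from 0 to `getLen()` and gets back one `BlockLocation` per block in that range. HDFS stores a file as consecutive fixed-size blocks (`dfs.block.size` in Hadoop 1, `dfs.blocksize` in Hadoop 2; 64 MB and 128 MB by default respectively), and only the last block may be shorter. The sketch below reproduces just that offset/length arithmetic, without a cluster; `BlockRanges` and `Range` are hypothetical names, and the 128 MB block size and 300 MB file are assumed values.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of how a file's bytes map onto HDFS blocks
public class BlockRanges {

    /** Hypothetical stand-in for the offset/length pair a BlockLocation reports. */
    static final class Range {
        final long offset;
        final long length;

        Range(long offset, long length) {
            this.offset = offset;
            this.length = length;
        }

        @Override
        public String toString() {
            return "[" + offset + ", +" + length + "]";
        }
    }

    /** Splits fileLen bytes into blocks of blockSize bytes; only the last may be shorter. */
    static List<Range> split(long fileLen, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        List<Range> ranges = new ArrayList<>();
        for (long off = 0; off < fileLen; off += blockSize) {
            ranges.add(new Range(off, Math.min(blockSize, fileLen - off)));
        }
        return ranges;
    }

    public static void main(String[] args) {
        long blockSize = 128L * 1024 * 1024; // assumed block size: 128 MB
        long fileLen = 300L * 1024 * 1024;   // hypothetical 300 MB file
        List<Range> blocks = split(fileLen, blockSize);
        System.out.println(blocks.size() + " blocks: " + blocks);
    }
}
```

A small file such as `qq.txt` therefore comes back as a single block, and that block only takes as much disk space as the file itself, not a full block.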