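Chinese Word Segmentation

Preface

I'm a beginner (stating that up front).

During my internship, in the stretches when I had no assigned tasks, I found a few things to work on myself, such as this toy-level Chinese word segmentation tool. Reference links are included.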

Chinese word segmentation

Open problems

- Segmentation ambiguity

- Out-of-vocabulary (unregistered) word recognition
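
To make the ambiguity problem concrete, here is a minimal sketch on a made-up four-word dictionary (not the Sogou lexicon). The names `fmm`, `rmm`, `TOY_DIC` and `MAX_LEN` are hypothetical and chosen only for this illustration; the point is simply that matching from the left and matching from the right can cut the same string differently.

```python
# Toy dictionary, made up for illustration only
TOY_DIC = {"研究", "研究生", "生命", "起源"}
MAX_LEN = 3  # longest word in the toy dictionary


def fmm(sentence, dic, max_len):
    """Forward maximum matching: take the longest dictionary word from the left."""
    result = []
    while sentence:
        word = sentence[:max_len]
        while word not in dic and len(word) > 1:
            word = word[:-1]  # drop the last character and retry
        result.append(word)
        sentence = sentence[len(word):]
    return result


def rmm(sentence, dic, max_len):
    """Reverse maximum matching: take the longest dictionary word from the right."""
    result = []
    while sentence:
        word = sentence[-max_len:]
        while word not in dic and len(word) > 1:
            word = word[1:]  # drop the first character and retry
        result.append(word)
        sentence = sentence[:-len(word)]
    result.reverse()
    return result


forward = fmm("研究生命起源", TOY_DIC, MAX_LEN)
backward = rmm("研究生命起源", TOY_DIC, MAX_LEN)
print(forward)   # 研究生 / 命 / 起源
print(backward)  # 研究 / 生命 / 起源
```

The single character 命 in the forward result also hints at the second problem: a string that is not in the dictionary falls apart into single characters.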

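Proposed improvements

- Use an N-gram model to pick the better of the two bidirectional maximum matching results

- Use an N-gram model to combine the two bidirectional maximum matching results and return the best combination

Difficulties

- The dictionary currently used is the Sogou dictionary. An N-gram approach needs a model built over a full corpus, with word frequencies and probabilities computed from it (workaround: download an existing Chinese word collocation table)

- How to combine the two bidirectional maximum matching results so as to obtain segmentations other than the two that the matching algorithms return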

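References

- Maximum matching algorithm

- 52nlp: beginner

- Difference between forward matching and reverse matching

- Introducing probability

- Cross combination

- 52nlp: beginner, 1-gram

- Paper on improved bidirectional maximum matching

Loading the dictionary

Sogou lexicon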

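    import sys

    def load_dic(maxl):
        """Load a tab-separated word/frequency dictionary.

        Returns the dictionary and the length of its longest word.
        """
        while True:
            pathname = input('Enter the dictionary file path, or "end" to quit: ')
            if pathname == "end":
                sys.exit()
            try:
                # errors='ignore' skips bytes that cannot be decoded; reading this
                # file raised encoding errors, probably on unrecognized characters
                f = open(pathname, 'r', errors='ignore')
                break
            except OSError:
                print("Invalid path, please try again!")
        seg_dic = {}
        N = 0    # sum of all word frequencies
        num = 0  # number of entries
        with f:
            for line in f:
                strList = line.rstrip("\n").split("\t", 2)
                if len(strList) < 2:
                    continue  # skip malformed lines
                seg_dic[strList[0]] = strList[1]
                N = N + int(seg_dic[strList[0]])
                num = num + 1
                if maxl < len(strList[0]):
                    maxl = len(strList[0])
        # denominator for add-one smoothing: total frequency + number of entries
        seg_dic["total"] = N + num
        print("Longest word in the dictionary:", maxl, "characters.")
        return seg_dic, maxl

Computing the probability of a word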

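    def word_p(word, dic):
        """Add-one smoothed unigram probability of a word."""
        if word not in dic:
            # unseen word: count 0, plus 1 from smoothing
            pvalue = 1 / dic["total"]
        else:
            pvalue = (float(dic[word]) + 1) / dic["total"]
        return pvalue

Computing the probability of a sentence (assuming words are independent of each other)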

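    def sen_p(wordl, dic):
        """Probability of a word list: the product of its word probabilities."""
        spvalue = 1
        for word in wordl:
            spvalue = spvalue * word_p(word, dic)
        return spvalue

Reading the input sentence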

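    def input_sentence(dic, maxl):
        # dic: the dictionary used for matching; maxl: longest word length in it
        sentence = input('Enter a Chinese sentence, or "end" to quit: ')
        if sentence == 'end':
            sys.exit()
        length = len(sentence)
        print('Sentence length:')
        print(length)
        # maximum match length: min(longest word in dic, sentence length)
        slen = min(length, maxl)
        return sentence, slen

Printing the segmentation result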

    def print_result(words, pre=''):
        print(pre + " segmentation result:")
        for word in words:
            print(word, end=' / ')

Segmentation by maximum matching

Dependencies

    import string
    import sys

Forward matching

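    def forward_seg(dic, slen, sentence):
        rest_s = sentence
        seg_list = []
        while len(rest_s) > 0:
            # never look further than the text that is left
            if len(rest_s) < slen:
                slen = len(rest_s)
            i = 0
            bool_word = rest_s[i]  # candidate, grown one character at a time
            word = bool_word       # longest dictionary match found so far
            while i < slen - 1:
                i = i + 1
                bool_word = bool_word + rest_s[i]
                if bool_word in dic:
                    word = bool_word
            seg_list.append(word)
            rest_s = rest_s[len(word):]
        return seg_list

Reverse matching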

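    def reverse_seg(dic, slen, sentence):
        rest_s = sentence
        seg_list = []
        while len(rest_s) > 0:
            # start from the last slen characters and shrink from the left
            word = rest_s[-slen:]
            while (word not in dic) and (len(word) > 1):
                word = word[1:]
            seg_list.append(word)
            rest_s = rest_s[:-len(word)]
        # words were collected right to left, so restore sentence order
        seg_list.reverse()
        return seg_list

Choosing the more probable of the two matching results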

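Assume that each word occurs independently of the others, so the probability of a sentence equals the product of the probabilities of its words. Compute that probability for each of the two segmentations and return the one with the larger value.

- Problem 1: context is ignored (this is a 1-gram model, not a 2-gram model)

- Problem 2: sentences formed by cross-combining the two segmentation lists are not considered

- Problem 3: out-of-vocabulary words are not handled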
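
The independence assumption above can be sketched on made-up counts. One caveat worth knowing: a product of many small probabilities can underflow to 0.0 for long sentences, so this sketch sums logarithms instead of multiplying. That is a swapped-in variant for illustration only, and `toy_dic`, `log_word_p` and `log_sen_p` are hypothetical names. The smoothing follows the same (count + 1) / total convention as `word_p`.

```python
import math

# Made-up counts for illustration; "total" uses the same convention as
# load_dic: sum of all counts plus the number of entries
toy_dic = {"研究": 50, "研究生": 20, "生命": 40, "起源": 30}
toy_dic["total"] = sum(toy_dic.values()) + len(toy_dic)  # 140 + 4 = 144


def log_word_p(word, dic):
    """Log of the add-one smoothed unigram probability of a word."""
    count = int(dic.get(word, 0))
    return math.log((count + 1) / dic["total"])


def log_sen_p(words, dic):
    """Log probability of a segmentation, assuming independent words."""
    return sum(log_word_p(w, dic) for w in words)


forward = ["研究生", "命", "起源"]
backward = ["研究", "生命", "起源"]

# A larger log probability means a larger probability
best = max([forward, backward], key=lambda ws: log_sen_p(ws, toy_dic))
print(best)
```

With these counts the reverse-style list wins, mainly because 命 is unseen and only receives the smoothed count of 1.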

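    def good_words(word0, word1, dic):
        """Return whichever of the two word lists has the higher probability."""
        pvalue0 = sen_p(word0, dic)
        pvalue1 = sen_p(word1, dic)
        if pvalue0 > pvalue1:
            goodwords = word0
        else:
            goodwords = word1
        return goodwords

Cross-combining for the most probable segmentation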

Cross-combine the two segmentation lists to obtain the most probable result.

- This addresses Problem 2

- Next step: add a 2-gram model
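
One possible way to cross-combine two segmentations, sketched under the assumption that both lists spell out exactly the same string, is to cut them wherever they share a word boundary at the same character offset and keep the better-scoring piece of each cut. Unlike an index-by-index comparison, this does not require the two lists to have the same number of words. The function `combine_by_offset`, the scorer `chunk_p`, and all counts below are made up for illustration.

```python
# Made-up counts for illustration only
toy_counts = {"我": 100, "一个": 80, "人": 90, "一": 60, "个人": 20,
              "研究": 50, "研究生": 20, "生命起源": 5, "起源": 30, "命": 3}
TOTAL = sum(toy_counts.values()) + len(toy_counts)


def chunk_p(words):
    """Add-one smoothed unigram probability of a run of words."""
    p = 1.0
    for w in words:
        p *= (toy_counts.get(w, 0) + 1) / TOTAL
    return p


def combine_by_offset(seg0, seg1, score):
    """Cross-combine two segmentations of the same string.

    The lists are cut wherever both have a word boundary at the same
    character offset; within each cut the higher-scoring piece is kept.
    """
    result = []
    i = j = 0  # next unread word in seg0 / seg1
    while i < len(seg0) and j < len(seg1):
        chunk0, chunk1 = [seg0[i]], [seg1[j]]
        len0, len1 = len(seg0[i]), len(seg1[j])
        i += 1
        j += 1
        # extend the shorter side until both chunks cover the same characters
        while len0 != len1:
            if len0 < len1:
                chunk0.append(seg0[i])
                len0 += len(seg0[i])
                i += 1
            else:
                chunk1.append(seg1[j])
                len1 += len(seg1[j])
                j += 1
        result += chunk0 if score(chunk0) > score(chunk1) else chunk1
    return result


# Hand-written lists standing in for forward and reverse matching output
seg0 = ["我", "一个", "人", "研究生", "命", "起源"]
seg1 = ["我", "一", "个人", "研究", "生命起源"]
combined = combine_by_offset(seg0, seg1, chunk_p)
print(combined)
```

With these counts the combined list takes 一个 / 人 from the first list and 研究 / 生命起源 from the second, so it differs from both inputs.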

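    def maxp_wordlist(word0, word1, dic):
        result_words = []
        if len(word0) == len(word1):
            # start:end marks the current run of positions where the lists differ
            end = 0
            start = end
            for i in range(0, len(word0)):
                if word0[i] == word1[i]:
                    if start != end:
                        # keep the more probable version of the differing run
                        p0 = sen_p(word0[start:end], dic)
                        p1 = sen_p(word1[start:end], dic)
                        if p0 > p1:
                            result_words = result_words + word0[start:end]
                        else:
                            result_words = result_words + word1[start:end]
                    result_words.append(word0[i])
                    start = i + 1
                    end = i + 1
                else:
                    end = end + 1
            if end == len(word0):
                # a differing run that reaches the end of the sentence
                p0 = sen_p(word0[start:end], dic)
                p1 = sen_p(word1[start:end], dic)
                if p0 > p1:
                    result_words = result_words + word0[start:end]
                else:
                    result_words = result_words + word1[start:end]
        else:
            # lists of different length cannot be aligned by index,
            # so fall back to comparing them as a whole
            result_words = good_words(word0, word1, dic)
        return result_words

Main function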

def main_seg():

maxl=0

dic,maxl=load_dic(maxl)

while(True):

sentence,slen=input_sentence(dic,maxl)

word0=forward_seg(dic,slen,sentence)

print_result(word0,pre='正向匹配')

print('\n')

word1=reverse_seg(dic,slen,sentence)

print_result(word1,pre='逆向匹配')

print('\n')

goodwords=good_words(word0,word1,dic)

print_result(goodwords,pre='双向匹配中概率最大的')

print('\n')

maxp_words=maxp_wordlist(word1,word0,dic)

print_result(maxp_words,pre='交叉组合后概率最大的')

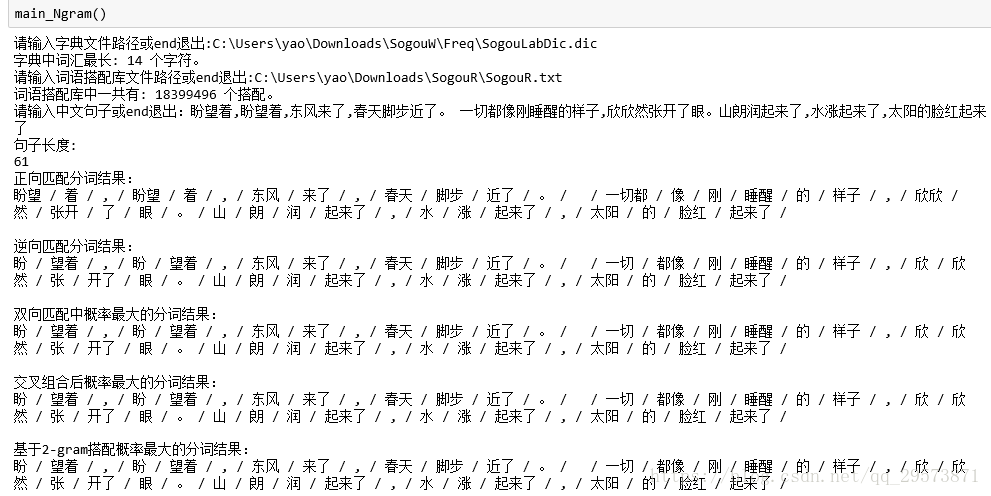

print('\n')测试

Dictionary path: C:\Users\yao\Downloads\SogouW\Freq\SogouLabDic.dic

Collocation library path: C:\Users\yao\Downloads\SogouR\SogouR.txt

Test samples:

* 明天应该也是个大晴天

* 我们的士兵同志想要下去吃饭

* 姑姑想过过儿过过的生活

* 长春市长春药店

* 无聊总是没人聊,寂寞全因太冷漠

* 我盼望着长夜漫漫,你却卧听海涛闲话

* 你爱我或不爱我,我都不爱你,管你是谁

* 盼望着,盼望着,东风来了,春天脚步近了。 一切都像刚睡醒的样子,欣欣然张开了眼。山朗润起来了,水涨起来了,太阳的脸红起来了。

    main_seg()  # start testing

Adding N-gram

Loading the word collocation library

It is hosted on the same website as the Sogou lexicon.

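    def load_gram():
        """Load a tab-separated collocation/frequency file into a dict."""
        while True:
            pathname = input('Enter the collocation library file path, or "end" to quit: ')
            if pathname == "end":
                sys.exit()
            try:
                # errors='ignore' skips bytes that cannot be decoded; reading this
                # file raised encoding errors, probably on unrecognized characters
                f = open(pathname, 'r', errors='ignore')
                break
            except OSError:
                print("Invalid path, please try again!")
        gram_dic = {}
        N = 0    # sum of all collocation frequencies
        num = 0  # number of collocations
        with f:
            for line in f:
                strList = line.rstrip("\n").split("\t", 2)
                if len(strList) < 2:
                    continue  # skip malformed lines
                gram_dic[strList[0]] = strList[1]
                N = N + int(gram_dic[strList[0]])
                num = num + 1
        # denominator for add-one smoothing: total frequency + number of entries
        gram_dic["total"] = N + num
        print("The collocation library contains", num, "collocations.")
        return gram_dic

Building the N-gram language model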

    def getNgram(words, n):
        """Return every run of n consecutive words, joined with '-'."""
        output = []
        for i in range(len(words) - n + 1):
            ngram = "-".join(words[i:i + n])
            output.append(ngram)
        return output

Computing probability from N-grams
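
For reference, a common textbook formulation of a 2-gram model conditions each word on the previous one, P(w_i | w_{i-1}), rather than scoring each pair on its own. The sketch below estimates it with add-one smoothing from a tiny made-up corpus; `corpus`, `cond_p` and `bigram_sen_p` are hypothetical names, and this is an alternative shown for comparison, not the method used by the code in this section.

```python
from collections import Counter

# Made-up, already segmented corpus for illustration only
corpus = [
    ["我", "爱", "北京"],
    ["我", "爱", "上海"],
    ["你", "爱", "北京"],
]

unigram = Counter(w for sent in corpus for w in sent)
bigram = Counter(
    (sent[i], sent[i + 1]) for sent in corpus for i in range(len(sent) - 1)
)
V = len(unigram)  # vocabulary size, used by add-one smoothing


def cond_p(prev, word):
    """Add-one smoothed estimate of P(word | prev)."""
    return (bigram[(prev, word)] + 1) / (unigram[prev] + V)


def bigram_sen_p(words):
    """P(w1) * P(w2 | w1) * ... for a non-empty word list."""
    total_tokens = sum(unigram.values())
    p = (unigram[words[0]] + 1) / (total_tokens + V)
    for prev, word in zip(words, words[1:]):
        p *= cond_p(prev, word)
    return p


print(cond_p("我", "爱"))
print(bigram_sen_p(["我", "爱", "北京"]))
print(bigram_sen_p(["北京", "爱", "我"]))
```

Because each factor depends on the preceding word, the same three words score differently in a different order, which a 1-gram product cannot express.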

    def Ngram_p(grams, gram_dic):
        """Product of add-one smoothed collocation probabilities."""
        pvalue = 1
        for gram in grams:
            if gram in gram_dic:
                p = (int(gram_dic[gram]) + 1) / gram_dic['total']
            else:
                p = 1 / gram_dic['total']
            pvalue = pvalue * p
        return pvalue

    def maxp_gram(word0, word1, gram_dic):
        """Return whichever word list has the higher 2-gram probability."""
        gram0 = getNgram(word0, 2)
        gram1 = getNgram(word1, 2)
        p0 = Ngram_p(gram0, gram_dic)
        p1 = Ngram_p(gram1, gram_dic)
        if p0 > p1:
            return word0
        else:
            return word1

    def main_Ngram():
        maxl = 0
        dic, maxl = load_dic(maxl)
        gram_dic = load_gram()
        while True:
            sentence, slen = input_sentence(dic, maxl)
            word0 = forward_seg(dic, slen, sentence)
            print_result(word0, pre='Forward matching')
            print('\n')
            word1 = reverse_seg(dic, slen, sentence)
            print_result(word1, pre='Reverse matching')
            print('\n')
            goodwords = good_words(word0, word1, dic)
            print_result(goodwords, pre='Most probable of the two matching results:')
            print('\n')
            maxp_words = maxp_wordlist(word0, word1, dic)
            print_result(maxp_words, pre='Most probable after cross combination:')
            print('\n')
            maxg_words = maxp_gram(word0, word1, gram_dic)
            print_result(maxg_words, pre='Most probable by 2-gram collocation:')

Afterword

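The author is someone whose enthusiasm for things tends to last about three minutes, but I hope I can keep exploring and learning Chinese word segmentation. There is still a great deal to learn, such as conditional random fields and deep-learning-based segmentation. Ganbatte!!!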