Hands-on: Kafka + Spark Streaming + Hive on CDH 5.7.1

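My current project needs to store data from a Kafka queue into Hive tables in real time. In previous jobs I used CDH 5.11 with a nearly up-to-date Spark (2.2), and I always wrote Scala. This time the requirements were Java and an older Spark, so I still ran into a few headaches while writing the program.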

Environment: CentOS 6.5, Spark 1.6.0, Kafka 0.9.x, Hive 1.1.0, ZooKeeper 3.4.5, all based on CDH 5.7.1.

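After reading this article you will know:

- How to consume Kafka data into Hive with the Direct approach and commit offsets to ZooKeeper, plus how a few Spark parameters are used

- How to consume Kafka data with the old Kafka high-level consumer API and commit offsets to ZooKeeper manually

- Caveats when using Hive JDBC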

##############################Item 1

The code below consumes data from Kafka with the Direct approach. Official docs: http://spark.apache.org/docs/1.6.0/streaming-kafka-integration.html
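The rate settings in the job below are easy to misread. `spark.streaming.kafka.maxRatePerPartition` caps records per second per partition, so with the job's 5-second batch interval each batch reads at most rate × 5 records from every partition. Here is a small sketch of that arithmetic. The class name `DirectRateMath` and the partition count used in the example are illustrative assumptions.

```java
/**
 * Back-of-envelope upper bounds for the Kafka Direct stream rate limit.
 * maxRatePerPartition caps records per second per partition, so one batch
 * reads at most maxRatePerPartition * batchSeconds records from each partition.
 */
final class DirectRateMath {
    private DirectRateMath() {}

    /** Upper bound on records read from one partition in one batch. */
    static long maxRecordsPerPartitionPerBatch(long maxRatePerPartition, long batchSeconds) {
        return maxRatePerPartition * batchSeconds;
    }

    /** Upper bound on records read in one batch across all partitions of the topic. */
    static long maxRecordsPerBatch(long maxRatePerPartition, long batchSeconds, int partitions) {
        return maxRecordsPerPartitionPerBatch(maxRatePerPartition, batchSeconds) * partitions;
    }
}
```

Take the job's values (rate 2, 5-second batches) and a hypothetical 3-partition topic: `maxRecordsPerBatch(2, 5, 3)` returns 30. Backpressure should only be able to lower this bound, not raise it.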

    import java.util.HashMap;
    import java.util.HashSet;
    import java.util.Map;
    import java.util.Set;
    import java.util.concurrent.atomic.AtomicReference;

    import org.I0Itec.zkclient.ZkClient;
    import org.I0Itec.zkclient.ZkConnection;
    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.sql.hive.HiveContext;
    import org.apache.spark.streaming.Durations;
    import org.apache.spark.streaming.api.java.JavaStreamingContext;
    import org.apache.spark.streaming.kafka.HasOffsetRanges;
    import org.apache.spark.streaming.kafka.KafkaUtils;
    import org.apache.spark.streaming.kafka.OffsetRange;
    import org.apache.zookeeper.ZooDefs;

    import kafka.common.TopicAndPartition;
    import kafka.serializer.StringDecoder;
    import kafka.utils.ZKGroupTopicDirs;
    import kafka.utils.ZkUtils;

    /**
     * Pulls Kafka data into a Hive table with the Direct approach. You can write with HiveQL,
     * or write the data files straight into the matching Hive directory and then run a
     * refresh statement such as
     * ALTER TABLE xxx ADD IF NOT EXISTS PARTITION (yue='2018-05',ri='2018-05-20')
     * Offsets are committed to ZooKeeper manually.
     */
    public class SparkStreamKafka2HiveDirect {

        public static void main(String[] args) throws InterruptedException {
            String topic = "";
            String group = "";
            SparkConf conf = new SparkConf().setAppName("save-queue-to-hive");
            // Backpressure ("peak shaving"): when batches pile up, pull fewer records per second
            conf.set("spark.streaming.backpressure.enabled", "true");
            // maxRetries already defaults to 1; these are the only two settings related to receiving data
            conf.set("spark.streaming.kafka.maxRetries", "1");
            // Pull at most (number of partitions * 2) records per second
            conf.set("spark.streaming.kafka.maxRatePerPartition", "2");
            JavaSparkContext jsc = new JavaSparkContext(conf);
            JavaStreamingContext jssc = new JavaStreamingContext(jsc, Durations.seconds(5));
            // If the HiveContext is initialized here, using it inside the operators below throws a
            // NullPointerException; it seems it is not fully initialized when used
            // (if anyone knows the real reason, please explain)
            // HiveContext hiveContext = new HiveContext(jsc);

            // Kafka parameters
            Map<String, String> kafkaParams = new HashMap<>();
            kafkaParams.put("metadata.broker.list", "");
            kafkaParams.put("group.id", group);
            // kafkaParams.put("auto.offset.reset", "smallest");
            Set<String> topicSet = new HashSet<>();
            topicSet.add(topic);
            // Plain assignment is not thread-safe here; AtomicReference updates the object
            // reference atomically without a lock
            final AtomicReference<OffsetRange[]> offsetRanges = new AtomicReference<>();

            // Read the consumer group's offsets from ZooKeeper
            ZKGroupTopicDirs zgt = new ZKGroupTopicDirs(group, topic);
            final String zkTopicPath = zgt.consumerOffsetDir();
            // System.out.println(zkTopicPath);
            // This path lives under /consumers at the ZooKeeper root!!!
            // Note: new ZkClient(String) uses Java serialization for node data, so these values
            // round-trip within this program but are not plain strings for Kafka's own tools
            ZkClient zkClient = new ZkClient("");
            int countChildren = zkClient.countChildren(zkTopicPath);
            Map<TopicAndPartition, Long> fromOffsets = new HashMap<>();
            if (countChildren > 0) {
                for (int i = 0; i < countChildren; i++) {
                    String path = zkTopicPath + "/" + i;
                    String offset = zkClient.readData(path);
                    TopicAndPartition topicAndPartition = new TopicAndPartition(topic, i);
                    fromOffsets.put(topicAndPartition, Long.parseLong(offset));
                }
                /**
                 * createDirectStream(JavaStreamingContext jssc, Class<K> keyClass,
                 *     Class<V> valueClass, Class<KD> keyDecoderClass,
                 *     Class<VD> valueDecoderClass, Class<R> recordClass,
                 *     Map<String, String> kafkaParams,
                 *     Map<TopicAndPartition, Long> fromOffsets,
                 *     Function<MessageAndMetadata<K, V>, R> messageHandler)
                 * Create an input stream that directly pulls messages from Kafka Brokers
                 * without using any receiver.
                 */
                // Thank goodness Java 8 has lambdas, otherwise people used to Scala could hardly survive~~~
                KafkaUtils.createDirectStream(jssc, String.class, String.class, StringDecoder.class, StringDecoder.class,
                        String.class, kafkaParams, fromOffsets, v -> v.message()).foreachRDD(rdd -> {
                    OffsetRange[] offsets = ((HasOffsetRanges) rdd.rdd()).offsetRanges();
                    offsetRanges.set(offsets);
                    // Business logic
                    HiveContext hiveContext = new HiveContext(jsc);
                    try {
                        // 1 - use hiveContext to run "insert into table" and insert the data into Hive
                        // 2 - or save the DataFrame in Hive's storage format under the Hive table directory
                        // Update ZooKeeper
                        ZkClient zkClient1 = new ZkClient("");
                        OffsetRange[] offsets1 = offsetRanges.get();
                        if (null != offsets1) {
                            ZkUtils zkUtils = new ZkUtils(zkClient1, new ZkConnection(""), false);
                            for (OffsetRange o : offsets1) {
                                String zkPath = zkTopicPath + "/" + o.partition();
                                // System.out.println(zkPath + o.untilOffset());
                                zkUtils.updatePersistentPath(zkPath, o.untilOffset() + "",
                                        ZooDefs.Ids.OPEN_ACL_UNSAFE);
                            }
                        }
                        // Close the client opened for this batch
                        zkClient1.close();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                });
            } else {
                KafkaUtils.createDirectStream(jssc, String.class, String.class, StringDecoder.class, StringDecoder.class,
                        kafkaParams, topicSet).foreachRDD(rdd -> {
                    if (!rdd.isEmpty()) {
                        OffsetRange[] offsets = ((HasOffsetRanges) rdd.rdd()).offsetRanges();
                        offsetRanges.set(offsets);
                        HiveContext hiveContext = new HiveContext(jsc);
                        try {
                            // Business logic goes here
                            // Update ZooKeeper
                            ZkClient zkClient1 = new ZkClient("");
                            OffsetRange[] offsets1 = offsetRanges.get();
                            if (null != offsets1) {
                                ZkUtils zkUtils = new ZkUtils(zkClient1, new ZkConnection(""), false);
                                for (OffsetRange o : offsets1) {
                                    String zkPath = zkTopicPath + "/" + o.partition();
                                    zkUtils.updatePersistentPath(zkPath, o.untilOffset() + "",
                                            ZooDefs.Ids.OPEN_ACL_UNSAFE);
                                }
                            }
                            // Close the client opened for this batch
                            zkClient1.close();
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                    }
                });
            }
            jssc.start();
            jssc.awaitTermination();
        }
    }

A note on the code above: committing offsets this way through the Kafka API writes them under ZooKeeper '/consumers/xxx', but our project needs them under '/kafka/xxx'. That seems impossible without modifying the source. I downloaded the source and the path appears to be hard-coded, so this approach was dropped.
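About the HiveContext comment in the code above: building a new HiveContext in every batch is expensive. The Spark 1.6 Streaming guide ("DataFrame and SQL Operations") instead shows a lazily instantiated singleton for SQLContext, created on first use inside foreachRDD and reused afterwards. Below is a generic sketch of that pattern. `LazySingleton` is a hypothetical helper, and whether it also avoids the NullPointerException described above is untested.

```java
import java.util.function.Supplier;

/**
 * Lazily initialized singleton: the factory runs once, on the first get(),
 * and every later call returns the same instance.
 */
final class LazySingleton<T> {
    private final Supplier<T> factory;
    private volatile T instance; // volatile makes the double-checked locking below safe

    LazySingleton(Supplier<T> factory) {
        this.factory = factory;
    }

    T get() {
        T result = instance;
        if (result == null) {
            synchronized (this) {
                result = instance;
                if (result == null) {
                    result = factory.get();
                    instance = result;
                }
            }
        }
        return result;
    }
}
```

In the job, one could create `LazySingleton<HiveContext> hive = new LazySingleton<>(() -> new HiveContext(jsc));` before the stream and call `hive.get()` inside foreachRDD. That way only the first batch pays the construction cost.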

This is how it looks in the Kafka source; the very first constant declares the root path:

    object ZkUtils {
      val ConsumersPath = "/consumers"
      val BrokerIdsPath = "/brokers/ids"
      val BrokerTopicsPath = "/brokers/topics"
      val ControllerPath = "/controller"
      val ControllerEpochPath = "/controller_epoch"
      val ReassignPartitionsPath = "/admin/reassign_partitions"
      val DeleteTopicsPath = "/admin/delete_topics"
      val PreferredReplicaLeaderElectionPath = "/admin/preferred_replica_election"
      val BrokerSequenceIdPath = "/brokers/seqid"
      val IsrChangeNotificationPath = "/isr_change_notification"
      val EntityConfigPath = "/config"
      val EntityConfigChangesPath = "/config/changes"
      // ...
    }

So I tried Kafka's own consumer API. Like Spark's Receiver-based approach, it commits offsets under the path given at the end of the ZooKeeper connect string, e.g. xxxx:2181,xxxx:2181,xxxx:2181/kafka, so they no longer land under the root-level /consumers path shown above.
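To make the path rule concrete: the old ZooKeeper-based consumer stores each partition's offset at /consumers/<group>/offsets/<topic>/<partition>, and ZooKeeper resolves that path under the chroot suffix of the connect string, if there is one. Here is a small sketch of that resolution. `ZkOffsetPath` is a hypothetical helper, and it only handles a single chroot suffix at the end of the connect string.

```java
/**
 * Resolves where the ZooKeeper-based consumer keeps one partition's offset,
 * taking the optional chroot suffix of zookeeper.connect into account.
 */
final class ZkOffsetPath {
    private ZkOffsetPath() {}

    /** Chroot part of a connect string such as "h1:2181,h2:2181/kafka", or "" if there is none. */
    static String chroot(String zkConnect) {
        int slash = zkConnect.indexOf('/');
        if (slash < 0) {
            return "";
        }
        String root = zkConnect.substring(slash);
        // A bare trailing "/" means the ZooKeeper root, i.e. no chroot
        return "/".equals(root) ? "" : root;
    }

    /** Absolute ZooKeeper path of one partition's committed offset. */
    static String offsetPath(String zkConnect, String group, String topic, int partition) {
        return chroot(zkConnect) + "/consumers/" + group + "/offsets/" + topic + "/" + partition;
    }
}
```

For example, `offsetPath("xxxx:2181,xxxx:2181,xxxx:2181/kafka", "g", "t", 0)` gives /kafka/consumers/g/offsets/t/0. By the same mechanism, a ZkClient opened with such a chroot in its connect string would presumably relocate the first program's paths too, but that is an untested assumption.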

##############################Item 2

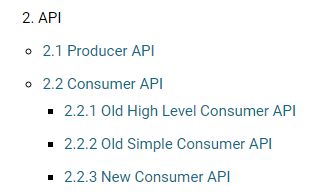

Kafka 0.9.x offers three consumer APIs, and I only tried the first one: http://kafka.apache.org/090/documentation.html
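The program below follows a collect, flush, then commit pattern. Offsets are committed only after a full batch has been written, so a crash between the write and the commit replays that batch. That gives at-least-once delivery, with possible duplicates. Here is a stripped-down sketch with the Kafka and HDFS parts replaced by callbacks. `BatchThenCommit` and its parameters are hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

final class BatchThenCommit {
    private BatchThenCommit() {}

    /**
     * Reads messages, hands every full batch to writeBatch, then calls commitOffsets.
     * A trailing partial batch is flushed when the iterator is exhausted.
     * writeBatch must copy the list if it keeps it, because the list is reused.
     */
    static void run(Iterator<String> messages, int batchSize,
                    Consumer<List<String>> writeBatch, Runnable commitOffsets) {
        List<String> batch = new ArrayList<>(batchSize);
        while (messages.hasNext()) {
            batch.add(messages.next());
            if (batch.size() >= batchSize) {
                flush(batch, writeBatch, commitOffsets);
            }
        }
        if (!batch.isEmpty()) {
            flush(batch, writeBatch, commitOffsets);
        }
    }

    private static void flush(List<String> batch, Consumer<List<String>> writeBatch, Runnable commitOffsets) {
        writeBatch.accept(batch); // e.g. append every record to the HDFS files
        commitOffsets.run();      // only after a successful write, e.g. ConsumerConnector.commitOffsets()
        batch.clear();
    }
}
```

The sketch flushes a trailing partial batch when its iterator ends. Kafka's `ConsumerIterator` behaves differently: by default it blocks in hasNext(), so in the real program a partial batch waits until enough new messages arrive.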

    import com.alibaba.fastjson.JSONObject;
    import kafka.consumer.Consumer;
    import kafka.consumer.ConsumerConfig;
    import kafka.consumer.ConsumerIterator;
    import kafka.consumer.KafkaStream;
    import kafka.javaapi.consumer.ConsumerConnector;
    import org.apache.hadoop.fs.FSDataOutputStream;

    import java.io.IOException;
    import java.io.UnsupportedEncodingException;
    import java.nio.charset.StandardCharsets;
    import java.time.LocalDate;
    import java.util.*;

    public class Save2Hive {

        public static void main(String[] args) throws IOException {
            ConsumerConnector consum = new Save2Hive().createConsumer();
            Map<String, Integer> topicCountMap = new HashMap<>();
            topicCountMap.put(Configs.Glob_KafkaTopic_save, 1);
            Map<String, List<KafkaStream<byte[], byte[]>>> messageStream = consum.createMessageStreams(topicCountMap);
            KafkaStream<byte[], byte[]> stream = messageStream.get(Configs.Glob_KafkaTopic_save).get(0);
            ConsumerIterator<byte[], byte[]> it = stream.iterator();
            // A list holding the message values. An array would probably use fewer resources,
            // but would mean more code
            List<String> list = new ArrayList<>(3000);
            HdfsFileUtil fileUtil = new HdfsFileUtil();
            String tableName1 = "source_feature_hive";
            String tableName2 = "source_detection_vehicle_all";
            while (it.hasNext()) {
                if (list.size() < 3000) {
                    list.add(new String(it.next().message(), StandardCharsets.UTF_8));
                } else {
                    String ri = LocalDate.now().toString();
                    String yue = ri.substring(0, 7);
                    // getOut wraps HDFS append: it appends to a file directly inside the Hive table's
                    // data directory, partitioned by month (yue) and day (ri)
                    FSDataOutputStream out1 = fileUtil.getOut(yue, ri, tableName1);
                    FSDataOutputStream out2 = fileUtil.getOut(yue, ri, tableName2);
                    list.forEach(x -> {
                        try {
                            out1.write("\n".getBytes("UTF-8"));
                            out1.hflush();
                            out2.write("\n".getBytes("UTF-8"));
                            out2.hflush();
                        } catch (UnsupportedEncodingException e) {
                            // LogSet.WriteLog("Error", "4", "06", "600", false, e,"encodingException");
                        } catch (IOException e) {
                            // LogSet.WriteLog("Error", "4", "06", "600", false, e,"kafka2HDFS failed");
                        }
                    });
                    list.clear();
                    if (null != out1 && null != out2) {
                        try {
                            out1.close();
                            out2.close();
                            // Commit offsets only after both files were written and closed
                            consum.commitOffsets();
                            // log: write succeeded
                        } catch (Exception e) {
                            // LogSet.WriteLog("Error", "4", "06", "600", false, e,"FSoutput close fail");
                        } finally {
                            //TODO
                        }
                    }
                }
            }
        }

        private ConsumerConnector createConsumer() {
            Properties properties = new Properties();
            properties.put("zookeeper.connect", Configs.Glob_KafkaZkQuorum); // ZooKeeper quorum
            properties.put("group.id", Configs.SaveHive_KafkaConsumerGroup); // consumer group
            properties.put("rebalance.max.retries", "5");
            properties.put("refresh.leader.backoff.ms", "10000");
            properties.put("zookeeper.session.timeout.ms", "40000");
            // Offsets are committed manually with commitOffsets()
            properties.put("auto.commit.enable", "false");
            // properties.put("auto.offset.reset", "smallest");
            return Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));
        }
    }

The code above still has one fatal flaw (yes, fatal): it can only be deployed on a single node, because HDFS does not let several writers append to the same file at the same time. Improvement: deploy it on three machines with three config files, each configuring a different file name for its own machine.
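One way to implement the one-file-name-per-machine fix is to give every instance its own file inside the same Hive partition directory. Then no two processes ever append to the same HDFS file. Here is a sketch of such a naming scheme. It assumes Hive's default `yue=.../ri=...` partition directory layout and a `part-<host>.txt` file name. The table directory and the file name pattern are hypothetical, since the original HdfsFileUtil is not shown.

```java
import java.time.LocalDate;

/**
 * Builds a per-host file path inside a Hive partition directory so that
 * several consumer instances never append to the same HDFS file.
 */
final class PartitionFilePath {
    private PartitionFilePath() {}

    /** Month partition value, same as ri.substring(0, 7) in the program: "2018-05-20" -> "2018-05". */
    static String yue(LocalDate day) {
        return day.toString().substring(0, 7);
    }

    /** e.g. <tableDir>/yue=2018-05/ri=2018-05-20/part-node1.txt */
    static String path(String tableDir, LocalDate day, String host) {
        String ri = day.toString(); // ISO format yyyy-MM-dd
        return tableDir + "/yue=" + yue(day) + "/ri=" + ri + "/part-" + host + ".txt";
    }
}
```

One alternative to a per-machine config file would be to take the host from `InetAddress.getLocalHost().getHostName()`. Either way, the new partition still has to be registered, e.g. with the ALTER TABLE ... ADD IF NOT EXISTS PARTITION statement from the first program's comment.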

##############################Item 3

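This approach was superseded by the improved version of the second approach. To overcome the second approach's single-node limitation, my first idea was to write the data to local disk and then load it into the Hive table through Hive JDBC with 'load data local inpath'. Here is the code, which does NOT work: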

getStream方法返回一个stmt,然后在main函数里执行execute()load数据import java.io.File;

import java.io.FileWriter;

import java.io.IOException;

import java.io.UnsupportedEncodingException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.sql.Statement;

import java.time.LocalDate;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import com.alibaba.fastjson.JSONObject;

import kafka.consumer.Consumer;

import kafka.consumer.ConsumerConfig;

import kafka.consumer.ConsumerIterator;

import kafka.consumer.KafkaStream;

import kafka.javaapi.consumer.ConsumerConnector;

import ..kafkaConsumerAPI.Configs;

/**

* 写数据到临时表 source_feature_hive 和 source_detection_vehicle_all

*/

public class SaveHiveService {

public static void main(String[] args) {

ConsumerConnector consum = new SaveHiveService().createConsumer();

Map topicCountMap = new HashMap();

topicCountMap.put(Configs.Glob_KafkaTopic_save, 1);

Map>> messageStream = consum.createMessageStreams(topicCountMap);

KafkaStream stream = messageStream.get(Configs.Glob_KafkaTopic_save).get(0);

ConsumerIterator it = stream.iterator();

// 定义一个list存放message的value

ArrayList list = new ArrayList();

String tableName1 = "";

String tableName10 = "";

String tableName2 = "";

while (it.hasNext()) {

if (list.size() < Integer.parseInt(Configs.Save_SyncBatchCount)) {

list.add(new String(it.next().message()));

} else {

String ri = LocalDate.now().toString();

String yue = ri.substring(0, 7);

FileWriter stream1 = getStream(tableName1);

FileWriter stream2 = getStream(tableName2);

list.forEach(x -> {

try {

JSONObject value = JSONObject.parseObject(x);

stream1.write("" + "\n");

stream1.flush();

} catch (UnsupportedEncodingException e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "encodingException");

} catch (IOException e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "kafka2local failed");

}

});

if (null != stream1 && null != stream2) {

try {

stream1.close();

stream2.close();

} catch (Exception e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "关闭流失败");

}

}

try {

SaveHiveService.getStmt()

.execute("load data local inpath '/h/" + tableName1 + ".txt'"

+ " into table " + tableName10 + " partition(yue='" + yue + "',ri='" + ri + "')");

SaveHiveService.getStmt()

.execute("load data local inpath '/home/dispatch/hivedata/" + tableName2 + ".txt'"

+ " into table " + tableName2 + " partition(yue='" + yue + "',ri='" + ri + "')");

consum.commitOffsets();

// LogSet.WriteLog("info", "4", "06", "600", true, "data write success");

list.clear();

} catch (Exception e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "hiveSql failed");

}

}

}

}

private static Statement getStmt() {

String driverName = "org.apache.hive.jdbc.HiveDriver";

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "hiveJDBC反射失败");

}

Connection con = null;

try {

con = DriverManager.getConnection(Configs.HiveUrl, Configs.HiveUserName, Configs.HiveUserPwd);

} catch (SQLException e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "hiveJDBC创建连接失败");

}

Statement stmt = null;

try {

stmt = con.createStatement();

} catch (SQLException e) {

// LogSet.WriteLog("Error", "4", "06", "600", false, e, "con.createStatement失败");

}

return stmt;

}

private static FileWriter getStream(String tableName) {

FileWriter fw = null;

File f = null;

try {

f = new File("/h/" + tableName + ".txt");

if (!f.exists())

f.createNewFile();

fw = new FileWriter("/h/" + tableName + ".txt");

} catch (IOException e) {

// LogSet.WriteLog("error", "4", "06", "600", false, e, "FileWriter 创建流失败");

}

return fw;

}

private ConsumerConnector createConsumer() {

Properties properties = new Properties();

properties.put("zookeeper.connect", Configs.Glob_KafkaZkQuorum);// 声明zk

properties.put("group.id", Configs.SaveHive_KafkaConsumerGroup);// 指定消费组

properties.put("rebalance.max.retries", "5");

properties.put("refresh.leader.backoff.ms", "10000");

properties.put("zookeeper.session.timeout.ms", "40000");

properties.put("auto.commit.enable", "false");

properties.put("auto.offset.reset", "smallest");

return Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));

}

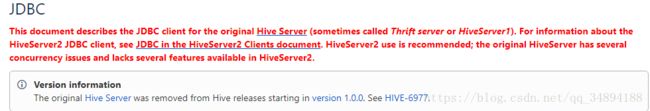

} 是不是以为没问题?实际跑起来会找不到 local path 报'can not find file:/xxx'纳尼?明明文件已经写在本地了呀?而且在hive命令行是可以load成功的,这就奇了怪了,跑去看官网:https://cwiki.apache.org/confluence/display/Hive/HiveClient#HiveClient-JDBC 以下三条注释摘自官网

    // load data into table
    // NOTE: filepath has to be local to the hive server
    // NOTE: /tmp/a.txt is a ctrl-A separated file with two fields per line

Emmm... wtf... the file path has to be local to the Hive server!!!! OK, this approach is out too!!!
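The distinction behind that NOTE works like this. With LOCAL, Hive reads the path from the local filesystem of the machine that executes the statement, which over JDBC is the HiveServer2 host, not the client. Without LOCAL, the path refers to HDFS (or the default filesystem), and Hive moves the file into the table's directory. Here is a sketch that builds both forms. `LoadDataSql` is a hypothetical helper, and it does no quoting or escaping of its inputs.

```java
/** Builds a Hive LOAD DATA statement for a (yue, ri) partition. */
final class LoadDataSql {
    private LoadDataSql() {}

    /**
     * local = true:  path is on the local disk of the HiveServer2 host.
     * local = false: path is on HDFS; Hive moves the file into the table directory.
     */
    static String build(boolean local, String path, String table, String yue, String ri) {
        return "LOAD DATA " + (local ? "LOCAL " : "") + "INPATH '" + path + "'"
                + " INTO TABLE " + table
                + " PARTITION (yue='" + yue + "', ri='" + ri + "')";
    }
}
```

This suggests an alternative to the failed attempt, though it is untested here: upload the local file to HDFS first, then run the non-LOCAL form.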

OK, that's it for writing Kafka data into Hive.

Addendum, from the Hive website:

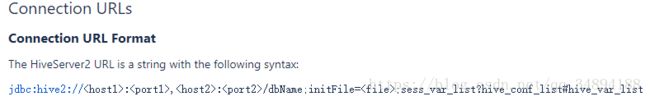

I'm on Hive 1.1.0, so I used the HiveServer2 (hive2) client: https://cwiki.apache.org/confluence/display/Hive/HiveServer2+Clients#HiveServer2Clients-JDBC
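For the HiveServer2 client, the JDBC URL takes the form jdbc:hive2://<host>:<port>/<database>, and the driver class is org.apache.hive.jdbc.HiveDriver, the same class loaded in getStmt above. The sketch below also closes the connection and statement with try-with-resources, which getStmt leaves to the caller. `Hive2Jdbc` is a hypothetical helper. Host, port and database are placeholders; 10000 is HiveServer2's default port.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;

/** Minimal HiveServer2 JDBC helper. */
final class Hive2Jdbc {
    private Hive2Jdbc() {}

    /** e.g. jdbc:hive2://hs2host:10000/default */
    static String url(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    /** Runs the statements in order on one connection, then closes statement and connection. */
    static void executeAll(String url, String user, String password, String... sqls)
            throws SQLException, ClassNotFoundException {
        Class.forName("org.apache.hive.jdbc.HiveDriver"); // needs hive-jdbc on the classpath
        try (Connection con = DriverManager.getConnection(url, user, password);
             Statement stmt = con.createStatement()) {
            for (String sql : sqls) {
                stmt.execute(sql);
            }
        }
    }
}
```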

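A week later I found another bug in this program. Our cluster once had a communication failure between a DataNode and the NameNode, and closing the stream while the file had too few replicas threw a close exception. My idea for a fix: before closing the stream, call the FileSystem's getFileStatus() to check the replica count, and if it is insufficient, wait 1 second.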
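A bounded version of that check-then-wait idea is sketched below, with the HDFS check left abstract as a `BooleanSupplier`. `WaitUntil` is a hypothetical helper. One caveat is worth checking: `FileStatus.getReplication()` returns the file's configured replication factor rather than the number of live replicas. Counting the hosts returned by `FileSystem.getFileBlockLocations(...)` is therefore likely closer to the check described.

```java
import java.util.function.BooleanSupplier;

/** Bounded poll-and-sleep helper, so a missing replica cannot block the consumer forever. */
final class WaitUntil {
    private WaitUntil() {}

    /**
     * Evaluates condition up to maxAttempts times, sleeping sleepMillis between attempts.
     * Returns true as soon as the condition holds, false if it never did or if interrupted.
     */
    static boolean waitUntil(BooleanSupplier condition, int maxAttempts, long sleepMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(sleepMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // keep the interrupt status for the caller
                    return false;
                }
            }
        }
        return false;
    }
}
```

In the job this would become something like `WaitUntil.waitUntil(() -> replicasOk(path), 10, 1000)` before `out.close()`, where `replicasOk` is a hypothetical method wrapping the HDFS check.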