BP神经网络python代码实现

首先直接上代码,后面有时间再把代码里面的详解写出来,

import numpy as np

import random

class neru_bp():

def __init__(self, learning_rate = .2, n_iterations = 4000):

self.learning_rate = learning_rate

self.n_iterations = n_iterations

self.bp_gredient=[]

self.train_weights=[]; # 训练权重

self.train_weights_grad=[];# 权重梯度

self.train_bias=[]; # 训练偏置

self.train_bias_grad=[]; # 偏置梯度

self.feedforward_a=[]; # 前向传播得到的激活值的中间输出

self.error_term=[]; # 残差

self.w_gredient=[]

self.predict_a=[]; # 预测中间值, 用于单个样本的预测输出

self.train_labels=[]#1Xmini_batch_size

#self.learning_rate; # 反向传播学习率

self.lambda1=0.0 # 过拟合参数

self.mini_batch_size=10; # 批量梯度下降的一个批次数量

self.iteration_size=3000; # 迭代次数

self.architecture=[64,100,10]; # 神经网络的结构(4, 4, 1) 表示有一个input layer(4个神经元, 和输入数据的维度一致),

def init_value(self):

for i in range(len(self.architecture)):

if i>=1:

w=np.random.rand(self.architecture[i],self.architecture[i-1])*0.01

self.train_weights.append(w)

wg=np.zeros([self.architecture[i],self.architecture[i-1]])

self.train_weights_grad.append(wg)

b=np.random.rand(self.architecture[i],1)

self.train_bias.append(b)

bg=np.zeros([self.architecture[i],1])

self.train_bias_grad.append(bg)

a=np.zeros([self.architecture[i],self.mini_batch_size])

self.feedforward_a.append(a)#这个和bais,bais_gredient以及下面的变量都是从第二层才开始有的!!!!!

e=np.zeros([self.architecture[i],self.mini_batch_size])

self.error_term.append(e)

self.bp_gredient.append(e)

pa=np.zeros([self.architecture[i],1])

self.predict_a.append(pa)

def get_batch_bias(self,bias):

n=bias.shape[0]

res_bias=np.zeros([n,self.mini_batch_size])

for i in range(self.mini_batch_size):

res_bias[:,i]=bias.reshape(n,)

return res_bias

def sigmoid(self,z):

return 1/(1+np.exp(-z))

def feedforward(self,X):#不包括输入层的x

for i in range(len(self.feedforward_a)):

#print(i)

if i==0:

z=np.dot(self.train_weights[i],X)+self.get_batch_bias(self.train_bias[i])

z=z.reshape(z.shape[0],self.mini_batch_size)

self.feedforward_a[i]=self.sigmoid(z)

else:

self.feedforward_a[i]=self.sigmoid(np.dot(self.train_weights[i],self.feedforward_a[i-1])+self.get_batch_bias(self.train_bias[i]))

return self.feedforward_a

def sig_bg(self,z):

return self.sigmoid(z)*(1-self.sigmoid(z))

def back_forward(self,X,train_labels):

train_labels=train_labels.reshape(train_labels.shape[0],self.mini_batch_size)

X=X.reshape(X.shape[0],self.mini_batch_size)

bp_gredient=[]

bk_len=list(range(len(self.architecture)))

bk_len.reverse()

bk_len.pop()#第一层是x,不是z,

self.feedforward(X)

for i in bk_len:

if i==len(self.architecture)-1:

#er1=-(self.feedforward_a[i-1]-train_labels)*self.sig_bg(np.dot(self.train_weights[i-1],self.feedforward_a[i-2]))

er1=-(-self.feedforward_a[i-1]+train_labels)*self.sig_bg(self.feedforward_a[i-1])

#self.bp_gredient.append(er1)

self.bp_gredient[i-1]=er1

#bp_mean=np.mean(self.bp_gredient[i-1],axis=1)

w_g=np.dot(self.bp_gredient[i-1],self.feedforward_a[i-2].T)/self.mini_batch_size

b_g=np.mean(self.bp_gredient[i-1],axis=1)

# self.train_weights_grad.append(w_g)

# self.train_bias_grad.append(b_g)

b_g=np.reshape(b_g,newshape=self.train_bias_grad[i-1].shape)#!!!!!!!!

self.train_weights_grad[i-1]=w_g

self.train_bias_grad[i-1]=b_g

elif i==1:

#print(self.bp_gredient[i-1].T)

#er1=(np.dot(self.bp_gredient[i].T,self.train_weights[i])).T*self.sig_bg(np.dot(self.train_weights[i-1],X))

er1=(np.dot(self.bp_gredient[i].T,self.train_weights[i])).T*self.sig_bg(self.feedforward_a[i-1])

self.bp_gredient[i-1]=er1

bp_mean=np.mean(self.bp_gredient[i-1],axis=1)

w_g=np.dot(self.bp_gredient[i-1],X.T)/self.mini_batch_size

b_g=np.mean(self.bp_gredient[i-1],axis=1)

# self.train_weights_grad.append(w_g)

# self.train_bias_grad.append(b_g)

self.train_weights_grad[i-1]=w_g

b_g=np.reshape(b_g,newshape=self.train_bias_grad[i-1].shape)#!!!!!!!!

self.train_bias_grad[i-1]=b_g

else:

#er1=(np.dot(self.bp_gredient[i].T,self.train_weights[i])).T*self.sig_bg(np.dot(self.train_weights[i-1],self.feedforward_a[i-2]))

er1=(np.dot(self.bp_gredient[i].T,self.train_weights[i])).T*self.sig_bg(self.feedforward_a[i-1])

self.bp_gredient[i-1]=er1

w_g=np.dot(self.bp_gredient[i-1],self.feedforward_a[i-2].T)/self.mini_batch_size

# self.train_weights_grad.append(w_g)

# self.train_bias_grad.append(b_g)

b_g=np.mean(self.bp_gredient[i-1],axis=1)

b_g=np.reshape(b_g,newshape=self.train_bias_grad[i-1].shape)

self.train_weights_grad[i-1]=w_g

self.train_bias_grad[i-1]=b_g

#self.bp_gredient.clear()

def get_minibatch(self,X,y):

m=X.shape[1]

list1=list(range(m))

sample_num=random.sample(list1,self.mini_batch_size)

#sample_num=np.random.randint(m)

#print("sample is:",sample_num)

return X[:,sample_num],y[:,sample_num]

def predict(self,X):#对单个样本预测

n=X.shape[0]

X=X.reshape([n,1])

for i in range(len(self.predict_a)):

if i==0:

#print(np.dot(self.train_weights[i],X))

z=np.dot(self.train_weights[i],X)+self.train_bias[i]

#print(self.train_bias[i])

self.predict_a[i]=self.sigmoid(z)

else:

self.predict_a[i]=self.sigmoid(np.dot(self.train_weights[i],self.predict_a[i-1])+self.train_bias[i])

return self.predict_a[len(self.predict_a)-1]

def loss(self,X,y,pred_y):

m=y.shape[1]

loss_squre=0

loss_regular=0

for i in range(m):

loss_squre+=np.dot((y-pred_y).T,(y-pred_y))

loss_squre=loss_squre/m

for j in range(len(self.train_weights)):

temp_w=self.train_weights[j]

loss_regular+=np.sum(temp_w*temp_w)

loss_regular*=self.lambda1/2

return loss_squre+loss_regular

def evaluate(self,test_X,test_y,onehot):

print(test_X.shape)

cnt=0

for i in range(test_X.shape[1]):

pred_y=self.predict(test_X[:,i])

#print("pred_y",pred_y)

#print("真实结果与预测结果",np.argmax(test_y[:,i],axis=0),np.argmax(pred_y,axis=0)[0])

if(onehot):

if np.argmax(test_y[:,i],axis=0)==np.argmax(pred_y,axis=0)[0]:

#print("真实结果与预测结果",np.argmax(test_y[:,i],axis=0),np.argmax(pred_y,axis=0)[0])

cnt+=1

else:

if(pred_y[0,0]==test_y[0,i]):

cnt+=1

return cnt/test_y.shape[1]

def train(self,X,y):

for i in range(self.iteration_size):

X_batch,y_batch=self.get_minibatch(X,y)

self.back_forward(X_batch,y_batch)

for j in range(len(self.train_weights)):

self.train_weights[j]=self.train_weights[j]-self.learning_rate*(self.train_weights_grad[j]+self.lambda1*self.train_weights[j])

self.train_bias[j]-=self.learning_rate*self.train_bias_grad[j]

#for i in range(len(self.train_weights)):

#print("train_weights %d"%i)

#print(self.train_weights[i])

#print("train_bias %d"%i)

#print(self.train_bias[i])

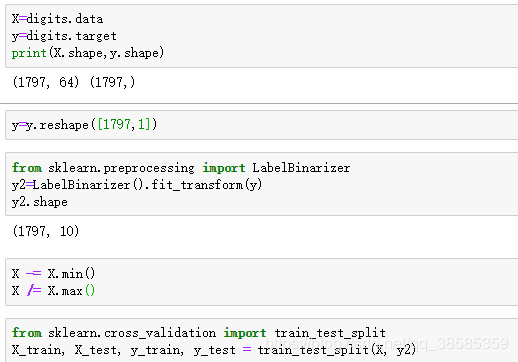

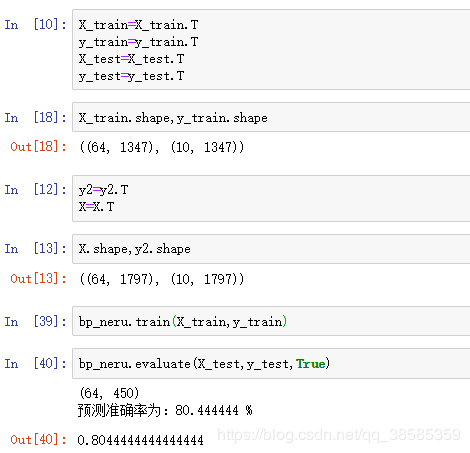

接下来利用MINIST的数字手写图片数据进行训练及预测:

准确度达到了80%,在开始准确度很低,后来检查代码发现是里面的一些公式表示出了问题,花了很久进行完善,后面写代码还是要仔细一些。