TensorFlow Basics (2) -- Nonlinear Regression

Nonlinear regression

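```python
# Written for the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
# Under TensorFlow 2.x, replace the first import with:
#   import tensorflow.compat.v1 as tf
#   tf.disable_v2_behavior()
import tensorflow as tf
import numpy as np
```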

- Generate data containing random noise

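```python
# Generate 200 evenly spaced x values in [-1, 1] and y = x^2 plus Gaussian noise (mean 0, std 0.02)
x_data = np.linspace(-1.0, 1.0, 200)
noise = np.random.normal(0, 0.02, 200)
y_data = x_data ** 2 + noise

print(x_data[0:10], '\n', y_data[0:10])
```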

```
[-1. -0.98994975 -0.9798995 -0.96984925 -0.95979899 -0.94974874
 -0.93969849 -0.92964824 -0.91959799 -0.90954774]
 [0.98826083 1.00405787 0.93467031 0.93196639 0.9507247 0.89131818
 0.8945671 0.90021127 0.83955296 0.82885712]
```
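The printed x values step by about 0.01005. `np.linspace` includes both endpoints, so 200 points span 199 intervals and the spacing is 2/199. The NumPy check below confirms that spacing. The fixed seed via `np.random.default_rng(0)` is an assumption added here. The original code is unseeded, so its y values change on every run.

```python
import numpy as np

# linspace includes both endpoints: 200 points -> 199 intervals of width 2/199
x_data = np.linspace(-1.0, 1.0, 200)
step = 2.0 / 199
print(step)         # about 0.01005
print(x_data[1])    # -1 + 2/199, about -0.98995 (matches the output above)

# Assumption: a fixed seed, so the noise is reproducible (the original is unseeded)
rng = np.random.default_rng(0)
noise = rng.normal(0, 0.02, 200)
y_data = x_data ** 2 + noise
print(noise.std())  # close to the requested standard deviation 0.02
```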

- Reshape x and y into column vectors

```python
# Reshape x and y from shape (200,) into column vectors of shape (200, 1)
x_data = np.reshape(x_data, (200, 1))
y_data = np.reshape(y_data, (200, 1))
print(x_data.shape, y_data.shape)
```

```
(200, 1) (200, 1)
```
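The reshape matters because the placeholders are declared with shape `[None, 1]`. Feeding a 1-D array of shape (200,) into them fails TensorFlow's shape check, and `tf.matmul` expects rank-2 operands. The plain NumPy sketch below (no TensorFlow) shows three things:

- the shape before and after the reshape;
- two equivalent alternatives to `np.reshape`;
- the (200, 1) @ (1, 8) product that the first layer computes.

```python
import numpy as np

x = np.linspace(-1.0, 1.0, 200)
print(x.shape)                  # (200,): 1-D, no column dimension

# Three equivalent ways to get a (200, 1) column vector
a = np.reshape(x, (200, 1))     # as in the post
b = x.reshape(-1, 1)            # -1 lets NumPy infer the number of rows
c = x[:, np.newaxis]            # insert a new axis of length 1

# A column vector can be matrix-multiplied by a (1, 8) weight matrix
w = np.ones((1, 8))
print((a @ w).shape)            # (200, 8)
```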

- Build a three-layer neural network

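The network structure is [1, 8, 1]: one input unit, a hidden layer of 8 units, and one output unit.

Between the input layer (1) and the hidden layer (8): weights w.shape = (1, 8), bias b.shape = (1, 8).

Between the hidden layer (8) and the output layer (1): weights w.shape = (8, 1), bias b.shape = (1, 1).

Each bias has a single row, so when it is added to the matrix product it is broadcast across every sample in the batch.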
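To see these shapes in action, here is a sketch of the same forward pass written in NumPy rather than TensorFlow, with randomly drawn parameters. The seed and the names `W1`, `b1`, `W2`, `b2` are illustrative assumptions. The sketch confirms that the (1, 8) bias broadcasts over all 200 rows.

```python
import numpy as np

rng = np.random.default_rng(0)                    # assumption: fixed seed
x = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)    # (200, 1)

# Parameter shapes from the [1, 8, 1] layout described above
W1 = rng.normal(size=(1, 8))
b1 = rng.normal(size=(1, 8))
W2 = rng.normal(size=(8, 1))
b2 = rng.normal(size=(1, 1))

z1 = x @ W1 + b1      # (200, 1) @ (1, 8) -> (200, 8); b1 broadcasts over the 200 rows
h1 = np.tanh(z1)      # hidden activations, (200, 8)
z2 = h1 @ W2 + b2     # (200, 8) @ (8, 1) -> (200, 1); b2 (1, 1) broadcasts too
y_hat = np.tanh(z2)   # predictions, (200, 1); tanh keeps them between -1 and 1
print(z1.shape, y_hat.shape)
```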

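```python
# Placeholders for a batch of inputs and targets; None lets the batch size vary
x = tf.placeholder(tf.float32, [None, 1])
y = tf.placeholder(tf.float32, [None, 1])

# Hidden layer: 1 -> 8 units, tanh activation
L1_weight = tf.Variable(tf.random_normal([1, 8]))
L1_bias = tf.Variable(tf.random_normal([1, 8]))
L1_z = tf.matmul(x, L1_weight) + L1_bias
L1_out = tf.nn.tanh(L1_z)

# Output layer: 8 -> 1 unit; tanh keeps outputs in (-1, 1), which roughly covers the targets
L2_weight = tf.Variable(tf.random_normal([8, 1]))
L2_bias = tf.Variable(tf.random_normal([1, 1]))
L2_z = tf.matmul(L1_out, L2_weight) + L2_bias
L2_out = tf.nn.tanh(L2_z)

# Mean squared error loss, minimized by gradient descent with learning rate 0.1
loss = tf.reduce_mean(tf.square(y - L2_out))
train = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()
```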
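The graph above relies on TensorFlow's automatic differentiation. To make concrete what `minimize(loss)` does at each step, here is a NumPy-only sketch of the same [1, 8, 1] tanh network trained with full-batch gradient descent (learning rate 0.1, 1000 steps). The gradients of the mean squared error are written out by hand through backpropagation. The seed and the helper names `forward` and `loss_and_grads` are illustrative assumptions. This sketches the math, not TensorFlow's internals.

```python
import numpy as np

def forward(params, x):
    W1, b1, W2, b2 = params
    h1 = np.tanh(x @ W1 + b1)        # hidden layer, (N, 8)
    y_hat = np.tanh(h1 @ W2 + b2)    # output layer, (N, 1)
    return h1, y_hat

def loss_and_grads(params, x, y):
    """Mean squared error and its gradients via hand-written backprop."""
    W2 = params[2]
    h1, y_hat = forward(params, x)
    n = y.size                                # reduce_mean averages over all N*1 elements
    loss = np.mean((y - y_hat) ** 2)
    d_yhat = 2.0 * (y_hat - y) / n            # dL/dy_hat
    d_z2 = d_yhat * (1.0 - y_hat ** 2)        # tanh'(z) = 1 - tanh(z)^2
    dW2 = h1.T @ d_z2                         # (8, 1)
    db2 = d_z2.sum(axis=0, keepdims=True)     # (1, 1)
    d_z1 = (d_z2 @ W2.T) * (1.0 - h1 ** 2)    # (N, 8)
    dW1 = x.T @ d_z1                          # (1, 8)
    db1 = d_z1.sum(axis=0, keepdims=True)     # (1, 8)
    return loss, [dW1, db1, dW2, db2]

rng = np.random.default_rng(0)                # assumption: fixed seed
x_data = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)
y_data = x_data ** 2 + rng.normal(0, 0.02, x_data.shape)

# Same initialisation as tf.random_normal's defaults (mean 0, stddev 1)
params = [rng.normal(size=(1, 8)), rng.normal(size=(1, 8)),
          rng.normal(size=(8, 1)), rng.normal(size=(1, 1))]

learning_rate = 0.1
losses = []
for step in range(1000):
    loss, grads = loss_and_grads(params, x_data, y_data)
    losses.append(loss)
    # Plain gradient descent: p <- p - lr * dL/dp
    params = [p - learning_rate * g for p, g in zip(params, grads)]

_, prediction_value = forward(params, x_data)
print(losses[0], losses[-1])
```

Each step uses all 200 points, mirroring the original training loop, which feeds the full `x_data` and `y_data` on every call to `train`.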

import matplotlib.pyplot as plt

with tf.Session() as sess:

sess.run(init)

for _ in range(1000):

sess.run(train,feed_dict={x:x_data,y:y_data})

prediction_value = sess.run(L2_out,feed_dict={x:x_data})

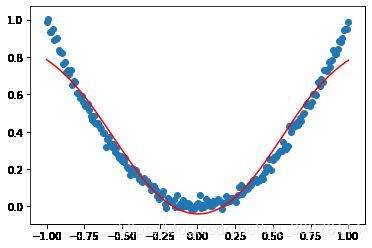

plt.figure()

plt.scatter(x_data,y_data)

plt.plot(x_data,prediction_value,'r-')

plt.show()
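The data were generated as y = x² plus Gaussian noise with standard deviation 0.02. Even a model that recovers x² exactly would therefore have a mean squared error of about 0.02² = 0.0004, the noise variance. One way to see that reference level is to fit the known functional form directly. The sketch below uses `np.polyfit` for a degree-2 least-squares fit. This is a different technique from the neural network, included only as a point of comparison for the network's loss. The seed is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)              # assumption: fixed seed
x = np.linspace(-1.0, 1.0, 200)
y = x ** 2 + rng.normal(0, 0.02, 200)

# Least-squares fit of y ~ c2*x**2 + c1*x + c0 (coefficients listed highest power first)
coeffs = np.polyfit(x, y, 2)
mse = np.mean((np.polyval(coeffs, x) - y) ** 2)
print(coeffs)   # close to [1, 0, 0]
print(mse)      # close to the noise variance 0.02**2 = 0.0004
```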